Unmanned aerial vehicle staged autonomous landing method based on visual information fusion

Relates to visual information and drone technology, and is applied in non-electric variable control, instruments, and control/regulation systems. The method addresses several problems: the safety of the drone in the terminal phase of landing on the unmanned boat needs to be improved, the drone has difficulty maintaining stability, and the camera can lose sight of the landmark. It simplifies computational complexity, lowers the technical threshold, and avoids lag.


Embodiment

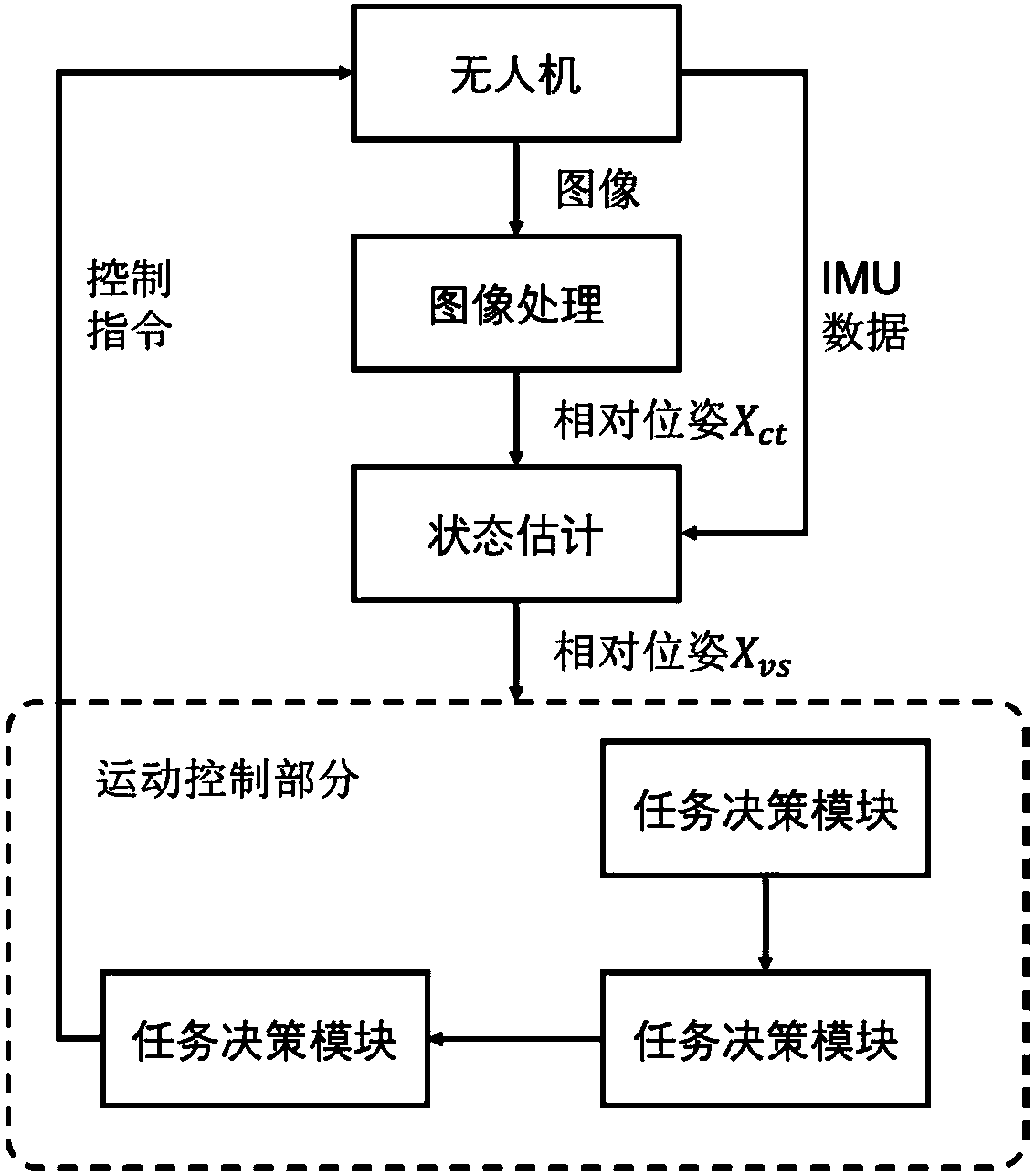

[0063] The technical solution of the present invention is described in detail below with reference to Figure 3.

[0064] For convenience of explanation, the following notation is adopted:

[0065] $x_{ij}$ denotes the pose of reference frame $j$ expressed in reference frame $i$, where a pose is defined as the 6-dimensional column vector $[x\ y\ z\ \phi\ \theta\ \psi]^T$: $(x, y, z)$ are the position coordinates in the reference frame, and $(\phi, \theta, \psi)$ are the rotation angles about the x-, y-, and z-axes, called the roll, pitch, and yaw angles, respectively. The reference frames used are: the drone frame $\{v\}$, the local frame $\{l\}$, the unmanned boat frame $\{s\}$, the camera frame $\{c\}$, and the landmark frame $\{t\}$. Some basic reference-frame transformation operators are also defined: if $i$, $j$, $k$ denote three reference frames, the symbol $\oplus$ denotes the compounding (accumulation) of transformations, satisfying $x_{ik} = x_{ij} \oplus x_{jk}$; the symbol $\ominus$ denotes the inverse operat...
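The compounding and inverse operators in [0065] can be illustrated with a short Python sketch. It is not the patent's implementation: it assumes the common Z-Y-X convention $R = R_z(\psi) R_y(\theta) R_x(\phi)$ for the roll/pitch/yaw angles, assumes that $\oplus$ and $\ominus$ act as standard rigid-body pose compounding and inversion, and uses hypothetical function names (`compound`, `inverse`, `rot_from_rpy`, `rpy_from_rot`).

```python
import math
from typing import List, Sequence, Tuple

Pose = List[float]            # [x, y, z, phi, theta, psi]
Mat3 = List[List[float]]


def rot_from_rpy(phi: float, theta: float, psi: float) -> Mat3:
    """Rotation matrix R = Rz(psi) Ry(theta) Rx(phi) (assumed Z-Y-X convention)."""
    cf, sf = math.cos(phi), math.sin(phi)
    ct, st = math.cos(theta), math.sin(theta)
    cp, sp = math.cos(psi), math.sin(psi)
    return [
        [cp * ct, cp * st * sf - sp * cf, cp * st * cf + sp * sf],
        [sp * ct, sp * st * sf + cp * cf, sp * st * cf - cp * sf],
        [-st, ct * sf, ct * cf],
    ]


def rpy_from_rot(r: Mat3) -> Tuple[float, float, float]:
    """Recover (phi, theta, psi) from R; undefined at theta = +/-90 deg (gimbal lock)."""
    phi = math.atan2(r[2][1], r[2][2])
    theta = math.atan2(-r[2][0], math.hypot(r[2][1], r[2][2]))
    psi = math.atan2(r[1][0], r[0][0])
    return phi, theta, psi


def mat_mul(a: Mat3, b: Mat3) -> Mat3:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def mat_vec(a: Mat3, v: Sequence[float]) -> List[float]:
    return [sum(a[i][k] * v[k] for k in range(3)) for i in range(3)]


def transpose(a: Mat3) -> Mat3:
    return [[a[j][i] for j in range(3)] for i in range(3)]


def compound(x_ij: Sequence[float], x_jk: Sequence[float]) -> Pose:
    """x_ik = x_ij (+) x_jk: pose of frame k expressed in frame i."""
    r_ij = rot_from_rpy(*x_ij[3:6])
    r_jk = rot_from_rpy(*x_jk[3:6])
    # Position: p_ik = p_ij + R_ij p_jk
    p = mat_vec(r_ij, x_jk[0:3])
    pos = [x_ij[n] + p[n] for n in range(3)]
    # Orientation: R_ik = R_ij R_jk, converted back to roll/pitch/yaw
    return pos + list(rpy_from_rot(mat_mul(r_ij, r_jk)))


def inverse(x_ij: Sequence[float]) -> Pose:
    """x_ji = (-) x_ij: pose of frame i expressed in frame j."""
    r_ji = transpose(rot_from_rpy(*x_ij[3:6]))   # R_ji = R_ij^T
    p = mat_vec(r_ji, x_ij[0:3])                 # p_ji = -R_ij^T p_ij
    return [-c for c in p] + list(rpy_from_rot(r_ji))
```

For example, given a hypothetical camera mounting pose `x_vc` (camera in the drone frame) and a landmark pose `x_ct` measured by the camera, `compound(x_vc, x_ct)` yields `x_vt`, the landmark pose in the drone frame, and `inverse(x_vt)` yields `x_tv`. Composing through rotation matrices rather than adding angles keeps the result correct for arbitrary orientations, except near the pitch singularity noted in `rpy_from_rot`.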


© 2025 PatSnap. All rights reserved.