Three-dimensional gesture recognition method based on depth image and interaction system

A three-dimensional gesture recognition technology, applied in character and pattern recognition, input/output for user/computer interaction, graphic reading, etc. It addresses the limited degrees of freedom, poor generalization, complexity, and low accuracy of existing gesture recognition, and achieves the effect of enriching uses, overcoming limitations, and high recognition accuracy.


Embodiment 1

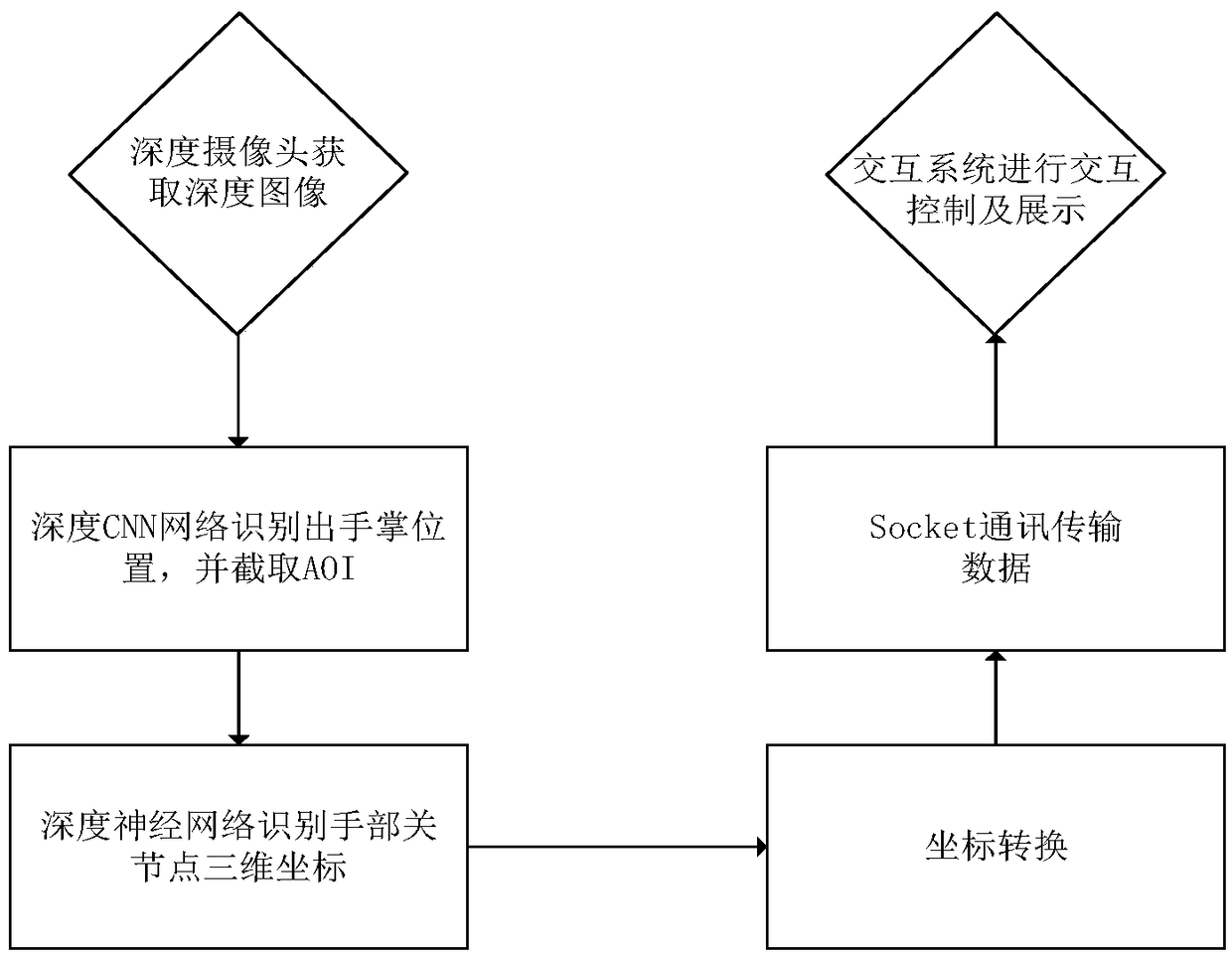

[0033] To address the low accuracy of gesture recognition, the diversity of template matching methods, and recognition results that are limited and cannot be modified by the user, the present invention is based on the third method, that is, training a deep neural network for gesture recognition. A deep convolutional neural network first detects the position of the hand. ResNet and an autoencoder then produce the final three-dimensional coordinates of each joint point of the palm. Finally, the gesture interaction system restores the entire palm and performs the corresponding interactive actions, achieving better three-dimensional gesture recognition and interaction.
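The data flow of the stages described above (hand detection, then joint regression) can be sketched as follows. This is only an illustrative sketch under stated assumptions, not the patented implementation: the CNN hand detector is replaced by a simple nearest-depth threshold, the ResNet and autoencoder stage is a placeholder that returns zeros, and the names `detect_hand_box`, `crop_and_normalize`, `estimate_joints`, the 150 mm depth band, and the 21-joint count are all hypothetical choices that do not come from the source.

```python
import numpy as np


def detect_hand_box(depth, band_mm=150.0):
    """Stand-in for the CNN hand detector (assumption, not the patent's method).

    Returns (top, left, bottom, right) of the pixels lying within band_mm
    of the nearest valid depth reading.
    """
    valid = depth > 0  # a depth of 0 is treated as "no reading" (assumption)
    if not valid.any():
        raise ValueError("depth map has no valid pixels")
    nearest = depth[valid].min()
    mask = valid & (depth <= nearest + band_mm)
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    return int(rows[0]), int(cols[0]), int(rows[-1]) + 1, int(cols[-1]) + 1


def crop_and_normalize(depth, box, band_mm=150.0):
    """Crop the hand region and map its depths to the range [0, 1]."""
    top, left, bottom, right = box
    patch = depth[top:bottom, left:right].astype(np.float32)
    valid = patch > 0
    nearest = patch[valid].min()
    norm = np.clip((patch - nearest) / band_mm, 0.0, 1.0)
    norm[~valid] = 1.0  # invalid pixels are pushed to the far end of the band
    return norm


def estimate_joints(patch, num_joints=21):
    """Placeholder for the ResNet + autoencoder stage.

    A trained network would regress one (x, y, z) triple per joint from the
    patch; this stub only returns an array of the right shape.
    """
    return np.zeros((num_joints, 3), dtype=np.float32)


# Synthetic depth frame in millimetres: flat background with a nearer square "hand".
depth = np.full((120, 160), 1000.0, dtype=np.float32)
depth[40:80, 60:100] = 400.0

box = detect_hand_box(depth)
patch = crop_and_normalize(depth, box)
joints = estimate_joints(patch)
print(box, patch.shape, joints.shape)
```

In a real system the two stand-in functions would be replaced by trained networks, and the resulting joint coordinates would be passed to the interaction system to rebuild the palm.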

[0034] As shown in Figure 1, the gesture recognition method and its interaction system based on the depth information map provided in this embodiment include the following steps:

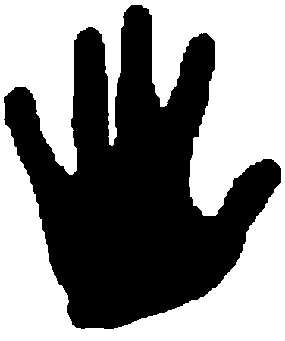

[0035] 1) Use a depth sensor to obtain the depth information map;

[0036] Th...
