Robot 3D vision hand-eye calibration method

A hand-eye calibration method for robot 3D vision, applied in the field of robot 3D vision hand-eye calibration. It addresses the problems that existing approaches have difficulty meeting accuracy requirements or place very high demands on physical accuracy, and it improves calibration accuracy so that those requirements are met.

Embodiment Construction

[0033] In order to describe the present invention more specifically, the technical solutions of the present invention will be described in detail below in conjunction with the accompanying drawings and specific embodiments.

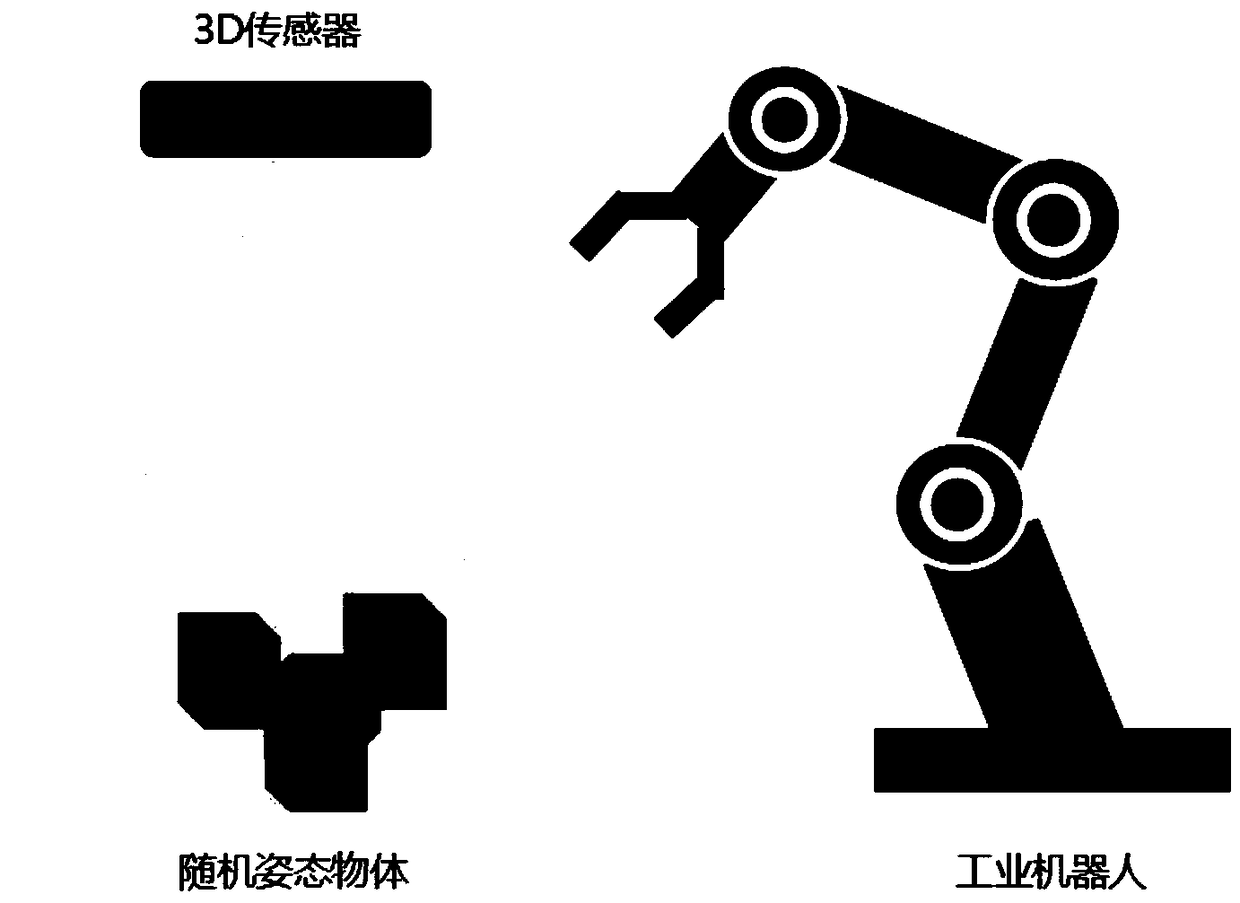

[0034] As shown in Figure 1, this embodiment takes a 3D structured light sensor and a six-degree-of-freedom industrial robot as an example to illustrate the robot 3D vision hand-eye calibration method and, based on that calibration, the specific implementation of converting the position and attitude of a workpiece in the 3D sensor coordinate system into its position and attitude in the robot base coordinate system.
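The conversion of a workpiece pose from the sensor frame to the robot base frame can be illustrated with a minimal sketch. It assumes the hand-eye calibration has already produced a fixed transform from the sensor frame to the robot base frame (the sensor being stationary while the calibration plate rides on the flange), and that poses are given as a translation plus Z-Y-X Euler angles in radians. The function names `pose_to_matrix`, `matmul4`, and `sensor_pose_to_base`, the Euler convention, and the numeric values are illustrative assumptions and do not come from the patent text.

```python
import math


def pose_to_matrix(x, y, z, roll, pitch, yaw):
    """Build a 4x4 homogeneous transform from a translation and Euler angles.

    Assumed convention (not from the patent): R = Rz(yaw) * Ry(pitch) * Rx(roll),
    angles in radians.
    """
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr, x],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr, y],
        [-sp, cp * sr, cp * cr, z],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul4(a, b):
    """Multiply two 4x4 matrices stored as nested lists."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
        for i in range(4)
    ]


def sensor_pose_to_base(T_base_sensor, T_sensor_workpiece):
    """Express a workpiece pose measured by the sensor in the robot base frame.

    T_base_sensor:      sensor frame relative to the robot base (assumed to be
                        the hand-eye calibration result).
    T_sensor_workpiece: workpiece pose as measured in the sensor frame.
    """
    return matmul4(T_base_sensor, T_sensor_workpiece)


# Hypothetical values: sensor 1.0 m in front of the base, 0.5 m up, rotated
# 90 degrees about Z; workpiece 0.2 m along the sensor X axis.
T_base_sensor = pose_to_matrix(1.0, 0.0, 0.5, 0.0, 0.0, math.pi / 2)
T_sensor_workpiece = pose_to_matrix(0.2, 0.0, 0.0, 0.0, 0.0, 0.0)
T_base_workpiece = sensor_pose_to_base(T_base_sensor, T_sensor_workpiece)
print([round(row[3], 6) for row in T_base_workpiece[:3]])
```

With these hypothetical inputs the sensor X axis maps onto the base Y axis, so the workpiece offset of 0.2 m appears along base Y. Chaining homogeneous transforms this way keeps rotation and translation in one matrix product, which is why the calibration result can be applied to every later measurement without further bookkeeping.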

[0035] The steps of the robot 3D vision hand-eye calibration method in this embodiment are as follows:

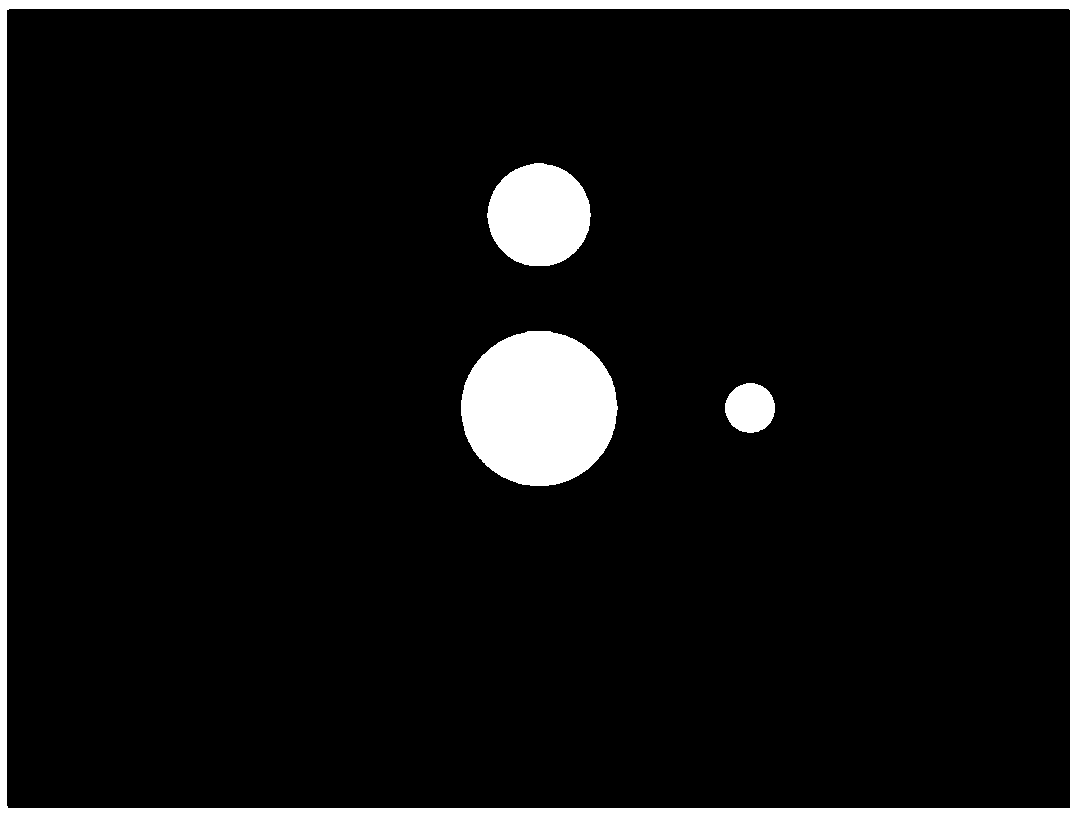

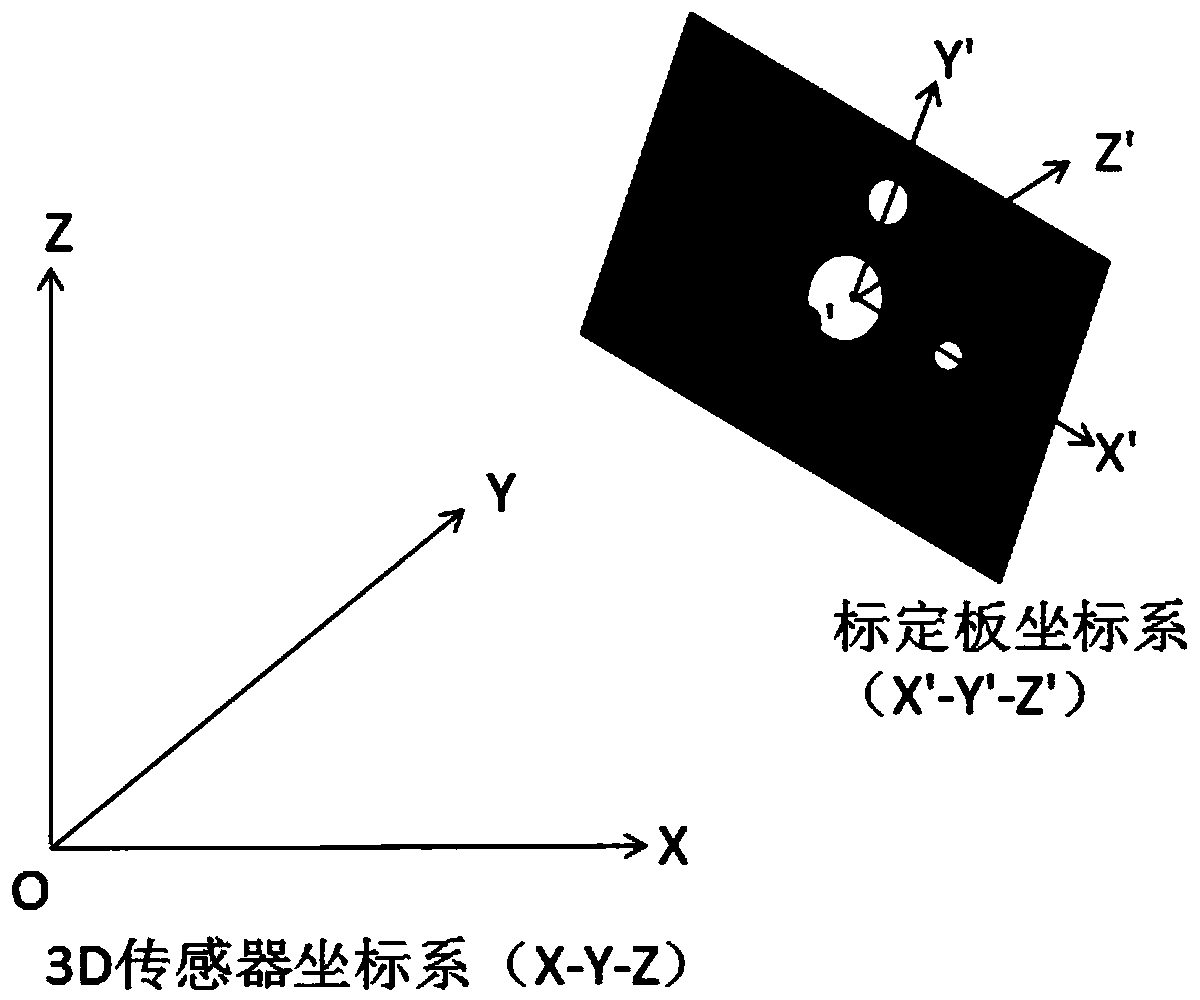

[0036] Step S1: Install the calibration plate shown in Figure 2 on the robot flange, move the end of the robot actuator to change the position and attitude of the flange, and obtain the attitude of multipl...
