Vegetable and background segmentation method based on inertial measurement unit and visual information

A technology combining an inertial measurement unit with visual information, applied in the field of image processing. It addresses problems such as cumbersome operation and the need for extensive manual annotation, and achieves high precision across a wide range of usage scenarios.

Examples

Embodiment 1

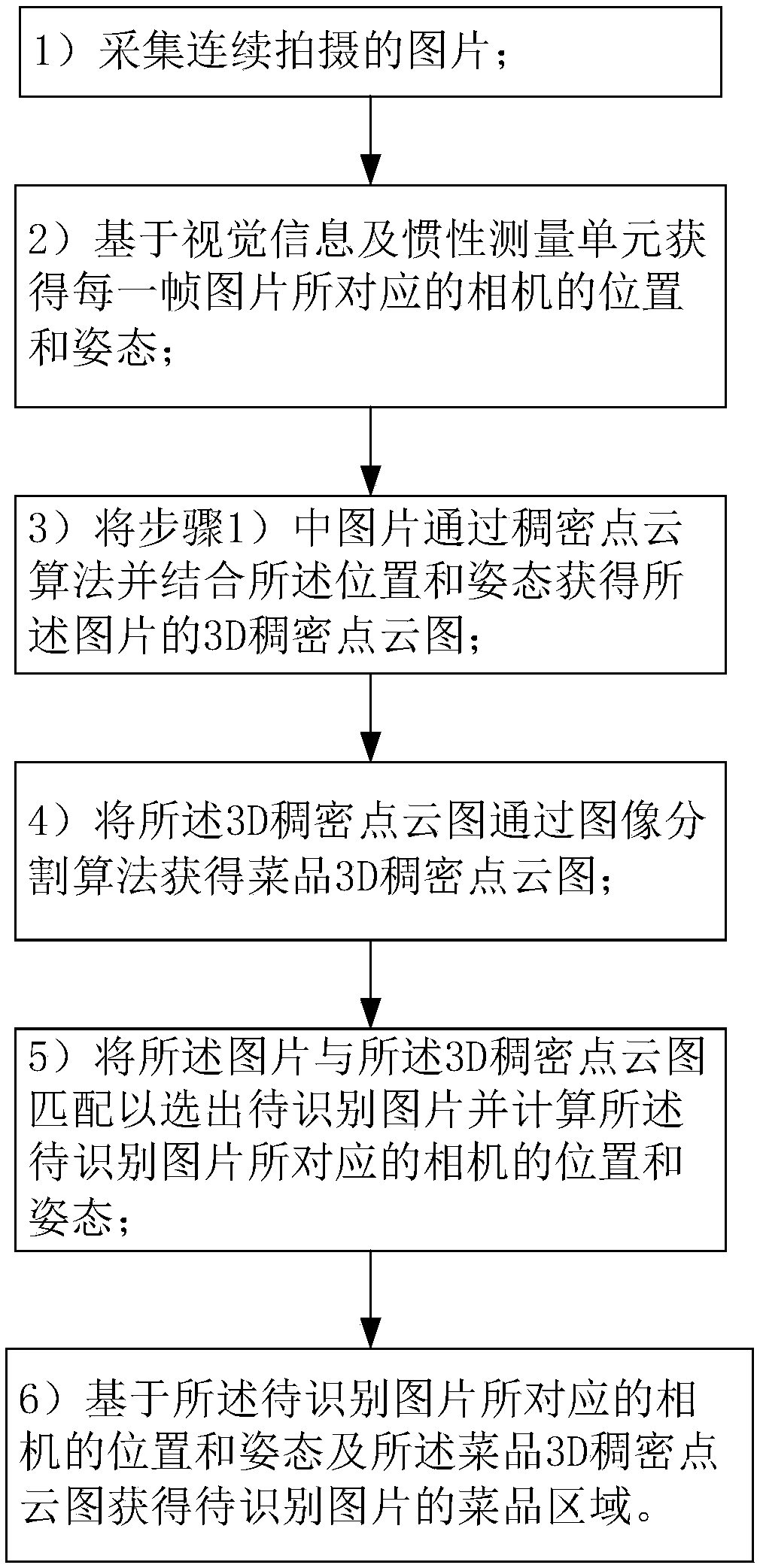

[0040] 1) Capture a continuous sequence of images: the user moves around the dish through 90 degrees along the longitude direction while photographing it, with a total capture time of 10 seconds.

[0041] 2) Obtain the camera position and attitude (pose) for each frame using visual-inertial odometry, which combines filtering-based and optimization-based methods.

[0042] 3) Process the images from step 1) with a dense point cloud algorithm, using the poses from step 2), to obtain a 3D dense point cloud of the captured scene.

[0043] 4) Segment the 3D dense point cloud with a segmentation algorithm that uses height as the distinguishing feature, yielding the 3D dense point cloud of the dish.

[0044] 5) Match the images against the 3D dense point cloud to select the image to be recognized and obtain the position and attitude of...
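Step 1 fixes only the sweep angle (90 degrees) and the capture time (10 seconds). As a rough sizing aid, the sketch below derives the implied angular speed and the angular spacing between frames. The 30 fps frame rate and the function name are assumptions, not figures from the text.

```python
def capture_budget(sweep_deg: float, duration_s: float, fps: float) -> dict:
    """Return angular speed, frame count, and angular spacing between frames.

    Only the 90-degree sweep and 10-second duration come from step 1;
    the frame rate passed in is an assumption.
    """
    frames = int(round(duration_s * fps))
    return {
        "deg_per_s": sweep_deg / duration_s,   # average angular speed of the sweep
        "frames": frames,                      # number of captured frames
        "deg_per_frame": sweep_deg / frames,   # viewpoint change between frames
    }


budget = capture_budget(sweep_deg=90.0, duration_s=10.0, fps=30.0)  # 30 fps is assumed
print(budget)  # {'deg_per_s': 9.0, 'frames': 300, 'deg_per_frame': 0.3}
```

At the assumed frame rate, consecutive frames differ by well under one degree, so neighbouring views overlap heavily, which is what both odometry and dense reconstruction rely on.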
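Step 2 states that the visual-inertial odometry combines filtering and optimization but gives no further detail. The sketch below illustrates only the filtering half, as a generic one-dimensional Kalman filter in which IMU accelerations drive the prediction and sparse visual position fixes drive the correction. The function name, noise values, and the 1D simplification are all assumptions; a real VIO estimates the full 6-DoF pose and IMU biases, and typically refines a window of states by nonlinear optimization.

```python
import numpy as np


def kalman_vio_1d(accels, visual_pos, dt, accel_var=0.1, meas_var=0.01):
    """Fuse IMU accelerations (prediction) with visual position fixes (update).

    accels[k]: IMU acceleration at step k; visual_pos[k]: visual position or None.
    Returns an array of filtered [position, velocity] states, one row per step.
    """
    F = np.array([[1.0, dt], [0.0, 1.0]])   # constant-velocity state transition
    B = np.array([0.5 * dt**2, dt])          # how acceleration enters the state
    H = np.array([[1.0, 0.0]])               # vision observes position only
    Q = accel_var * np.outer(B, B)           # process noise induced by IMU noise
    R = np.array([[meas_var]])               # visual measurement noise
    x = np.zeros(2)
    P = np.eye(2)
    out = []
    for a, z in zip(accels, visual_pos):
        # Predict: propagate the state with the IMU measurement.
        x = F @ x + B * a
        P = F @ P @ F.T + Q
        # Update: correct accumulated drift with a visual fix when one exists.
        if z is not None:
            y = z - H @ x                    # innovation, shape (1,)
            S = H @ P @ H.T + R              # innovation covariance, shape (1, 1)
            K = P @ H.T @ np.linalg.inv(S)   # Kalman gain, shape (2, 1)
            x = x + K @ y
            P = (np.eye(2) - K @ H) @ P
        out.append(x.copy())
    return np.array(out)


# Usage: motion at a constant 1 m/s^2, an IMU with a +0.2 m/s^2 bias,
# and a visual position fix on every 5th frame (all values hypothetical).
dt, n = 0.1, 100
t = dt * np.arange(1, n + 1)
true_pos = 0.5 * t**2
accels = np.full(n, 1.2)
visual = [true_pos[k] if k % 5 == 4 else None for k in range(n)]
est = kalman_vio_1d(accels, visual, dt)
imu_only = 0.5 * 1.2 * t**2                  # pure IMU integration for comparison
print(abs(est[-1, 0] - true_pos[-1]), abs(imu_only[-1] - true_pos[-1]))
```

Even though this filter does not model the IMU bias, the periodic visual fixes keep its position error bounded, whereas IMU-only integration drifts quadratically with time.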
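Step 3 does not name the dense point cloud algorithm. One common ingredient, shown here under stated assumptions, is lifting per-frame depth maps into a shared world frame using the step 2 poses. The depth maps, the pinhole intrinsics `K`, the camera-to-world pose convention, and the function names are hypothetical; a full pipeline would also have to estimate the depth (e.g. by multi-view stereo) and merge duplicate points rather than simply concatenating them.

```python
import numpy as np


def backproject_depth(depth, K, R_wc, t_wc):
    """Lift a depth map (H x W, metres; 0 = invalid) to world-frame 3D points.

    K: 3x3 pinhole intrinsics. R_wc, t_wc: assumed camera-to-world pose,
    i.e. X_world = R_wc @ X_cam + t_wc.
    """
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))                  # pixel grid
    valid = depth > 0
    z = depth[valid]
    pix = np.stack([u[valid], v[valid], np.ones_like(z)], axis=0)  # 3 x N homogeneous
    cam = np.linalg.inv(K) @ pix * z                                 # rays scaled by depth
    world = R_wc @ cam + t_wc.reshape(3, 1)                          # camera -> world
    return world.T                                                   # N x 3


def fuse_frames(depths, K, poses):
    """Concatenate back-projected points from all frames into one cloud."""
    clouds = [backproject_depth(d, K, R, t) for d, (R, t) in zip(depths, poses)]
    return np.vstack(clouds)


# Usage with a toy 2x2 depth map and two poses that differ by 1 m along z.
K = np.array([[1.0, 0.0, 0.5], [0.0, 1.0, 0.5], [0.0, 0.0, 1.0]])
depth = np.full((2, 2), 2.0)
pts = backproject_depth(depth, K, np.eye(3), np.zeros(3))
cloud = fuse_frames(
    [depth, depth], K,
    [(np.eye(3), np.zeros(3)), (np.eye(3), np.array([0.0, 0.0, 1.0]))],
)
print(pts)
```

Using the odometry poses directly means every frame's points land in the same metric world frame, so no image-to-image registration is needed at this stage.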
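Step 4 says only that the segmentation uses height as the distinguishing feature. A minimal sketch, assuming the dish rests on a flat surface that is the dominant plane in the cloud and that the world z-axis points up (plausible with VIO, since the IMU observes gravity, but an assumption here): fit the plane with RANSAC, measure each point's signed height above it, and keep the points above a threshold. The function names, the 5 mm inlier tolerance, and the 1 cm height threshold are illustrative choices.

```python
import numpy as np


def fit_plane_ransac(points, n_iters=200, inlier_tol=0.005, seed=0):
    """Fit the dominant plane n.x + d = 0 (assumed: the table) with RANSAC."""
    rng = np.random.default_rng(seed)
    best_count, best_plane = -1, None
    for _ in range(n_iters):
        # Hypothesize a plane from three random points.
        p0, p1, p2 = points[rng.choice(len(points), 3, replace=False)]
        normal = np.cross(p1 - p0, p2 - p0)
        norm = np.linalg.norm(normal)
        if norm < 1e-12:                      # skip degenerate (collinear) samples
            continue
        normal = normal / norm
        d = -(normal @ p0)
        # Score the hypothesis by how many points lie within the tolerance.
        count = int(np.sum(np.abs(points @ normal + d) < inlier_tol))
        if count > best_count:
            best_count, best_plane = count, (normal, d)
    return best_plane


def segment_by_height(points, min_height=0.01, up=(0.0, 0.0, 1.0)):
    """Return points above the support plane (the dish) and every point's height."""
    normal, d = fit_plane_ransac(points)
    if normal @ np.asarray(up) < 0:           # orient the plane normal upward
        normal, d = -normal, -d
    height = points @ normal + d              # signed distance above the plane
    return points[height > min_height], height


# Usage on a synthetic scene: a flat table at z = 0 plus a raised dish region.
rng = np.random.default_rng(1)
table = np.column_stack([rng.uniform(0, 0.3, 400), rng.uniform(0, 0.3, 400), np.zeros(400)])
dish = np.column_stack([rng.uniform(0.1, 0.2, 100), rng.uniform(0.1, 0.2, 100),
                        rng.uniform(0.02, 0.05, 100)])
scene = np.vstack([table, dish])
dish_pts, heights = segment_by_height(scene)
print(len(dish_pts))
```

Measuring height relative to the fitted plane, rather than using raw z values, keeps the split valid even if the table is not exactly level in the world frame.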
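Step 5 is truncated in the source, so the exact matching criterion is not known. As one hypothetical reading, the dish point cloud from step 4 can be projected into every frame with that frame's pose, the frame that sees the most dish points can be chosen as the image to recognize, and the projected pixels give a rough dish region separating it from the background. The criterion, the bounding-box output, the camera-to-world pose convention, and all names are assumptions.

```python
import numpy as np


def project_points(points_w, K, R_wc, t_wc, width, height):
    """Project world points into one camera and return pixels that fall inside the image.

    The pose is assumed camera-to-world (X_w = R_wc @ X_c + t_wc), so it is inverted here.
    """
    cam = (points_w - t_wc) @ R_wc            # row-wise R_wc.T @ (X_w - t_wc)
    cam = cam[cam[:, 2] > 0]                  # keep points in front of the camera
    pix = cam @ K.T                           # row-wise K @ X_c
    uv = pix[:, :2] / pix[:, 2:3]             # perspective division
    inside = (uv[:, 0] >= 0) & (uv[:, 0] < width) & (uv[:, 1] >= 0) & (uv[:, 1] < height)
    return uv[inside]


def select_frame(dish_points, K, poses, width, height):
    """Pick the frame in which the most dish points are visible (hypothetical criterion)."""
    counts = [len(project_points(dish_points, K, R, t, width, height)) for R, t in poses]
    best = int(np.argmax(counts))
    uv = project_points(dish_points, K, *poses[best], width, height)
    # Axis-aligned box around the projected dish: a crude dish/background split.
    box = (uv[:, 0].min(), uv[:, 1].min(), uv[:, 0].max(), uv[:, 1].max())
    return best, box, counts


# Usage: three candidate poses; only the first camera looks at the dish.
K = np.array([[100.0, 0.0, 50.0], [0.0, 100.0, 50.0], [0.0, 0.0, 1.0]])
dish_points = np.array([[0.0, 0.0, 0.0], [0.1, 0.0, 0.0], [0.0, 0.1, 0.0], [0.1, 0.1, 0.0]])
poses = [
    (np.eye(3), np.array([0.0, 0.0, -1.0])),  # 1 m in front of the dish
    (np.eye(3), np.array([0.0, 0.0, 1.0])),   # dish is behind this camera
    (np.eye(3), np.array([5.0, 0.0, -1.0])),  # dish projects outside the image
]
best, box, counts = select_frame(dish_points, K, poses, width=100, height=100)
print(best, box, counts)
```

Because the dish cloud and the camera poses share one world frame, this selection needs no 2D feature matching; a production version would also guard against frames in which no dish point is visible.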

Embodiment 2

[0047] 1) Capture a continuous sequence of images: the user moves around the dish through 90 degrees along the longitude direction while photographing it, with a total capture time of 10 seconds.

[0048] 2) Obtain the camera position and attitude (pose) for each frame using visual-inertial odometry, which combines filtering-based and optimization-based methods.

[0049] 3) Process the images from step 1) with a dense point cloud algorithm, using the poses from step 2), to obtain a 3D dense point cloud of the captured scene.

[0050] 4) Segment the 3D dense point cloud with a segmentation algorithm that uses height as the distinguishing feature, yielding the 3D dense point cloud of the dish.

[0051] 5) Match the images against the 3D dense point cloud to select the image to be recognized and obtain the position and attitude of th...
