A method for estimating three-dimensional human body posture based on a video stream

The method relates to human posture estimation from video streams and is applied in the field of virtual reality. It addresses problems such as poor inference results, difficult learning, and poor performance in practical applications, and it achieves the effects of improving accuracy and real-time performance, reducing nonlinearity, and avoiding cumulative errors.

Examples

Embodiment 1

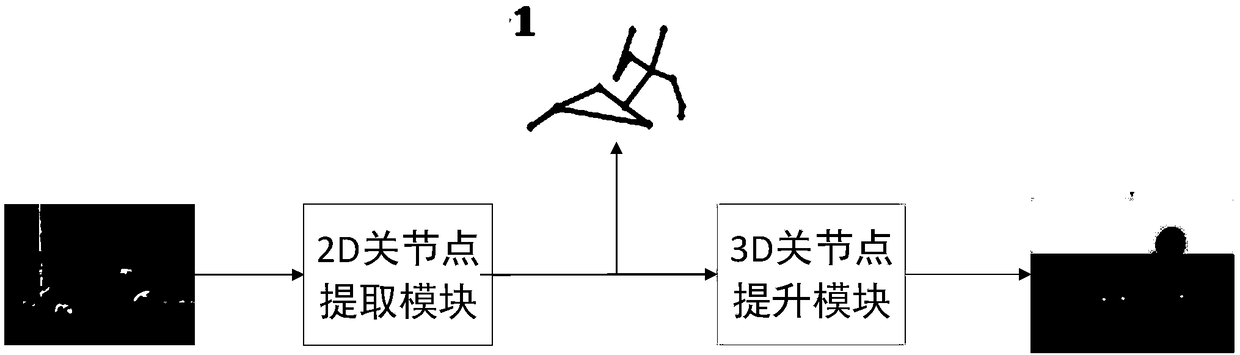

[0043] Example 1: As shown in image 3, the method for 3D human pose estimation based on a video stream proceeds as follows:

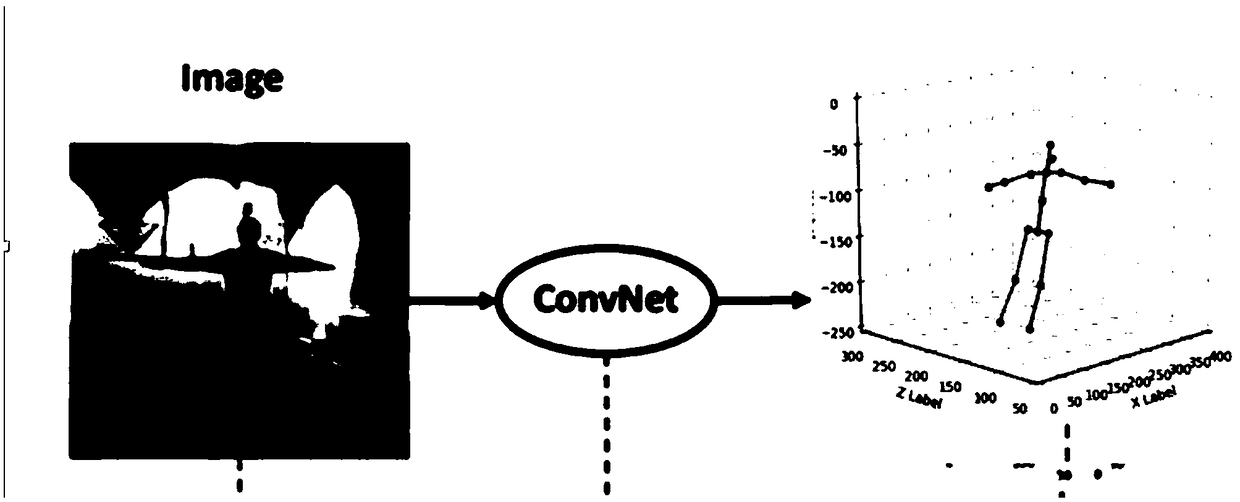

[0044] For the first frame of the video: 1) input the two-dimensional image of the current frame, use the hourglass network module to extract the two-dimensional posture of the human body, and generate a heat map of the two-dimensional joint points of the human body in the first frame; 2) output the heat map to the 3D joint point inference module, which performs the 2D-to-3D spatial mapping and generates the 3D joint point heat map of the human body.
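The joint-point heat maps mentioned here can be illustrated with a small sketch. It assumes the common convention of one heat map per joint, with the joint located at the highest-scoring cell; the source does not state how its heat maps are encoded or decoded. The function names, the Gaussian rendering, the grid size, and the joint names are all hypothetical.

```python
import math


def make_heatmap(width, height, cx, cy, sigma=1.5):
    """Render one joint as a 2D Gaussian peak centred on (cx, cy).

    Assumption: Gaussian targets are a common choice; the source does
    not specify the heat map shape.
    """
    return [
        [math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2))
         for x in range(width)]
        for y in range(height)
    ]


def decode_heatmap(heatmap):
    """Return (x, y, score) of the highest-scoring cell in a heat map."""
    best = (0, 0, float("-inf"))
    for y, row in enumerate(heatmap):
        for x, score in enumerate(row):
            if score > best[2]:
                best = (x, y, score)
    return best


# Hypothetical joints and pixel positions, for illustration only.
joints = {"head": (10, 4), "left_wrist": (3, 12)}
heatmaps = {name: make_heatmap(16, 16, cx, cy)
            for name, (cx, cy) in joints.items()}
decoded = {name: decode_heatmap(hm)[:2] for name, hm in heatmaps.items()}
```

Here `decoded` recovers the pixel position of each joint from its heat map. A 3D joint heat map could be read the same way with an added depth axis, though that extension is also an assumption.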

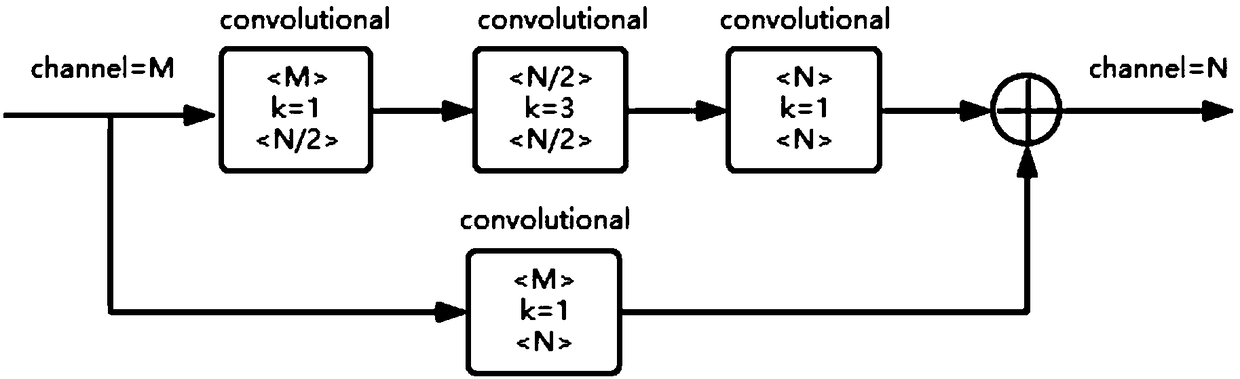

[0045] For the second frame of the video: 1) input the two-dimensional image of the current frame and use the shallow neural network module to generate a shallow image feature map; 2) input it to the LSTM module to generate a deep feature map; 3) output the deep image feature map generated from the current frame to the residual module to generate the heat map of the two-dimensional joint points of the human body in the c...
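The data flow described for the first and second frames can be traced with a minimal sketch. Every module below is a hypothetical placeholder callable, not the patent's trained network. Passing an explicit `state` through the LSTM stand-in and applying the second-frame path to all later frames are both assumptions. Because the source text is cut off after the 2D heat map step, the sketch stops there for later frames.

```python
from dataclasses import dataclass
from typing import Any, Callable, Tuple


@dataclass
class PoseModules:
    """Hypothetical callables standing in for the trained modules."""
    hourglass: Callable[[Any], Any]              # image -> 2D heat maps
    lift_to_3d: Callable[[Any], Any]             # 2D heat maps -> 3D heat maps
    shallow_net: Callable[[Any], Any]            # image -> shallow feature map
    lstm: Callable[[Any, Any], Tuple[Any, Any]]  # (features, state) -> (deep, state)
    residual: Callable[[Any], Any]               # deep features -> 2D heat maps


def process_frame(index, image, modules, state=None):
    """Route one frame through the path described for its position."""
    if index == 0:
        # First frame: hourglass network, then 2D-to-3D mapping.
        heat2d = modules.hourglass(image)
        heat3d = modules.lift_to_3d(heat2d)
        return {"heat2d": heat2d, "heat3d": heat3d}, state
    # Later frames (assumed to follow the second-frame path):
    # shallow network -> LSTM -> residual module -> 2D heat maps.
    shallow = modules.shallow_net(image)
    deep, state = modules.lstm(shallow, state)
    heat2d = modules.residual(deep)
    return {"heat2d": heat2d}, state


# String-tagging stubs make the order of module calls visible.
modules = PoseModules(
    hourglass=lambda img: f"hourglass({img})",
    lift_to_3d=lambda h: f"lift3d({h})",
    shallow_net=lambda img: f"shallow({img})",
    lstm=lambda feat, st: (f"lstm({feat})", (st or 0) + 1),
    residual=lambda deep: f"residual({deep})",
)
first, state = process_frame(0, "frame0", modules)
second, state = process_frame(1, "frame1", modules, state)
```

With these stubs, `first` and `second` hold nested strings that show which modules each frame passed through and in what order.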
