Mobile robot visual navigation method based on deep learning

A deep-learning-based visual navigation method for mobile robots. It addresses the inability to recognize objects and the high price of lidar, and achieves navigation at low cost.

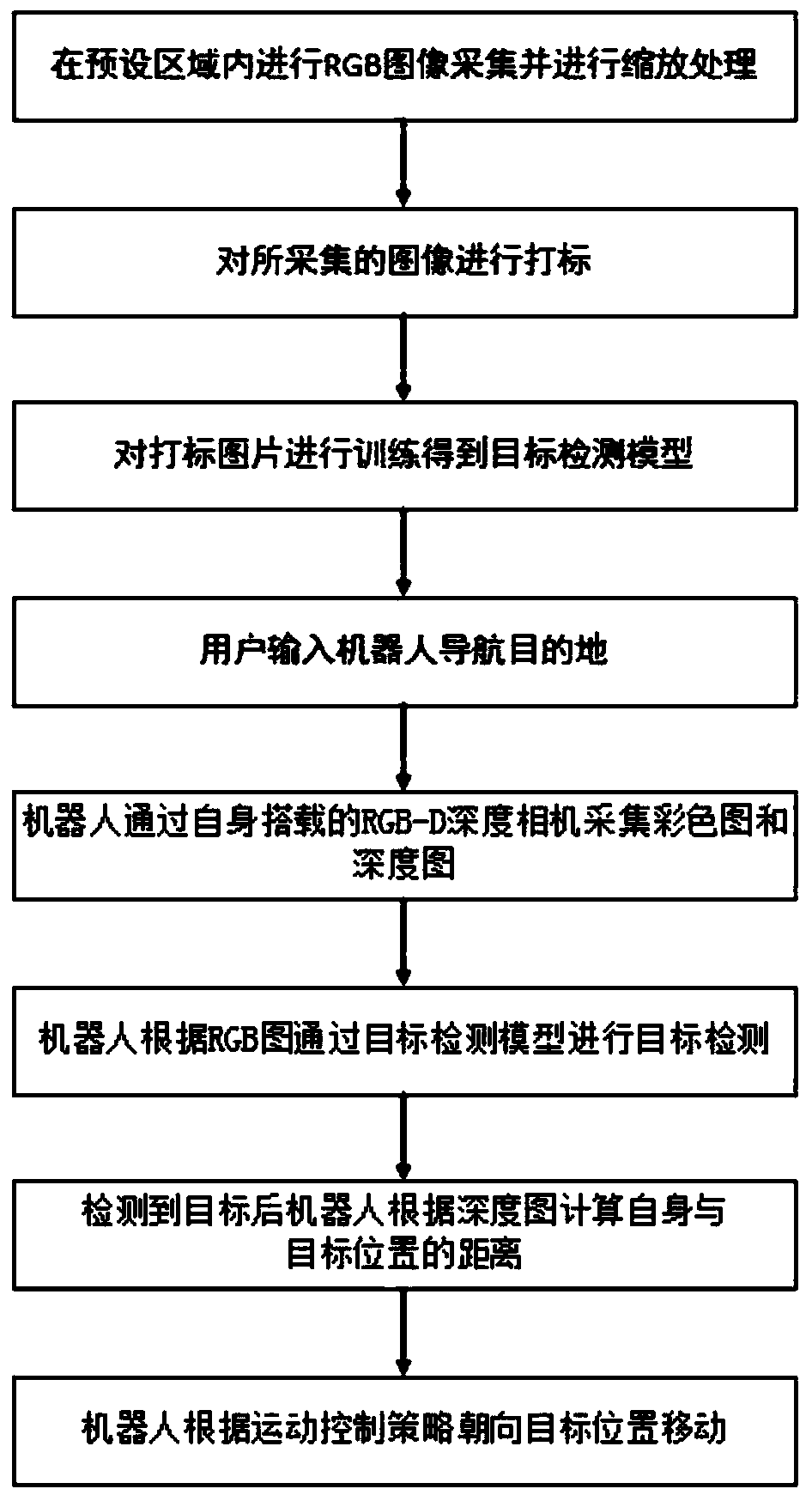


Detailed Description of the Embodiments

[0034] Embodiments of the present invention are described in detail below. This embodiment is implemented on the basis of the technical solution of the present invention, and a detailed implementation and specific operating procedure are provided, but the scope of protection of the present invention is not limited to the following embodiments.

[0035] Step 1: The robot explores the unknown environment at random, captures images at a given frequency, and names and saves the images in the order in which they were taken.

[0036] In this embodiment, the environment is the interior of a building. Because the environment is unknown to the robot, the robot must explore the space at random in order to become familiar with it, and it records the spatial information of the environment as it explores.

[0037] The RGB-D camera captures images from various angles throughout the environment at a high frequency. The image collection in this step only saves the color...
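The capture-and-name procedure of Step 1 can be sketched as follows. This is only an illustrative sketch under stated assumptions, not the patent's implementation: `frame_filename`, `capture_sequence`, and the `grab_frame` callable are hypothetical names, the camera is assumed to be wrapped by a function that returns one already-encoded image as bytes, and the file naming scheme (a zero-padded index followed by a millisecond timestamp) is just one way of making file names sort in shooting order.

```python
import time
from pathlib import Path
from typing import Callable, List


def frame_filename(index: int, timestamp: float) -> str:
    """Build a file name that sorts lexicographically in capture order.

    The zero-padded index comes first, followed by the capture time in
    milliseconds. Both fields are hypothetical naming choices.
    """
    return f"{index:06d}_{int(timestamp * 1000):013d}.png"


def capture_sequence(
    grab_frame: Callable[[], bytes],
    out_dir: str,
    frequency_hz: float,
    num_frames: int,
    clock: Callable[[], float] = time.time,
    sleep: Callable[[float], None] = time.sleep,
) -> List[Path]:
    """Save num_frames images at roughly frequency_hz, named in time order.

    grab_frame is an assumed wrapper around the camera driver that
    returns one encoded image as bytes.
    """
    if frequency_hz <= 0:
        raise ValueError("frequency_hz must be positive")

    period = 1.0 / frequency_hz
    target = Path(out_dir)
    target.mkdir(parents=True, exist_ok=True)

    saved: List[Path] = []
    for index in range(num_frames):
        start = clock()
        data = grab_frame()
        path = target / frame_filename(index, start)
        path.write_bytes(data)
        saved.append(path)

        # Wait out the rest of the period so captures stay near the
        # requested frequency.
        remaining = period - (clock() - start)
        if remaining > 0:
            sleep(remaining)
    return saved
```

Because the index is zero-padded, listing the output directory in sorted order returns the images in the order they were taken, which is what the later steps would need when reading the sequence back.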
