A fast monocular visual odometry navigation and positioning method combining the feature point method and the direct method

A monocular vision and feature fusion technology in the field of navigation and positioning. By fusing the feature point method with the direct method for navigation and positioning, it addresses poor robustness under large-baseline motion and high requirements on camera intrinsic parameters. Reported effects: reduced average tracking time, improved robustness and stability, and a higher running frame rate.


Embodiment

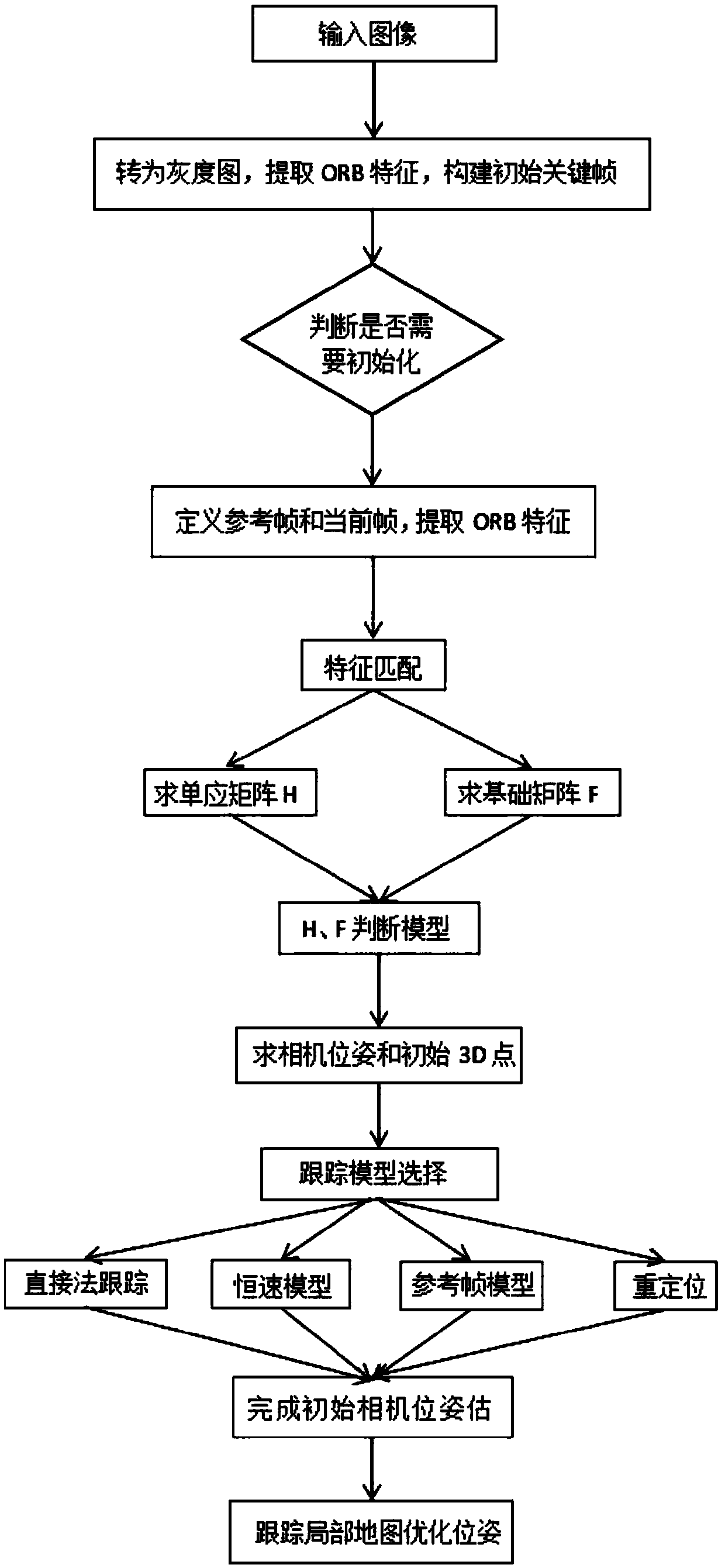

[0070] As shown in Figure 1, the present embodiment is a fast monocular visual odometry navigation and positioning method that combines the feature point method and the direct method. It includes the following steps:

[0071] S1. Start the visual odometry, acquire the first image frame $I_1$, convert it to a grayscale image, extract ORB feature points, and construct an initialization keyframe.
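Step S1 can be sketched in plain Python as below. This is an illustration under stated assumptions, not the patented implementation: the grayscale conversion uses the BT.601 luma weights (the weights OpenCV documents for RGB to gray), and the `KeyFrame` record and `build_initial_keyframe` helper are hypothetical names. ORB extraction itself is assumed to be done by an external ORB detector and is passed in as precomputed keypoints and 256-bit descriptors stored as Python ints.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# BT.601 luma weights, as documented for OpenCV's RGB -> GRAY conversion.
R_W, G_W, B_W = 0.299, 0.587, 0.114


def to_grayscale(rgb: List[List[Tuple[int, int, int]]]) -> List[List[int]]:
    """Convert an RGB image (rows of (r, g, b) tuples in 0..255) to 8-bit grayscale."""
    return [
        [min(255, int(round(R_W * r + G_W * g + B_W * b))) for (r, g, b) in row]
        for row in rgb
    ]


@dataclass
class KeyFrame:
    """Hypothetical keyframe record: frame id, grayscale image, ORB features."""
    frame_id: int
    gray: List[List[int]]
    keypoints: List[Tuple[float, float]] = field(default_factory=list)
    descriptors: List[int] = field(default_factory=list)  # 256-bit ORB descriptors as ints


def build_initial_keyframe(rgb, keypoints, descriptors) -> KeyFrame:
    """Sketch of S1: grayscale the first frame I_1 and attach its ORB features.

    The ORB keypoints/descriptors are assumed to come from an external detector.
    """
    if len(keypoints) != len(descriptors):
        raise ValueError("each keypoint needs exactly one descriptor")
    return KeyFrame(
        frame_id=1,
        gray=to_grayscale(rgb),
        keypoints=list(keypoints),
        descriptors=list(descriptors),
    )
```

Keeping the grayscale image inside the keyframe is a deliberate choice in this sketch: a direct method works on pixel intensities, so a hybrid pipeline would plausibly need the intensity image as well as the ORB features.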

[0072] S2. Determine whether the system has been initialized. If it has, go to step S6; otherwise, go to step S3.
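The branch in S2 amounts to a two-state dispatch per incoming frame. The sketch below assumes hypothetical callbacks `try_initialize` (standing in for S3 onward, returning whether initialization succeeded) and `track` (standing in for S6, whose details are not part of this excerpt); it only shows the routing logic.

```python
from enum import Enum
from typing import Callable, Iterable, List


class Step(Enum):
    INITIALIZE = "S3"  # initialize from a reference frame and a current frame
    TRACK = "S6"       # tracking branch (contents not shown in this excerpt)


def next_step(initialized: bool) -> Step:
    """S2: route a frame to tracking (S6) if initialized, else to initialization (S3)."""
    return Step.TRACK if initialized else Step.INITIALIZE


def run(frames: Iterable, try_initialize: Callable[[object], bool],
        track: Callable[[object], None]) -> List[str]:
    """Process frames in order and return the step label taken for each frame.

    try_initialize and track are hypothetical placeholders for S3.. and S6...
    """
    initialized = False
    log: List[str] = []
    for frame in frames:
        step = next_step(initialized)
        if step is Step.INITIALIZE:
            initialized = try_initialize(frame)
        else:
            track(frame)
        log.append(step.value)
    return log
```

For example, if initialization only succeeds on the second frame, `run([1, 2, 3], lambda f: f >= 2, lambda f: None)` routes the first two frames to S3 and the third to S6.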

[0073] S3. Define a reference frame and a current frame, extract ORB features, and perform feature matching.
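The excerpt does not say how ORB features are matched in S3. A common choice for binary ORB descriptors is brute-force matching under Hamming distance with a mutual nearest-neighbour (cross-check) test, which is what OpenCV's `BFMatcher` does with `NORM_HAMMING` and `crossCheck=True`. The pure-Python sketch below shows that technique; the `max_dist` threshold of 64 bits is an assumed value, not one taken from the source.

```python
from typing import List, Optional, Tuple


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def best_match(query: int, candidates: List[int]) -> Tuple[int, Optional[int]]:
    """Return (index, distance) of the candidate nearest to query; (-1, None) if empty."""
    best_idx, best_dist = -1, None
    for j, c in enumerate(candidates):
        d = hamming(query, c)
        if best_dist is None or d < best_dist:
            best_idx, best_dist = j, d
    return best_idx, best_dist


def match_orb(ref: List[int], cur: List[int],
              max_dist: int = 64) -> List[Tuple[int, int, int]]:
    """Brute-force Hamming matching between reference and current descriptors.

    Keeps (ref_index, cur_index, distance) only when the two descriptors are
    each other's nearest neighbour and the distance is at most max_dist
    (an assumed threshold).
    """
    if not ref or not cur:
        return []
    matches = []
    for i, d_ref in enumerate(ref):
        j, dist = best_match(d_ref, cur)
        back, _ = best_match(cur[j], ref)  # cross-check in the reverse direction
        if back == i and dist <= max_dist:
            matches.append((i, j, dist))
    return matches
```

The cross-check discards one-sided matches cheaply, at the cost of a second nearest-neighbour search per candidate; a ratio test is a common alternative when more matches are needed.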

