A visual positioning method based on a diversified and discriminative candidate box generation network

A visual positioning technique using candidate boxes, applied in the field of deep neural networks, that addresses the problem of high computational complexity.

Embodiment Construction

[0110] The detailed parameters of the present invention are described further below.

[0111] Step (1): train the diversified and discriminative candidate box generation network (Diversified and Discriminative Proposal Networks, DDPN).

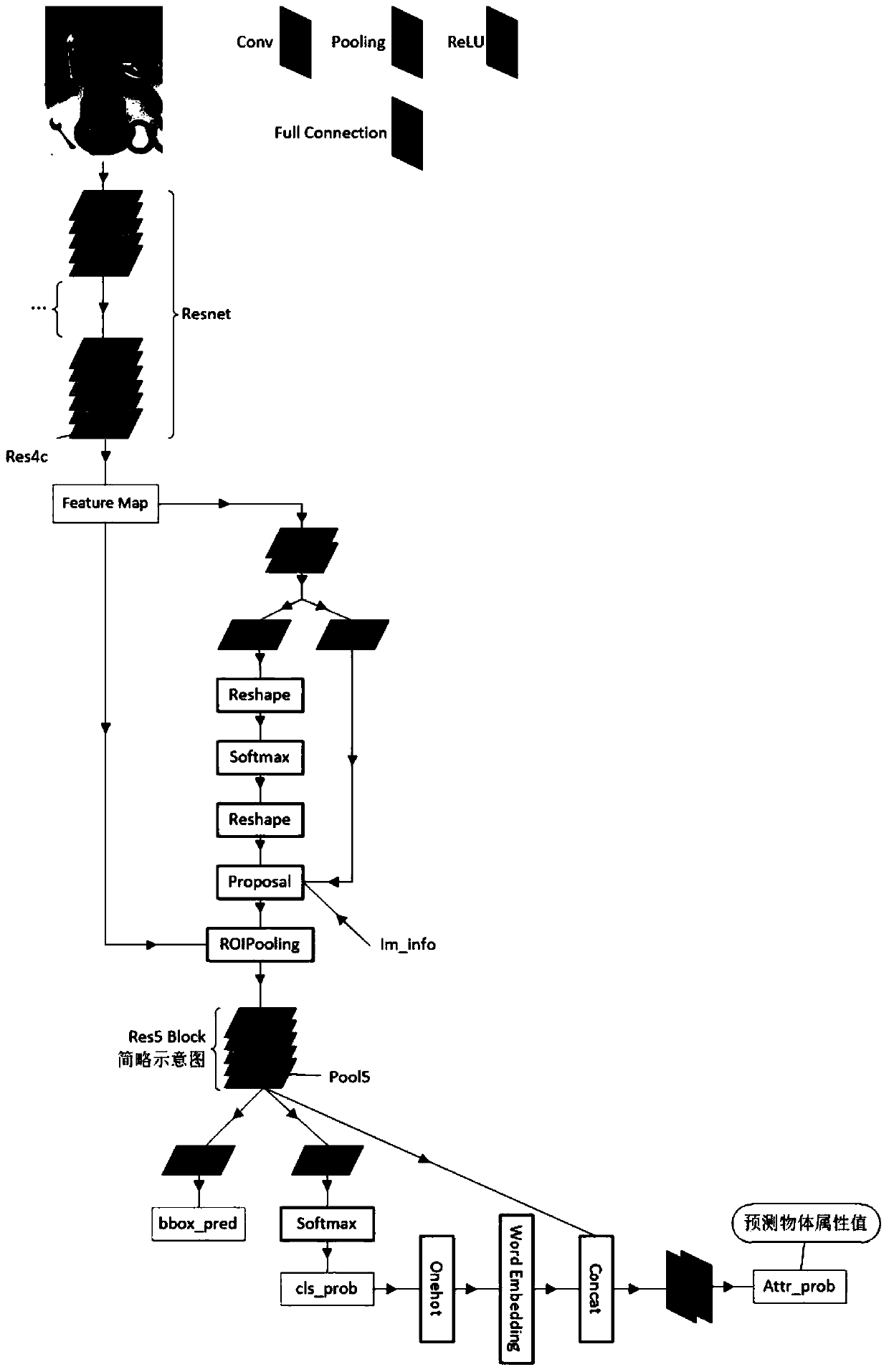

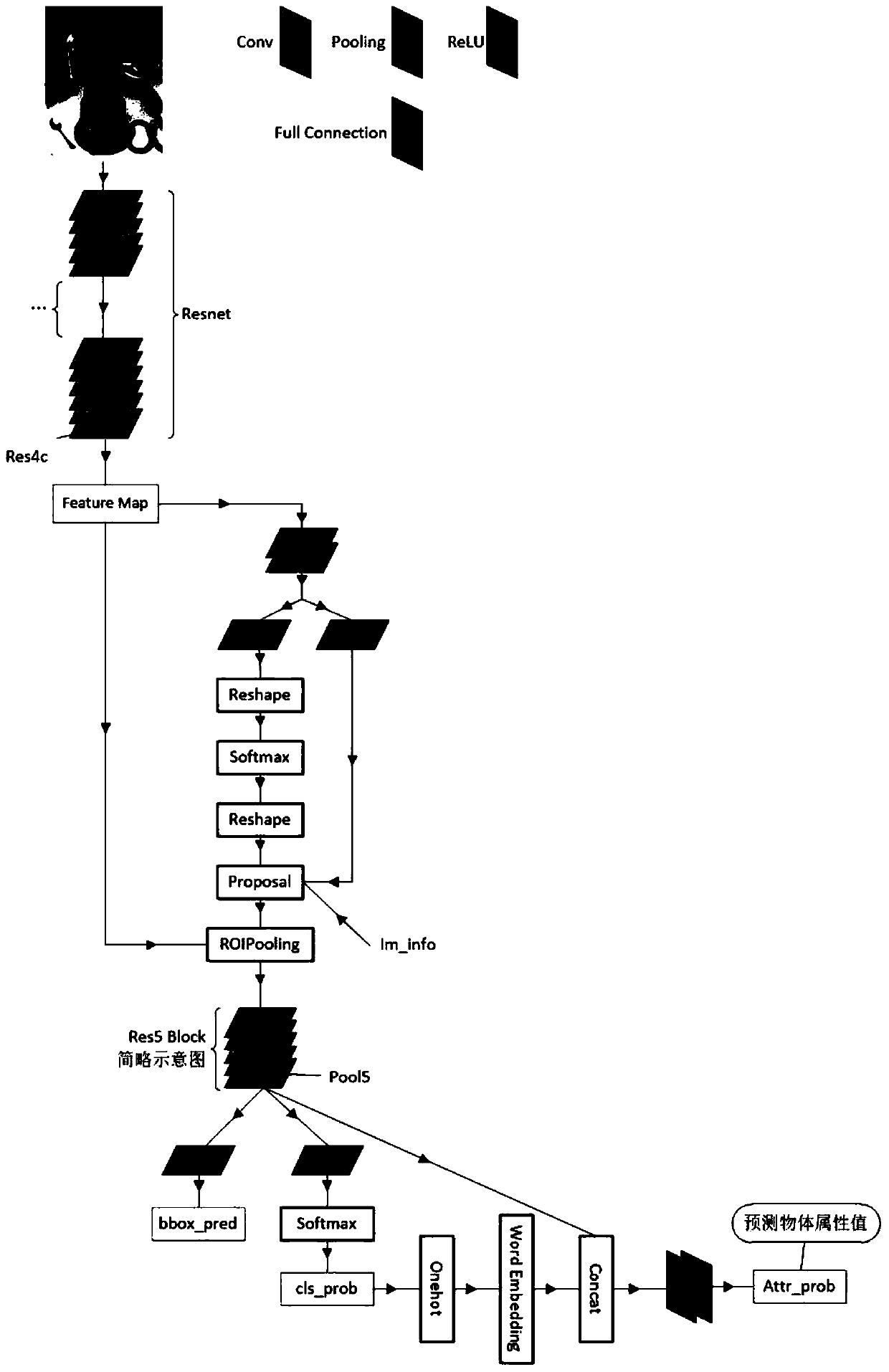

[0112] Take Faster R-CNN (an object detection algorithm) and add a branch that predicts the attribute values of each object, as shown in Figure 1. Train it on the Visual Genome dataset until the network converges; the resulting converged network is called the DDPN network.
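The idea of adding attribute prediction to the detector can be illustrated as one extra term in the per-box training loss. The following is a minimal sketch, not the patented training procedure. It assumes a single attribute label per object, a softmax cross-entropy attribute loss, and a balancing factor `attr_weight`. None of these are stated in the text, and all function names are hypothetical.

```python
import math


def softmax_cross_entropy(logits, target):
    """Cross-entropy for one example, given raw logits and a target index."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[target]


def smooth_l1(pred, target):
    """Smooth L1 loss summed over the box-regression offsets."""
    total = 0.0
    for p, t in zip(pred, target):
        d = abs(p - t)
        total += 0.5 * d * d if d < 1.0 else d - 0.5
    return total


def detection_loss(cls_logits, cls_target, box_pred, box_target,
                   attr_logits, attr_target, attr_weight=1.0):
    """Per-box head loss: class + box regression + attribute term.

    The first two terms follow the usual Faster R-CNN head loss.
    The attribute term and attr_weight are assumptions for illustration.
    """
    loss_cls = softmax_cross_entropy(cls_logits, cls_target)
    loss_box = smooth_l1(box_pred, box_target)
    loss_attr = softmax_cross_entropy(attr_logits, attr_target)
    return loss_cls + loss_box + attr_weight * loss_attr
```

In this sketch the attribute branch shares the per-box features with the class and box branches, so training on attribute labels also shapes the features that are later extracted for each candidate box.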

[0113] Step (2), using the DDPN network to extract features from the image, proceeds as follows:

[0114] 2-1. Here the DDPN network is used to predict 100 candidate boxes in the input image.

[0115] 2-2. Input the image regions corresponding to the 100 candidate boxes into the DDPN network, extract the output of the Pool5 layer as the feature pf corresponding to each candidate box, and splice the features corresponding to all the...
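Steps 2-1 and 2-2 can be sketched as keeping the highest-scoring candidate boxes and stacking one feature vector per box. This is only an illustration under stated assumptions. The source text is truncated, so the row-per-box layout of the spliced result is a guess. `toy_extractor` is a hypothetical stand-in for the Pool5 output of the DDPN network, and all function names are invented for this example.

```python
def select_top_boxes(boxes, scores, k=100):
    """Keep the k highest-scoring candidate boxes (k = 100 in the text)."""
    ranked = sorted(zip(scores, boxes), key=lambda pair: pair[0], reverse=True)
    return [box for _, box in ranked[:k]]


def build_feature_matrix(boxes, extract_feature):
    """Stack one feature vector per box into a k x d list of rows.

    The row-per-box layout is an assumption; the source is cut off here.
    """
    rows = [list(extract_feature(box)) for box in boxes]
    dim = len(rows[0]) if rows else 0
    if any(len(row) != dim for row in rows):
        raise ValueError("all candidate-box features must share one dimension")
    return rows


def toy_extractor(box):
    """Hypothetical stand-in for the Pool5 feature: width, height, area."""
    x1, y1, x2, y2 = box
    w, h = x2 - x1, y2 - y1
    return [w, h, w * h]


if __name__ == "__main__":
    boxes = [(0, 0, 2, 2), (0, 0, 4, 1), (1, 1, 2, 3)]
    scores = [0.2, 0.9, 0.5]
    top = select_top_boxes(boxes, scores, k=2)
    print(build_feature_matrix(top, toy_extractor))
```

In a real pipeline `extract_feature` would be replaced by a call that pools the region from the network's feature map; the toy version only keeps the sketch self-contained.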
