Dynamic two-level cache flash translation layer address mapping method based on page-level mapping

A flash translation layer and two-level cache technique, applied in the field of computer science, that addresses I/O performance degradation. Its stated effects are improved response time, improved performance, and a reduced number of modifications.

Embodiment Construction

[0045] To state the purpose, technical solution, and advantages of the present invention more clearly, the embodiments of the invention are described in detail below with reference to the drawings and specific implementation steps. This description is not intended to limit the present invention.

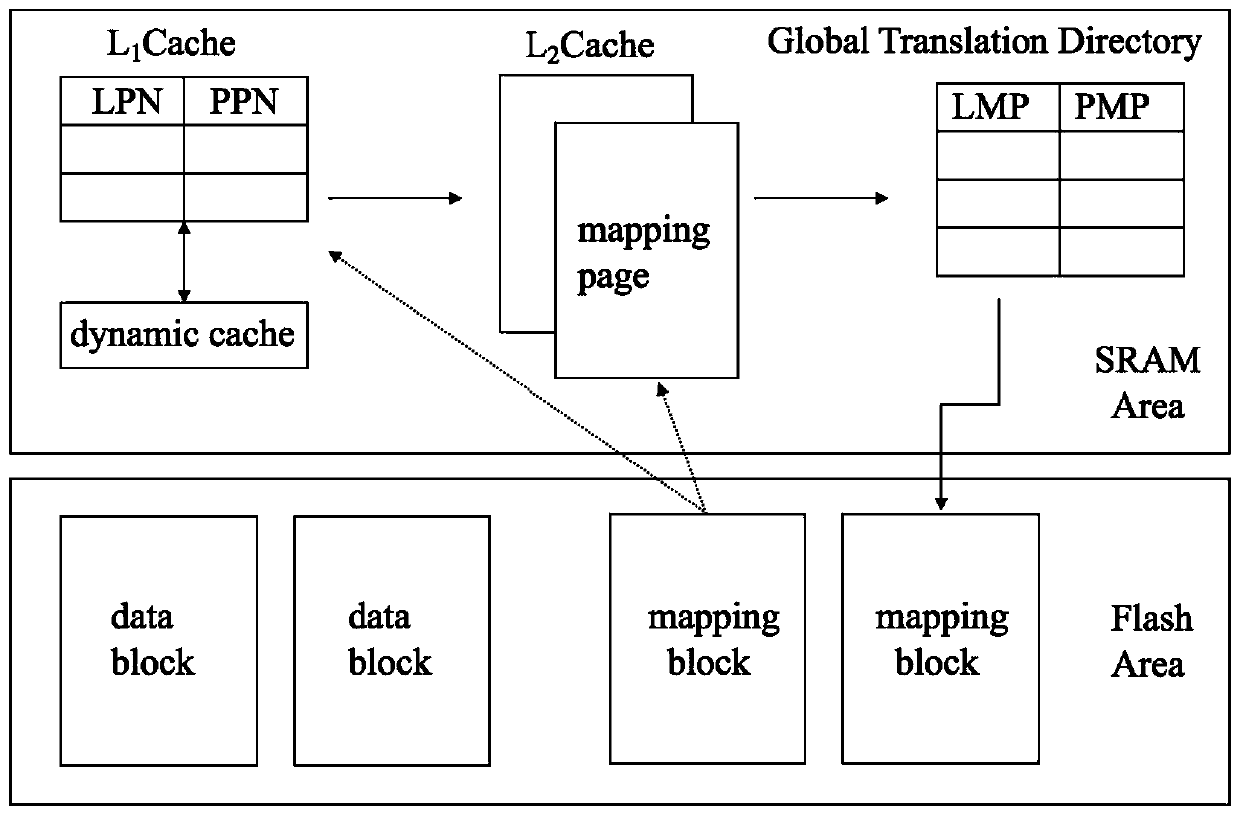

[0046] Figure 1 is a schematic diagram of the structure of the page-level-mapping-based dynamic two-level cache flash translation layer address mapping method of the present invention.

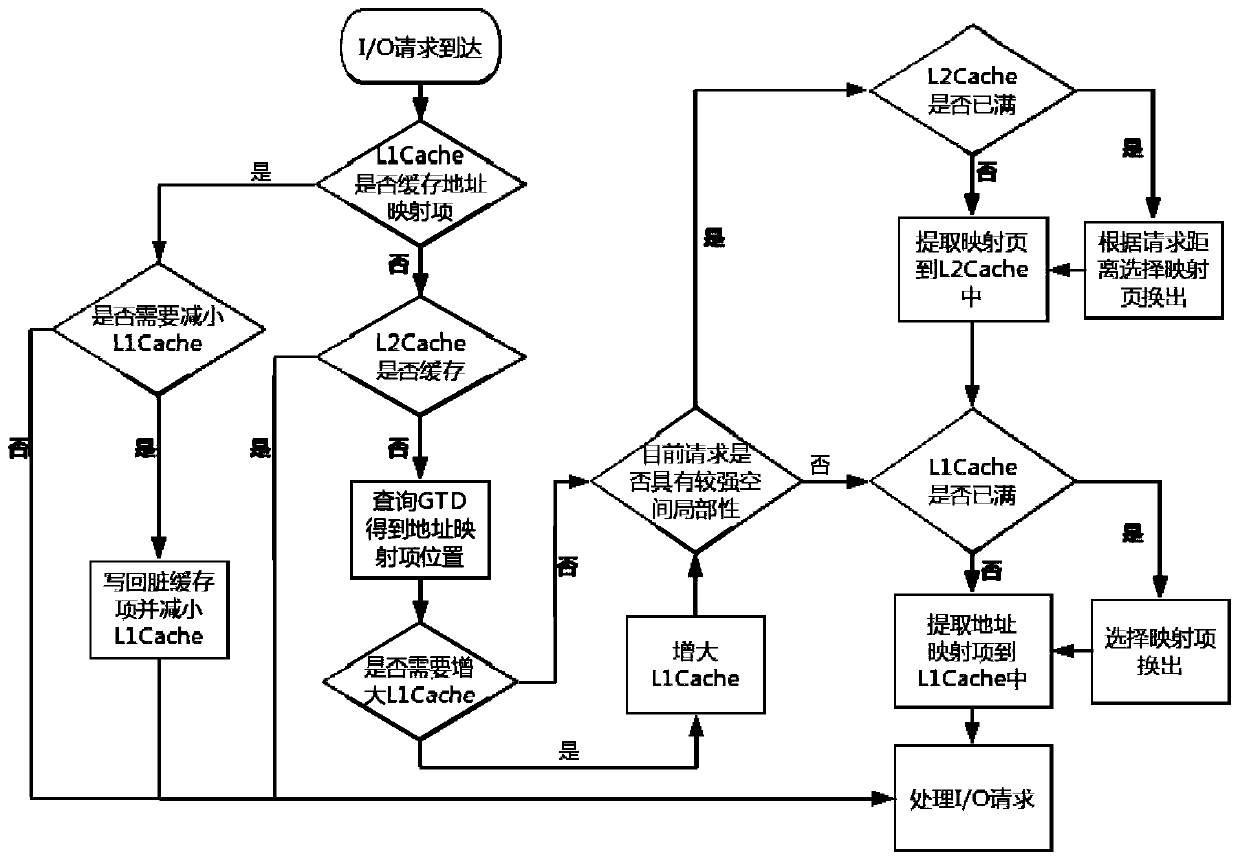

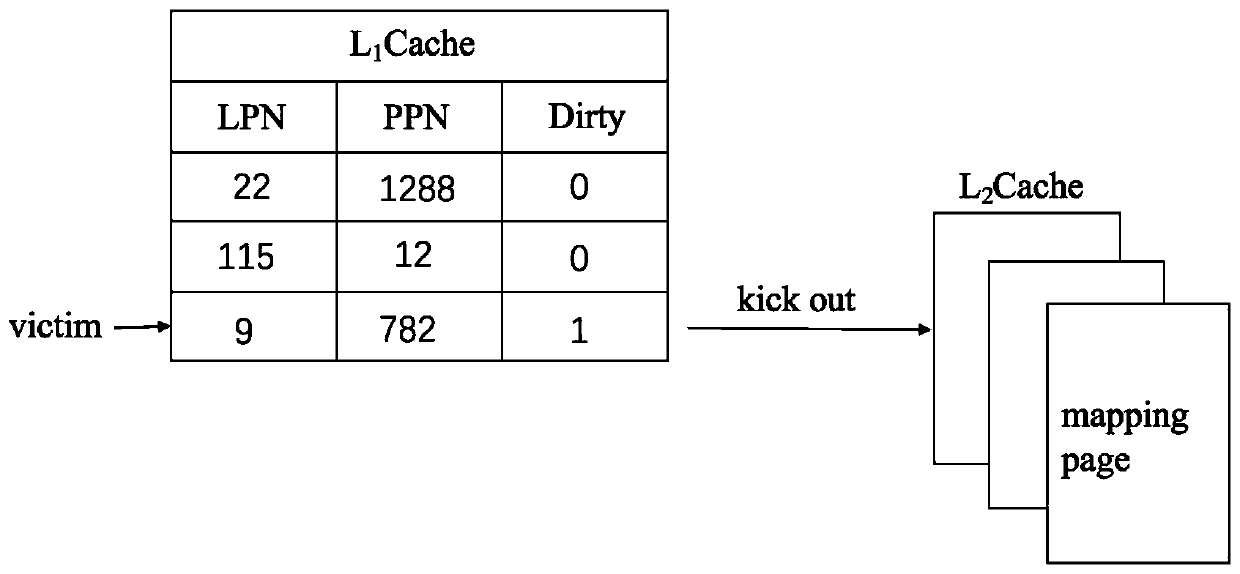

[0047] The present invention exploits the temporal locality and spatial locality of sequential I/O by providing a first-level cache, L1 Cache, and a second-level cache, L2 Cache, and by applying a different cache management strategy at each level. The first-level cache L1 Cache caches individual address mapping entries, while the second-level cache L2 Cache caches entire mapping pages. A spatial locality detection method is applied on the second-level cache to detect a certain number of cu...
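The split between the two levels can be illustrated with a minimal read-path sketch in Python. It is not the patent's implementation: it assumes LRU replacement at both levels, a hypothetical `ENTRIES_PER_PAGE` of 4, and a plain dict standing in for the on-flash mapping table. The `sequential` flag is a hypothetical placeholder for the spatial locality detection on the second-level cache, whose criteria are cut off in this excerpt.

```python
from collections import OrderedDict

# Hypothetical constant: number of LPN -> PPN entries held in one mapping page.
ENTRIES_PER_PAGE = 4


class TwoLevelMappingCache:
    """Read-path sketch of a two-level FTL mapping cache.

    L1 caches single LPN -> PPN entries; L2 caches whole mapping pages.
    LRU replacement at both levels is an assumption, not the patent's policy.
    """

    def __init__(self, flash_table, l1_capacity, l2_capacity):
        # flash_table: page index -> list of PPNs; stands in for the on-flash table.
        self.flash_table = flash_table
        self.l1 = OrderedDict()  # LPN -> PPN
        self.l2 = OrderedDict()  # page index -> list of PPNs
        self.l1_capacity = l1_capacity
        self.l2_capacity = l2_capacity
        self.flash_reads = 0  # number of mapping-page reads from "flash"

    def lookup(self, lpn, sequential=False):
        """Return the PPN for lpn.

        `sequential` is a hypothetical stand-in for the spatial locality
        detection on L2; its real criteria are not given in this excerpt.
        """
        if lpn in self.l1:  # L1 hit: a single cached mapping entry
            self.l1.move_to_end(lpn)
            return self.l1[lpn]
        page_idx, offset = divmod(lpn, ENTRIES_PER_PAGE)
        if page_idx in self.l2:  # L2 hit: the whole mapping page is cached
            self.l2.move_to_end(page_idx)
            return self.l2[page_idx][offset]
        # Miss at both levels: read the mapping page from flash.
        self.flash_reads += 1
        page = list(self.flash_table[page_idx])
        if sequential:
            # Sequential access detected: keep the whole page in L2.
            self.l2[page_idx] = page
            if len(self.l2) > self.l2_capacity:
                self.l2.popitem(last=False)  # evict least recently used page
        else:
            # Random access: keep only the single entry in L1.
            self.l1[lpn] = page[offset]
            if len(self.l1) > self.l1_capacity:
                self.l1.popitem(last=False)  # evict least recently used entry
        return page[offset]


# Toy table: mapping page p holds PPNs 100*p + offset.
table = {p: [100 * p + i for i in range(ENTRIES_PER_PAGE)] for p in range(4)}
cache = TwoLevelMappingCache(table, l1_capacity=2, l2_capacity=1)
for lpn in range(4, 8):  # sequential run inside mapping page 1
    cache.lookup(lpn, sequential=True)
print(cache.flash_reads)  # 1: one page read serves the whole run
print(cache.lookup(13))   # 301: random access, page 3, offset 1
print(cache.flash_reads)  # 2
```

The sketch shows the intended trade-off: a random lookup occupies one L1 slot per mapping entry, while a detected sequential run occupies one L2 slot for an entire mapping page, so a single flash read can serve every lookup in the run.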

© 2025 PatSnap. All rights reserved.