Neural network model block compression method, training method, computing device and system

This network model and neural network technology, applied in the field of neural networks, addresses three problems: models that cannot be compressed, reduced running speed of the memristor array, and neural network models that cannot be adapted to the chip. It achieves the effect of saving resource overhead.


Embodiment Construction

[0043] In order to enable those skilled in the art to better understand the present invention, the present invention will be further described in detail below in conjunction with the accompanying drawings and specific embodiments.

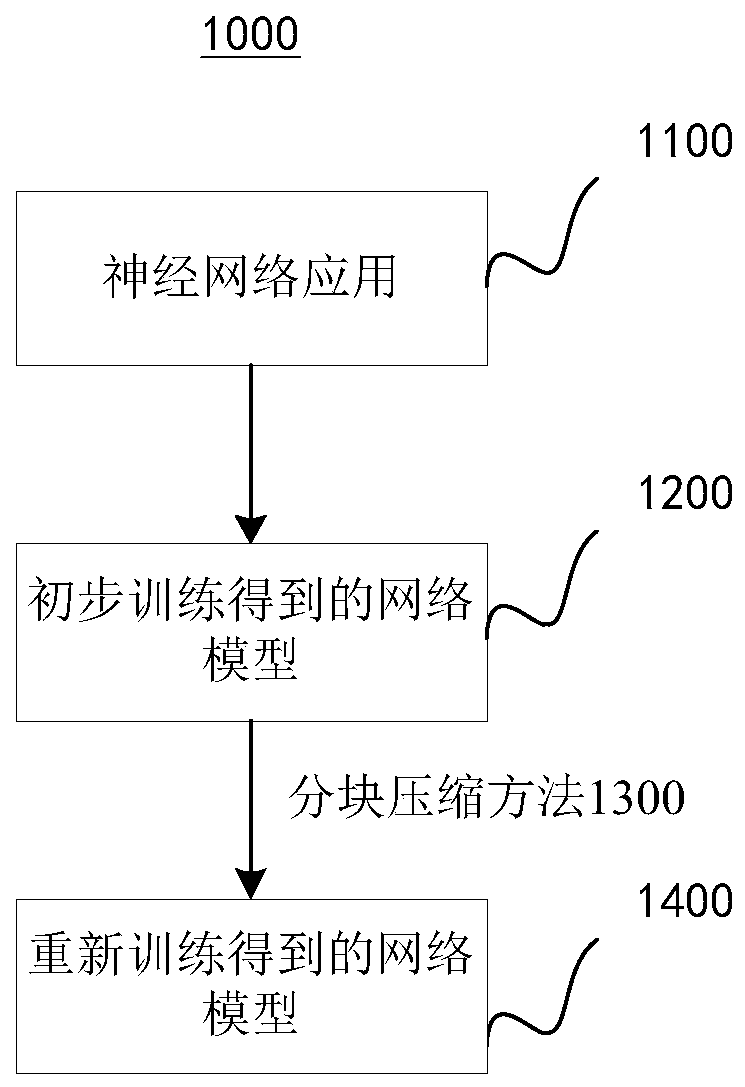

[0044] Figure 3 is a schematic diagram of an application scenario 1000 of the neural network model block compression technology according to the present invention.

[0045] As shown in Figure 3, the general inventive concept of the present disclosure is as follows. Preliminary neural network training is performed on the neural network application 1100 to learn the network model 1200. The network model 1200 is then block-compressed at a predetermined compression rate by the network model block compression method 1300 and retrained. This cycle of compression, retraining, recompression, retraining, and so on is iterated to fine-tune the model and improve its accuracy, until the predetermined iteration termination requi...
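This excerpt describes only the outer compress-retrain loop. It does not describe the internals of block compression method 1300 or the exact termination requirement. The Python sketch below shows one way such a loop could be structured. It rests on assumptions that do not come from the patent:

- Blocks are square `block x block` tiles of a weight matrix.
- Each block is scored by its L2 norm, a swapped-in magnitude-based block-pruning criterion.
- The predetermined compression rate is the fraction of blocks zeroed.
- The loop stops when a target accuracy or an iteration cap is reached.

All names (`block_compress`, `compress_retrain`, `retrain`, `target_acc`, `max_iters`) are hypothetical. `retrain` is a caller-supplied placeholder for real fine-tuning.

```python
import math
from typing import Callable, List, Tuple

Matrix = List[List[float]]
Mask = List[List[bool]]  # True = weight kept, False = pruned


def block_norms(weights: Matrix, block: int) -> List[Tuple[float, int, int]]:
    """Return (L2 norm, row offset, col offset) for every block x block tile."""
    rows, cols = len(weights), len(weights[0])
    scores = []
    for br in range(0, rows, block):
        for bc in range(0, cols, block):
            sq = sum(
                weights[r][c] ** 2
                for r in range(br, min(br + block, rows))
                for c in range(bc, min(bc + block, cols))
            )
            scores.append((math.sqrt(sq), br, bc))
    return scores


def block_compress(weights: Matrix, block: int, rate: float) -> Mask:
    """Zero (in place) the fraction `rate` of blocks with the smallest L2 norm.

    Assumption: whole blocks are pruned so that the remaining nonzero tiles
    could map onto fixed-size hardware arrays. Returns the keep-mask.
    """
    rows, cols = len(weights), len(weights[0])
    mask = [[True] * cols for _ in range(rows)]
    scores = sorted(block_norms(weights, block))  # ascending by norm
    n_prune = int(len(scores) * rate)
    for _, br, bc in scores[:n_prune]:
        for r in range(br, min(br + block, rows)):
            for c in range(bc, min(bc + block, cols)):
                mask[r][c] = False
                weights[r][c] = 0.0
    return mask


def compress_retrain(
    weights: Matrix,
    block: int,
    rate: float,
    retrain: Callable[[Matrix, Mask], float],
    target_acc: float,
    max_iters: int,
) -> Tuple[Mask, float, int]:
    """Alternate block compression and retraining.

    Hypothetical termination rule: stop once `retrain` reports an accuracy
    >= target_acc, or after max_iters rounds.
    """
    mask: Mask = []
    acc, iters = 0.0, 0
    for _ in range(max_iters):
        iters += 1
        mask = block_compress(weights, block, rate)  # compress at fixed rate
        acc = retrain(weights, mask)                 # fine-tune with mask
        if acc >= target_acc:
            break
    return mask, acc, iters


# Usage with a toy 4x4 matrix split into four 2x2 blocks.
W = [
    [0.9, 0.8, 0.01, 0.02],
    [0.7, 0.6, 0.03, 0.01],
    [0.05, 0.02, 0.5, 0.4],
    [0.01, 0.04, 0.3, 0.6],
]
fake_scores = iter([0.80, 0.88, 0.93])  # placeholder numbers, not measurements


def stub_retrain(w: Matrix, m: Mask) -> float:
    # Stand-in for real fine-tuning: only holds pruned entries at zero.
    for r, row in enumerate(m):
        for c, keep in enumerate(row):
            if not keep:
                w[r][c] = 0.0
    return next(fake_scores)


final_mask, final_acc, rounds = compress_retrain(W, 2, 0.5, stub_retrain, 0.9, 5)
print(rounds, final_acc)  # 3 0.93
```

In this toy run, the two low-magnitude off-diagonal 2x2 blocks are zeroed. Because the stub keeps them at zero, each recompression selects the same blocks, and the loop stops on the third round when the placeholder score reaches the target. A real `retrain` would run gradient updates with the mask applied. Choosing `block` to match a memristor array tile size is a plausible design choice, but this excerpt does not confirm it.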

