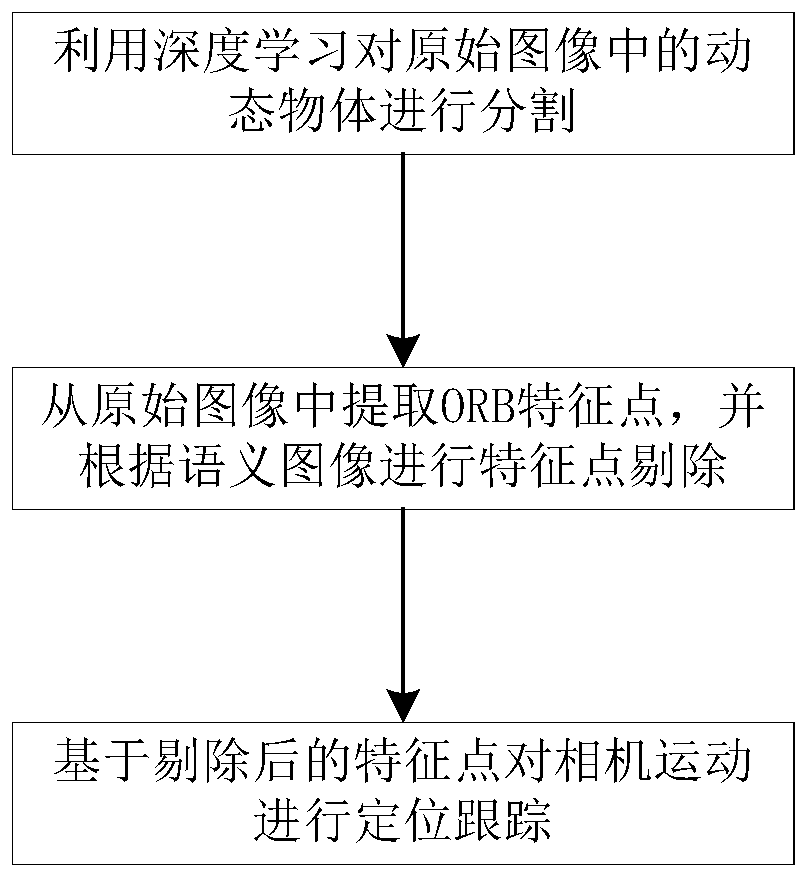

Dynamic scene visual positioning method based on image semantic segmentation

A technology for semantic segmentation in dynamic scenes, applied in image analysis, image data processing, instruments, etc. It addresses the problem that positioning accuracy and robustness need to be improved, and achieves improved positioning accuracy and robustness.

AI Technical Summary

Problems solved by technology

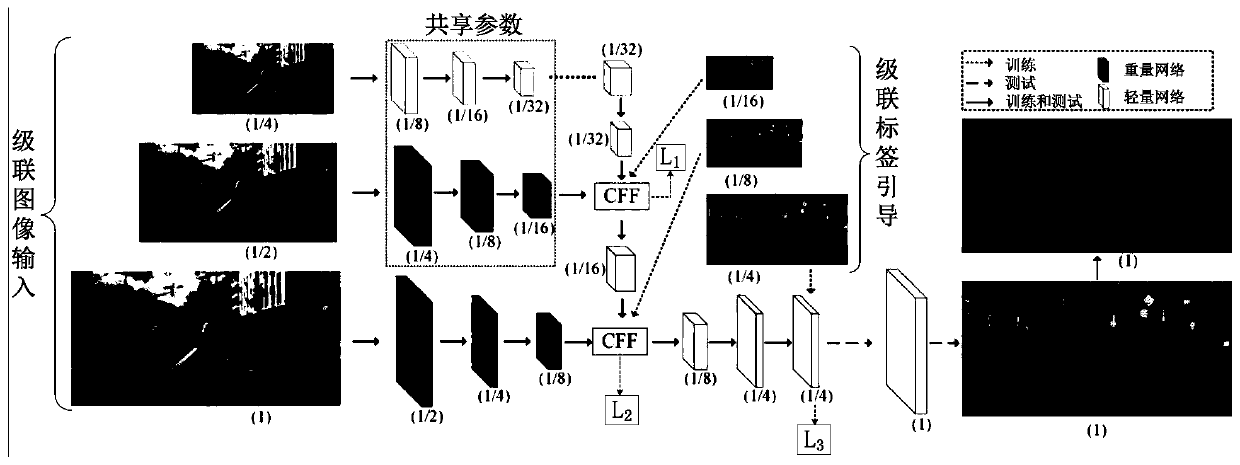

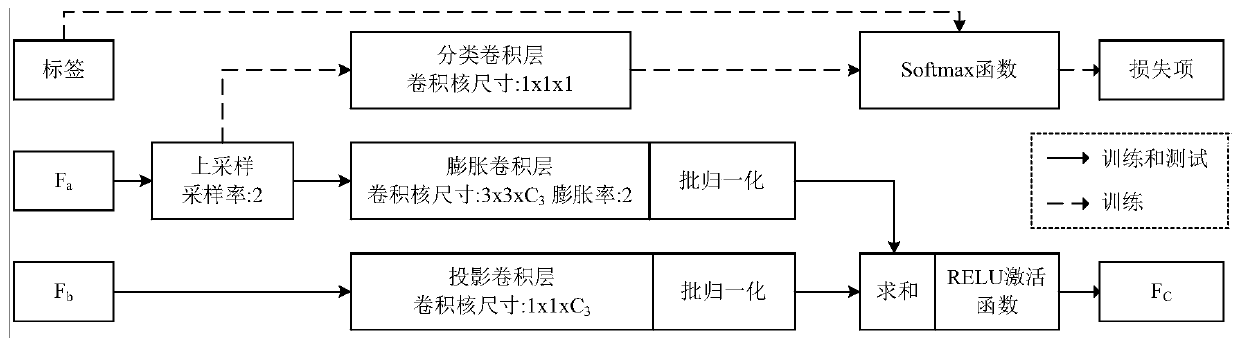

Method used

Examples

Embodiment 1

[0081] The present invention is evaluated on the Frankfurt monocular image sequence, which is part of the Cityscapes dataset. The full Frankfurt sequence provides more than 100,000 frames of outdoor images together with ground-truth localization results. The sequence is divided into several shorter sequences of 1300-2500 frames each, containing dynamic objects such as moving cars or pedestrians. The experimental platform is configured as follows: Intel Xeon E5-2690 v4; 128 GB RAM; NVIDIA Titan V GPU.

[0082] The sequences extracted from the original Frankfurt sequence are as follows:

[0083] Seq.01: frankfurt_000001_054140_leftImg8bit.png to frankfurt_000001_056555_leftImg8bit.png

[0084] Seq.02: frankfurt_000001_012745_leftImg8bit.png to frankfurt_000001_014100_leftImg8bit.png

[0085] Seq.03: frankfurt_000001_003311_leftImg8bit.png to frankfurt_000001_005555_leftImg8bit.png

[0086] Seq.04: frankfurt_000001_010580_leftImg8bit.png to frankfurt_000001_012739_leftImg8bit.png

...
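The sub-sequences listed above are defined only by their first and last file names. As an illustration, the following minimal sketch expands such a pair into the list of frame file names it spans. It is not part of the patent: the helper names `parse_name` and `frame_names` are hypothetical, and it assumes that frame indices inside a sub-sequence are consecutive integers and that every file follows the `<city>_<sequence>_<frame>_leftImg8bit.png` naming pattern seen in the list.

```python
import re
from typing import Iterator, NamedTuple

# File name pattern seen in the list above: <city>_<seq>_<frame>_leftImg8bit.png
_NAME = re.compile(r"^(?P<city>[^_]+)_(?P<seq>\d{6})_(?P<frame>\d{6})_leftImg8bit\.png$")


class FrameId(NamedTuple):
    city: str
    seq: int
    frame: int


def parse_name(name: str) -> FrameId:
    """Split a file name into city, sequence number and frame number."""
    m = _NAME.match(name)
    if m is None:
        raise ValueError(f"unexpected file name: {name!r}")
    return FrameId(m["city"], int(m["seq"]), int(m["frame"]))


def frame_names(first: str, last: str) -> Iterator[str]:
    """Yield every file name from `first` to `last`, inclusive.

    Assumption (not stated in the source): frame numbers are consecutive.
    """
    a, b = parse_name(first), parse_name(last)
    if (a.city, a.seq) != (b.city, b.seq) or a.frame > b.frame:
        raise ValueError("first and last must delimit one ascending sequence")
    for frame in range(a.frame, b.frame + 1):
        yield f"{a.city}_{a.seq:06d}_{frame:06d}_leftImg8bit.png"


# Only the four sub-sequences visible in the text; further ones are elided there.
SEQUENCES = {
    "Seq.01": ("frankfurt_000001_054140_leftImg8bit.png",
               "frankfurt_000001_056555_leftImg8bit.png"),
    "Seq.02": ("frankfurt_000001_012745_leftImg8bit.png",
               "frankfurt_000001_014100_leftImg8bit.png"),
    "Seq.03": ("frankfurt_000001_003311_leftImg8bit.png",
               "frankfurt_000001_005555_leftImg8bit.png"),
    "Seq.04": ("frankfurt_000001_010580_leftImg8bit.png",
               "frankfurt_000001_012739_leftImg8bit.png"),
}

if __name__ == "__main__":
    for label, (first, last) in SEQUENCES.items():
        count = sum(1 for _ in frame_names(first, last))
        print(f"{label}: {count} frames")
```

Under the consecutive-numbering assumption, the four ranges contain 2416, 1356, 2245 and 2160 frames respectively, which agrees with the 1300-2500 frame range stated in paragraph [0081].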
