Human movement pattern estimation model based on variational trajectory context perception, training method and estimation method

A technology for movement pattern estimation and model training, applied in computing models, character and pattern recognition, instruments, etc.



Embodiment

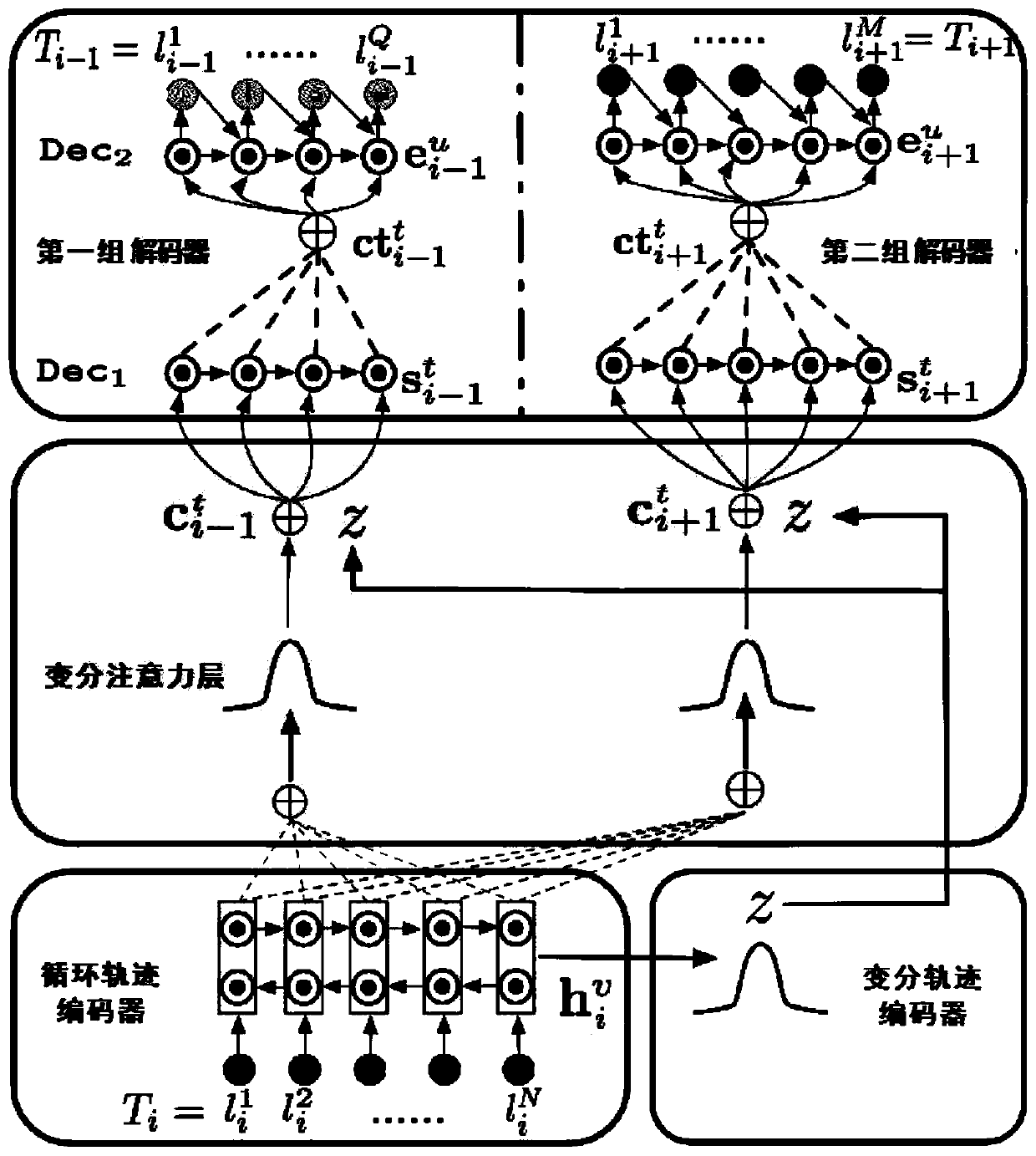

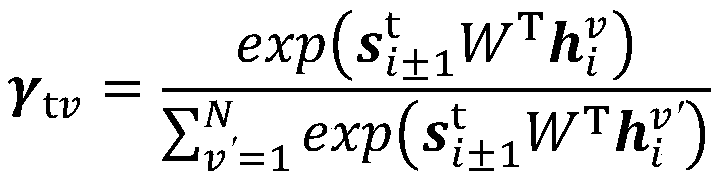

[0061] This embodiment provides a human movement pattern estimation model based on variational trajectory context perception. As shown in Figure 1, it includes a data preprocessing module, a recurrent trajectory encoder, a variational trajectory encoder, a variational attention layer, and a decoder. The data preprocessing module obtains the embedding vector of each trajectory point of the current trajectory. The recurrent trajectory encoder encodes the input embedding vectors of the current trajectory to obtain the semantic vector of the current trajectory. The variational trajectory encoder learns from the input embedding vectors of the current trajectory and obtains a variational latent variable of the current trajectory that follows a Gaussian distribution. The variational attention layer obtains the attention vector of the current trajectory from the semantic vector of the current trajectory using a variational attention mechanism, and then cascades the attention vec...
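The data flow through the first four modules can be illustrated with a minimal sketch, under several assumptions that are not stated in the text above. The toy tanh recurrence, the random weights, the use of the reparameterization trick with a KL term (as in a standard variational autoencoder), and plain dot-product attention standing in for the variational attention mechanism are all illustrative choices, and every function and variable name is hypothetical. The cascading step and the decoder are not sketched because their description is truncated.

```python
import math
import random

def embed(trajectory, table):
    """Data preprocessing: look up one embedding vector per trajectory point."""
    return [table[point] for point in trajectory]

def rnn_encode(embeddings, dim, rng):
    """Toy tanh recurrent encoder (assumed); returns one hidden state per point."""
    in_dim = len(embeddings[0])
    w_in = [[rng.uniform(-0.5, 0.5) for _ in range(in_dim)] for _ in range(dim)]
    w_h = [[rng.uniform(-0.5, 0.5) for _ in range(dim)] for _ in range(dim)]
    h = [0.0] * dim
    states = []
    for x in embeddings:
        h = [math.tanh(sum(w_in[i][j] * x[j] for j in range(in_dim))
                       + sum(w_h[i][k] * h[k] for k in range(dim)))
             for i in range(dim)]
        states.append(h)
    return states

def sample_gaussian_latent(mu, log_var, rng):
    """Reparameterization (assumed): z = mu + sigma * eps with eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, sigma^2) || N(0, I) ) for a diagonal Gaussian."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv
                     for m, lv in zip(mu, log_var))

def attention(states, query):
    """Dot-product attention (a stand-in, not the patent's variational attention)."""
    scores = [sum(s_i * q_i for s_i, q_i in zip(s, query)) for s in states]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # numerically stable softmax
    total = sum(exps)
    weights = [e / total for e in exps]
    context = [sum(w * s[i] for w, s in zip(weights, states))
               for i in range(len(states[0]))]
    return context, weights

rng = random.Random(0)
# Hypothetical embedding table: 10 point ids, 4-dimensional vectors.
table = {p: [rng.uniform(-1.0, 1.0) for _ in range(4)] for p in range(10)}
trajectory = [3, 7, 1, 4]  # hypothetical sequence of trajectory point ids

embeddings = embed(trajectory, table)
states = rnn_encode(embeddings, dim=5, rng=rng)
semantic_vector = states[-1]  # last hidden state used as the semantic vector

# Placeholders for the learned outputs of the variational trajectory encoder.
mu = [sum(col) / len(embeddings) for col in zip(*embeddings)]
log_var = [-2.0] * len(mu)
z = sample_gaussian_latent(mu, log_var, rng)
kl = kl_to_standard_normal(mu, log_var)

attention_vector, weights = attention(states, semantic_vector)
```

In a trained model the weights, `mu`, and `log_var` would come from learned parameters rather than random or fixed values; the sketch only shows how the embedding vectors feed both encoders and how the semantic vector drives the attention step.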
