Multispectral and panchromatic image fusion method based on a dense and skip-connected deep convolutional network

A technique in the field of multispectral and panchromatic image fusion that addresses the problem that high-spatial-resolution multispectral images cannot be generated accurately.

Embodiment Construction

[0074] The present invention is further described below with reference to the embodiments and the accompanying drawings:

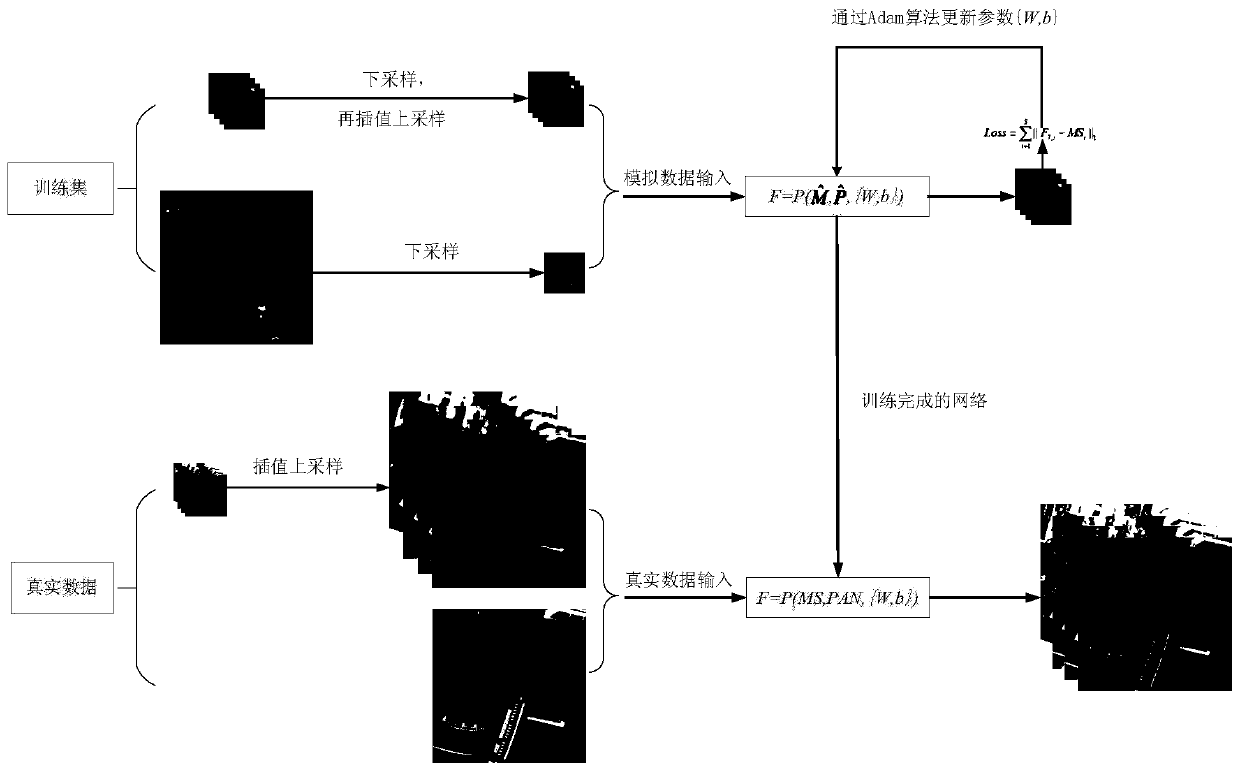

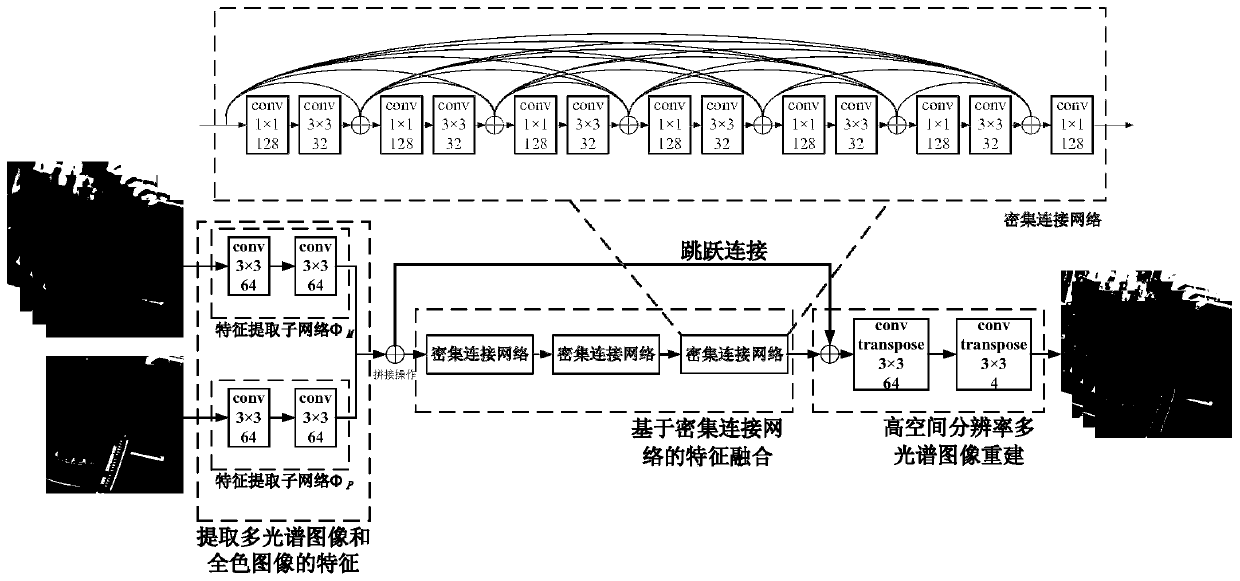

[0075] The present invention provides a multispectral and panchromatic image fusion method based on a dense and skip-connected deep convolutional network. The method has two stages: model training and image fusion. In the model training stage, the original clear multispectral and panchromatic images are first down-sampled to obtain simulated training image pairs; then features of the simulated multispectral and panchromatic images are extracted, the features are fused using a densely connected network, and a high-spatial-resolution multispectral image is reconstructed using skip connections; finally, the model parameters are adjusted using the Adam algorithm. In the image fusion stage, the features of the multispectral and panchromatic images are first extracted, the features are fused using a densely connected network, and co...
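The training-stage data flow described above can be illustrated with a minimal NumPy sketch. It is not the patented network. Everything in it is an assumption made for illustration: the function names, the resolution ratio of 4, block averaging as the down-sampling filter, 1x1 convolutions (plain channel mixing) in place of real spatial convolutions, and a skip connection modeled as a simple additive shortcut from the upsampled multispectral input to the output. Training with Adam is omitted.

```python
import numpy as np

def block_downsample(img, ratio):
    """Average non-overlapping ratio x ratio blocks of an (H, W, C) array."""
    h, w, c = img.shape
    h, w = h - h % ratio, w - w % ratio  # crop so the size divides evenly
    blocks = img[:h, :w].reshape(h // ratio, ratio, w // ratio, ratio, c)
    return blocks.mean(axis=(1, 3))

def make_training_pair(ms, pan, ratio=4):
    """Simulated inputs (down-sampled MS and PAN) plus the original MS as target."""
    return block_downsample(ms, ratio), block_downsample(pan, ratio), ms

def upsample_nearest(img, ratio):
    """Nearest-neighbour upsampling of an (H, W, C) array (assumed interpolator)."""
    return img.repeat(ratio, axis=0).repeat(ratio, axis=1)

def dense_skip_forward(ms_lr, pan, weights, ratio=4):
    """Forward pass: dense feature fusion, then an additive skip connection.

    weights[:-1] are the dense layers, weights[-1] maps all features to C bands.
    Each weight is a (C_in, C_out) matrix, i.e. a hypothetical 1x1 convolution.
    """
    ms_up = upsample_nearest(ms_lr, ratio)
    feats = [np.concatenate([ms_up, pan], axis=-1)]  # stacked MS + PAN input
    for w in weights[:-1]:
        x = np.concatenate(feats, axis=-1)      # dense: reuse all earlier outputs
        feats.append(np.maximum(x @ w, 0.0))    # 1x1 conv followed by ReLU
    residual = np.concatenate(feats, axis=-1) @ weights[-1]
    return ms_up + residual                     # skip connection to the output

def init_weights(in_ch, out_ch, growth=8, layers=3, seed=0):
    """Random weights whose input widths grow by `growth` per dense layer."""
    rng = np.random.default_rng(seed)
    ws = [rng.normal(0.0, 0.1, (in_ch + i * growth, growth)) for i in range(layers)]
    ws.append(rng.normal(0.0, 0.1, (in_ch + layers * growth, out_ch)))
    return ws

rng = np.random.default_rng(1)
ms = rng.random((16, 16, 4))    # original 4-band multispectral image
pan = rng.random((64, 64, 1))   # original panchromatic image, 4x finer grid
ms_lr, pan_lr, target = make_training_pair(ms, pan)
fused = dense_skip_forward(ms_lr, pan_lr, init_weights(in_ch=5, out_ch=4))
loss = float(np.mean((fused - target) ** 2))  # value an optimizer would minimize
```

After down-sampling, the simulated panchromatic image has the same grid as the original multispectral image, so the original multispectral image can serve as the reference when the model parameters are adjusted.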
