Resource allocation method and device based on reinforcement learning in ultra-dense network

A resource allocation method and device for ultra-dense networks, applied in the field of resource allocation based on reinforcement learning. It addresses the problem that reinforcement learning cannot cope with the dense connections of ultra-dense networks, and it improves energy efficiency while achieving load balancing.

Examples

Embodiment 1

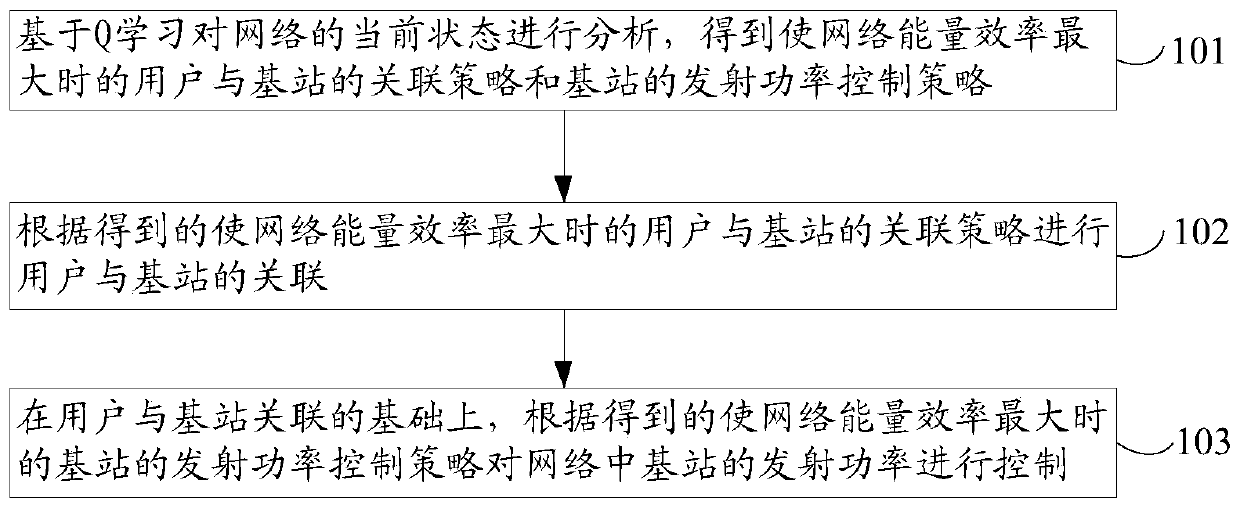

[0047] As shown in Figure 1, the resource allocation method based on reinforcement learning in an ultra-dense network provided by this embodiment of the present invention includes:

[0048] S101: analyze the current state of the network using Q-learning to obtain the user-to-base-station association strategy and the base station transmit power control strategy that maximize the energy efficiency of the network;

[0049] S102: associate each user with a base station according to the association strategy obtained for maximum network energy efficiency;

[0050] S103: with users associated to base stations, control the transmit power of the base stations in the network according to the transmit power control strategy obtained for maximum network energy efficiency.
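
Steps S101 to S103 can be illustrated with a minimal tabular Q-learning sketch. The excerpt does not give the state, action, or reward definitions, so everything below is an assumption made for illustration: a toy network of two users and two base stations, hypothetical channel gains, two selectable power levels, a state equal to the configuration currently in place, and a reward equal to sum rate divided by total consumed power. It is not the patented procedure.

```python
import itertools
import math
import random

# Hypothetical toy network: 2 users, 2 base stations (all values are assumptions).
GAIN = [[1.0, 0.2],   # GAIN[u][b]: channel gain from base station b to user u
        [0.3, 0.9]]
POWER_LEVELS = [0.5, 1.0]   # selectable transmit powers, in watts
NOISE = 0.1                 # receiver noise power
CIRCUIT_POWER = 1.0         # fixed power drawn by the network, in watts
NUM_USERS, NUM_BS = 2, 2

# One joint action = (base station chosen per user, transmit power per base station).
ACTIONS = [
    (assoc, power)
    for assoc in itertools.product(range(NUM_BS), repeat=NUM_USERS)
    for power in itertools.product(POWER_LEVELS, repeat=NUM_BS)
]


def energy_efficiency(action):
    """Assumed reward: sum rate divided by total consumed power."""
    assoc, power = action
    total_rate = 0.0
    for u, b in enumerate(assoc):
        signal = GAIN[u][b] * power[b]
        interference = sum(GAIN[u][k] * power[k] for k in range(NUM_BS) if k != b)
        load = assoc.count(b)  # users on the same base station share its bandwidth
        total_rate += math.log2(1.0 + signal / (NOISE + interference)) / load
    return total_rate / (sum(power) + CIRCUIT_POWER)


def train(steps=30000, alpha=0.5, gamma=0.5, epsilon=0.5, seed=0):
    """S101: learn Q-values with epsilon-greedy exploration."""
    rng = random.Random(seed)
    n = len(ACTIONS)
    q = [[0.0] * n for _ in range(n)]  # q[state][action]
    state = 0                          # state = index of the configuration in place
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n)                          # explore
        else:
            action = max(range(n), key=lambda a: q[state][a])  # exploit
        reward = energy_efficiency(ACTIONS[action])
        next_state = action            # the chosen configuration becomes the state
        target = reward + gamma * max(q[next_state])
        q[state][action] += alpha * (target - q[state][action])  # Q-learning update
        state = next_state
    return q


def greedy_action(q, state=0):
    """Read the learned association and power strategy out of the Q-table."""
    best = max(range(len(ACTIONS)), key=lambda a: q[state][a])
    return ACTIONS[best]


if __name__ == "__main__":
    q_table = train()
    association, powers = greedy_action(q_table)
    print("S102 association (user -> base station):", association)
    print("S103 transmit power per base station:", powers)
```

In this sketch, `association` is what S102 would apply and `powers` is what S103 would then set on the base stations. Dividing each user's rate by the load of its base station is one simple way to make crowded base stations less attractive; it is a modeling choice, not something stated in the source.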

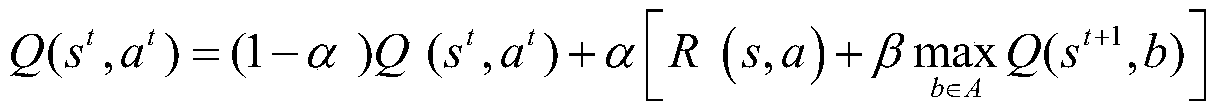

[0051] The resource allocation method based on reinforcemen...

Embodiment 2

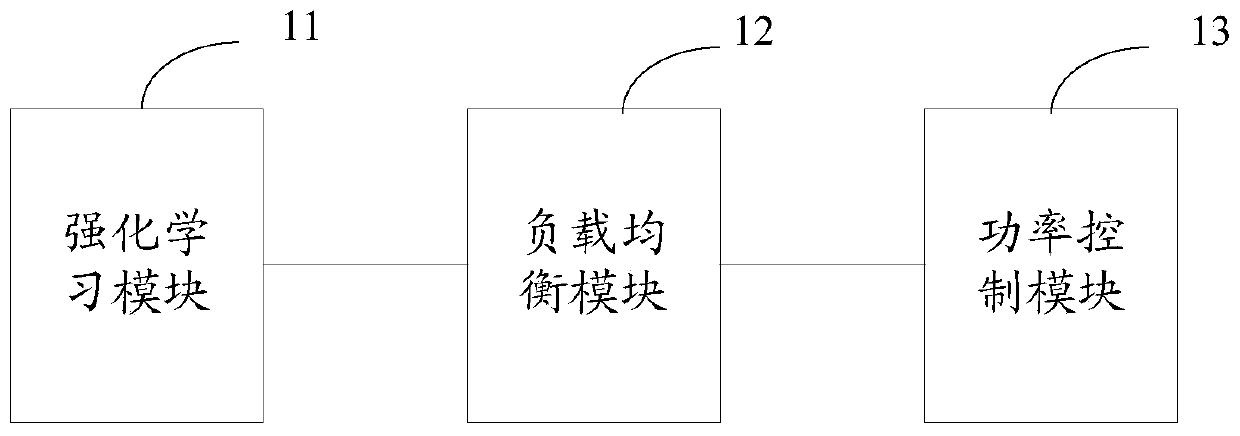

[0079] The present invention also provides a specific embodiment of a resource allocation device based on reinforcement learning in an ultra-dense network. This device corresponds to the specific implementation of the resource allocation method described above, and it achieves the purpose of the present invention by executing the process steps of that method. The explanations given for the specific implementation of the method therefore also apply to the specific implementation of the resource allocation device based on reinforcement learning in the ultra...
