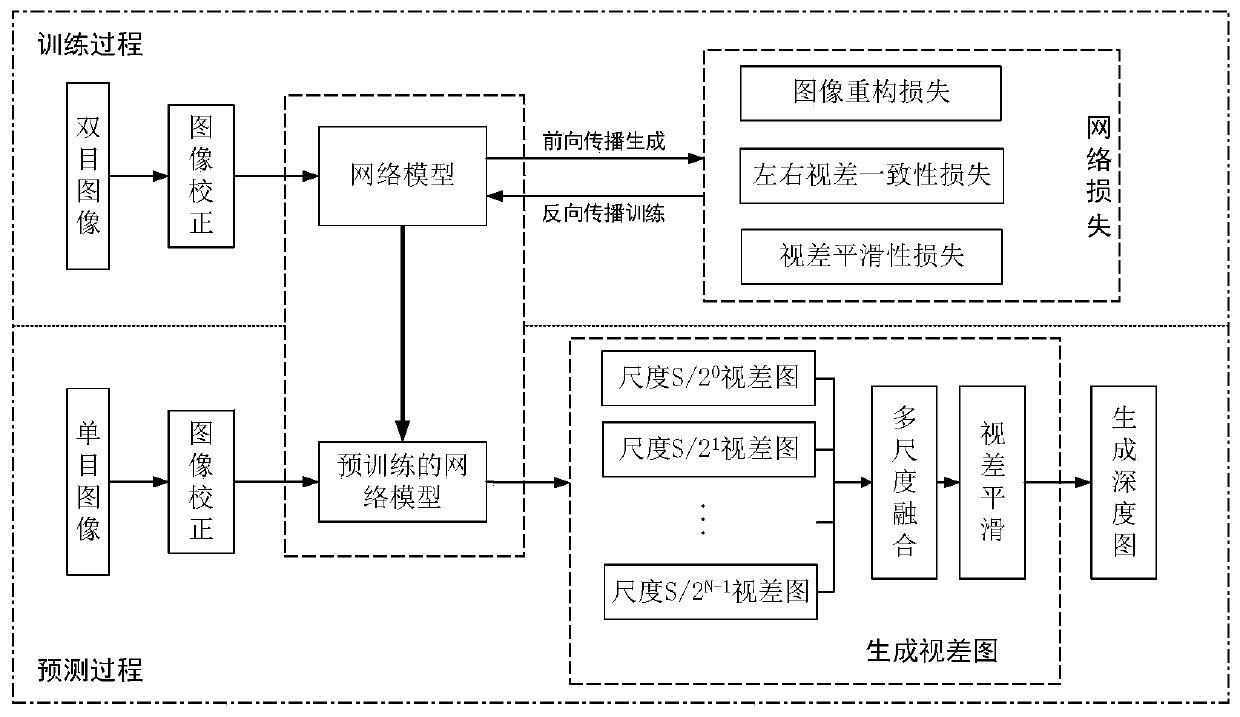

Monocular vision scene depth estimation method based on deep learning

A monocular vision scene depth estimation technology, applied in computing, image data processing, instruments, and related fields. It addresses slow inference, expensive depth acquisition methods, and low result accuracy, providing strong constraints, advancing computer vision technology, and improving inference speed.

Embodiment Construction

[0035] The present invention is further described below with reference to an embodiment and the accompanying drawings:

[0036] The hardware environment of this experiment is: CPU: Intel Xeon series; memory: 8 GB; hard disk: 500 GB mechanical hard disk; discrete graphics card: NVIDIA GeForce GTX 1080 Ti, 11 GB. The system environment is Ubuntu 16.04; the software environment is Python 3.6, OpenCV 4.0, and TensorFlow.

[0037] Two sets of experiments were conducted on depth estimation from monocular images. The first uses the public KITTI dataset to verify the accuracy and effectiveness of the proposed method; the second uses monocular image data collected in practice to verify the practicality of the method.
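The excerpt does not say which accuracy measures were reported on KITTI. As a hedged illustration only, the sketch below computes the error and threshold-accuracy measures commonly used for monocular depth evaluation on KITTI (Abs Rel, Sq Rel, RMSE, RMSE log, and δ < 1.25ⁿ). The function name `depth_metrics`, the 80 m depth cap, and the validity mask are assumptions, not details taken from this document.

```python
import numpy as np


def depth_metrics(pred, gt, min_depth=1e-3, max_depth=80.0):
    """Common monocular-depth error measures over pixels with valid ground truth.

    pred, gt: arrays of depths in metres with the same shape. Pixels whose ground
    truth lies outside (min_depth, max_depth) are ignored, since KITTI LiDAR
    ground truth is sparse. The 80 m cap is an assumed, commonly used setting.
    """
    valid = (gt > min_depth) & (gt < max_depth)
    g = gt[valid]
    p = np.clip(pred[valid], min_depth, max_depth)
    ratio = np.maximum(g / p, p / g)  # symmetric ratio used by the delta thresholds
    return {
        "abs_rel": float(np.mean(np.abs(g - p) / g)),
        "sq_rel": float(np.mean((g - p) ** 2 / g)),
        "rmse": float(np.sqrt(np.mean((g - p) ** 2))),
        "rmse_log": float(np.sqrt(np.mean((np.log(g) - np.log(p)) ** 2))),
        "delta1": float(np.mean(ratio < 1.25)),
        "delta2": float(np.mean(ratio < 1.25 ** 2)),
        "delta3": float(np.mean(ratio < 1.25 ** 3)),
    }


if __name__ == "__main__":
    gt = np.array([[1.0, 2.0], [4.0, 0.0]])  # 0.0 marks a pixel with no LiDAR return
    pred = np.array([[1.1, 1.8], [4.0, 3.0]])
    print(depth_metrics(pred, gt))
```

Lower is better for the four error terms; higher is better for the three δ accuracies.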

[0038] The present invention is specifically implemented as follows:

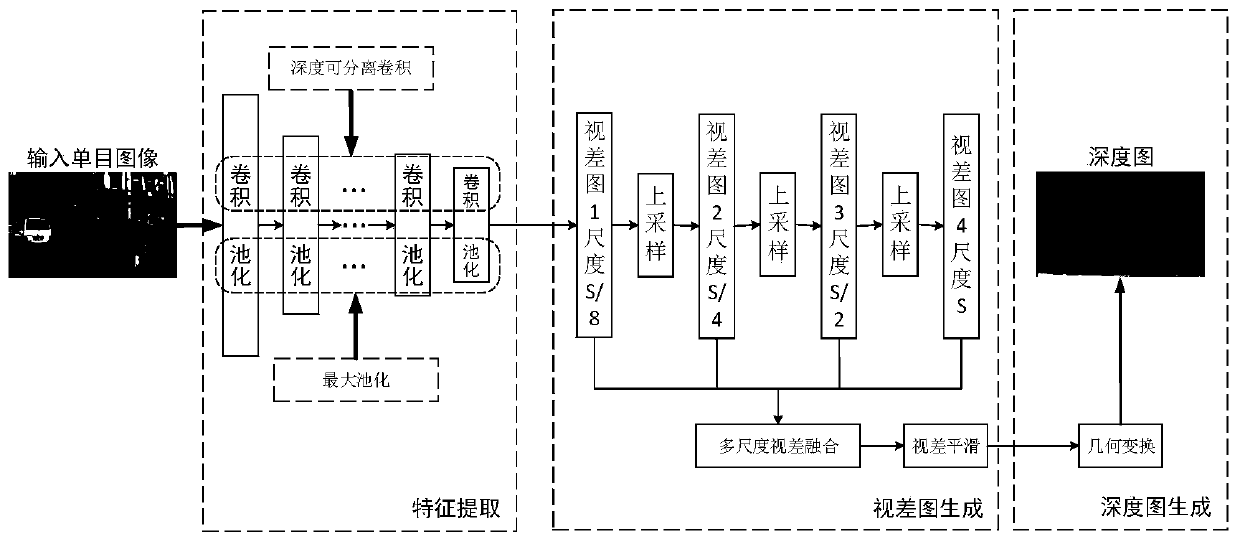

[0039] Step 1, construction and training of the network model: starting from the standard VGG-13 network model, depthwise separable convolution is used to replace the standard convolution in ea...
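Depthwise separable convolution factorizes a k×k convolution into a per-channel (depthwise) k×k filter followed by a 1×1 (pointwise) convolution that mixes channels. The minimal NumPy sketch below assumes stride 1, "same" zero padding, and no bias. It implements both halves, checks that the result equals a standard convolution whose kernel is the product of the two factors, and counts weights. The helper names and the VGG-13 channel list used for the count (all ten 3×3 layers of the standard configuration) are illustrative assumptions; the excerpt is truncated and does not state exactly which layers are replaced.

```python
import numpy as np

# (input_channels, output_channels) of the ten 3x3 conv layers in standard VGG-13.
# Illustrative assumption: every one of them is replaced.
VGG13_CONVS = [(3, 64), (64, 64), (64, 128), (128, 128), (128, 256),
               (256, 256), (256, 512), (512, 512), (512, 512), (512, 512)]


def _pad_same(x, k):
    """Zero-pad height and width so an odd k x k filter keeps the spatial size."""
    p = k // 2
    return np.pad(x, ((p, p), (p, p), (0, 0)))


def depthwise_conv(x, dw):
    """x: (H, W, C), dw: (k, k, C). Each channel is filtered by its own k x k kernel."""
    k = dw.shape[0]
    h, w, _ = x.shape
    xp = _pad_same(x, k)
    out = np.zeros_like(x, dtype=float)
    for i in range(k):
        for j in range(k):
            out += xp[i:i + h, j:j + w, :] * dw[i, j, :]
    return out


def pointwise_conv(x, pw):
    """x: (H, W, C), pw: (C, D). A 1 x 1 convolution is a per-pixel matrix product."""
    return x @ pw


def separable_conv(x, dw, pw):
    """Depthwise k x k convolution followed by a pointwise 1 x 1 convolution."""
    return pointwise_conv(depthwise_conv(x, dw), pw)


def standard_conv(x, kern):
    """x: (H, W, C), kern: (k, k, C, D). Ordinary k x k convolution (cross-correlation)."""
    k = kern.shape[0]
    h, w, _ = x.shape
    xp = _pad_same(x, k)
    out = np.zeros((h, w, kern.shape[3]))
    for i in range(k):
        for j in range(k):
            out += xp[i:i + h, j:j + w, :] @ kern[i, j]
    return out


def standard_params(c_in, c_out, k=3):
    """Weights (no bias) of a standard k x k convolution."""
    return k * k * c_in * c_out


def separable_params(c_in, c_out, k=3):
    """Weights (no bias) of depthwise k x k plus pointwise 1 x 1 convolutions."""
    return k * k * c_in + c_in * c_out


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.standard_normal((8, 8, 4))
    dw = rng.standard_normal((3, 3, 4))
    pw = rng.standard_normal((4, 6))
    # A standard kernel built as the product of the two factors gives the same output.
    kern = dw[:, :, :, None] * pw[None, None, :, :]
    print(np.allclose(separable_conv(x, dw, pw), standard_conv(x, kern)))

    std = sum(standard_params(ci, co) for ci, co in VGG13_CONVS)
    sep = sum(separable_params(ci, co) for ci, co in VGG13_CONVS)
    print(std, sep, round(std / sep, 2))
```

Under these assumptions the ten-layer stack drops from 9,402,048 to 1,066,587 convolution weights, which is where the inference-speed benefit of the substitution comes from. In the TensorFlow environment listed above the same factorization is available as the `tf.keras.layers.SeparableConv2D` layer; whether the authors used that layer is not stated.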
