Positioning and navigation method and device based on lidar and binocular camera

A lidar and binocular camera technology, applied in the fields of surveying, navigation, and measuring devices, that achieves fast matching and high precision.

Embodiment Construction

[0024] The present invention is further described below with reference to the accompanying drawings:

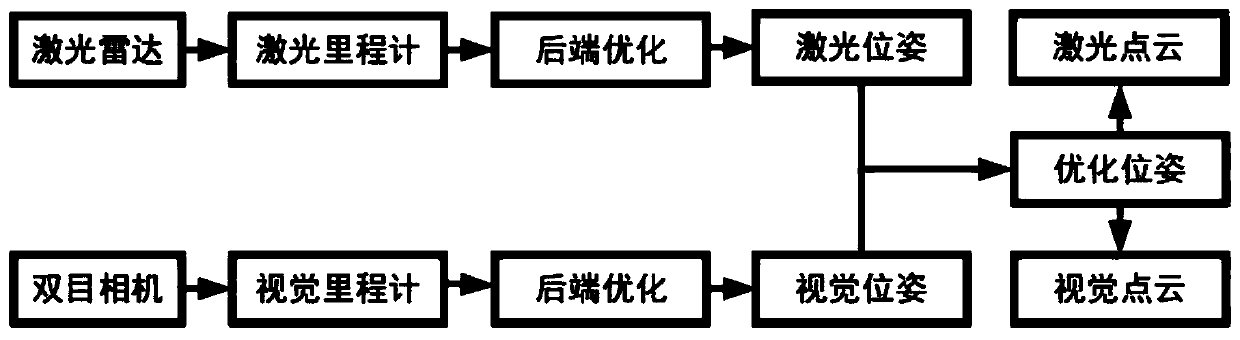

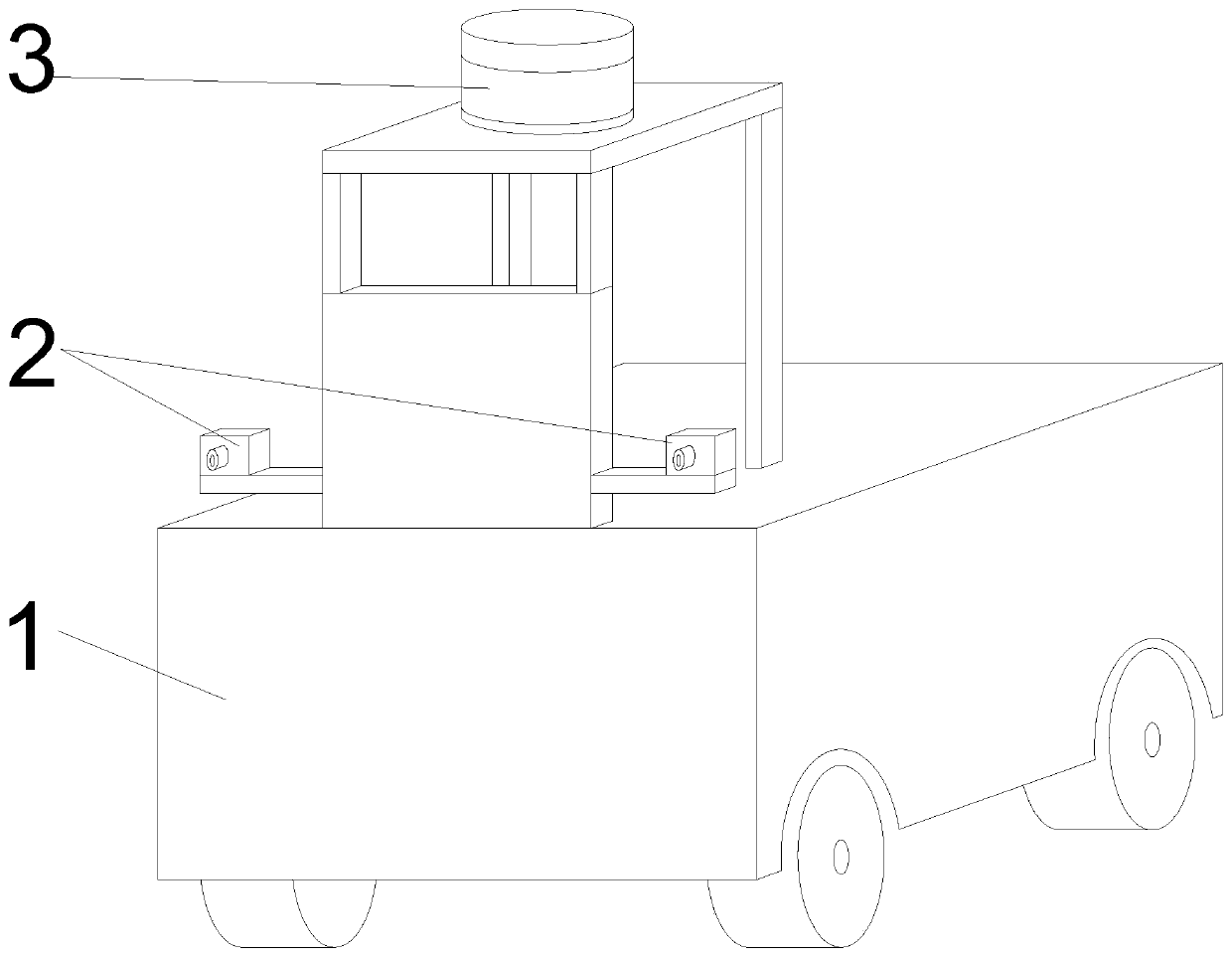

[0025] As shown in Figure 1, the positioning and navigation method based on lidar 3 and binocular camera 2 includes the following steps:

[0026] Acquire camera images with the binocular stereo camera, and process the image information from the binocular stereo camera to obtain a pose;

[0027] Acquire radar images with lidar 3, and process the image information from lidar 3 to obtain a pose;

[0028] Optimize and fuse the pose from the binocular stereo camera images with the pose from lidar 3, and obtain a dense point cloud model and a sparse point cloud model of the environment, used for navigation and precise positioning respectively.
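The fusion of the camera pose and the lidar pose in step [0028] can be illustrated with a minimal sketch. The text here does not state which optimization is used, so the example assumes a simple inverse-variance weighting of two planar poses (x, y, yaw). The function name `fuse_poses` and the per-component variance inputs are hypothetical and are not taken from the patent.

```python
import math


def fuse_poses(pose_cam, var_cam, pose_lidar, var_lidar):
    """Fuse two (x, y, yaw) pose estimates by inverse-variance weighting.

    Assumption: each pose comes with per-component variances; the patent
    text does not specify this, it is only an illustrative choice.
    """
    fused = []
    # x and y: ordinary weighted mean, weight = 1 / variance
    for i in range(2):
        w_c = 1.0 / var_cam[i]
        w_l = 1.0 / var_lidar[i]
        fused.append((w_c * pose_cam[i] + w_l * pose_lidar[i]) / (w_c + w_l))
    # yaw: weighted circular mean, so angles near +/-pi do not average to 0
    w_c = 1.0 / var_cam[2]
    w_l = 1.0 / var_lidar[2]
    s = w_c * math.sin(pose_cam[2]) + w_l * math.sin(pose_lidar[2])
    c = w_c * math.cos(pose_cam[2]) + w_l * math.cos(pose_lidar[2])
    fused.append(math.atan2(s, c))
    return tuple(fused)


# Example: the lidar position is trusted four times more than the camera position
fused = fuse_poses((1.0, 2.0, 0.1), (0.04, 0.04, 0.01),
                   (1.2, 2.2, 0.3), (0.01, 0.01, 0.01))
```

With these inputs the fused position lies closer to the lidar estimate, because the lidar has the smaller variance. A full system would more likely fuse 6-DoF poses inside a graph or filter-based optimizer; the weighting above only shows the basic idea.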

[0029] The image pose acquisition steps of the binocular stereo camera include:

[0030] 1. Extract feature points from the collected images obtained by the left and right cameras, and complete the matching of the feature points of the l...
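The left-right feature matching named in step 1 can be sketched as follows. The paragraph above is truncated, so the descriptor type and matching rule are not known from this text. The example assumes binary descriptors stored as integers, Hamming distance, and a mutual-nearest-neighbour check; the names `hamming`, `match_features`, and `max_dist` are hypothetical.

```python
def hamming(a, b):
    """Number of differing bits between two integer-encoded binary descriptors."""
    return bin(a ^ b).count("1")


def match_features(left_desc, right_desc, max_dist=2):
    """Return (left_index, right_index) pairs that are mutual nearest neighbours.

    Assumption: descriptors are binary strings packed into ints, and a match
    is kept only if its Hamming distance is at most max_dist.
    """
    if not left_desc or not right_desc:
        return []

    def nearest(query, candidates):
        # index of the candidate with the smallest Hamming distance to query
        return min(range(len(candidates)),
                   key=lambda k: hamming(query, candidates[k]))

    matches = []
    for i, d in enumerate(left_desc):
        j = nearest(d, right_desc)
        # cross-check: the right descriptor must also pick this left descriptor
        if nearest(right_desc[j], left_desc) == i and hamming(d, right_desc[j]) <= max_dist:
            matches.append((i, j))
    return matches


left = [0b11110000, 0b00001111, 0b10101010]
right = [0b00001110, 0b11110001, 0b01010101]
pairs = match_features(left, right)
```

Here the third left descriptor has no mutual partner and is dropped. The cross-check trades a few lost matches for fewer false ones, which matters because wrong correspondences feed directly into the pose estimate.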
