Urban sound event classification method based on dual-feature 2-DenseNet in parallel

A classification method with a parallel network structure, applied in speech analysis, computer components, instruments, and related fields, that achieves high classification accuracy and strong generalization ability

Embodiment Construction

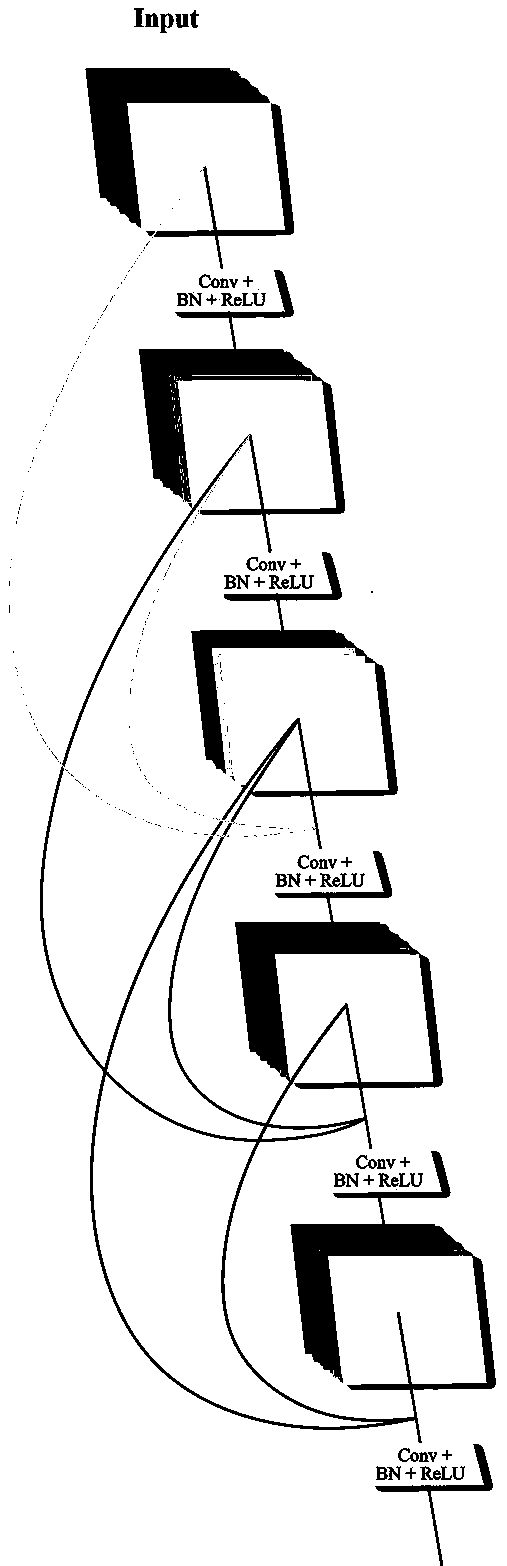

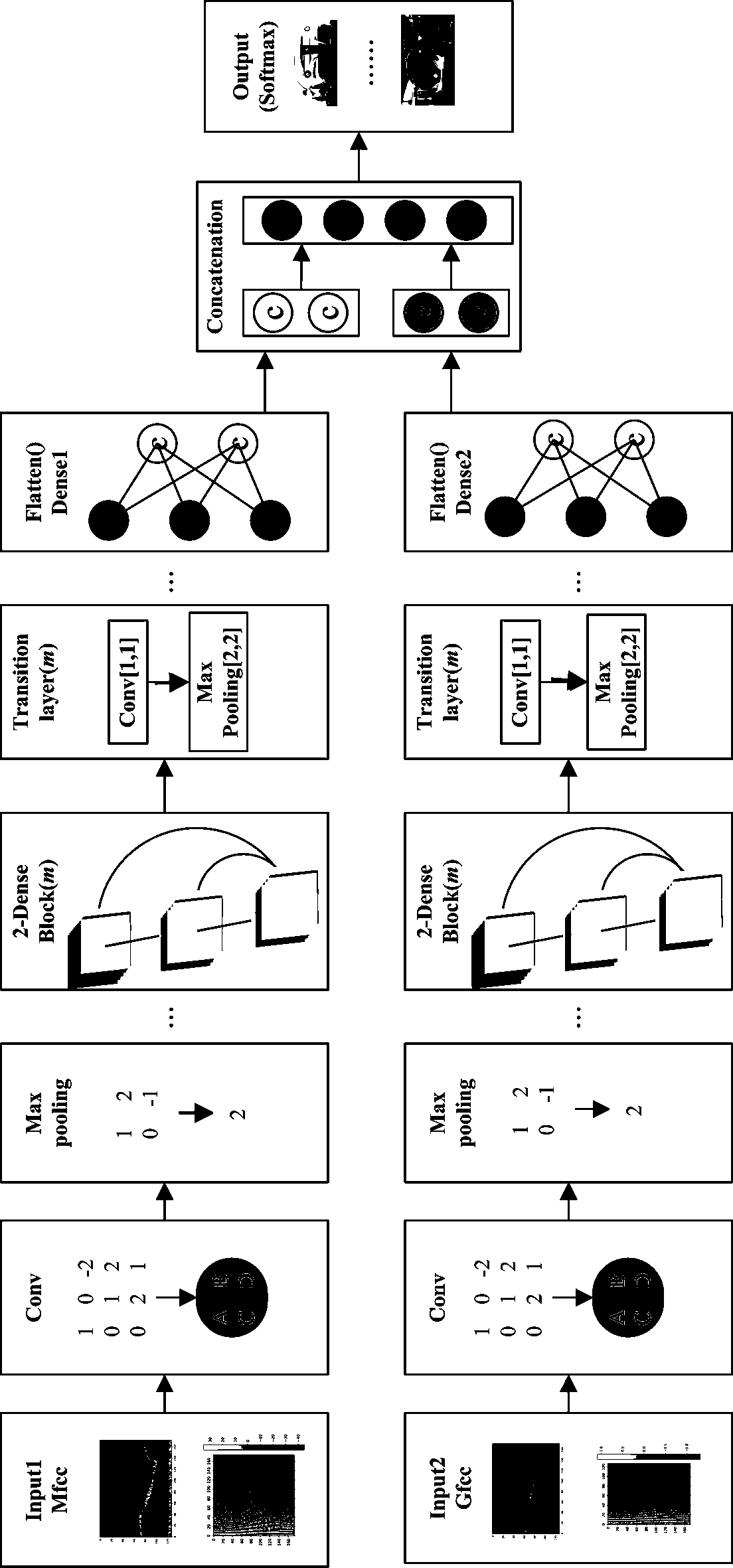

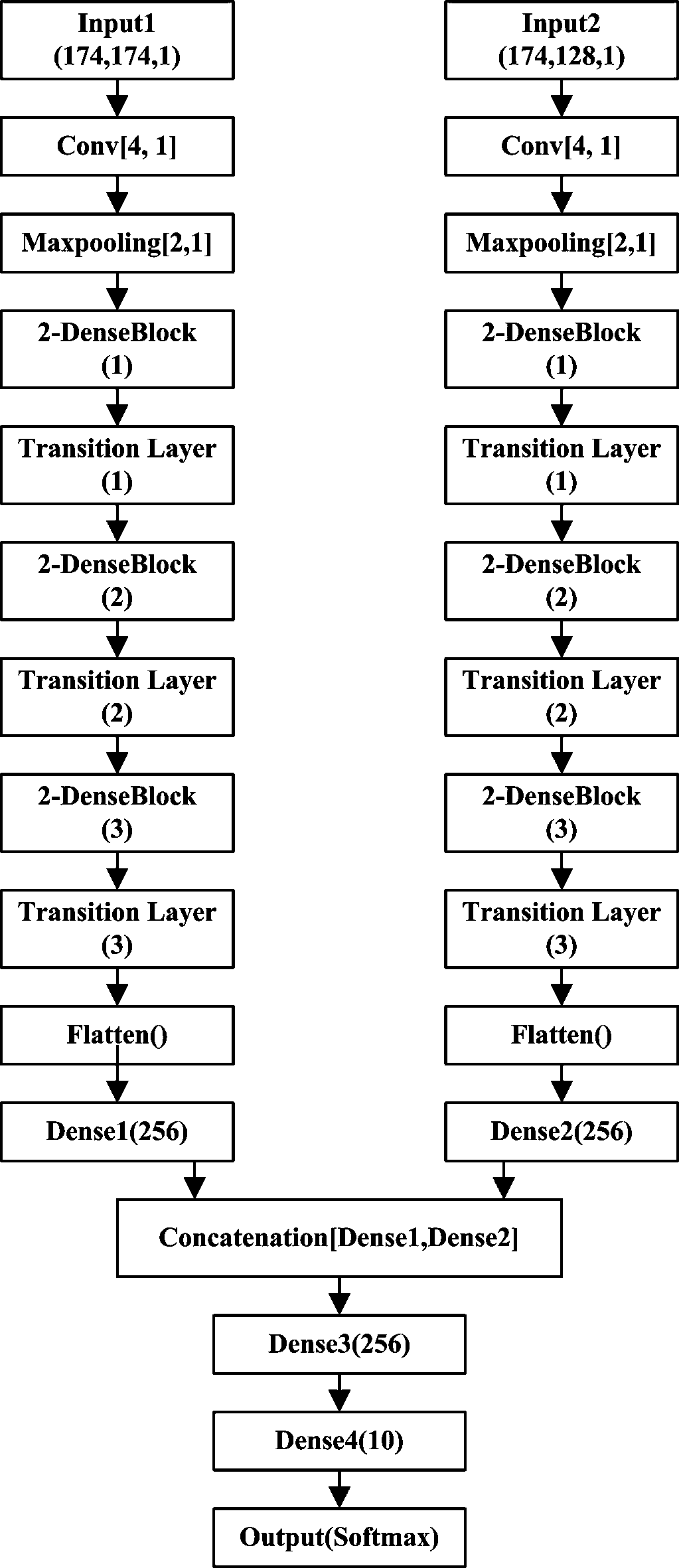

[0049] As shown in Figures 1 to 4, the urban sound event classification method of the present invention, based on parallel dual-feature 2-DenseNet, comprises the following steps:

[0050] S1: Collect the audio data to be processed, preprocess it, and output an audio frame sequence. The preprocessing operations include sampling and quantization, pre-emphasis, and windowing;
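The preprocessing in S1 can be sketched as follows. This is a minimal illustration, not the patent's implementation: it assumes the signal has already been sampled and quantized into a NumPy array, and the function name `preprocess`, the pre-emphasis coefficient 0.97, the 25 ms frame length, the 10 ms hop, and the Hamming window are all assumptions chosen because they are common defaults, not values stated in the source.

```python
import numpy as np

def preprocess(signal, sample_rate, frame_ms=25.0, hop_ms=10.0, alpha=0.97):
    """Pre-emphasize, frame, and window a 1-D audio signal (hypothetical helper)."""
    x = np.asarray(signal, dtype=np.float64)
    # Pre-emphasis: y[n] = x[n] - alpha * x[n-1]; alpha = 0.97 is an assumed value.
    emphasized = np.append(x[0], x[1:] - alpha * x[:-1])
    frame_len = int(round(sample_rate * frame_ms / 1000.0))
    hop_len = int(round(sample_rate * hop_ms / 1000.0))
    # Zero-pad the tail so that the last partial frame is kept.
    n_frames = 1 + max(0, int(np.ceil((len(emphasized) - frame_len) / hop_len)))
    padded_len = (n_frames - 1) * hop_len + frame_len
    padded = np.pad(emphasized, (0, padded_len - len(emphasized)))
    # Index matrix of shape (n_frames, frame_len) selects overlapping frames.
    idx = np.arange(frame_len)[None, :] + hop_len * np.arange(n_frames)[:, None]
    frames = padded[idx]
    # Windowing: a Hamming window is assumed; the source does not name the window.
    return frames * np.hamming(frame_len)

# Usage: one second of audio at 16 kHz yields a (frames, samples-per-frame) array.
frames = preprocess(np.ones(16000), 16000)
print(frames.shape)
```

The output is the audio frame sequence as a 2-D array with one windowed frame per row, which is the form the feature extraction in the next step consumes.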

[0051] S2: Perform time-domain and frequency-domain analysis on the audio frame sequence, extract the Mel-frequency cepstral coefficients (Mfcc) and the Gammatone frequency cepstral coefficients (Gfcc) respectively, and output a dual-feature vector sequence. Each dual-feature vector is a 2-dimensional array: the first dimension is the number of frames obtained after sampling the audio data, and the second dimension is the feature dimension, that is, the dimension of the Mel-frequency cepstral coefficients and of the Gammatone frequency cepstr...
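The shape of the S2 output can be illustrated with a NumPy-only sketch. It is an approximation under stated assumptions, not the patent's procedure: every function name is hypothetical, the filter count (40), FFT size (512), and coefficient count (13) are assumed defaults, and the Gammatone filters are approximated in the frequency domain by a 4th-order magnitude response on ERB-spaced center frequencies rather than by time-domain filtering. Log compression is used for both features for simplicity. Because the source text is truncated, how the two features are combined is not shown; the sketch returns them separately.

```python
import numpy as np

def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filterbank(n_filters, n_fft, sr):
    """Triangular filters evenly spaced on the mel scale."""
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / sr)
    edges = mel_to_hz(np.linspace(0.0, hz_to_mel(sr / 2.0), n_filters + 2))
    bank = np.zeros((n_filters, freqs.size))
    for i in range(n_filters):
        lo, mid, hi = edges[i], edges[i + 1], edges[i + 2]
        rising = (freqs - lo) / (mid - lo)
        falling = (hi - freqs) / (hi - mid)
        bank[i] = np.clip(np.minimum(rising, falling), 0.0, None)
    return bank

def gammatone_filterbank(n_filters, n_fft, sr, f_min=50.0, order=4):
    """Approximate gammatone power responses on ERB-spaced center frequencies."""
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / sr)
    # ERB-rate scale and its inverse (Glasberg and Moore formulation).
    erb_rate = lambda f: 21.4 * np.log10(1.0 + 0.00437 * f)
    inv_erb_rate = lambda e: (10.0 ** (e / 21.4) - 1.0) / 0.00437
    centers = inv_erb_rate(np.linspace(erb_rate(f_min), erb_rate(sr / 2.0), n_filters))
    bandwidths = 1.019 * 24.7 * (0.00437 * centers + 1.0)
    detune = (freqs[None, :] - centers[:, None]) / bandwidths[:, None]
    # Squared magnitude of the approximate response, applied to power spectra.
    return (1.0 + detune ** 2) ** (-order)

def cepstra(frames, bank, n_fft, n_ceps):
    """Log filterbank energies followed by an unnormalized DCT-II, one row per frame."""
    power = np.abs(np.fft.rfft(frames, n=n_fft, axis=1)) ** 2 / n_fft
    energies = np.log(power @ bank.T + 1e-10)
    n_bands = bank.shape[0]
    k = np.arange(n_ceps)[:, None]
    n = np.arange(n_bands)[None, :]
    dct = np.cos(np.pi * k * (2 * n + 1) / (2.0 * n_bands))
    return energies @ dct.T

def dual_features(frames, sr, n_fft=512, n_filters=40, n_ceps=13):
    mfcc = cepstra(frames, mel_filterbank(n_filters, n_fft, sr), n_fft, n_ceps)
    gfcc = cepstra(frames, gammatone_filterbank(n_filters, n_fft, sr), n_fft, n_ceps)
    return mfcc, gfcc  # each has shape (number of frames, n_ceps)

# Usage with stand-in frames (random data, 99 frames of 400 samples at 16 kHz).
demo_frames = np.random.default_rng(0).standard_normal((99, 400))
mfcc, gfcc = dual_features(demo_frames, 16000)
print(mfcc.shape, gfcc.shape)
```

Each returned array follows the layout the paragraph describes: the first axis indexes frames and the second axis indexes the cepstral coefficients.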

