67 results about "Sound classification" patented technology

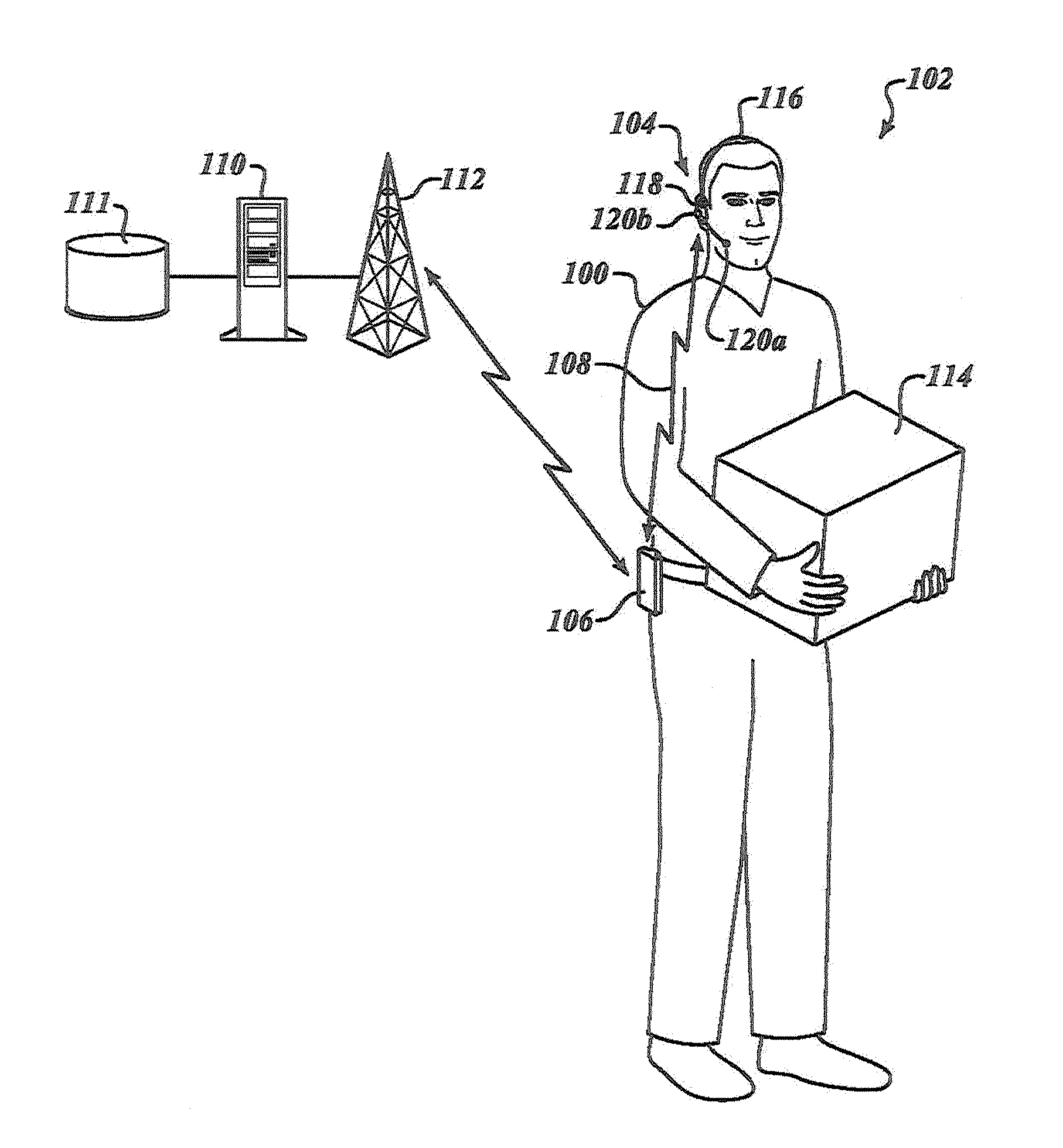

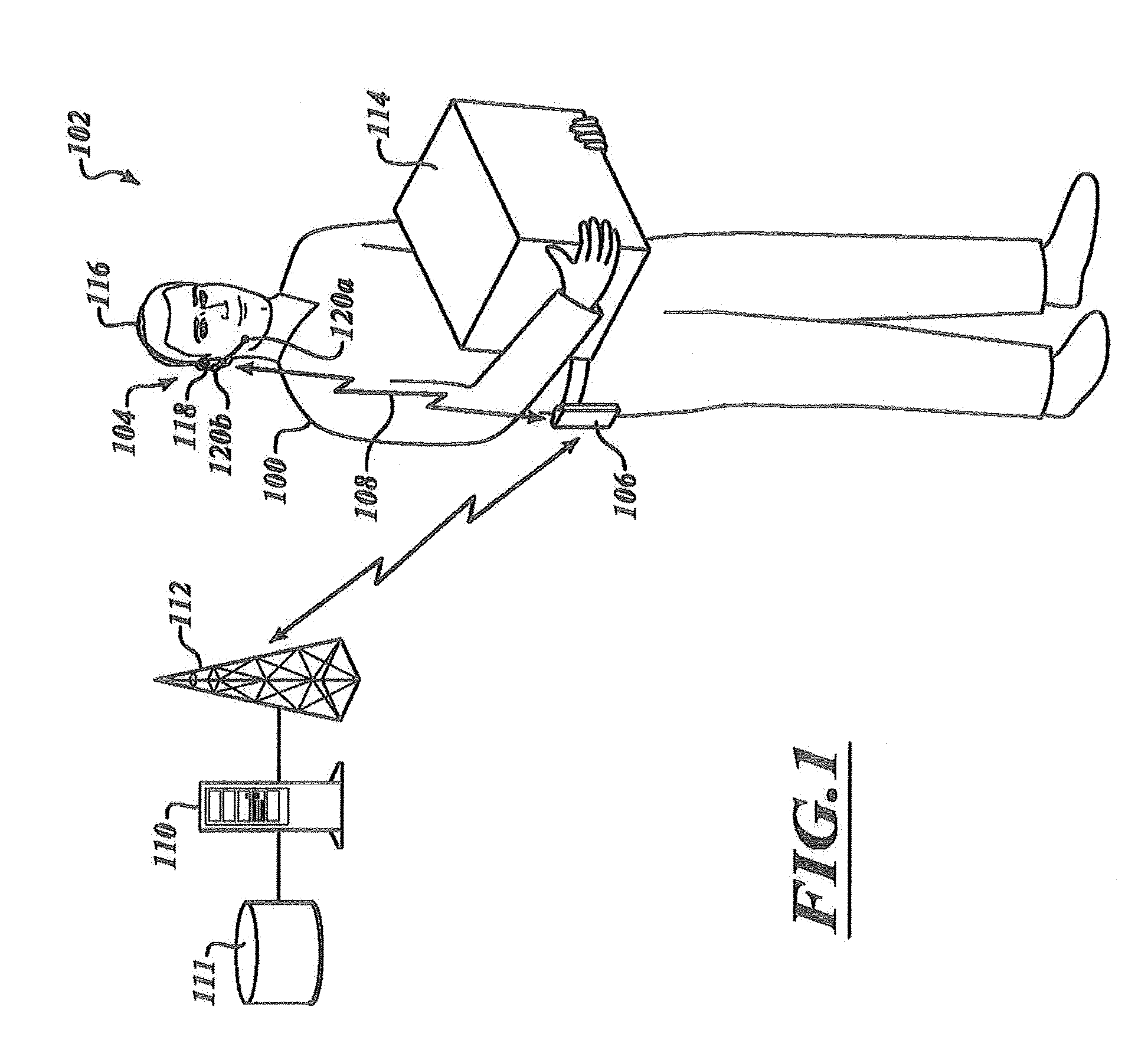

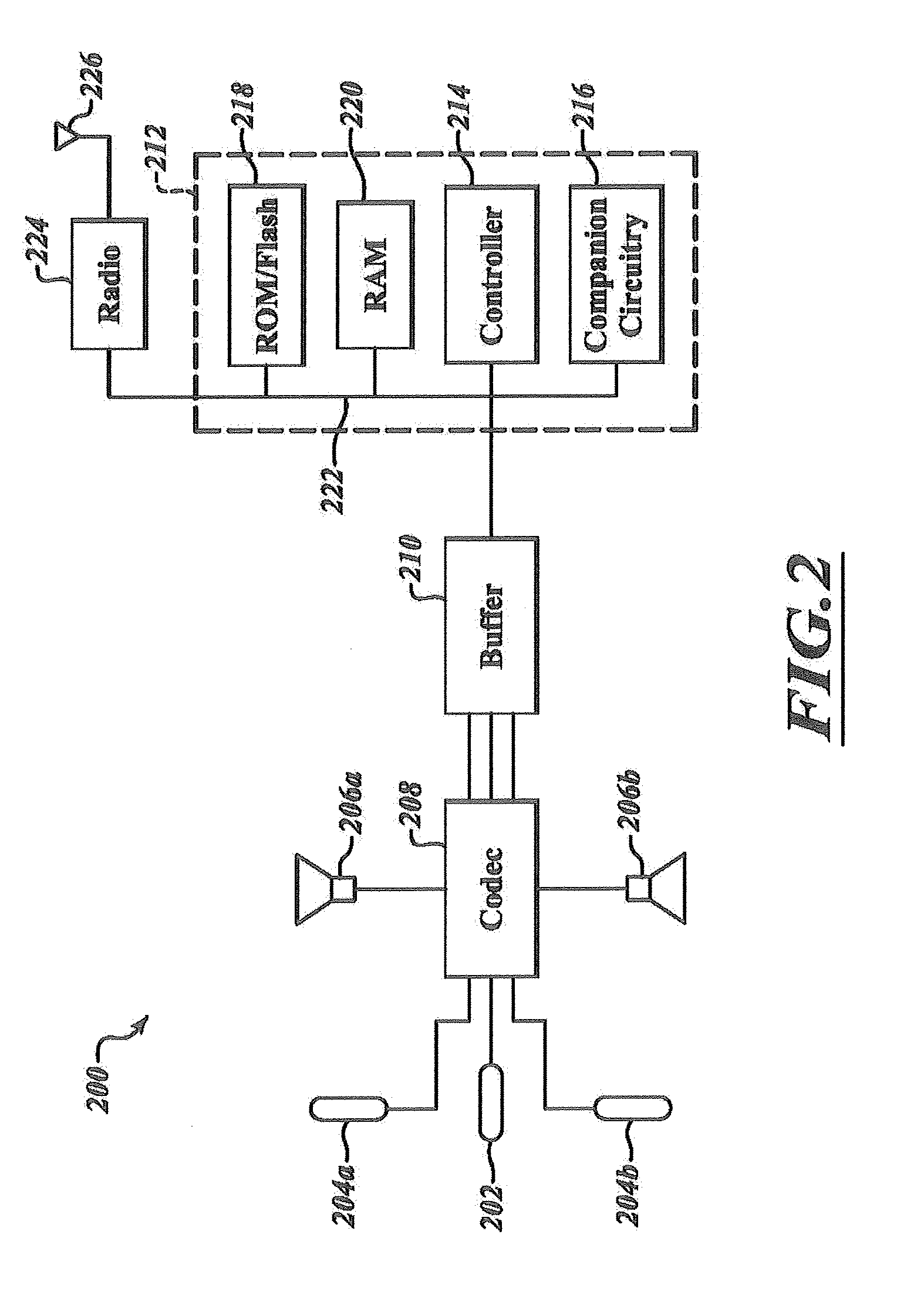

Apparatus and method to classify sound to detect speech

Audio frames are classified as either speech, non-transient background noise, or transient noise events. Probabilities of speech or transient noise event, or other metrics may be calculated to indicate confidence in classification. Frames classified as speech or noise events are not used in updating models (e.g., spectral subtraction noise estimates, silence model, background energy estimates, signal-to-noise ratio) of non-transient background noise. Frame classification affects acceptance / rejection of recognition hypothesis. Classifications and other audio related information may be determined by circuitry in a headset, and sent (e.g., wirelessly) to a separate processor-based recognition device.

Owner:INTERMEC IP
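The update rule described in the abstract above (frames classified as speech or transient noise are kept out of the background-noise models) can be sketched roughly as follows. This is only an illustration under stated assumptions: the label strings, the `BackgroundEnergyModel` class, the `update_from_frames` helper and the exponential smoothing factor `alpha` are hypothetical and do not come from the patent.

```python
from dataclasses import dataclass


@dataclass
class BackgroundEnergyModel:
    """Running estimate of non-transient background energy (hypothetical helper)."""
    energy: float = 0.0
    alpha: float = 0.5  # assumed smoothing factor, not taken from the source

    def update(self, frame_energy: float) -> None:
        # Exponential moving average of the background energy.
        self.energy = self.alpha * self.energy + (1.0 - self.alpha) * frame_energy


def update_from_frames(frames, model):
    """Feed (label, energy) pairs to the model, skipping speech and transient frames."""
    for label, energy in frames:
        if label == "background":  # only non-transient background frames update the model
            model.update(energy)
    return model.energy
```

With `alpha = 0.5`, a loud speech frame between two quiet background frames leaves the estimate untouched, which is the behaviour the abstract describes.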

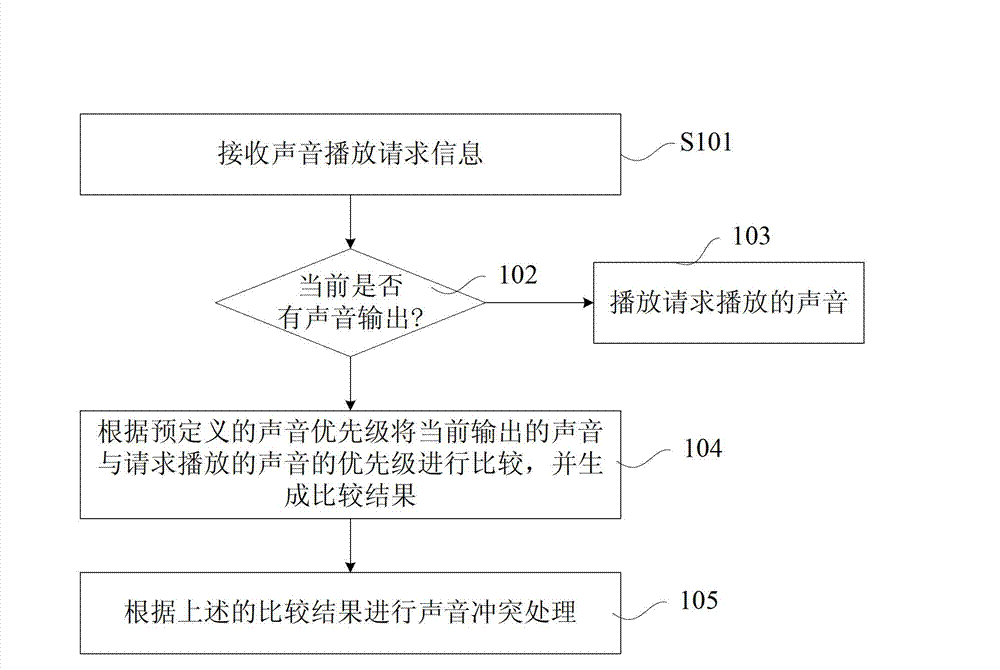

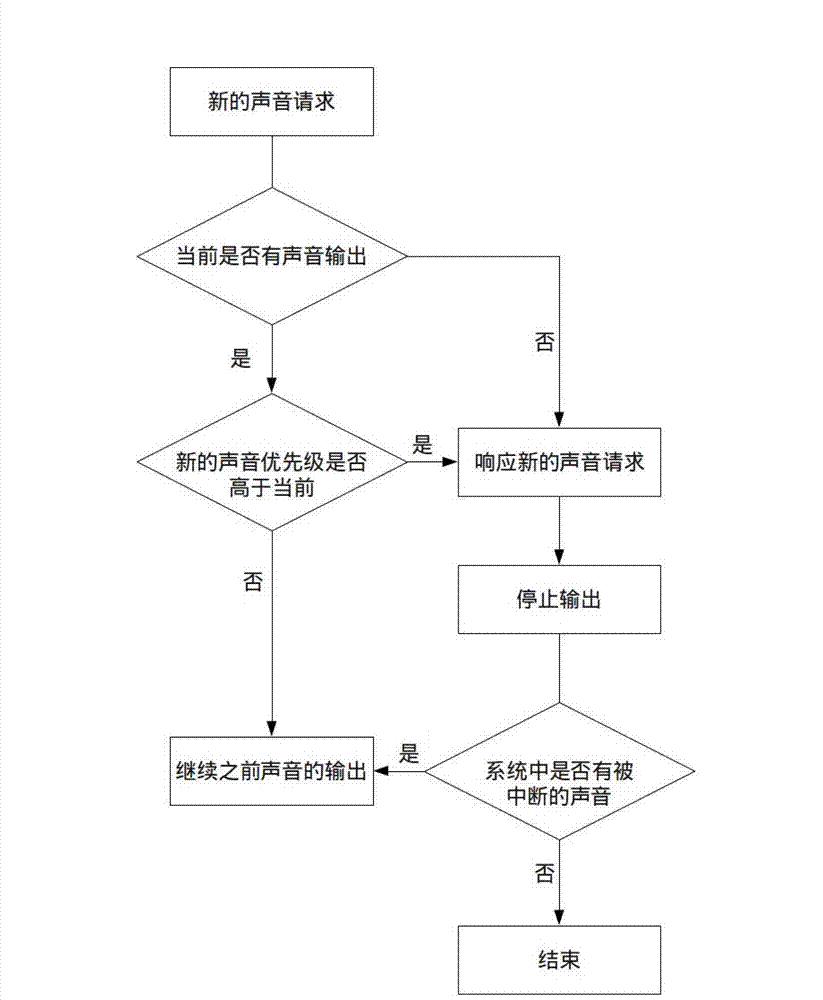

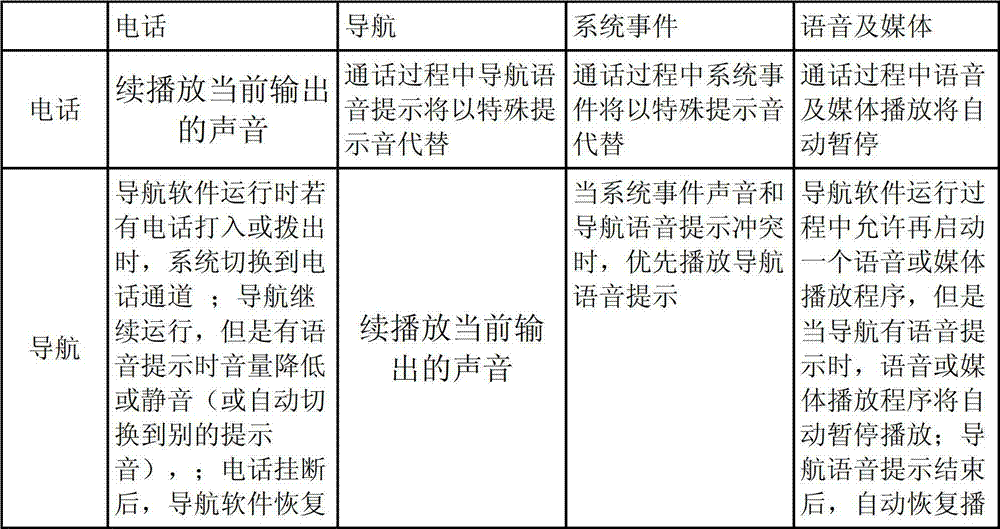

Sound conflict processing method based on vehicle-mounted information entertainment system

Inactive | CN102930889A | Achieve delete | Delete dynamically | Record information storage | Recording signal processing | Sound classification | Usability

The invention provides a sound conflict processing method based on a vehicle-mounted information entertainment system. The method receives a sound playing request and determines whether any sound is currently being output; if so, the priority of the currently output sound is compared with that of the requested sound according to the predefined sound priorities, and a comparison result is generated; sound conflict processing is then performed according to the comparison result. Sound classification is used for playback control: sounds in the vehicle-mounted information entertainment system are classified, a low-priority sound is interrupted by a high-priority sound, and the system automatically resumes the interrupted sound after the high-priority sound has been output, which improves the usability of the system.

Owner:北京世纪联成科技有限公司
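The priority comparison and automatic resume described in the abstract above might look like the following minimal sketch. The `SoundArbiter` class, its method names and the sound classes in the usage note are hypothetical. The abstract does not say what happens to a request of equal or lower priority, so rejecting it here is an assumption.

```python
class SoundArbiter:
    """Arbitrates playback requests by predefined priority (hypothetical sketch)."""

    def __init__(self, priorities):
        self.priorities = priorities  # sound class -> priority, higher wins
        self.current = None           # sound currently being output
        self.interrupted = []         # stack of interrupted sounds to resume later

    def request(self, sound):
        """Handle a playback request and return the action taken."""
        if self.current is None:
            self.current = sound
            return "play"
        if self.priorities[sound] > self.priorities[self.current]:
            # Higher priority: interrupt the current sound and remember it.
            self.interrupted.append(self.current)
            self.current = sound
            return "interrupt"
        # Assumption: equal or lower priority requests are rejected.
        return "reject"

    def finished(self):
        """Call when the current sound ends; resumes the last interrupted sound, if any."""
        self.current = self.interrupted.pop() if self.interrupted else None
        return self.current
```

For example, with priorities `{"music": 1, "navigation": 2}`, a navigation prompt interrupts music, and `finished()` returns `"music"` once the prompt ends.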

Sound image combined monitoring method and system

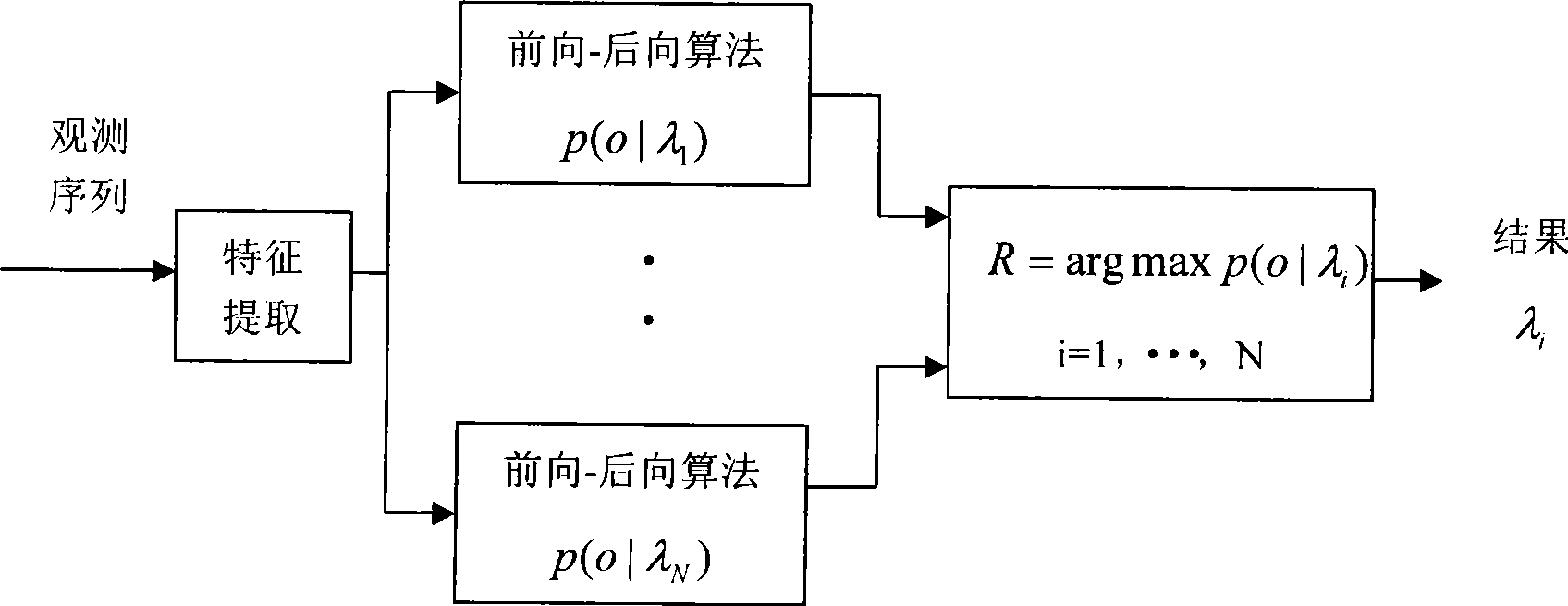

Inactive | CN101364408A | Reduce re-development | Closed circuit television systems | Speech recognition | Video monitoring | Sound image

The invention relates to an audio/video combined monitoring method and system in the technical field of industrial environment monitoring. It aims to solve problems in the prior art: monitoring is conducted only by video monitoring operators on duty, who tire easily and can hardly identify places with potential safety hazards; moreover, video monitoring is limited by its functions and viewing angles, so potential hazards cannot be found in time and rescue opportunities are missed. The method uses audio signals and video signals at the same time to monitor the environment, and uses the identification results of the audio signals to guide the operators on duty to selectively observe video windows. The audio signals are processed in the following steps: (1) feature extraction, (2) model training, (3) sound classification, (4) online learning, and (5) hazard rating evaluation.

Owner:XI AN CHENGFENG TECH CO LTD

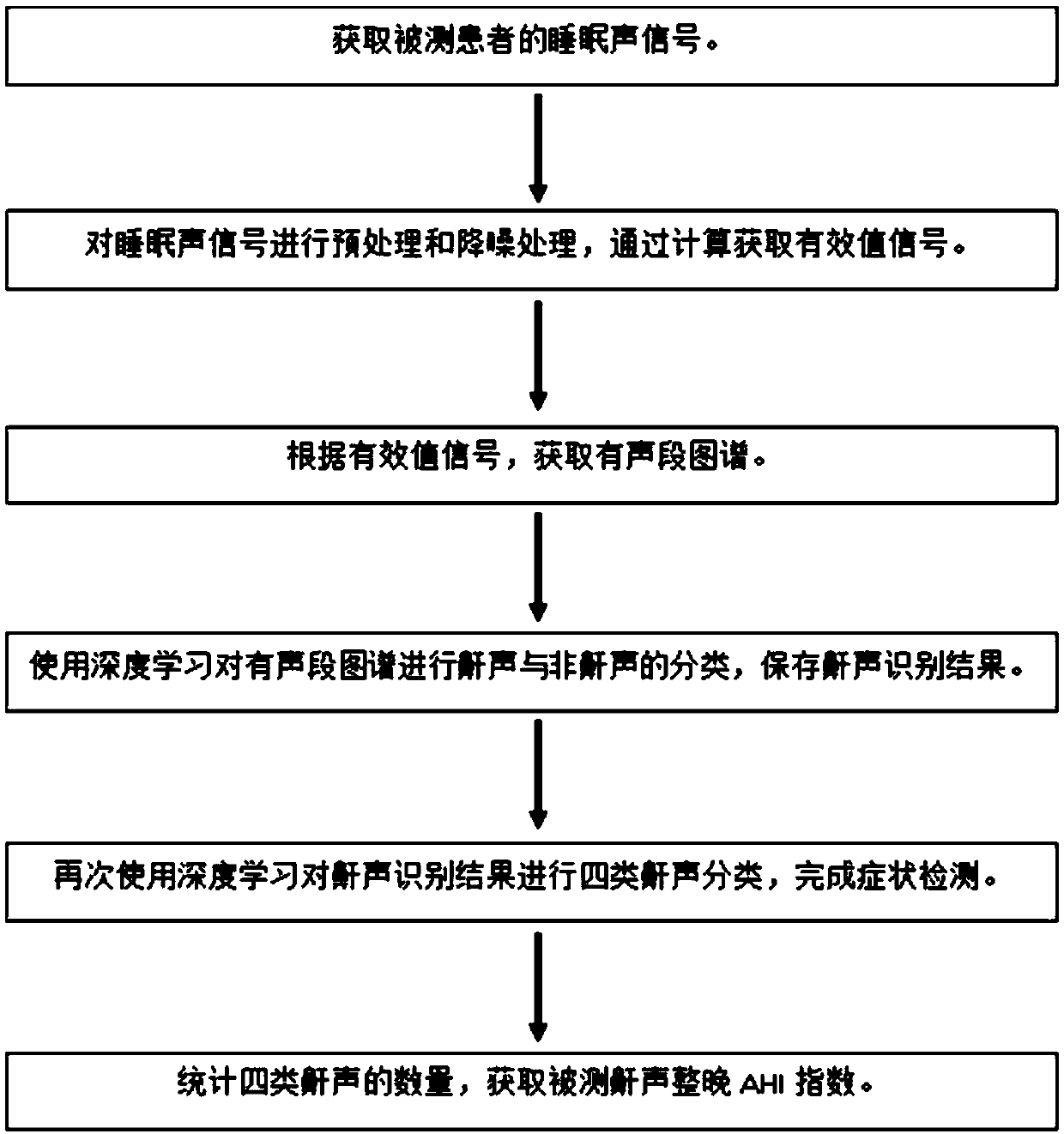

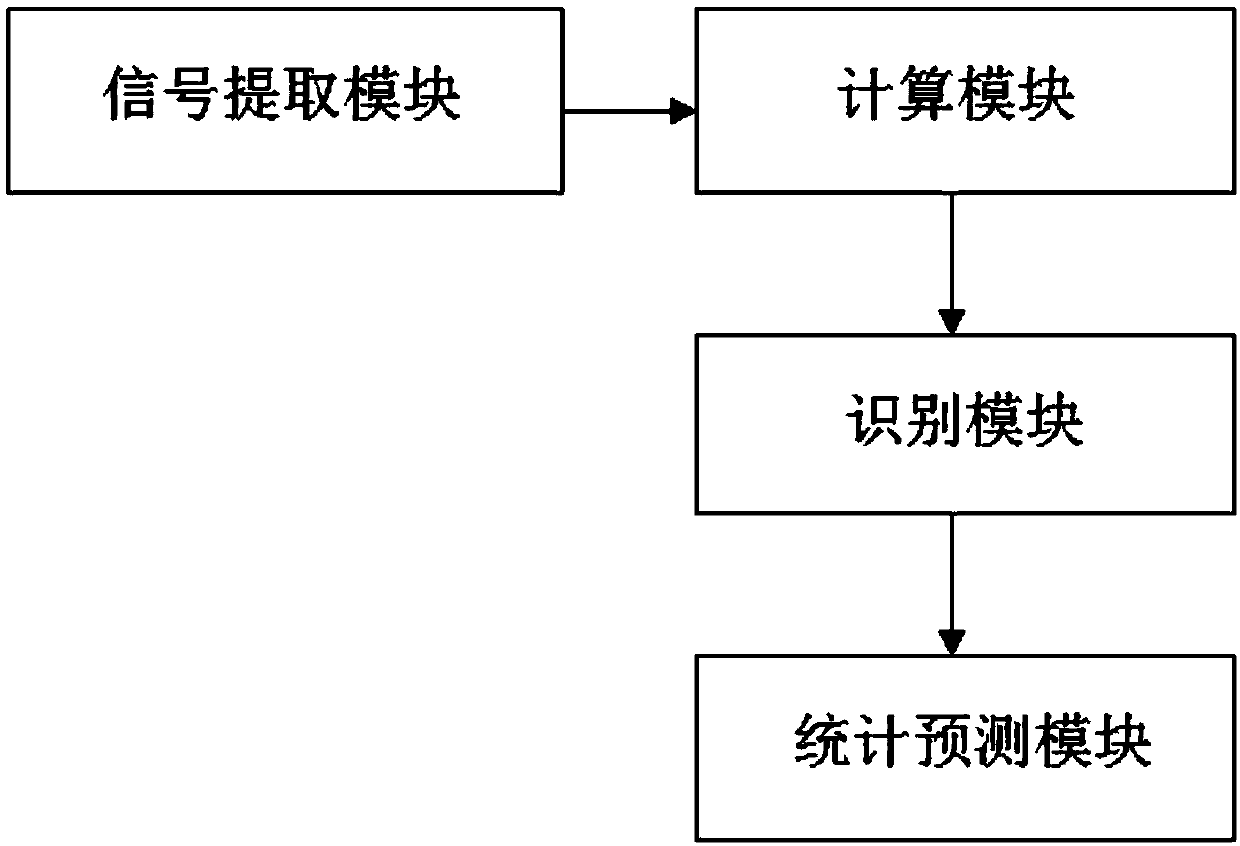

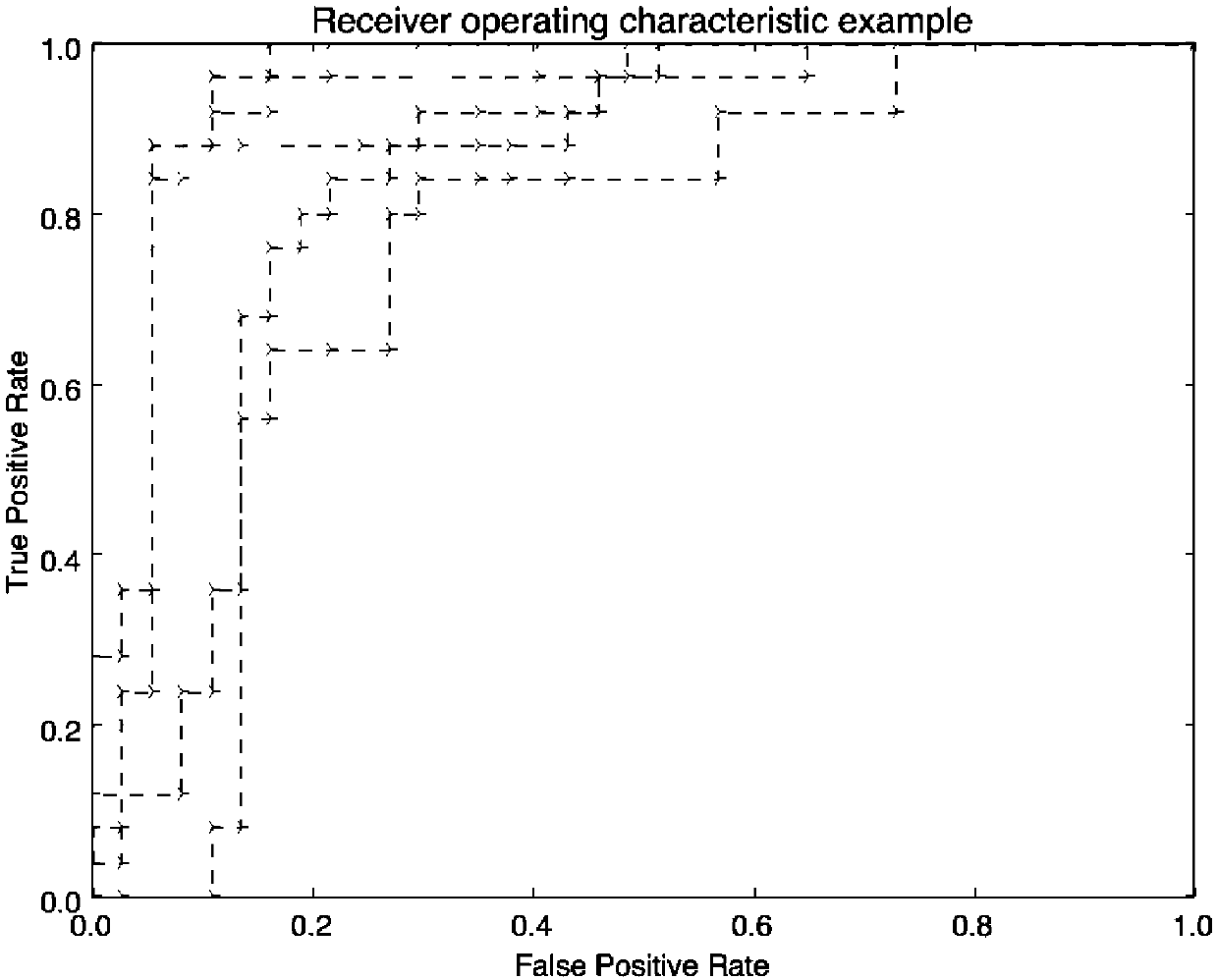

Sleep snoring sound classification detecting method and system based on deep learning

Active | CN108670200A | Implement automatic classification | Avoid affecting the recognition effect | Auscultation instruments | Diagnostic recording/measuring | Hypopnea | Datum reference

The invention discloses a sleep snoring sound classification detecting method based on deep learning. The method mainly comprises the steps of collecting the sleep sound signals of a patient to be detected throughout the night through a sensor, detecting the sound segments in the sleep sound signals, and obtaining a sound segment map; using deep learning to classify the sound segment map into snoring and non-snoring sounds, and retaining the pure snoring sound recognition result; then using deep learning to classify the pure snoring sound recognition result into four types of snoring sounds, completing automatic recognition and detection of the snoring sounds of a patient suffering from obstructive sleep apnea-hypopnea syndrome (OSAHS); and, according to the recognition and detection result, counting the number of snoring sounds of each type over the whole night and obtaining the AHI index of the patient for that night. The invention further discloses a detecting system for the method. The method and system can effectively and accurately evaluate whether a snoring subject is ill and how severe the illness is, and provide reference data for patients suffering from OSAHS.

Owner:SOUTH CHINA UNIV OF TECH

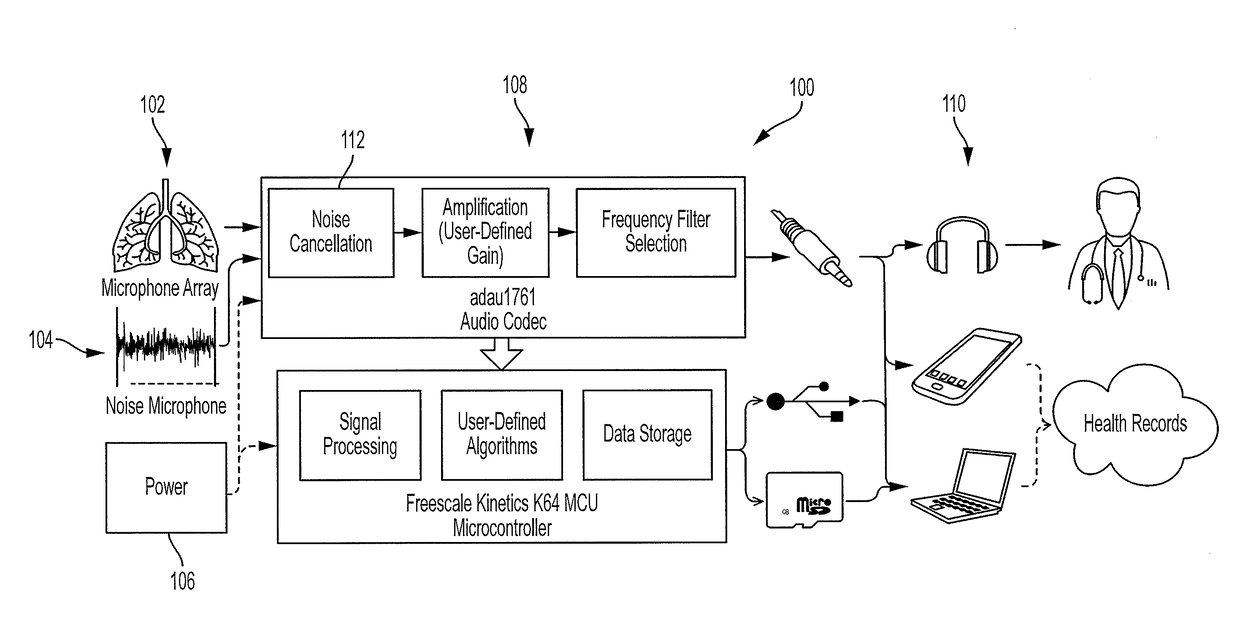

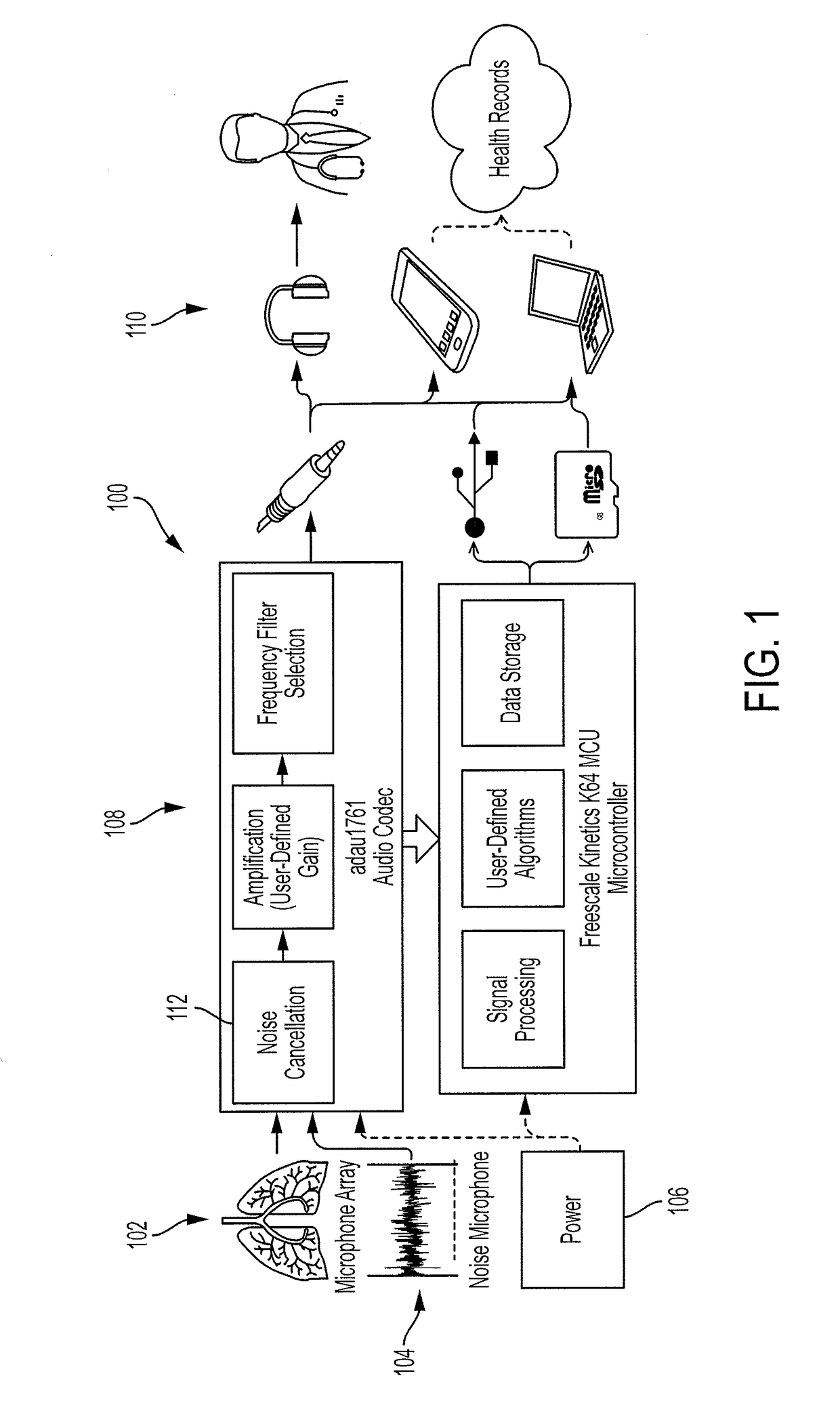

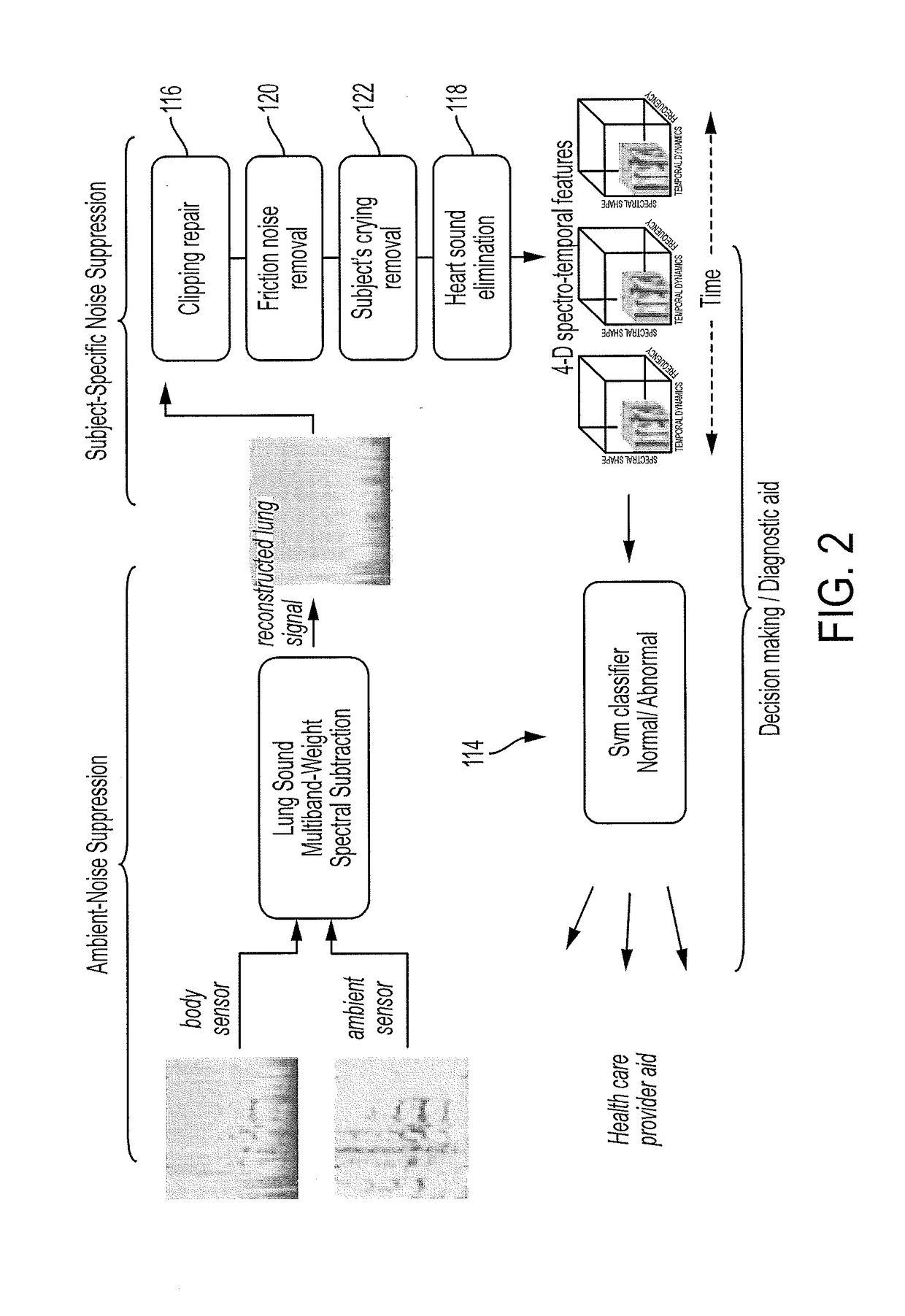

Programmable electronic stethoscope devices, algorithms, systems, and methods

Active | US20180317876A1 | Clean auscultation signal | Distortion free | Stethoscope | Speech analysis | Environmental noise | Stationary noise

A digital electronic stethoscope includes an acoustic sensor assembly that includes a body sensor portion and an ambient sensor portion, the body sensor portion being configured to make acoustically coupled contact with a subject while the ambient sensor portion is configured to face away from the body sensor portion so as to capture environmental noise proximate the body sensor portion; a signal processor and data storage system configured to communicate with the acoustic sensor assembly so as to receive detection signals therefrom, the detection signals including an auscultation signal comprising body target sound and a noise signal; and an output device configured to communicate with the signal processor and data storage system to provide at least one of an output signal or information derived from the output signal. The signal processor and data storage system includes a noise reduction system that removes both stationary noise and non-stationary noise from the detection signal to provide a clean auscultation signal substantially free of distortions. The signal processor and data storage system further includes an auscultation sound classification system configured to receive the clean auscultation signal and provide a classification thereof as at least one of a normal breath sound or an abnormal breath sound.

Owner:THE JOHNS HOPKINS UNIV SCHOOL OF MEDICINE

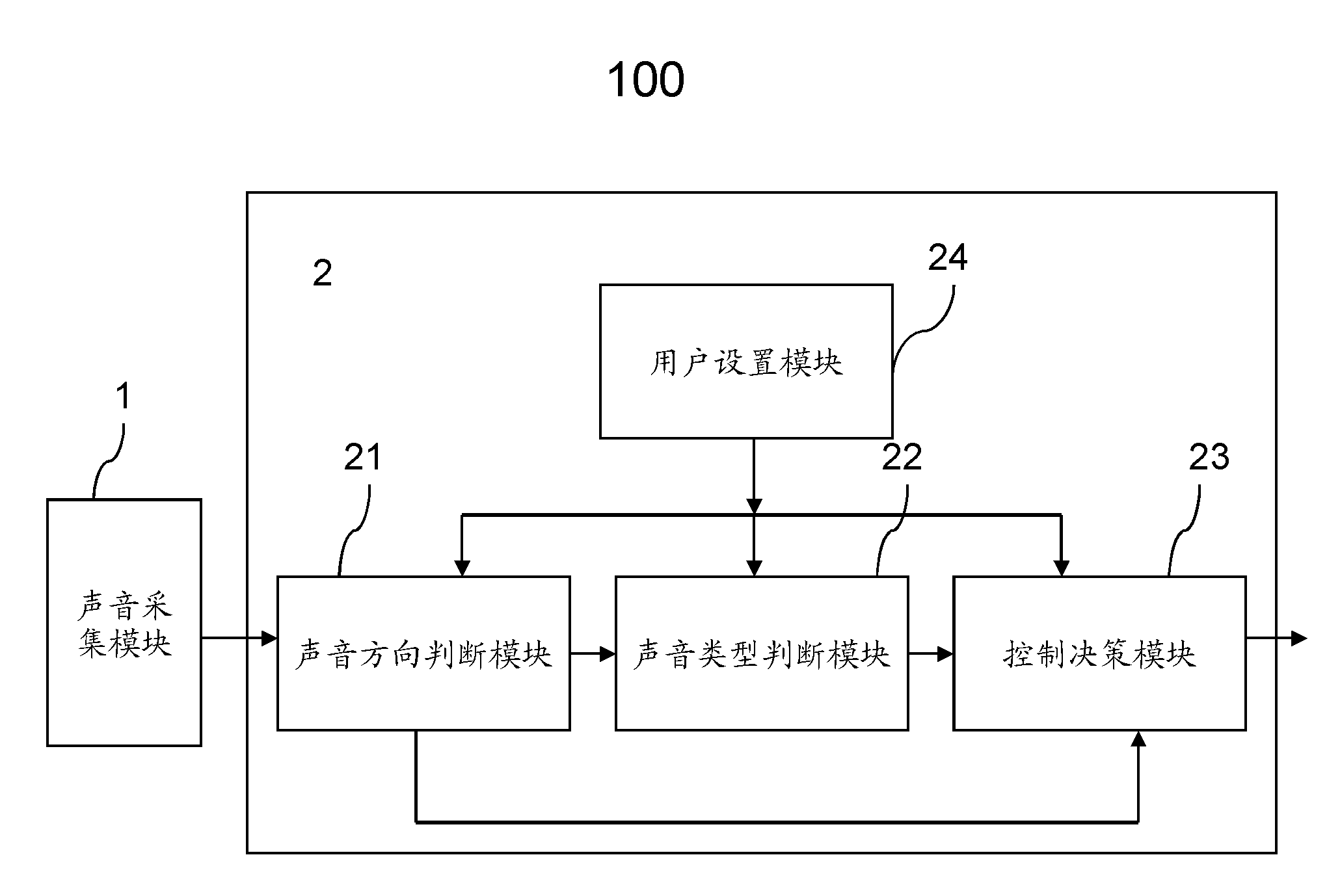

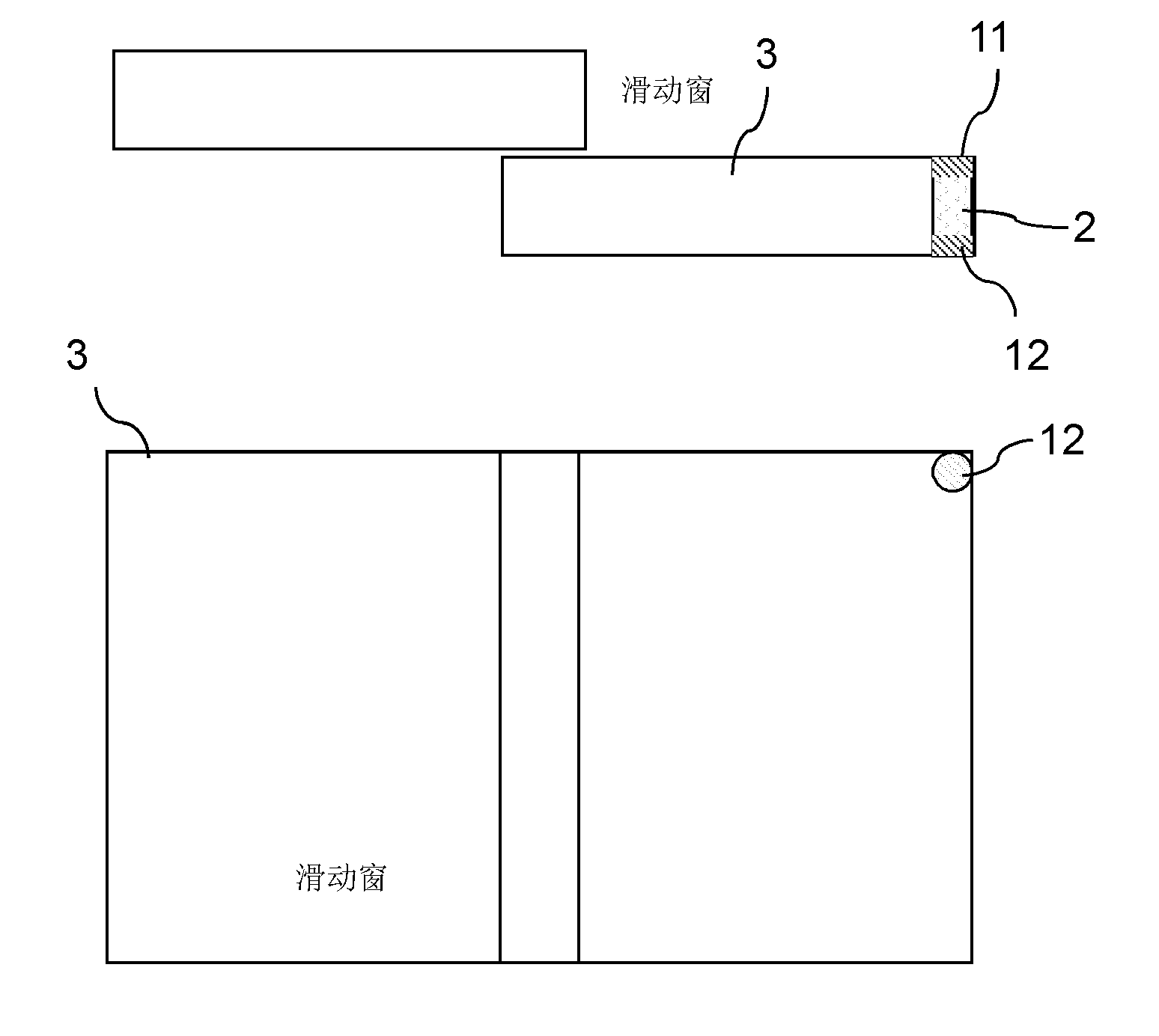

Control system and method for acoustic control window

Active | CN102052036A | Fulfill control requirements | Avoid misuse | Power-operated mechanism | Speech recognition | Control system | Sound classification

The invention provides a control system and method for an acoustic control window. In the system, a microphone array determines whether a sound comes from inside or outside the window, a sound classification module determines the type of the sound, and a user-customized control module automatically decides the state of the window. This design allows the window to be closed automatically according to the user's settings, which is convenient for the user.

Owner:NANJING ZGMICRO CO LTD

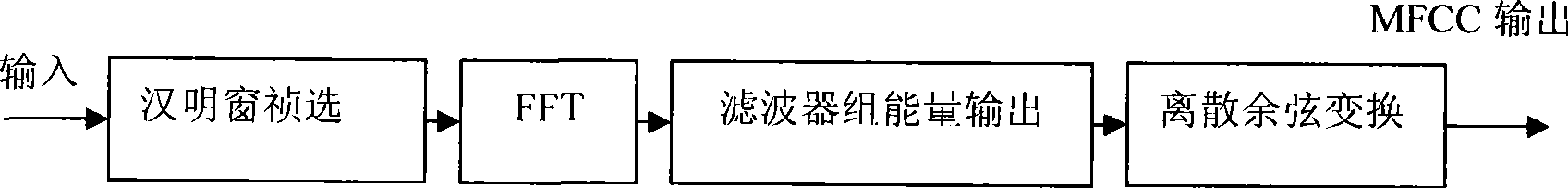

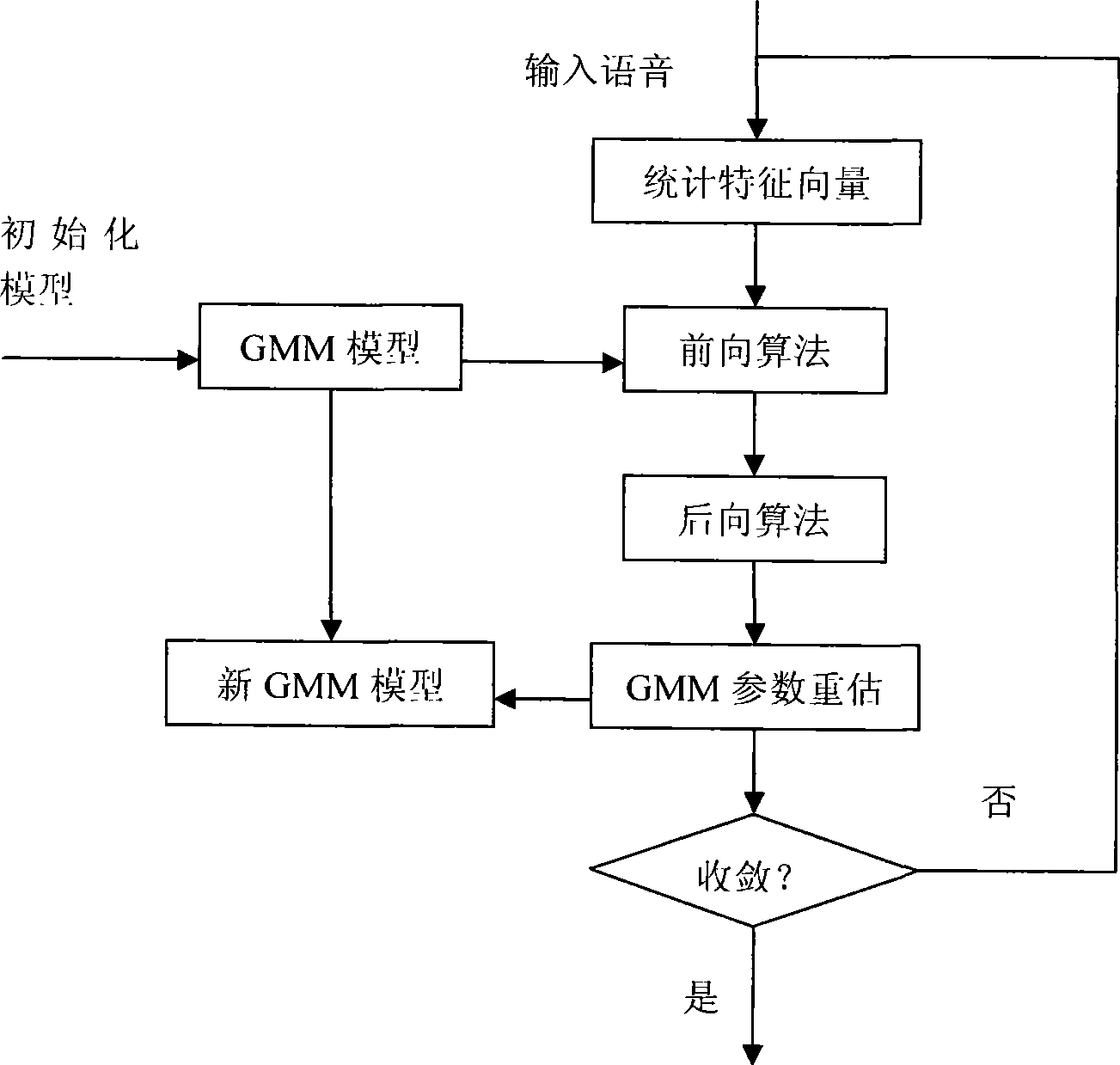

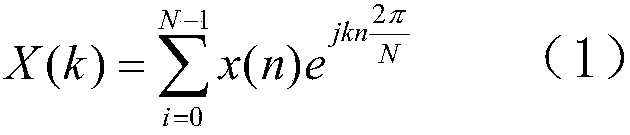

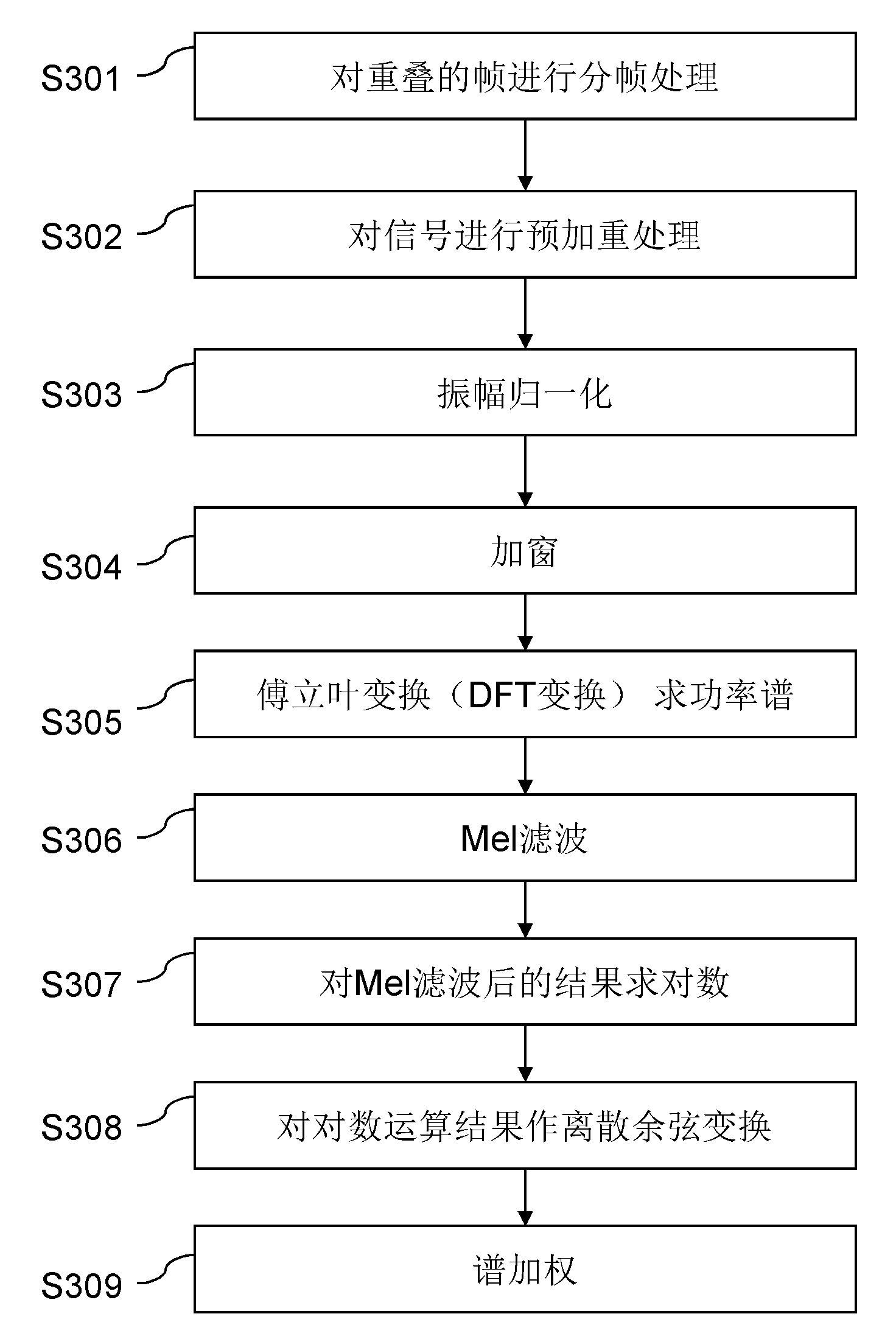

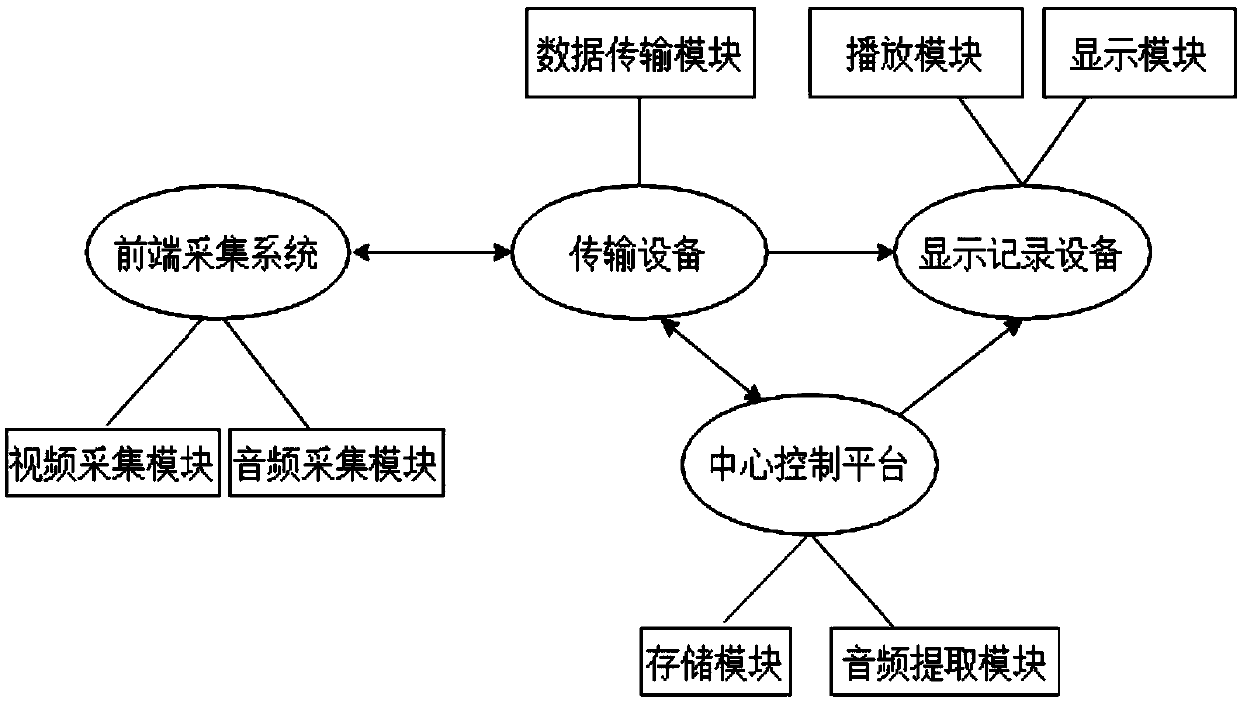

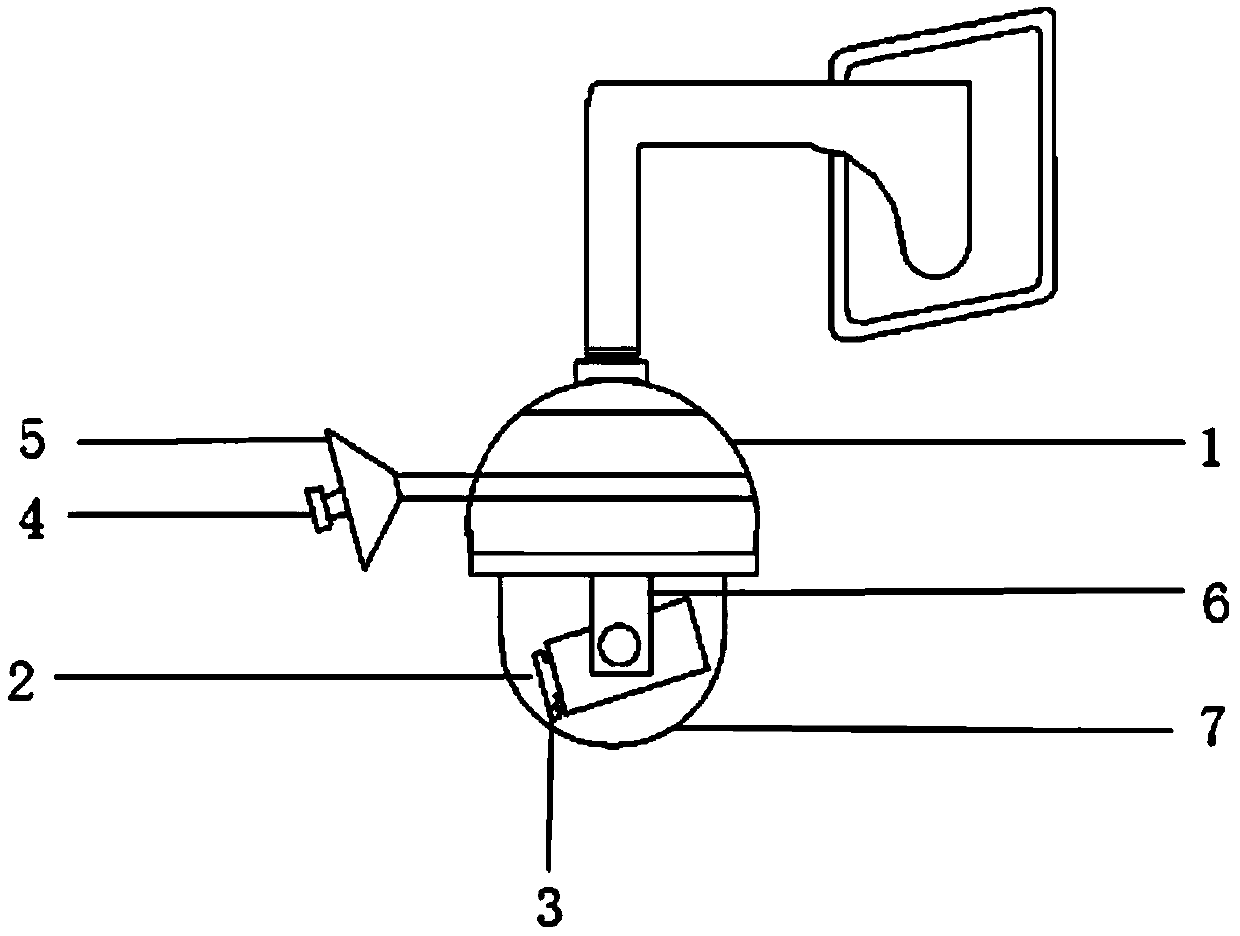

Holographic video monitoring system and method for directional picture capture based on sound classification algorithm

Inactive | CN109547695A | Avoid the lack of intelligent image capture | Realize holographic video surveillance system | Television system details | Color television details | Mel-frequency cepstrum | Support vector machine

The invention provides a holographic video monitoring system and method for directional picture capture based on a sound classification algorithm. The system comprises a front end collection system, a transmission device, a central control platform and a display recording device; the front end collection system is configured to collect onsite audio data and video data and transmit the same to the central control platform through the transmission device; the central control platform is configured to perform noise reduction processing and sound classification on the audio data through a support vector machine identification algorithm based on Mel frequency cepstrum coefficients, perform segmentation extraction on the audio data required by the user, send the audio data required by the user and the corresponding video data to the display recording device, and directionally capture and amplify a corresponding video picture by selecting the specific sound; and the display recording device is configured to synchronously play the monitoring data of the monitoring system in real time, call the monitoring data of any time period in real time, and play the video picture corresponding to the capture and amplification of the specific sound.

Owner:SHANDONG JIAOTONG UNIV

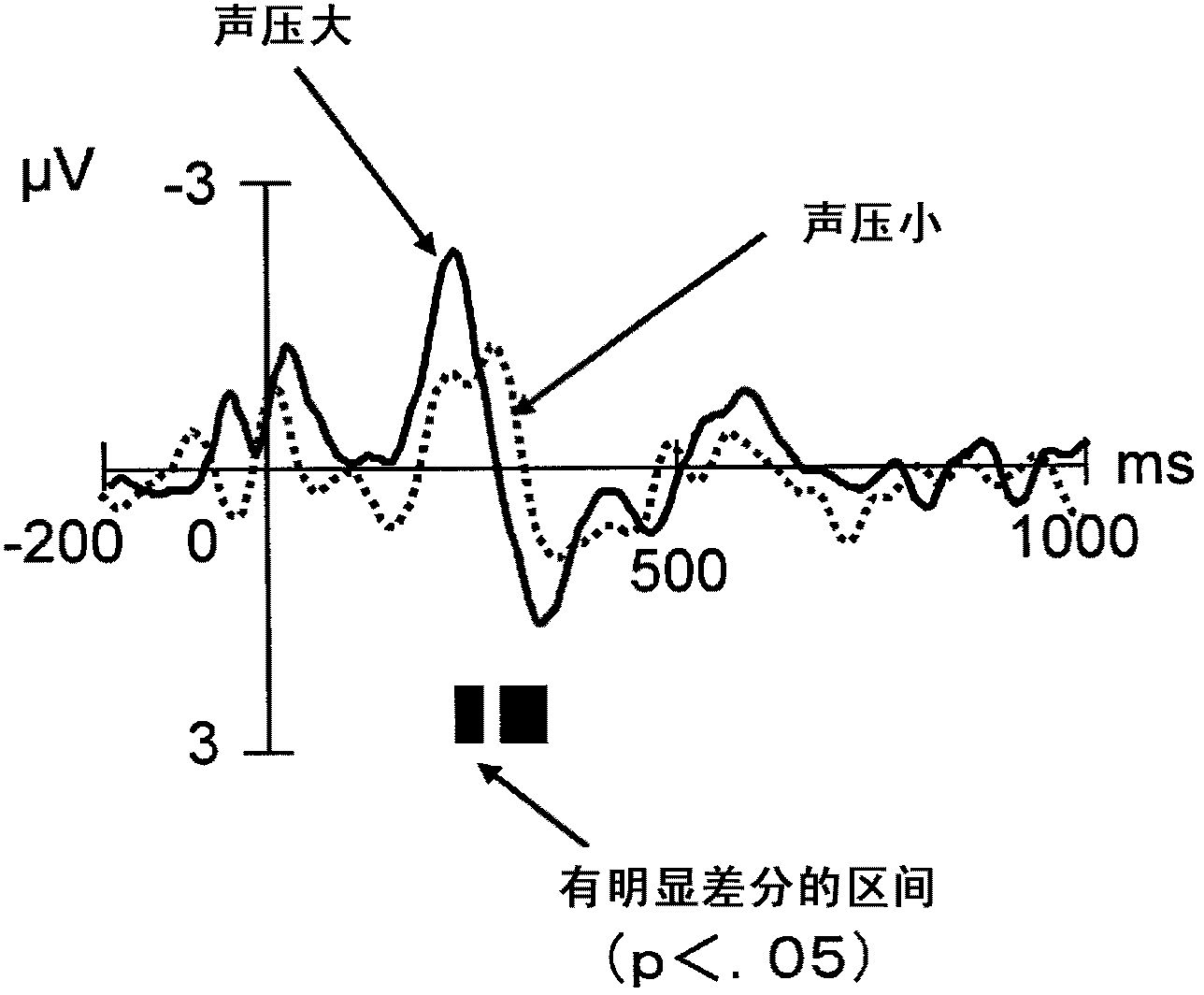

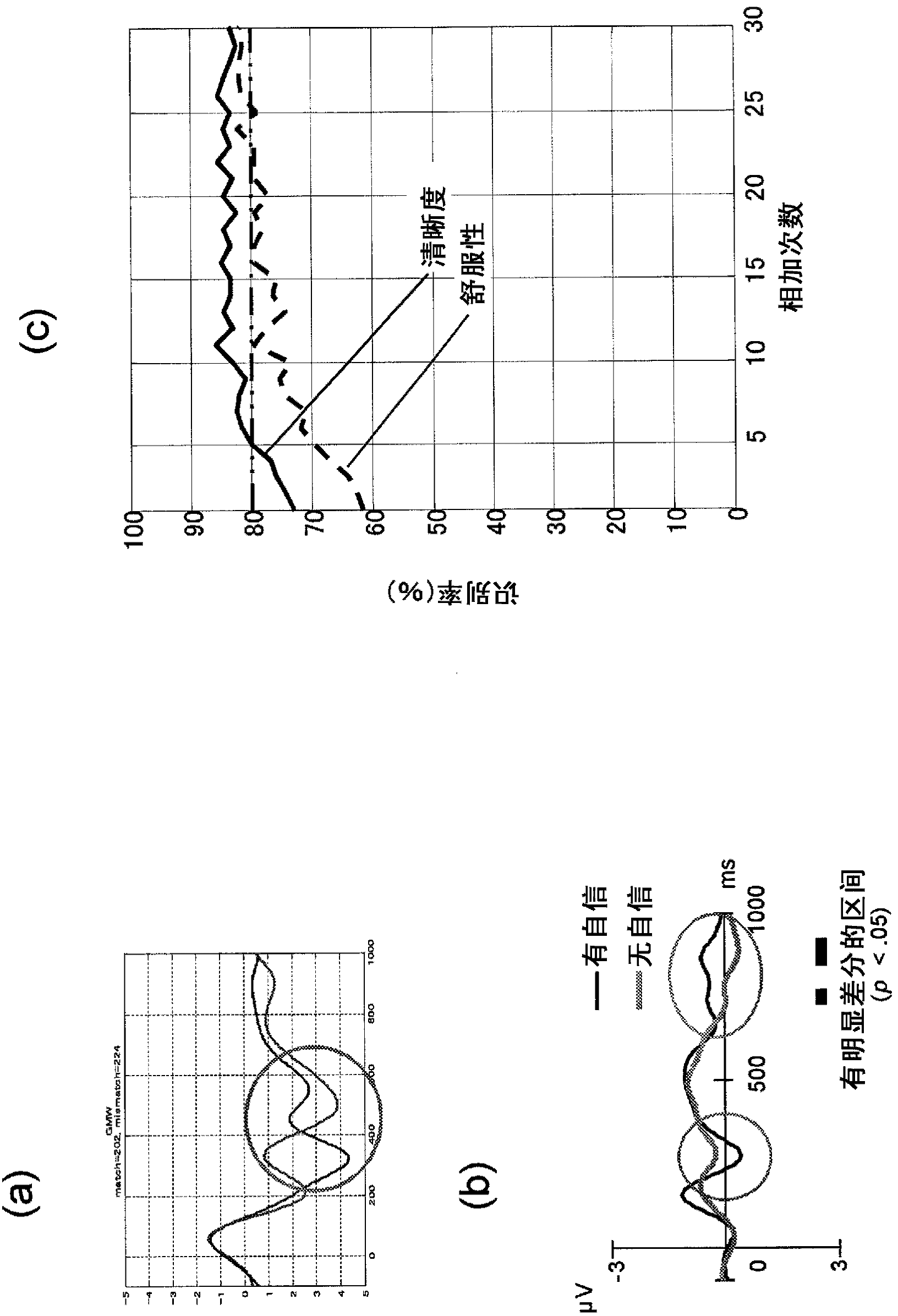

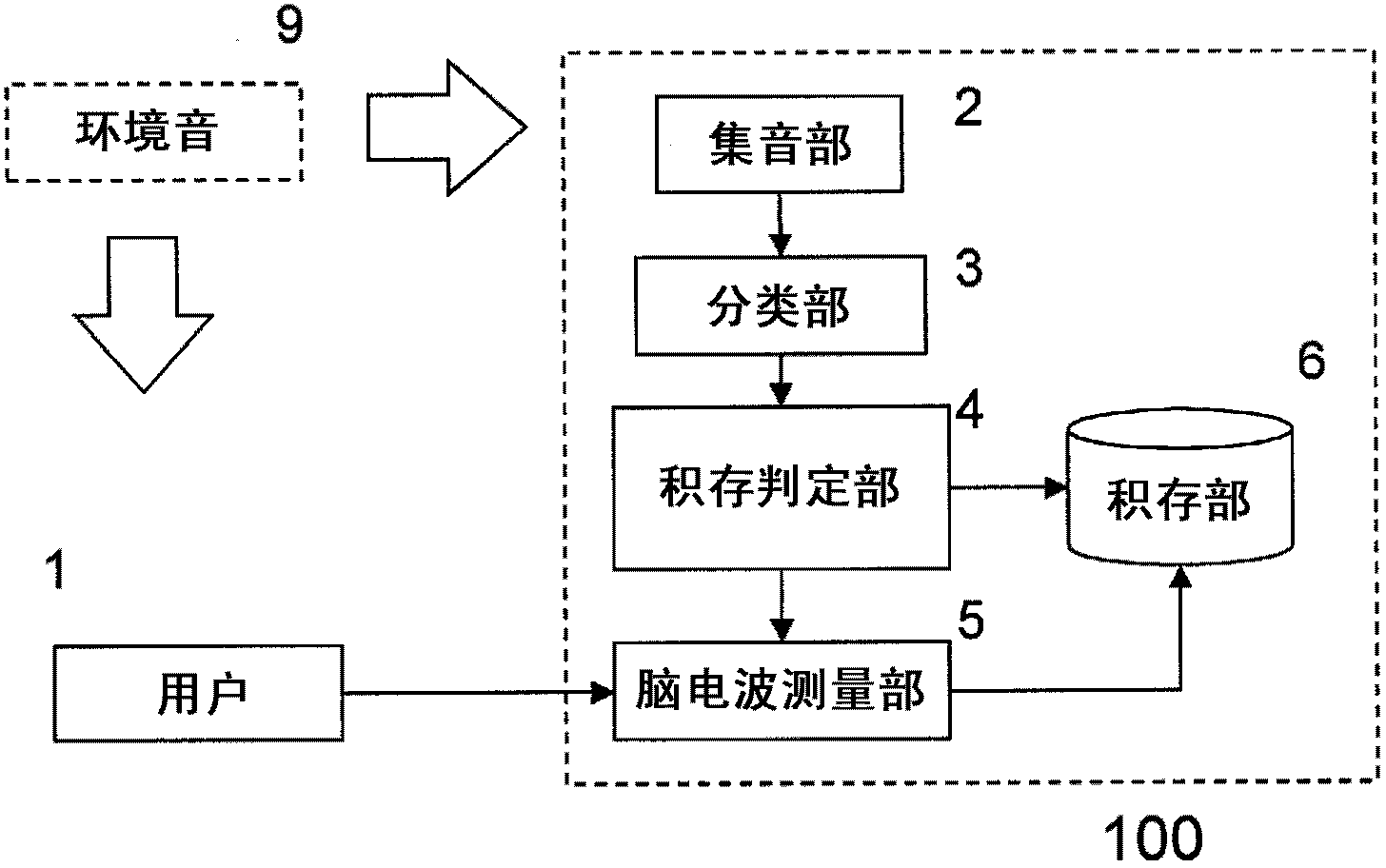

Electroencephalograph, hearing aid, electroencephalogram recording method and program for same

Inactive | CN103270779A | Audiometering | Hearing aids signal processing | Environmental sounds | Sound classification

The objective of the present invention is to suitably record ambient environmental sound and information related to the user's hearing at that time, in order to collect the volume of data necessary for fitting at a hearing aid store. This electroencephalograph is provided with a sound collection unit which generates sound data of outside sound, an electroencephalography unit for generating brain wave data of a user, a classification unit for classifying the collected sound into a plurality of divisions which have been pre-defined in relation to sound pressure, an accumulation assessment unit for assessing whether or not to record the brain wave data on the basis of whether the number of data accumulations has reached a pre-set target value for the division into which the sound has been classified, and an accumulation unit for associating the brain wave data with the sound data and accumulating them if an assessment has been made to record the brain wave data. A target value is set for each division such that the target value of a first division, into which sounds of the expected minimum sound pressure are classified, is greater than or equal to the target value of a second division, into which sounds of greater sound pressure are classified.

Owner:PANASONIC CORP
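The accumulation assessment in the abstract above (classify sound by sound pressure, record only while the division's count is below its target) can be sketched as follows. The `AccumulationAssessor` class, the decibel boundaries and the target counts are hypothetical values chosen for illustration. Only the constraint that the first division's target is at least the second division's target comes from the abstract.

```python
import bisect


class AccumulationAssessor:
    """Decides whether to record EEG data for a sound, per sound-pressure division."""

    def __init__(self, boundaries_db, targets):
        # boundaries_db: ascending sound-pressure boundaries between divisions (assumed units: dB)
        # targets: one target count per division, so len(targets) == len(boundaries_db) + 1
        if len(targets) != len(boundaries_db) + 1:
            raise ValueError("need exactly one target per division")
        if len(targets) > 1 and targets[0] < targets[1]:
            raise ValueError("first division's target must be >= second division's target")
        self.boundaries = list(boundaries_db)
        self.targets = list(targets)
        self.counts = [0] * len(targets)

    def classify(self, spl_db):
        """Return the index of the division that the sound pressure falls into."""
        return bisect.bisect_right(self.boundaries, spl_db)

    def should_record(self, spl_db):
        """Return True (and count the accumulation) while the division is below target."""
        division = self.classify(spl_db)
        if self.counts[division] >= self.targets[division]:
            return False
        self.counts[division] += 1
        return True
```

With boundaries `[50.0, 70.0]` and targets `[3, 2, 2]`, the quietest division accepts three recordings and then starts returning `False`, while the louder divisions remain open until they reach their own targets.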

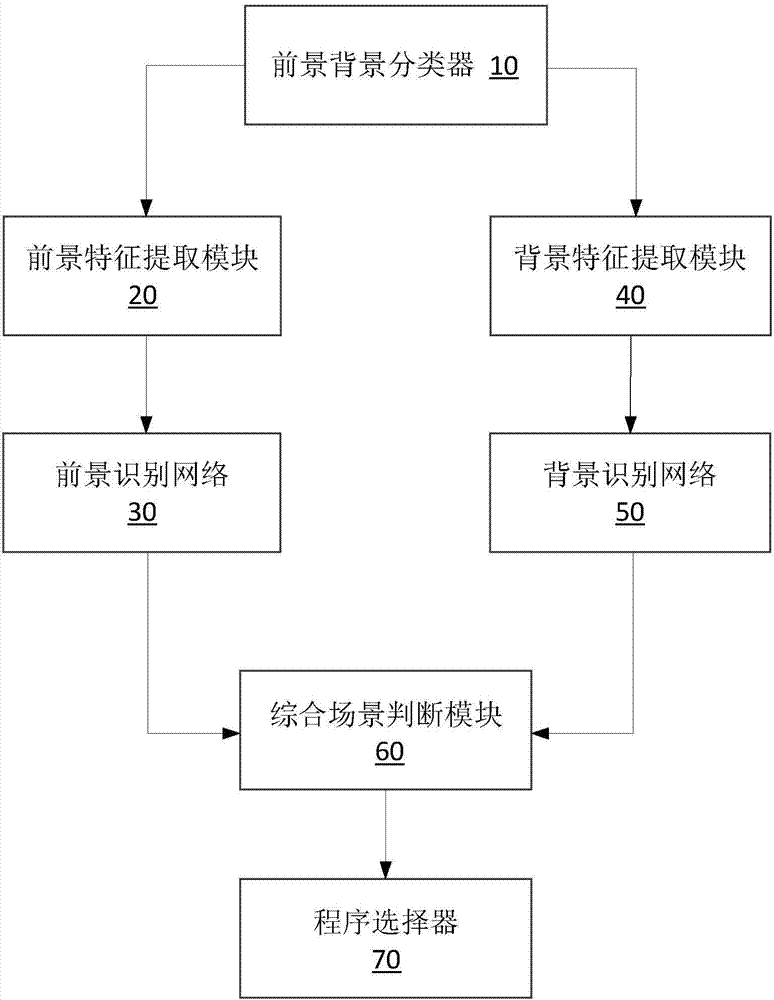

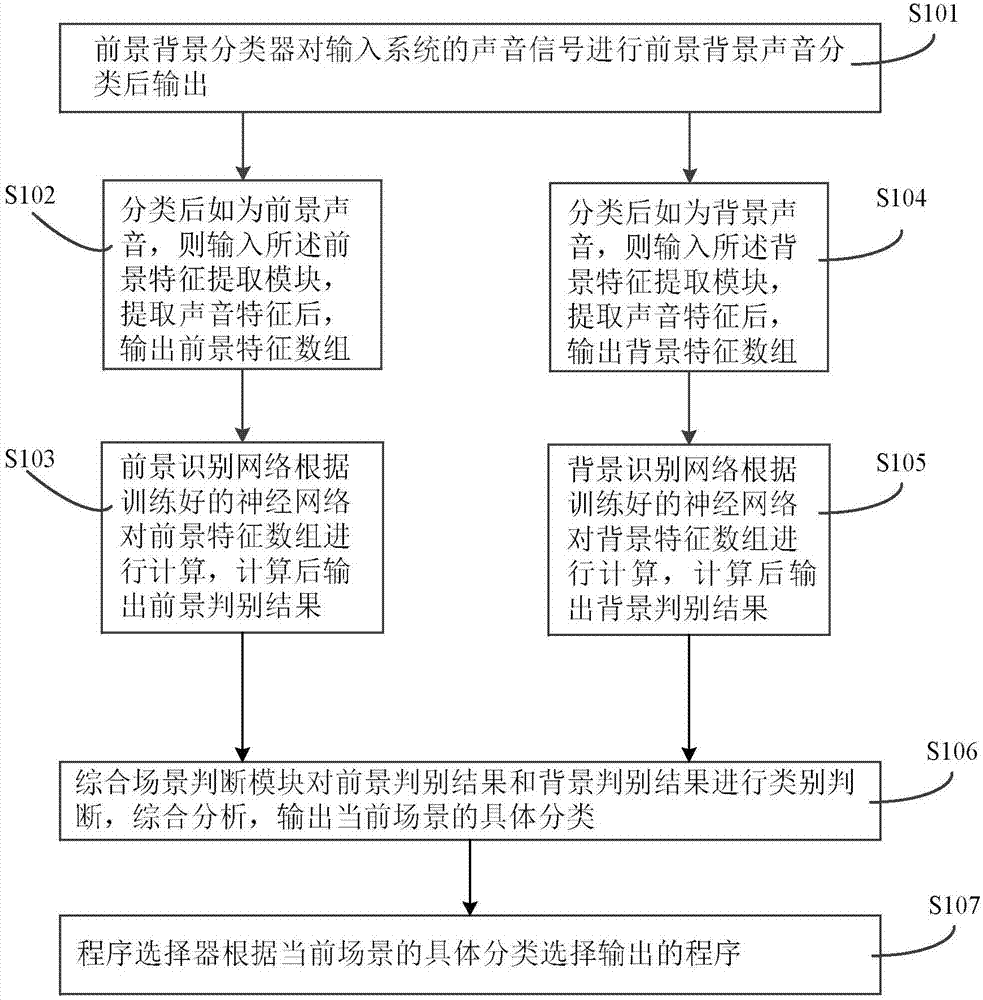

Cochlear implantation sound scene identification system and method

Active | CN107103901A | Improve the quality of life | Accurate identification | Sets with customised acoustic characteristics | Speech recognition | Array data structure | Feature extraction

The present invention discloses a cochlear implantation sound scene identification system and method. The system comprises a foreground and background classifier, a foreground feature extraction module, a foreground identification network, a background feature extraction module, a background identification network, a comprehensive scene determination module and a program selector, and the foreground and background classifier performs classification of foreground and background sound for sound signals of an input system and then outputs the processed sound signals; after classification of the foreground and background classifier, if the sound signals are foreground sound, the sound signals are input to the foreground feature extraction module, and after extraction of sound features, a foreground feature array is output to the foreground identification network; if the sound signals are background sound, the sound signals are input to the background feature extraction module, and after extraction of sound features, a background feature array is output to the background identification network; through comprehensive analysis, the concrete classification of the current scene is output; and an output program is selected. Compared to a traditional scene identification system, the cochlear implantation sound scene identification system and method can identify more sound scenes.

Owner:ZHEJIANG NUROTRON BIOTECH

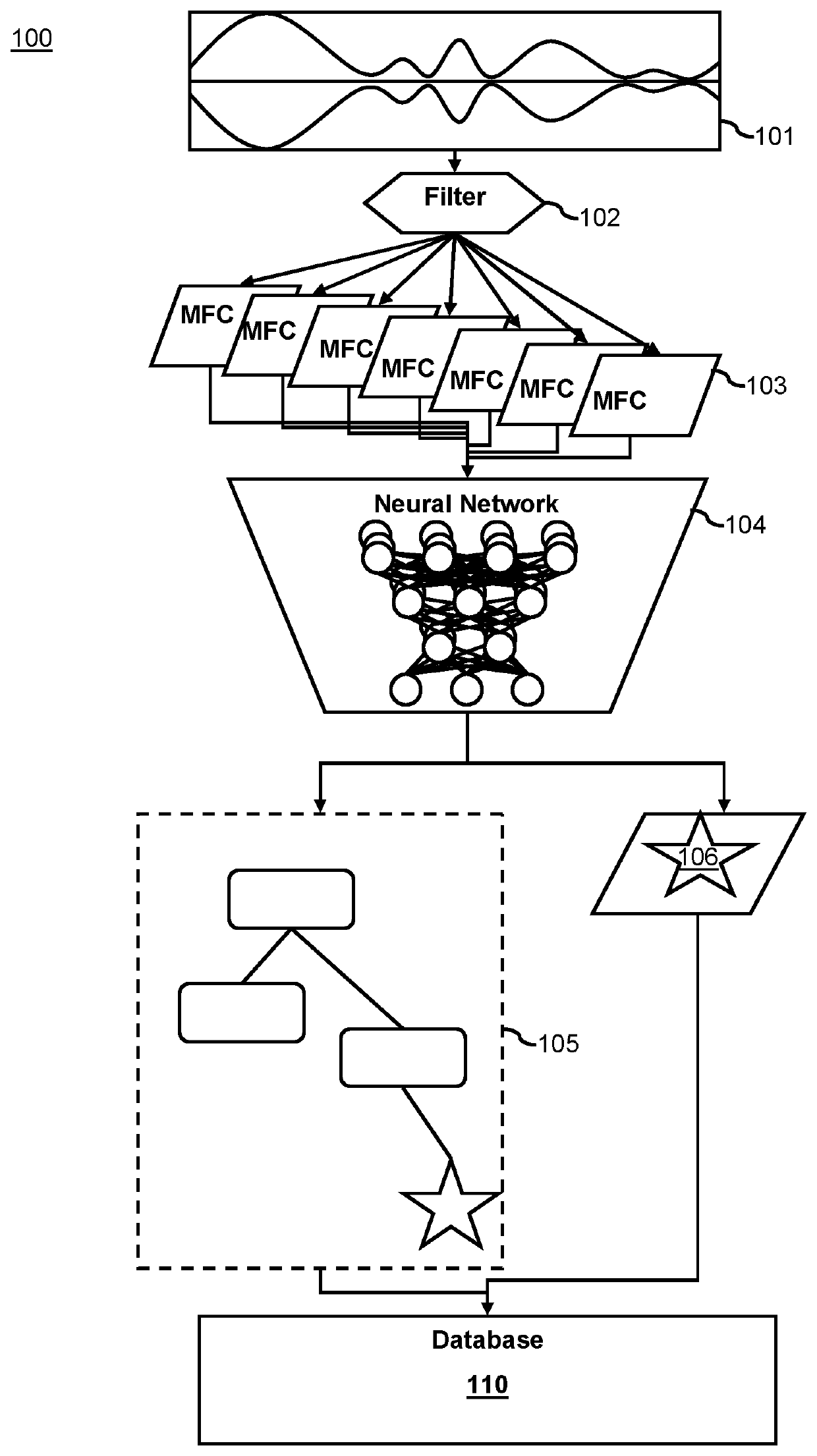

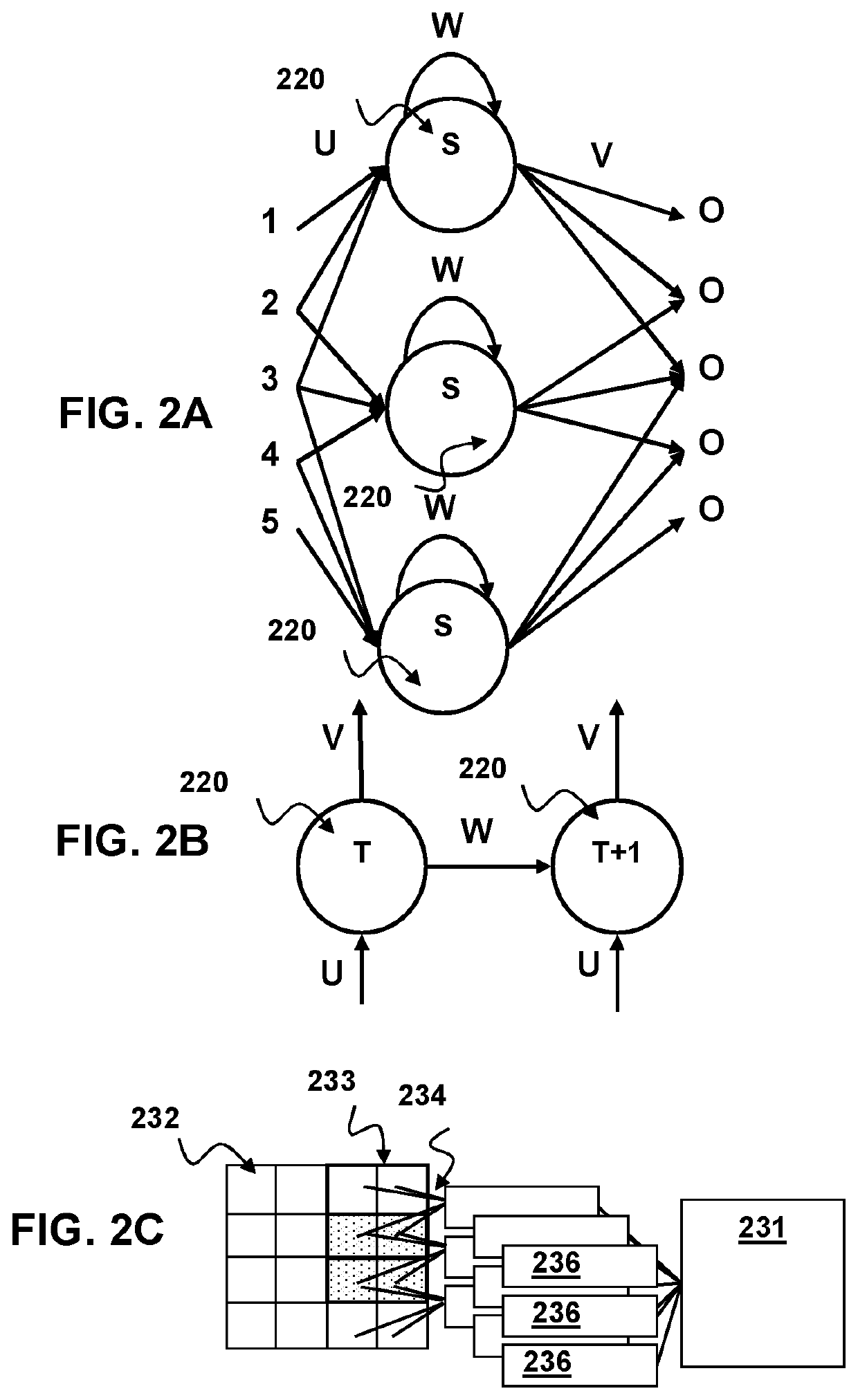

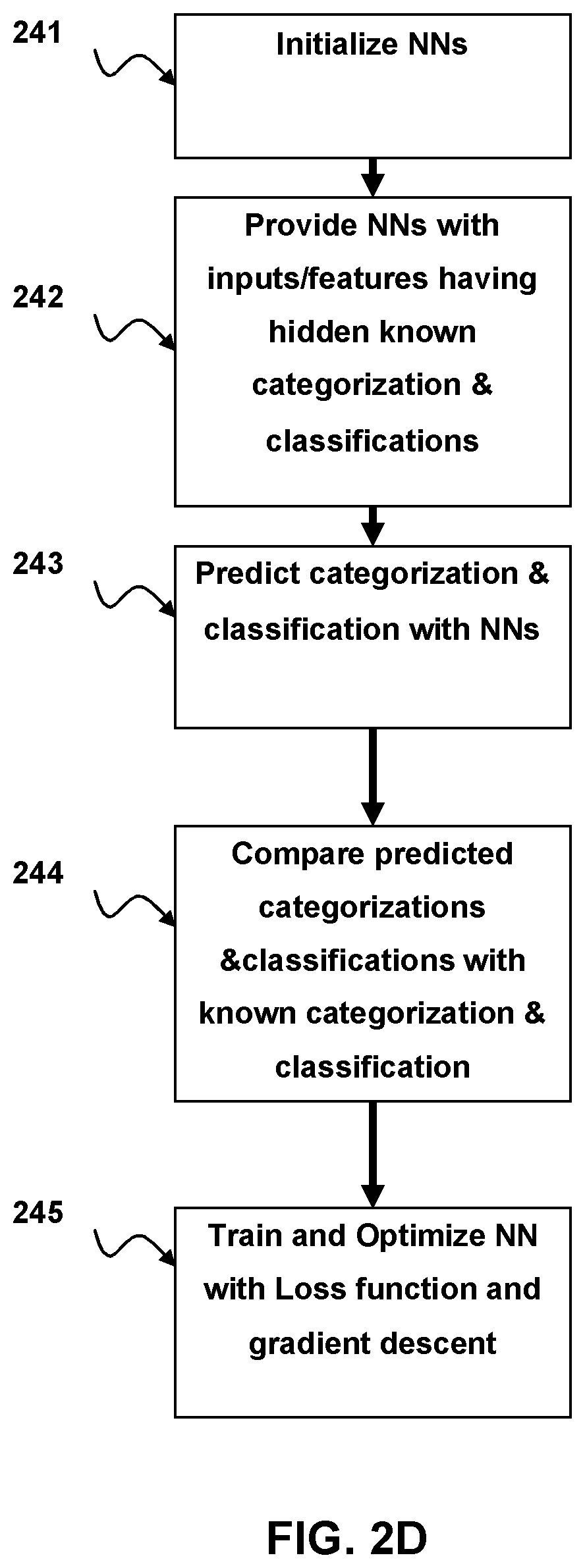

Sound categorization system

A system, method, and computer program product for hierarchical categorization of sound comprising one or more neural networks implemented on one or more processors. The one or more neural networks are configured to categorize a sound into a two or more tiered hierarchical coarse categorization and a finest level categorization in the hierarchy. The categorization of a sound may be used to search a database for similar or contextually related sounds.

Owner:SONY COMPUTER ENTERTAINMENT INC

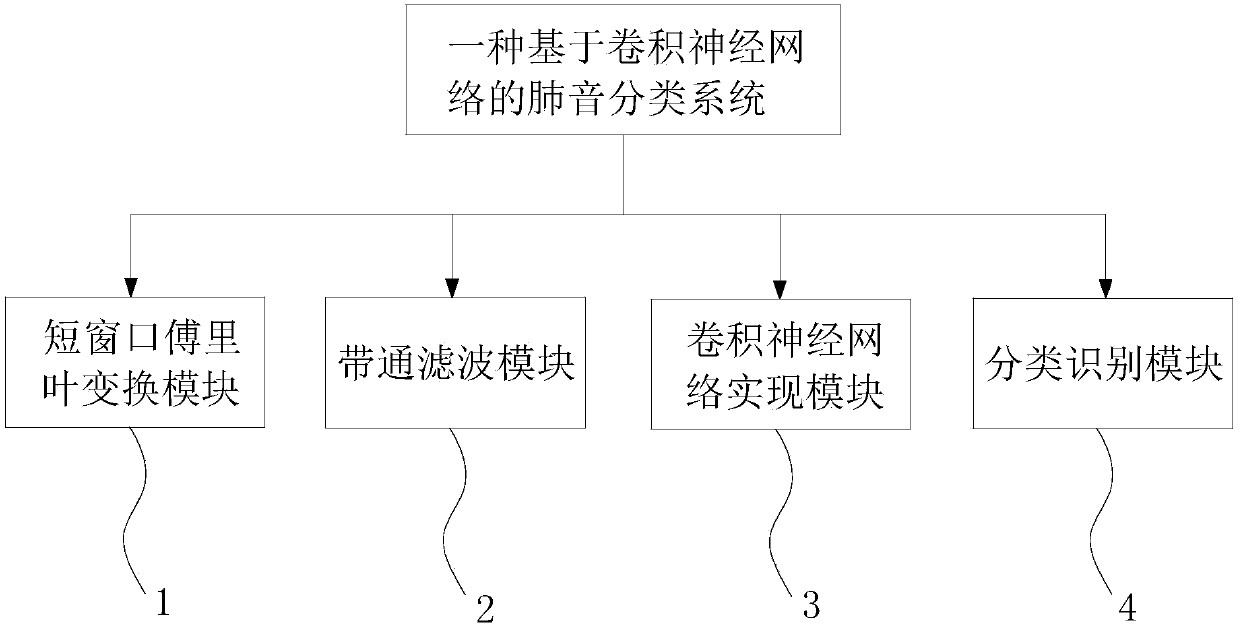

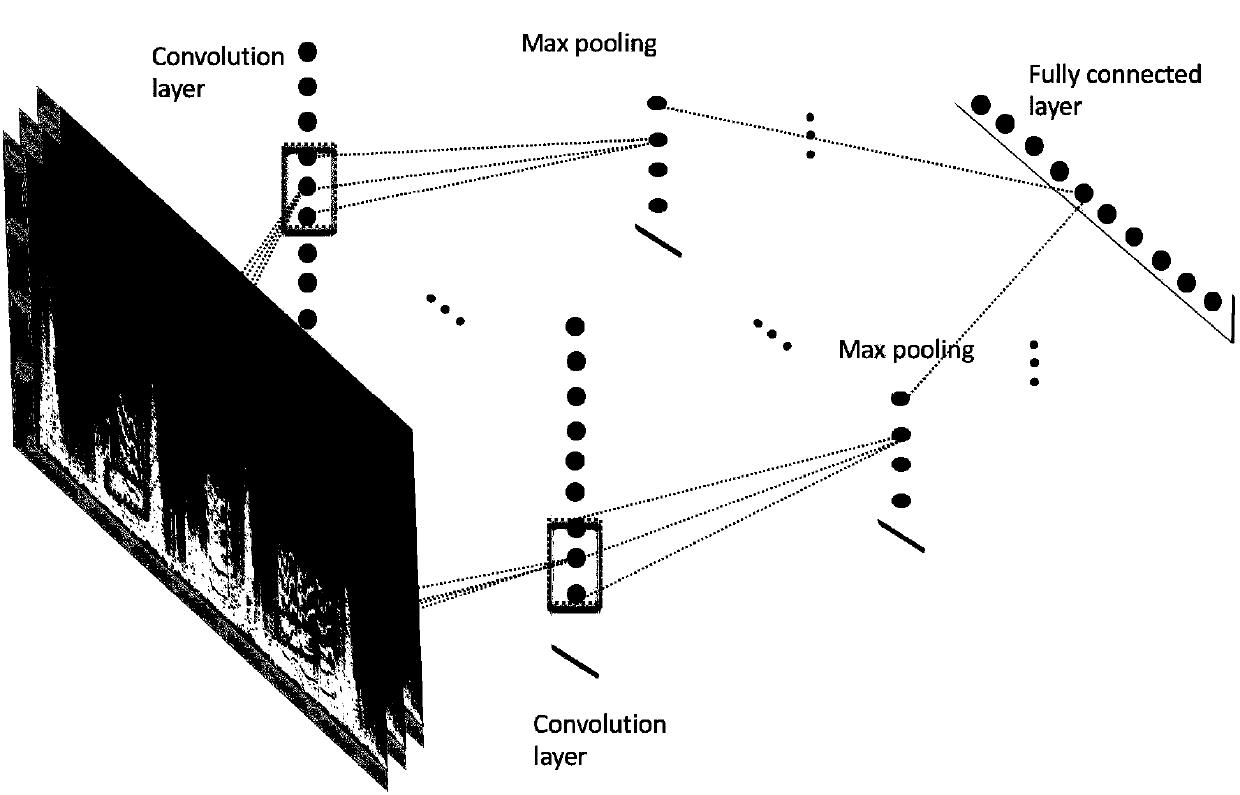

Lung sound classification method, system and application based on convolutional neural network

The present invention relates to a lung sound classification method, system and application based on a convolutional neural network. An image-recognition convolutional neural network is applied to lung sound classification. A general model is used to overcome major defects of traditional algorithms, which rely on manually extracted features and experience-based constraints and therefore cannot be applied generally to all samples, and to solve the problems that traditional algorithms cannot improve as the data set grows and cannot improve through autonomous learning.

Owner:成都力创昆仑网络科技有限公司

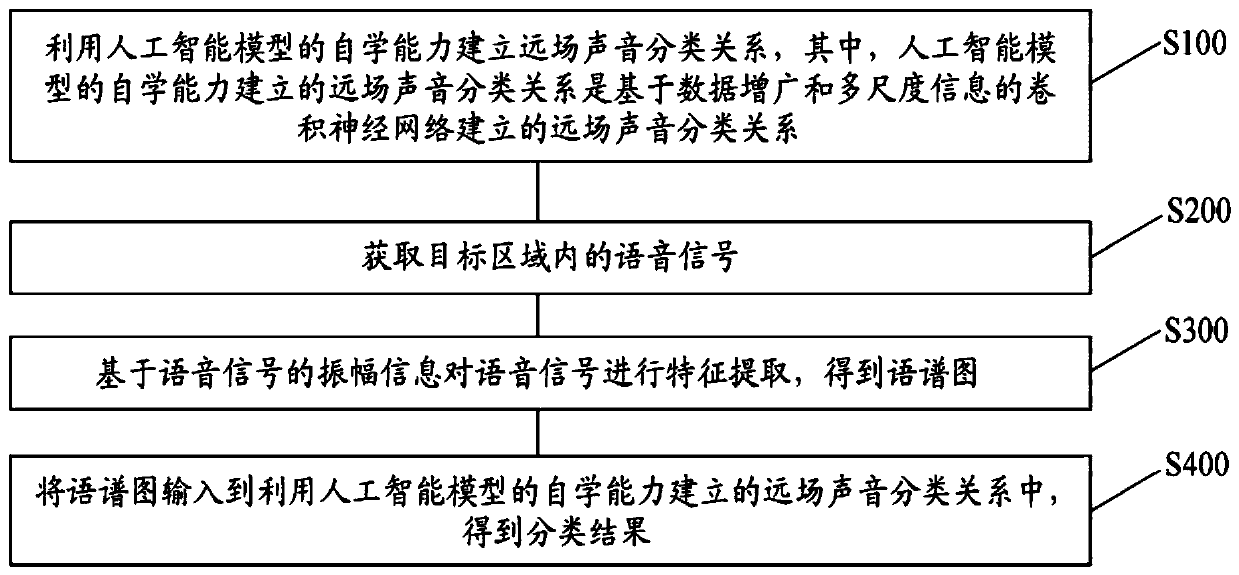

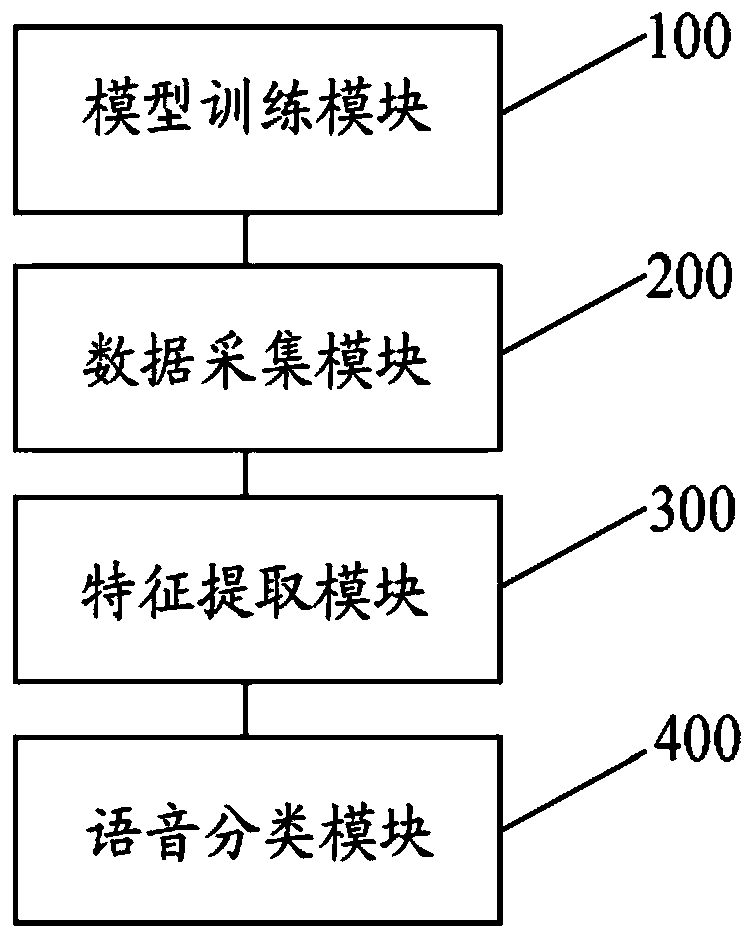

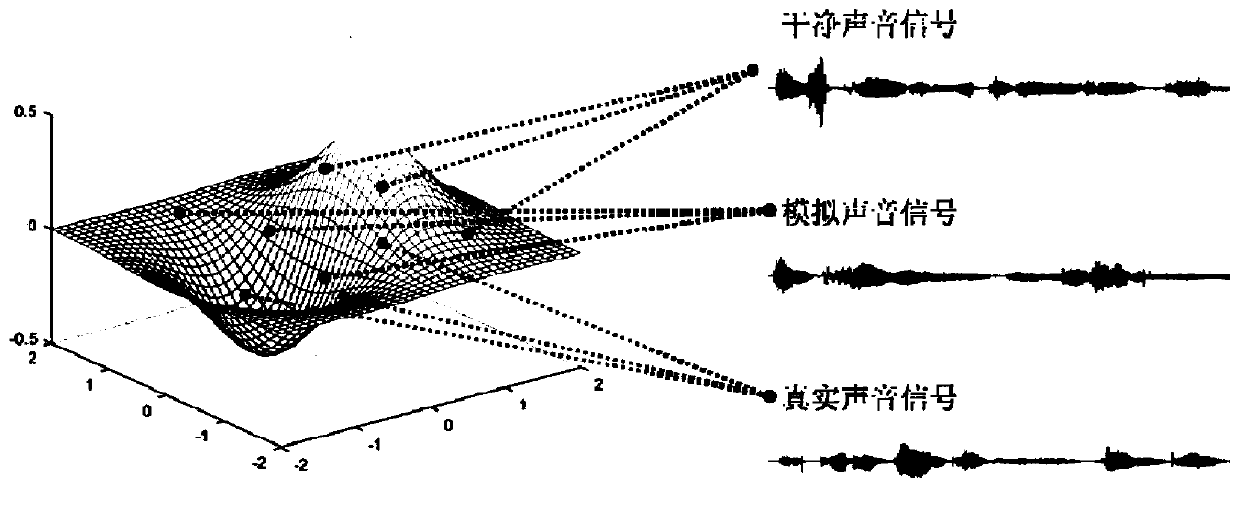

Far-field sound classification method and device

The embodiment of the invention provides a far-field sound classification method, and the method comprises the steps: building a far-field sound classification relation through the self-learning capability of an artificial intelligence model, and enabling the far-field sound classification relation built by the self-learning capability of the artificial intelligence model to be a far-field sound classification relation built based on data augmentation and a convolution neural network of multi-scale information; acquiring a voice signal in the target area; performing feature extraction on the voice signal based on the amplitude information of the voice signal to obtain a spectrogram; and inputting the spectrogram into a far-field sound classification relationship established by utilizing the self-learning ability of the artificial intelligence model to obtain a classification result. Audio data of sound classification is matched with signal distribution received by a microphone in a real environment; according to the method, noise, reverberation and other interference factors are removed, and sound classification is carried out by using a data augmentation mode, so that training data of the model can better fit data distribution of a real environment, better robustness can be obtained, and the accuracy of a sound classification task is improved.

Owner:慧言科技(天津)有限公司 +1

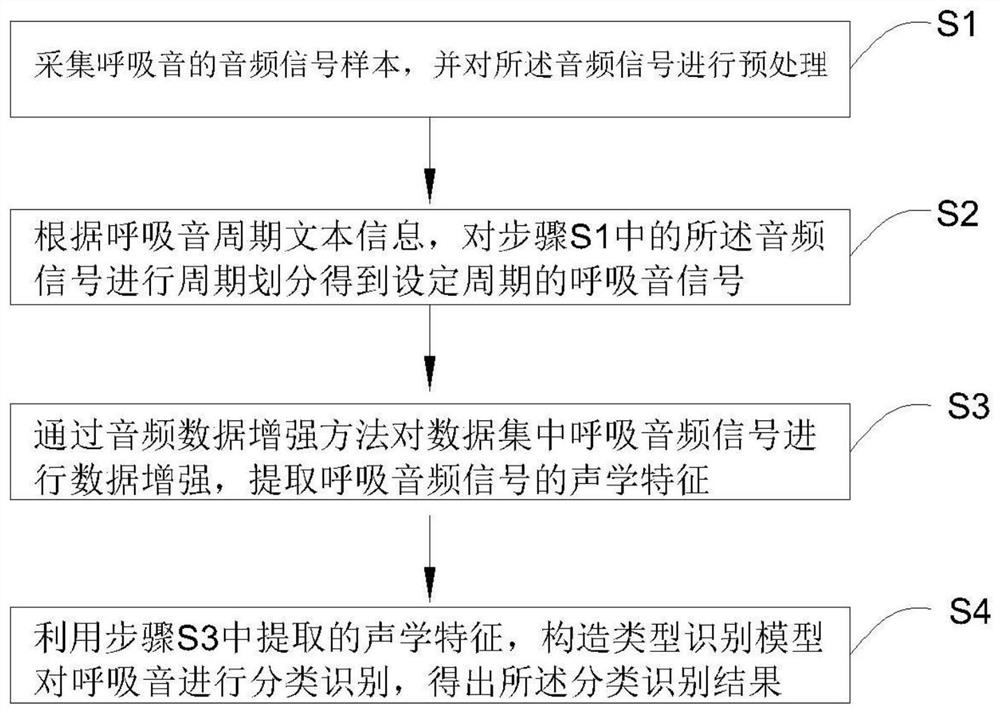

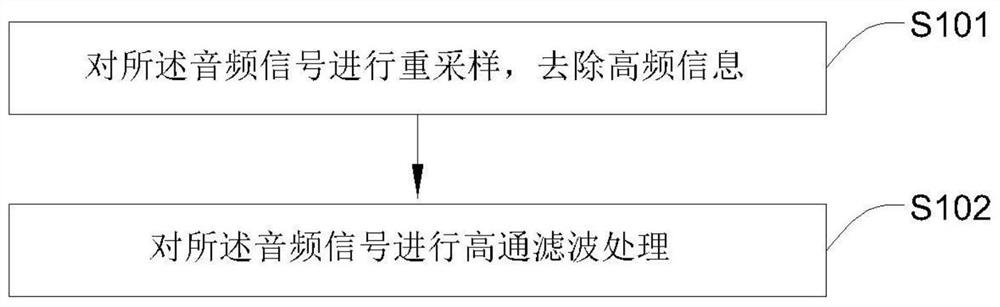

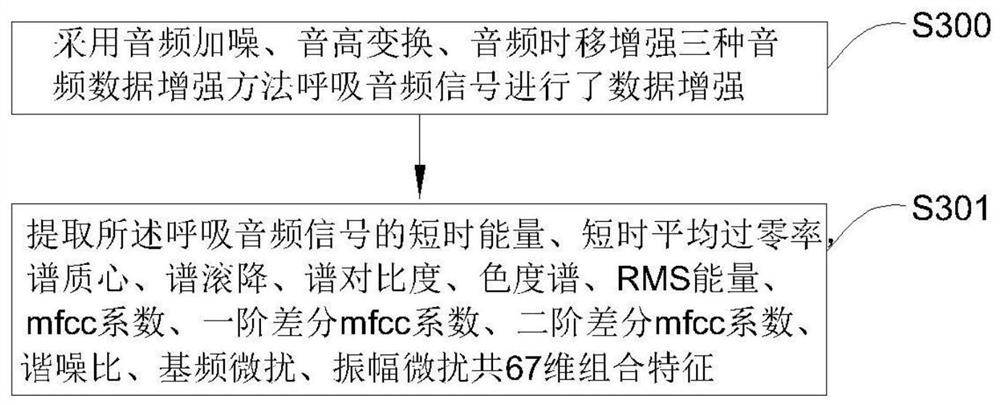

Breathing sound classification method based on deep learning

Inactive | CN111640439A | Targeted | Improve classification recognition rate | Speech analysis | Sound classification | Breathing sounds

The invention relates to the field of audio signal recognition, and in particular to a breathing sound classification method based on deep learning. The method comprises the steps: S1, acquiring an audio signal sample of breathing sound, and preprocessing the audio signal; S2, according to breathing sound period text information, performing period division on the audio signal from step S1 to obtain breathing sound signals of a set period; performing data enhancement on the breathing audio signals in the data set through an audio data enhancement method, and extracting acoustic features of the breathing audio signals; and S3, constructing a type recognition model using the acoustic features extracted in step S2 to classify and recognize the breathing sound and obtain a classification and recognition result.

Owner:NANKAI UNIV

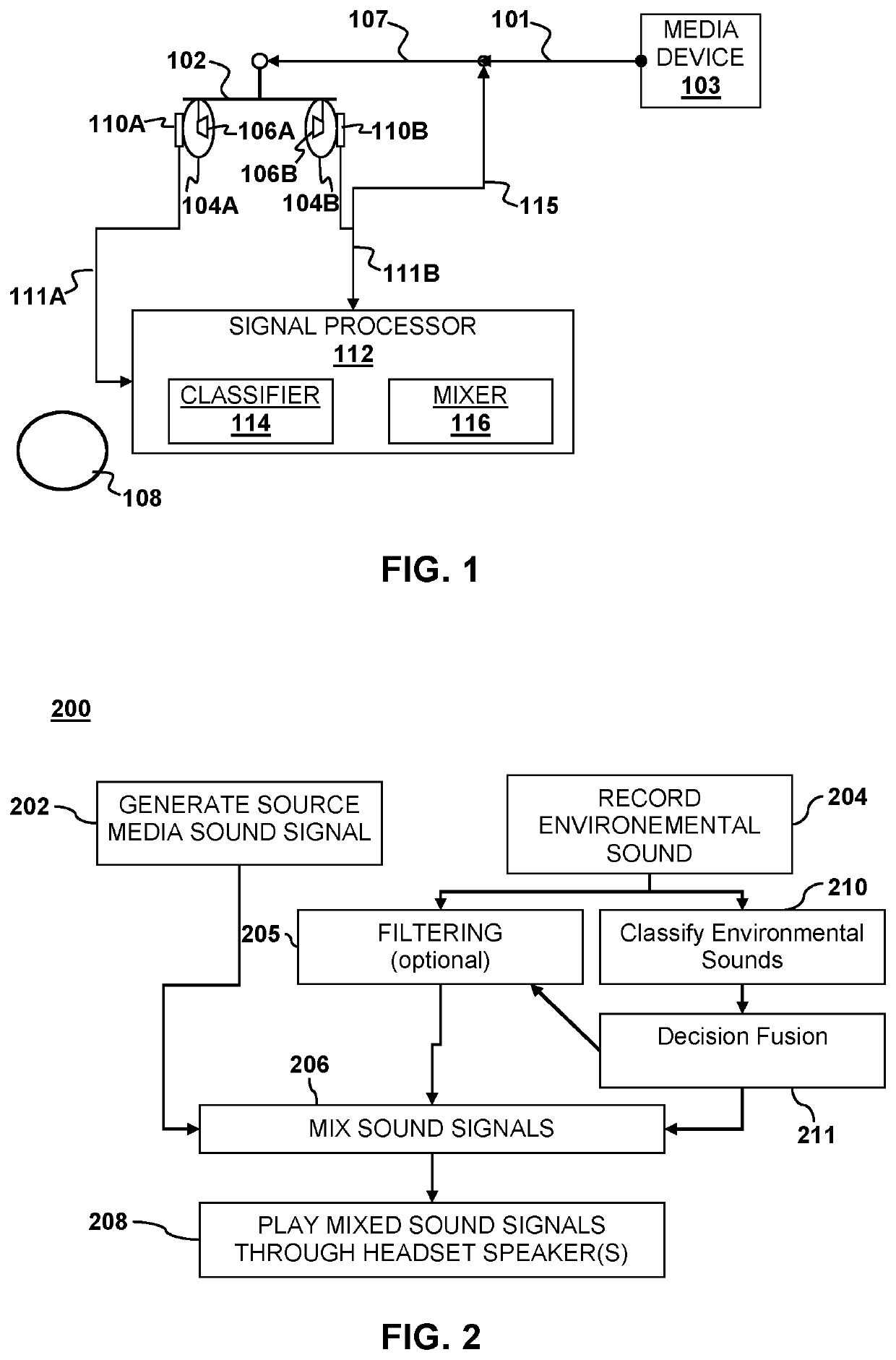

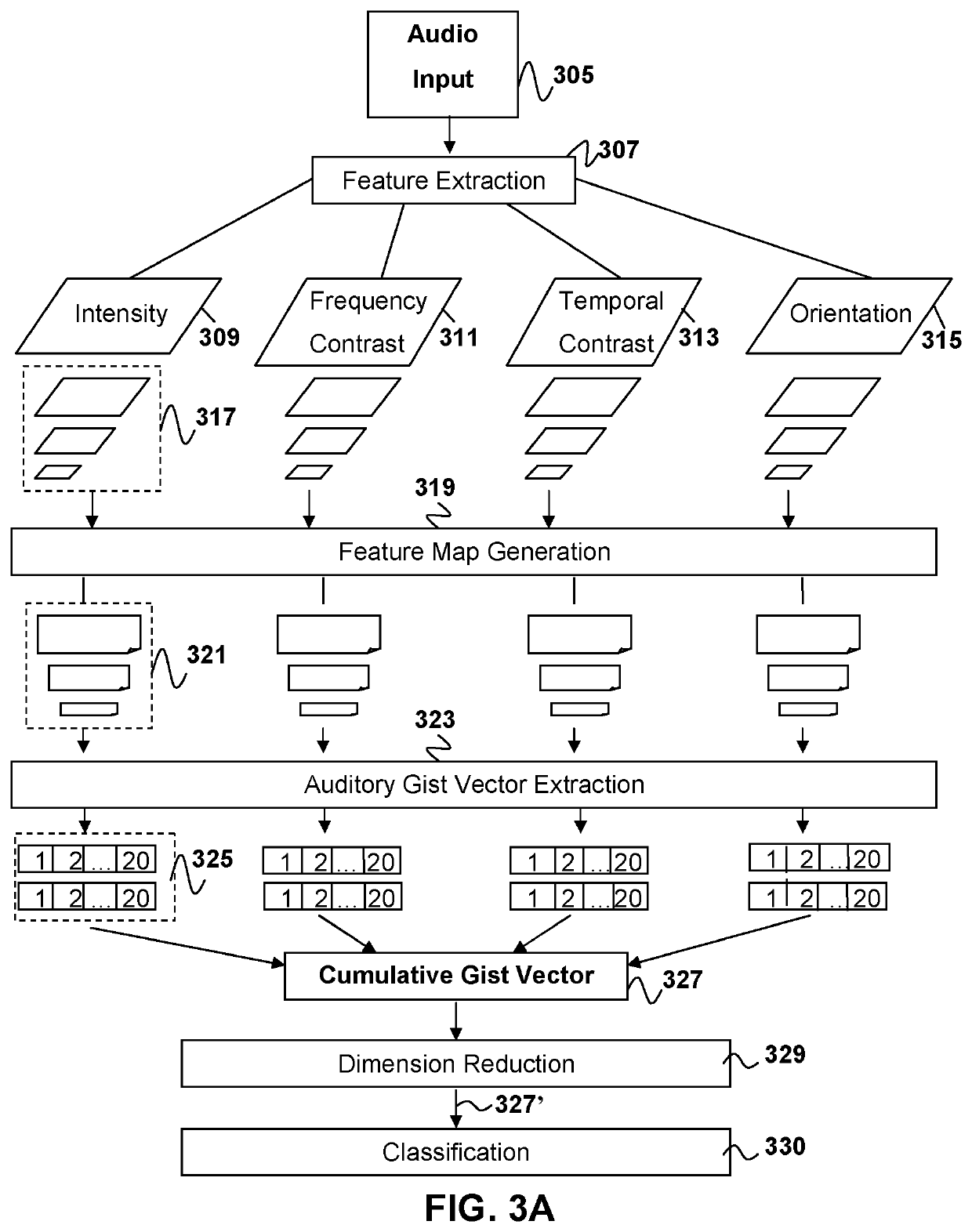

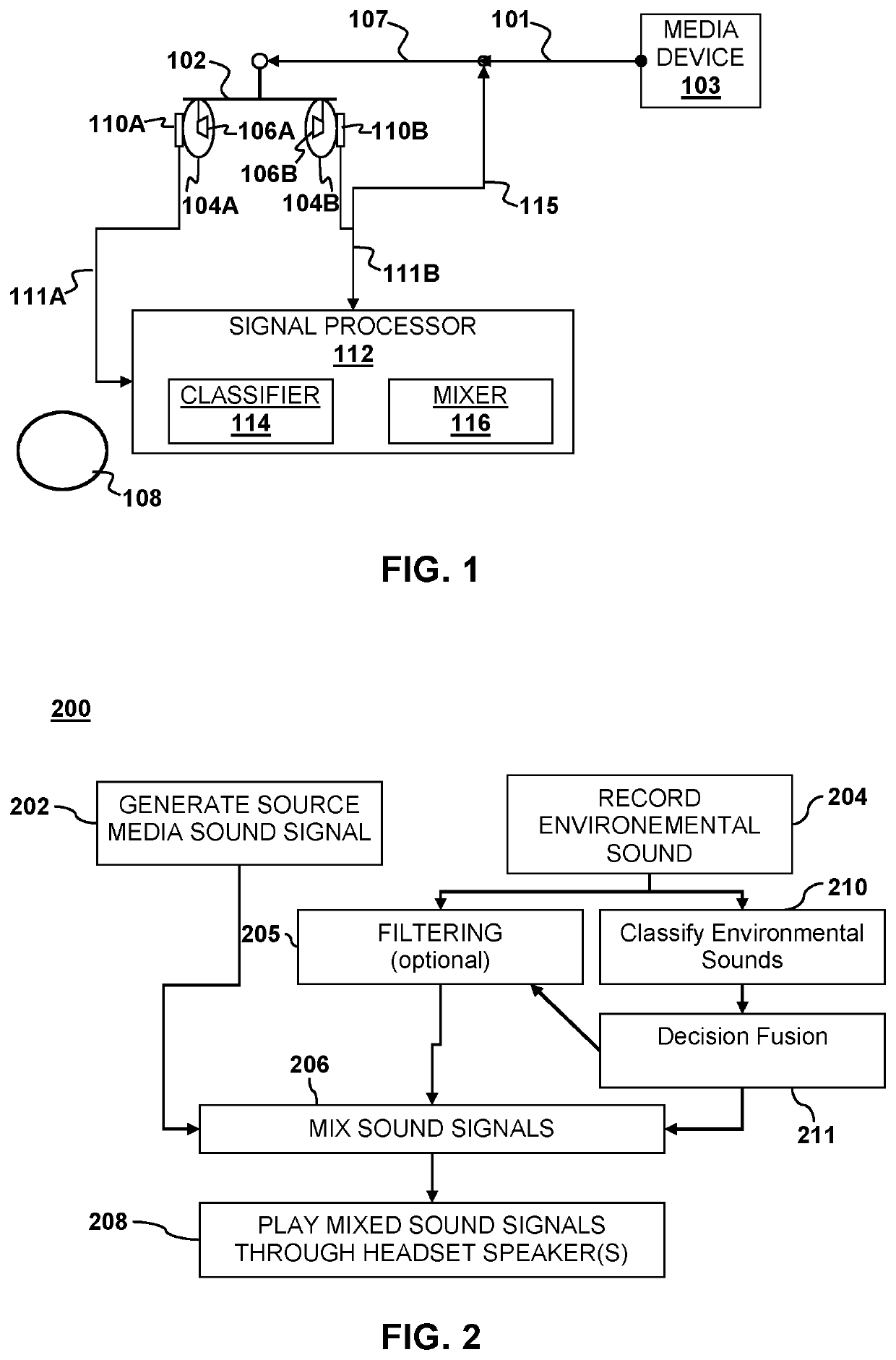

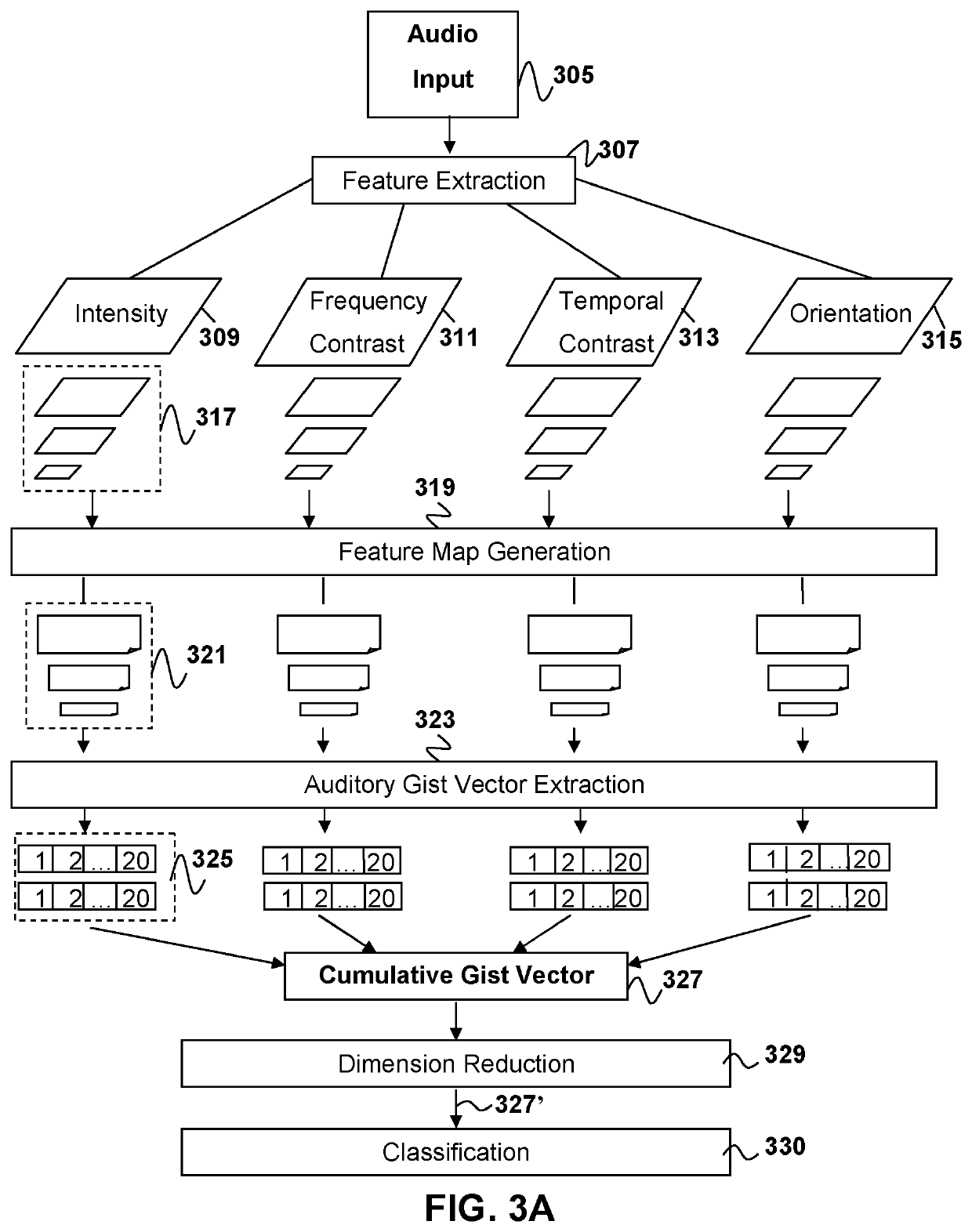

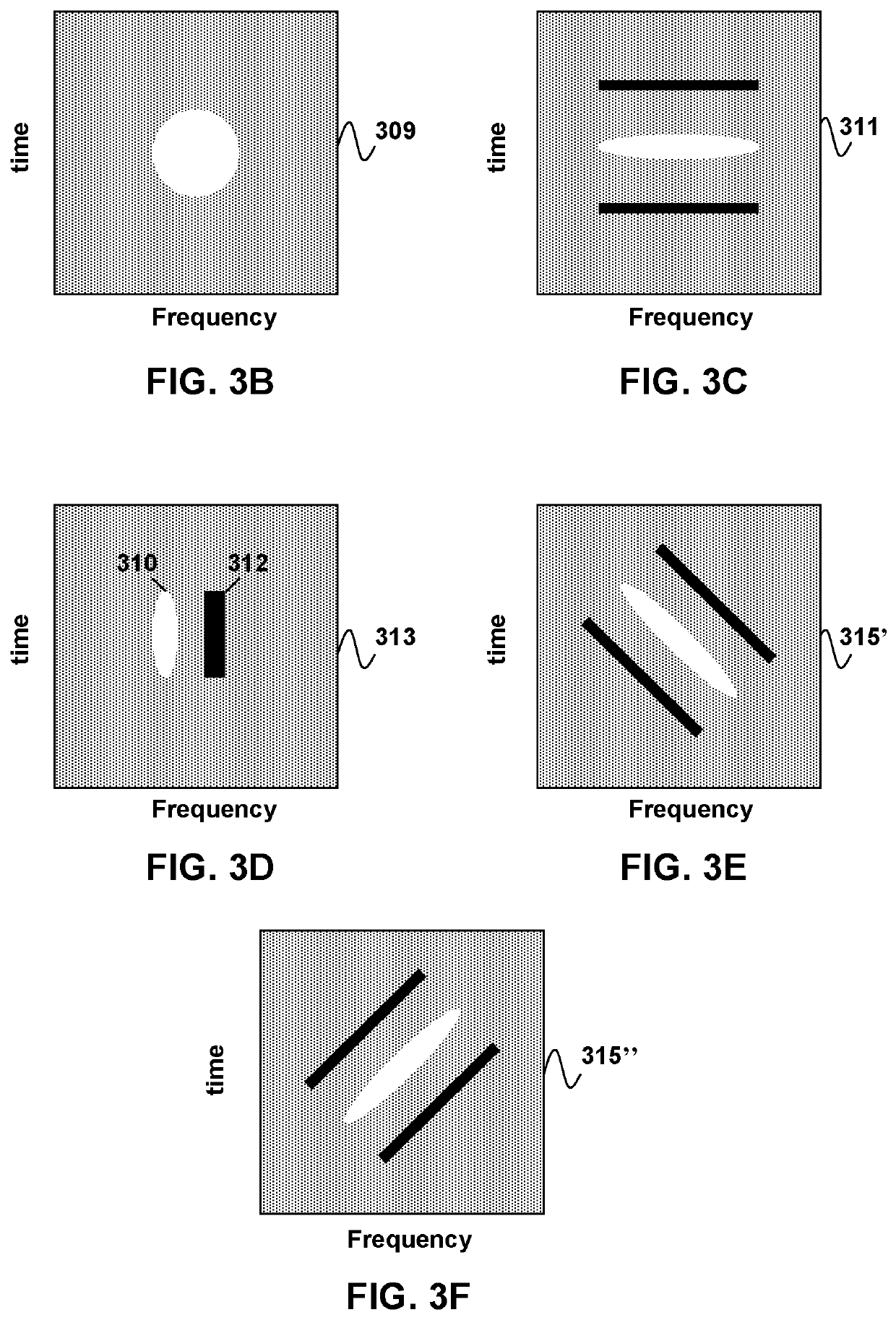

Ambient sound activated device

Active | US20200036354A1 | Manually-operated gain control | Signal processing | Environmental sounds | Sound classification

In a device having at least one microphone and one or more speakers, environmental sound may be recorded using the microphone, classified and mixed with source media sound to produce a mixed sound depending on the classification. The mixed sound may then be played over the one or more speakers.

Owner:SONY COMPUTER ENTERTAINMENT INC

Sound classification system and method capable of adding and correcting a sound type

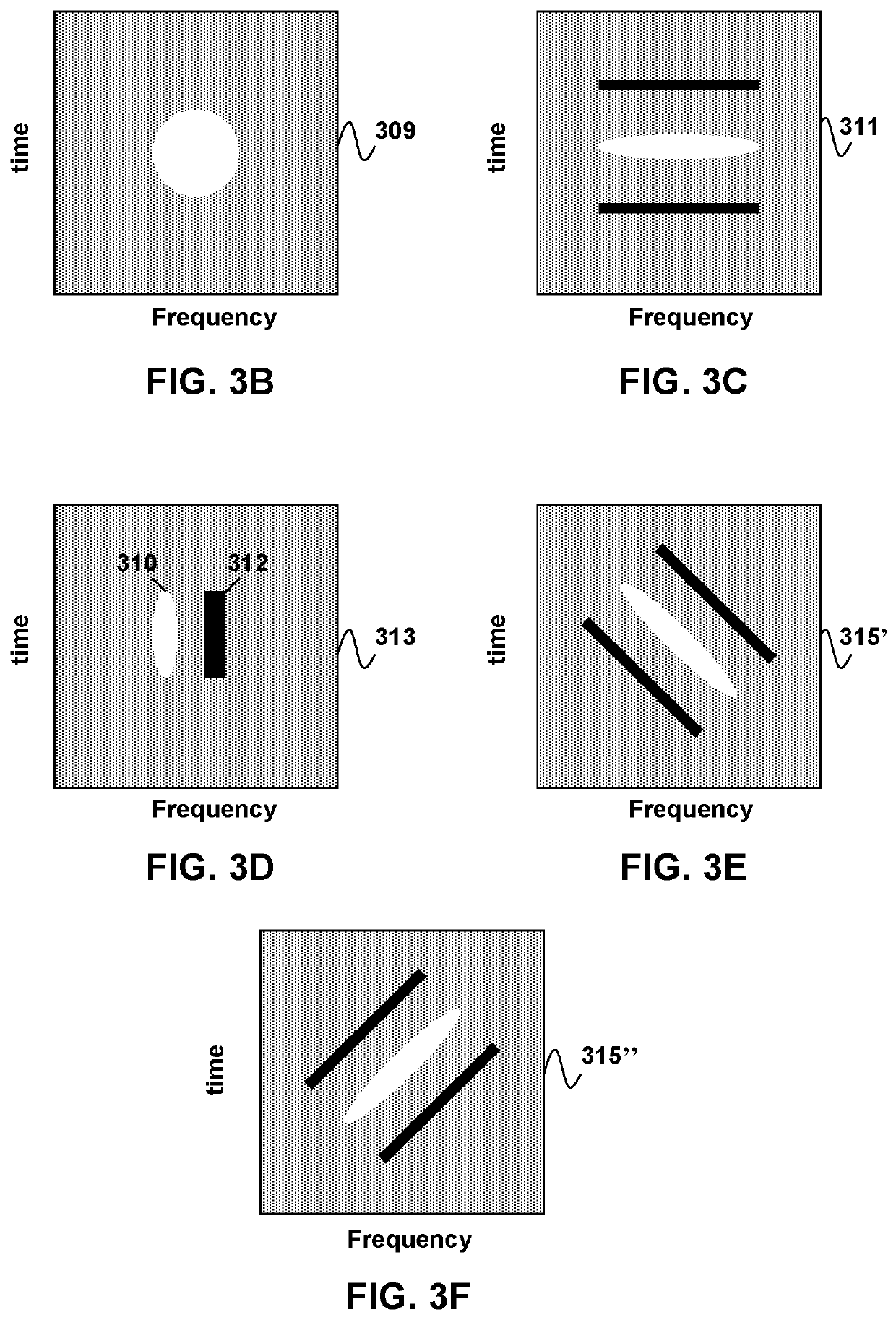

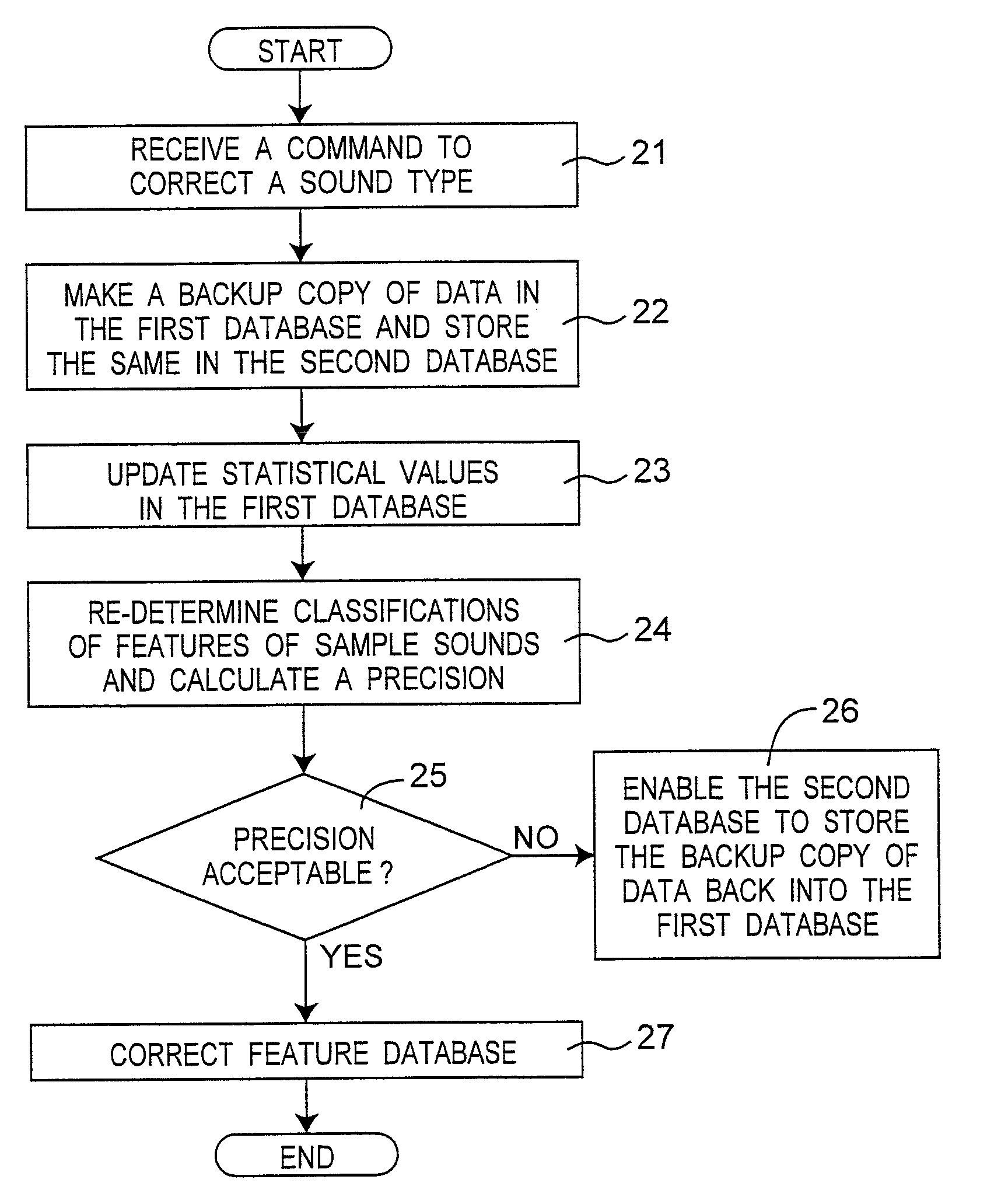

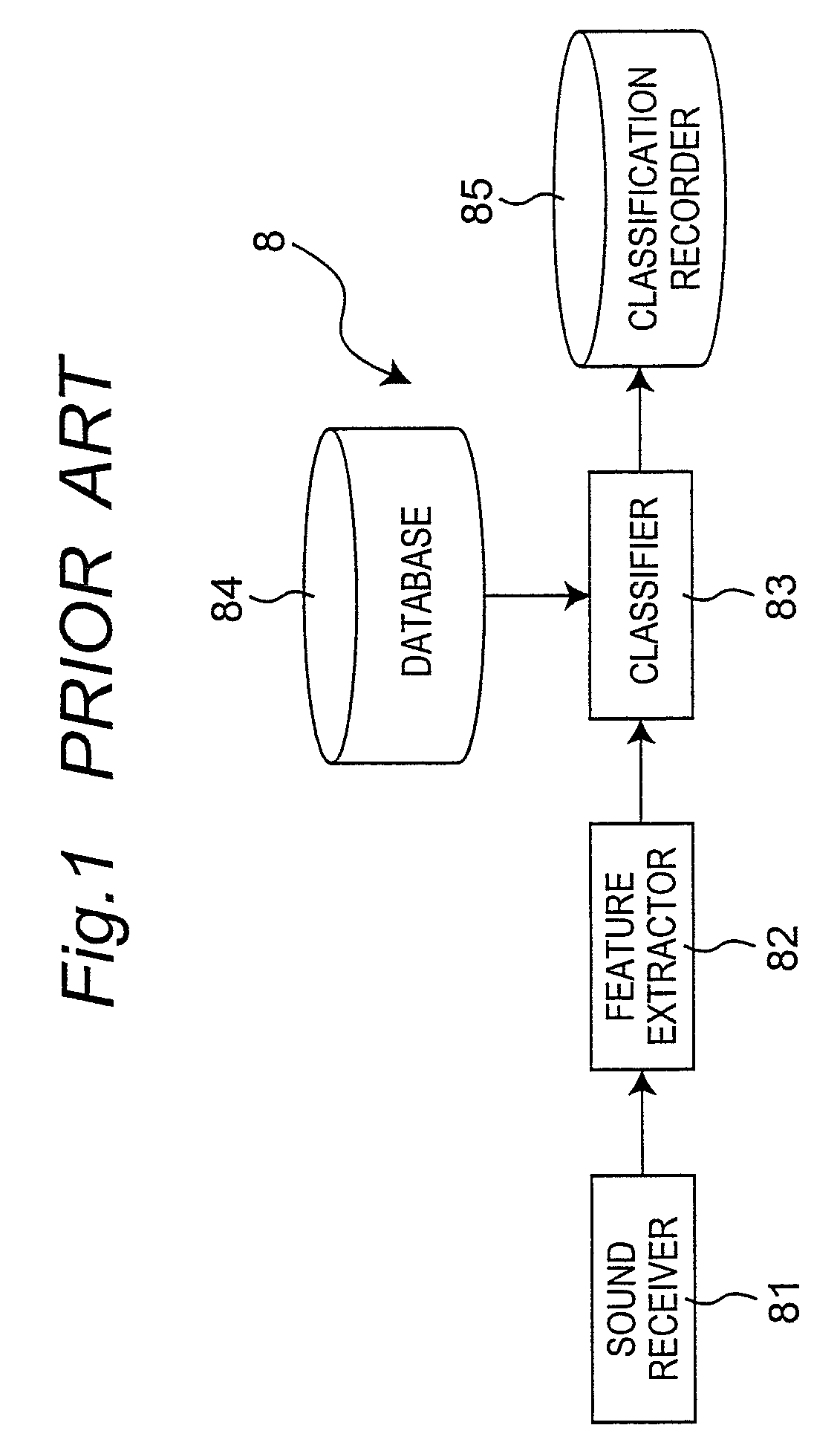

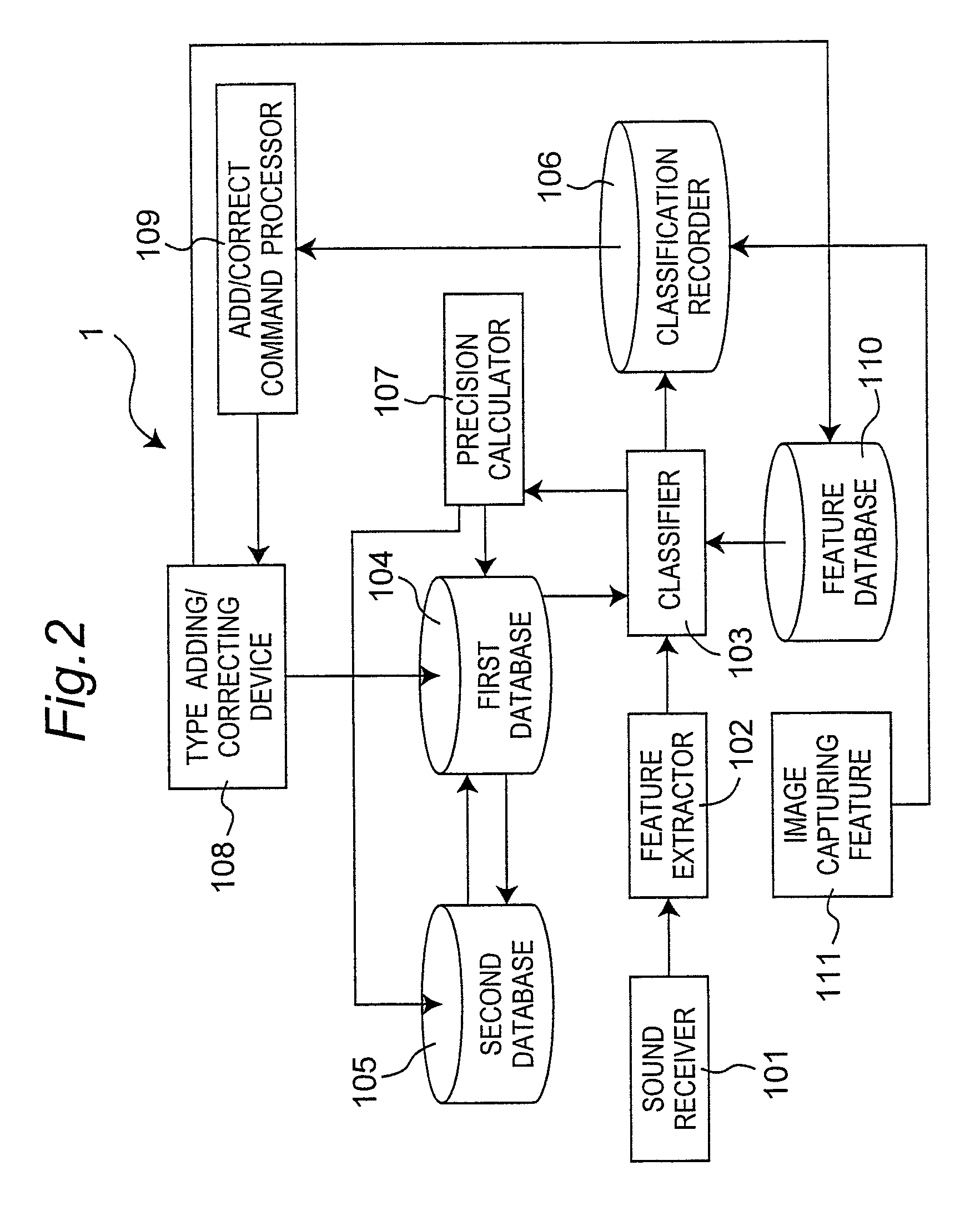

Inactive | US8037006B2 | Type accurate | Digital computer details | Digital data | Sound classification | Computer science

A sound classification system for adding and correcting a sound type is disclosed. When the add / correct command processor receives a command to add or correct a sound type, the data in the first database is stored in the second database, and the type adding / correcting device adds the feature of the sound to the first database, and re-calculates the statistical values. Besides, the classifier re-classifies the sample sounds, and the precision calculator calculates a ratio of accurate classification. When the ratio is high, the type adding / correcting device stores, in the feature database, the feature of the sound for which a type is to be added or corrected. When the ratio is low, the second database restores the data back to the first database.

Owner:PANASONIC CORP
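The add/correct flow with rollback described in the abstract above can be sketched as follows. This is a hedged illustration and not the patented design: the `SoundTypeDatabase` class, the nearest-centroid classifier, the use of per-type means as the "statistical values" and the `min_accuracy` threshold are all assumptions.

```python
import copy
from statistics import fmean


class SoundTypeDatabase:
    """Feature store with add/correct and rollback on low accuracy (hypothetical sketch)."""

    def __init__(self, min_accuracy=0.8):
        self.features = {}  # first database: sound type -> list of feature vectors
        self.min_accuracy = min_accuracy  # assumed acceptance threshold

    def centroids(self):
        # Assumed "statistical values": the per-type mean of each feature dimension.
        return {t: [fmean(col) for col in zip(*vecs)] for t, vecs in self.features.items()}

    def classify(self, vec):
        cents = self.centroids()
        return min(cents, key=lambda t: sum((a - b) ** 2 for a, b in zip(vec, cents[t])))

    def accuracy(self, samples):
        # samples: list of (feature_vector, true_type) pairs to be re-classified
        hits = sum(self.classify(vec) == label for vec, label in samples)
        return hits / len(samples)

    def add_or_correct(self, vec, sound_type, samples):
        backup = copy.deepcopy(self.features)  # plays the role of the second database
        self.features.setdefault(sound_type, []).append(vec)
        if self.accuracy(samples) >= self.min_accuracy:
            return True          # ratio is high: keep the new feature
        self.features = backup   # ratio is low: restore the previous data
        return False
```

The deep copy stands in for the second database, so a rejected addition leaves the first database exactly as it was before the command.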

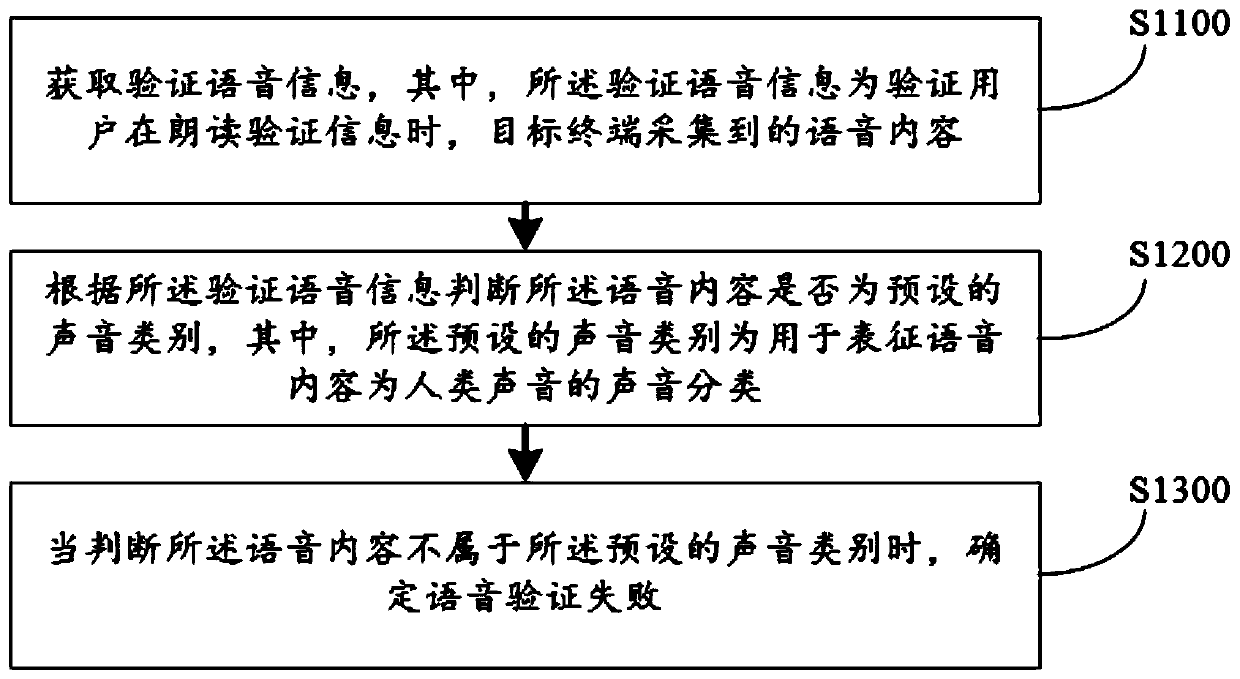

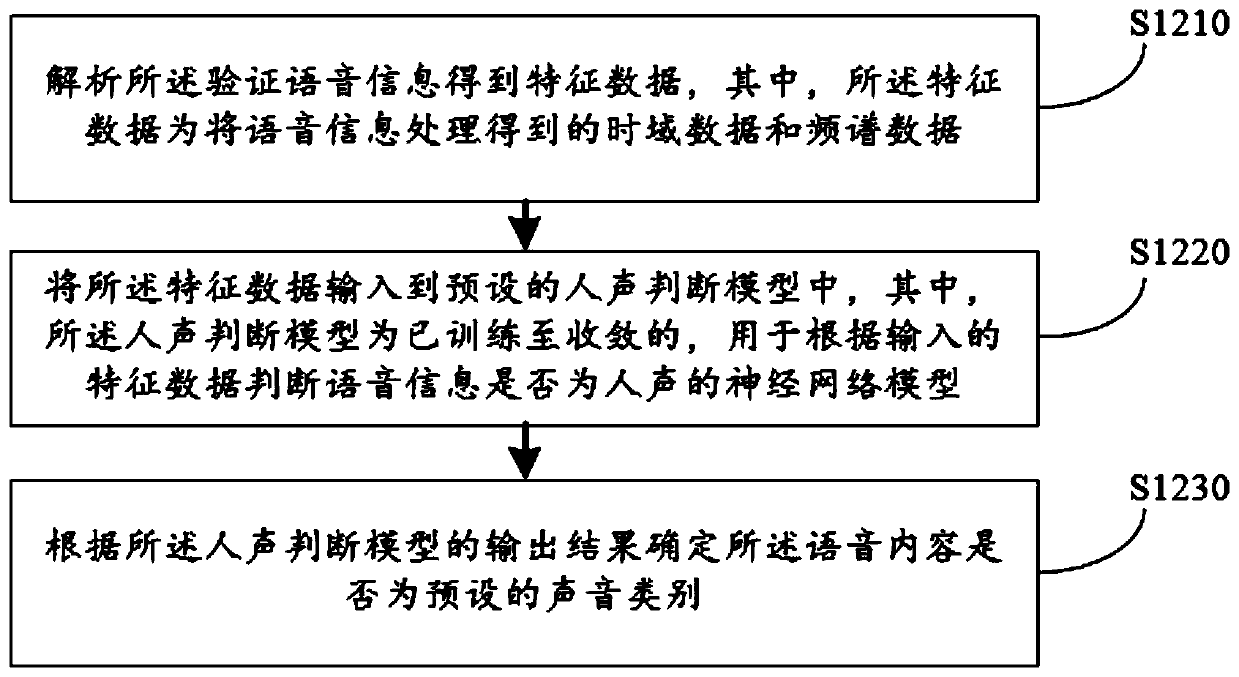

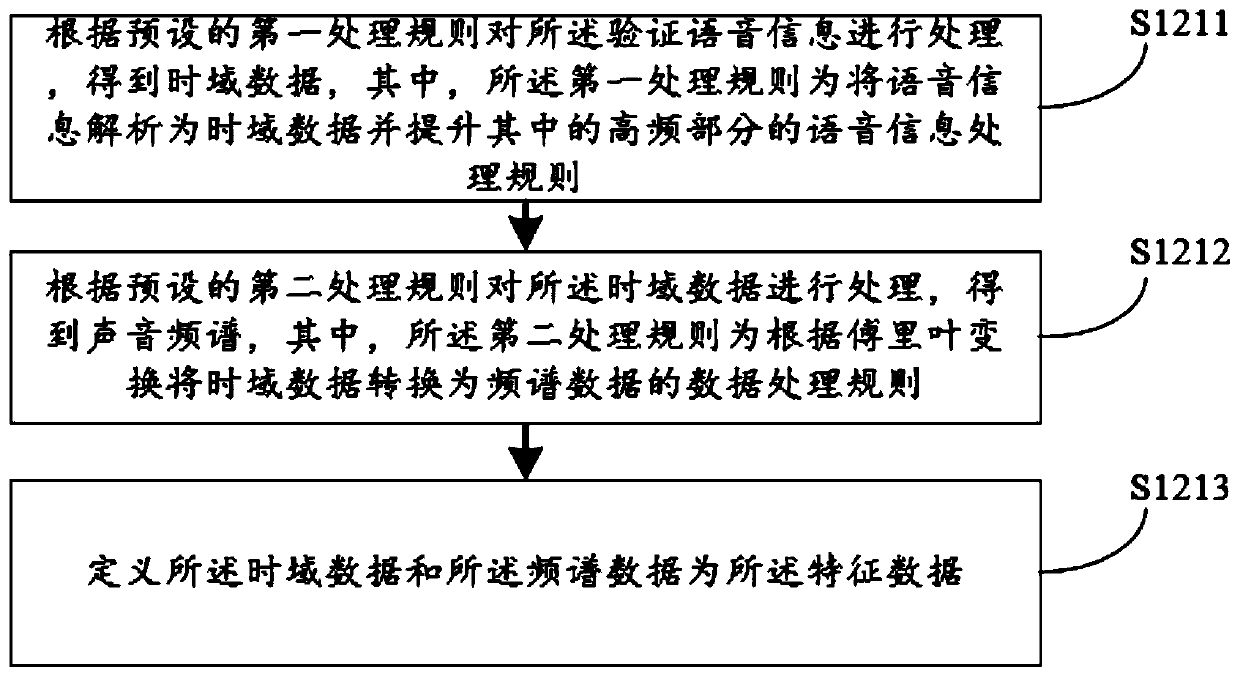

Voice verification method, voice verification device, computer equipment and storage medium

Pending | CN109801638A | Guarantee authenticity | Improve security | Speech analysis | Digital data authentication | Sound classification | Computer terminal

An embodiment of the invention discloses a voice verification method, a voice verification device, computer equipment and a storage medium. The method includes the steps of: acquiring verification voice information; judging, according to the verification voice information, whether the voice content belongs to a preset sound category; and determining that voice verification fails when the voice content does not belong to the preset sound category. The verification voice information is the voice content acquired by a target terminal when a verification user reads the verification information, and the preset sound category is a sound classification indicating that the voice content is a human voice. The method verifies whether the verification voice is a real human voice, so malicious users such as machines, AI (artificial intelligence) and crawlers can be effectively screened out, attacks by malicious users on networks and platforms are prevented, the validity and authenticity of verification users are ensured, and voice verification security is improved.

Owner:PING AN TECH (SHENZHEN) CO LTD

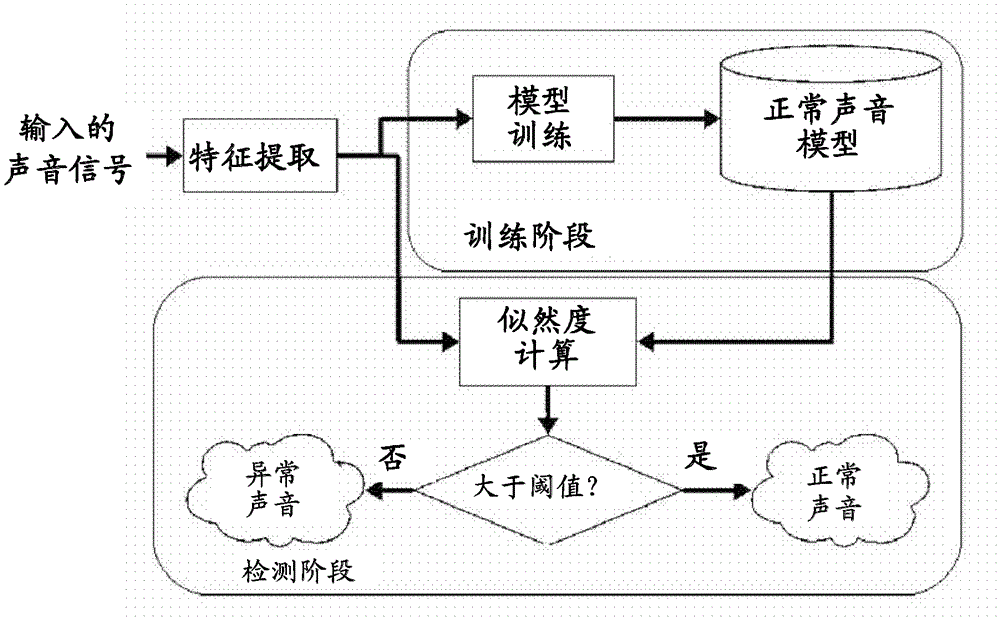

Method and device for generating sound classifier and detecting abnormal sound, and monitoring system

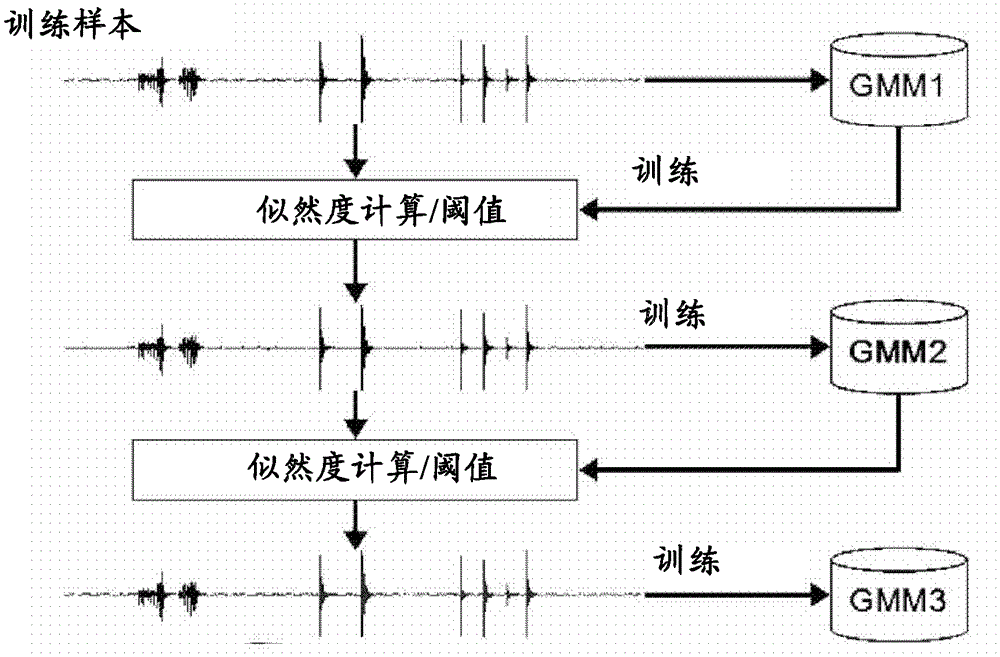

The invention relates to methods and devices for generating a sound classifier and detecting an abnormal sound, and a monitoring system. The sound classifier comprises at least one classifier grade. For generating each sound classifier grade, the method for generating the sound classifier comprises the following steps: generating, based on an input sound sample, a normal sound model; calculating, based on the input sound sample and the normal sound model, a first threshold value, wherein the input sound sample is segmented into a first normal sound sample and a first abnormal sound sample according to the normal sound model and the first threshold value; generating, based on a specific abnormal sound sample and the first abnormal sound sample, an abnormal sound model; and calculating, based on the first normal sound sample and the abnormal sound model, a second threshold value, wherein the first normal sound sample is segmented into a second normal sound sample and a second abnormal sound sample according to the abnormal sound model and the second threshold value. Each classifier grade comprises the normal sound model, the first threshold value, the abnormal sound model and the second threshold value.

Owner:CANON KK

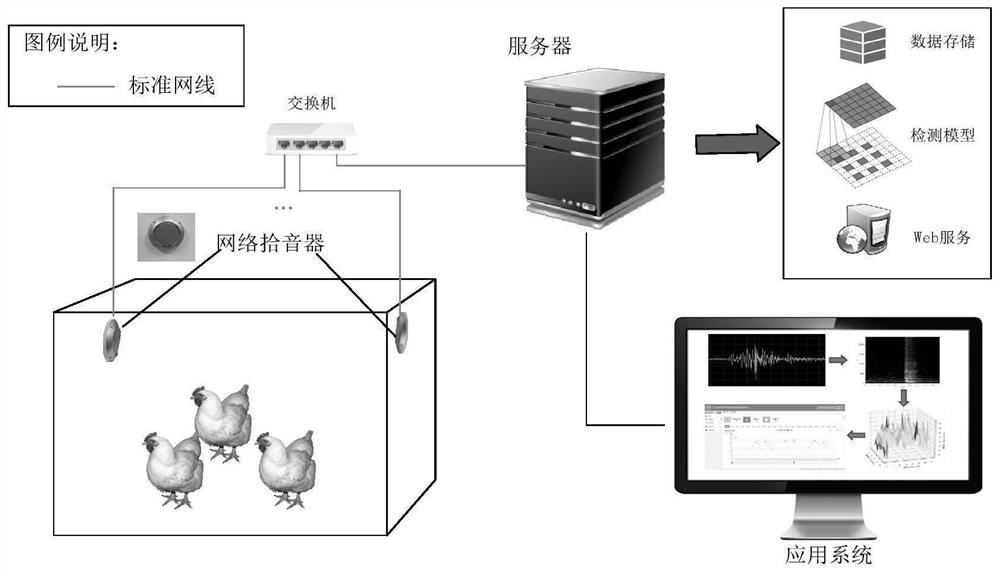

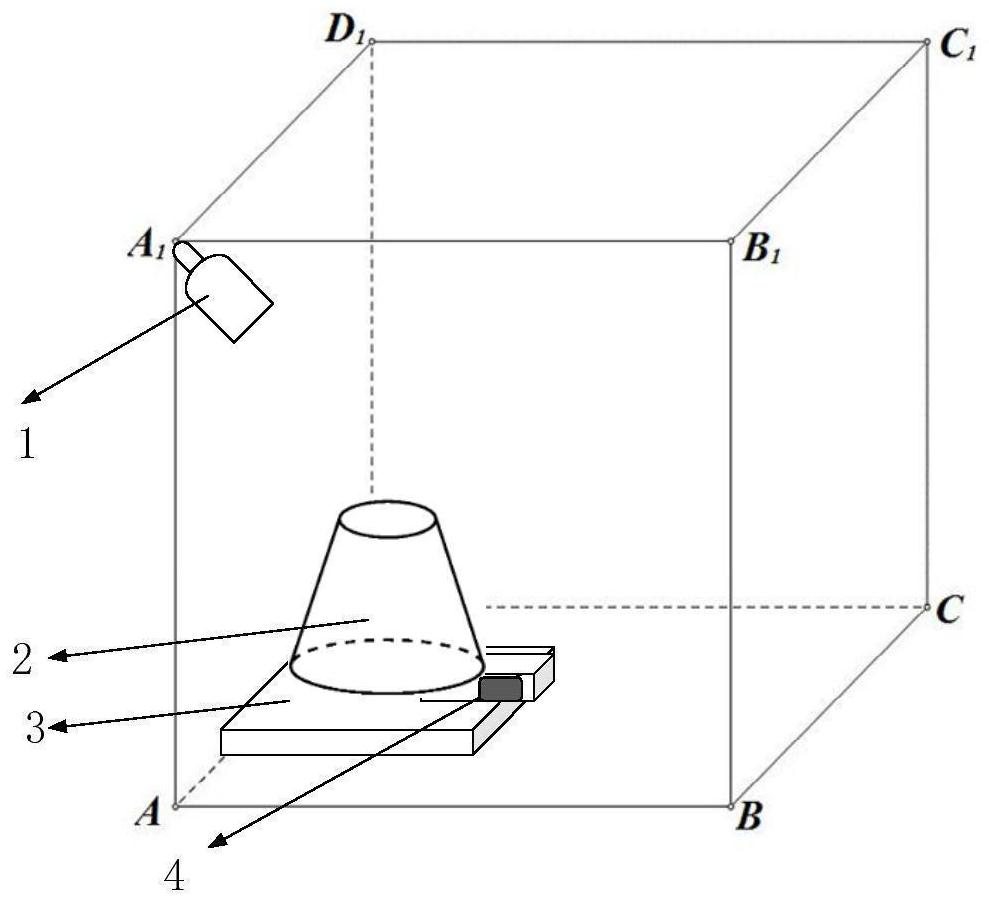

Broiler feed intake detection system based on audio technology

Pending | CN112331231A | High precision | Avoiding the Current Situation of Manually Measuring Group Feed Intake | Speech analysis | Aviculture | Support vector machine | Sound classification

The invention discloses a broiler feed intake detection system based on audio technology. The system comprises a sound collection chamber, a switch, an upper computer and a server, and is characterized in that the sound collection chamber is used for collecting broiler pecking audio data; the switch is used for transmitting the broiler pecking audio data; the upper computer is connected with the server and reads the audio data at regular intervals; a broiler pecking sound classification and recognition model based on a one-class support vector machine (OC-SVM), running on the server, divides the sound into pecking sound and non-pecking sound, using power spectral density as the sound recognition feature to judge pecking and non-pecking accurately; and the broiler feed intake is obtained from the relationship between the number of pecks and the feed intake. With audio detection technology as the carrier, the relationship between the number of pecks and feed intake is analyzed and determined from the pecking of the broilers during feeding, and the feed intake is calculated by exploiting the high correlation between the two.

Owner:NANJING AGRICULTURAL UNIVERSITY

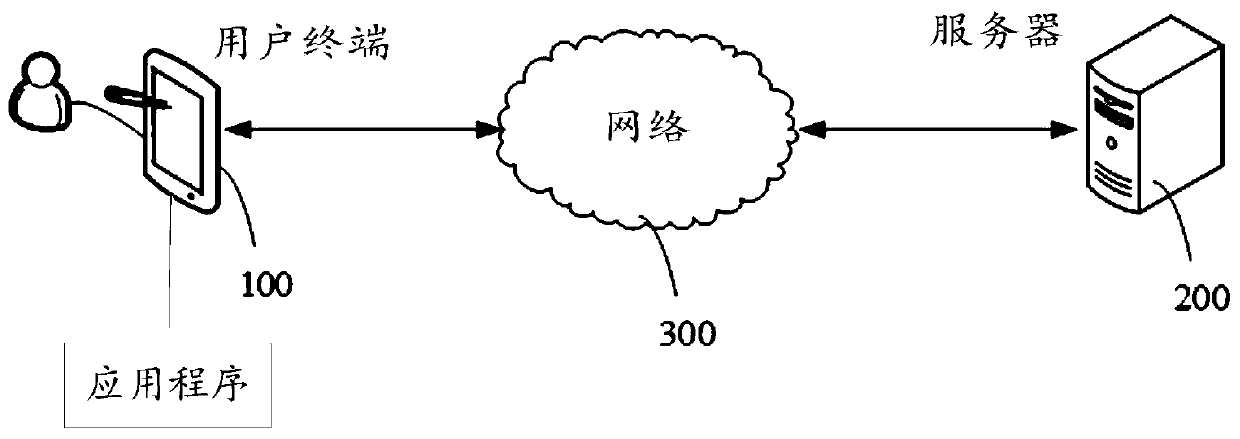

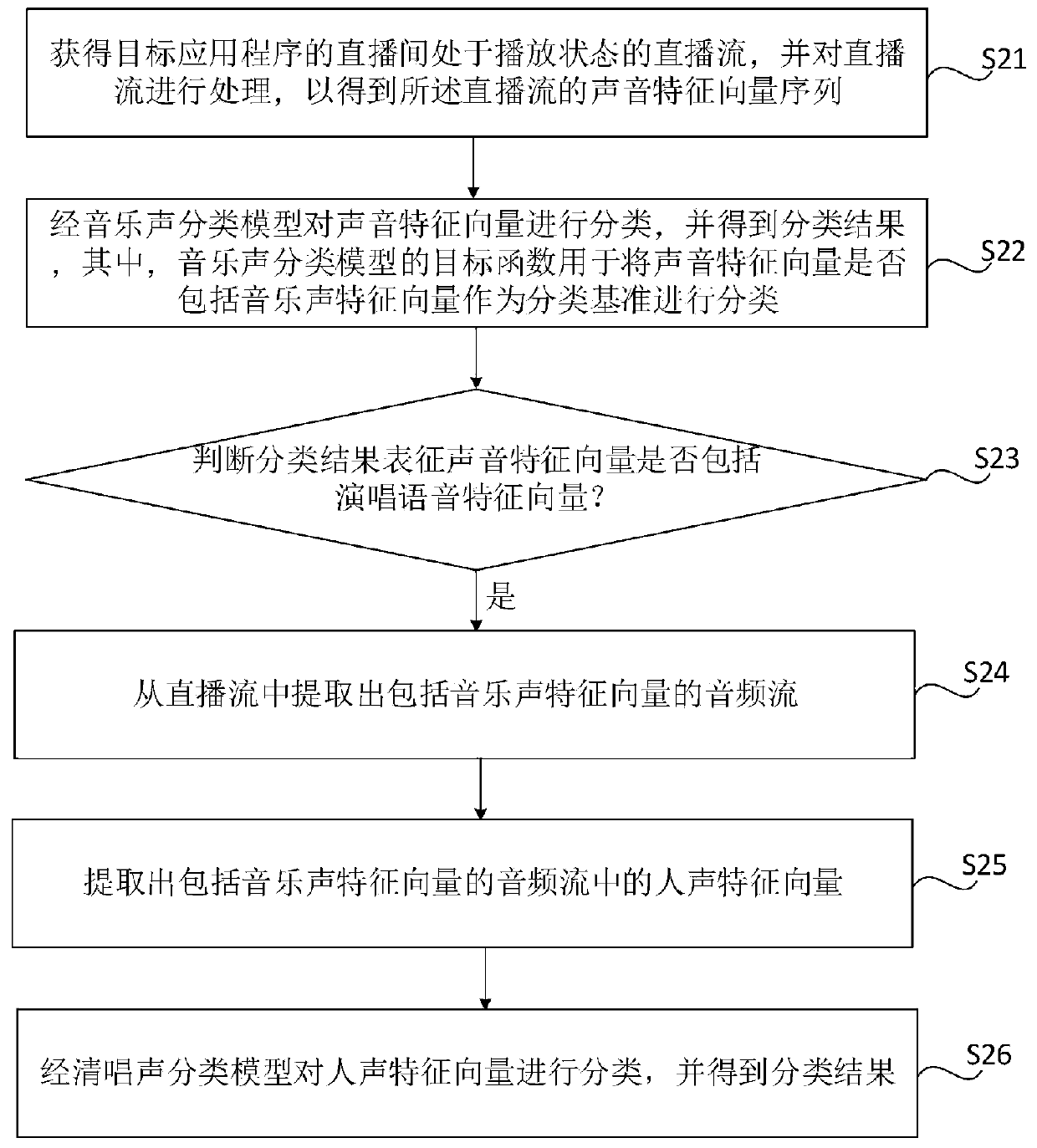

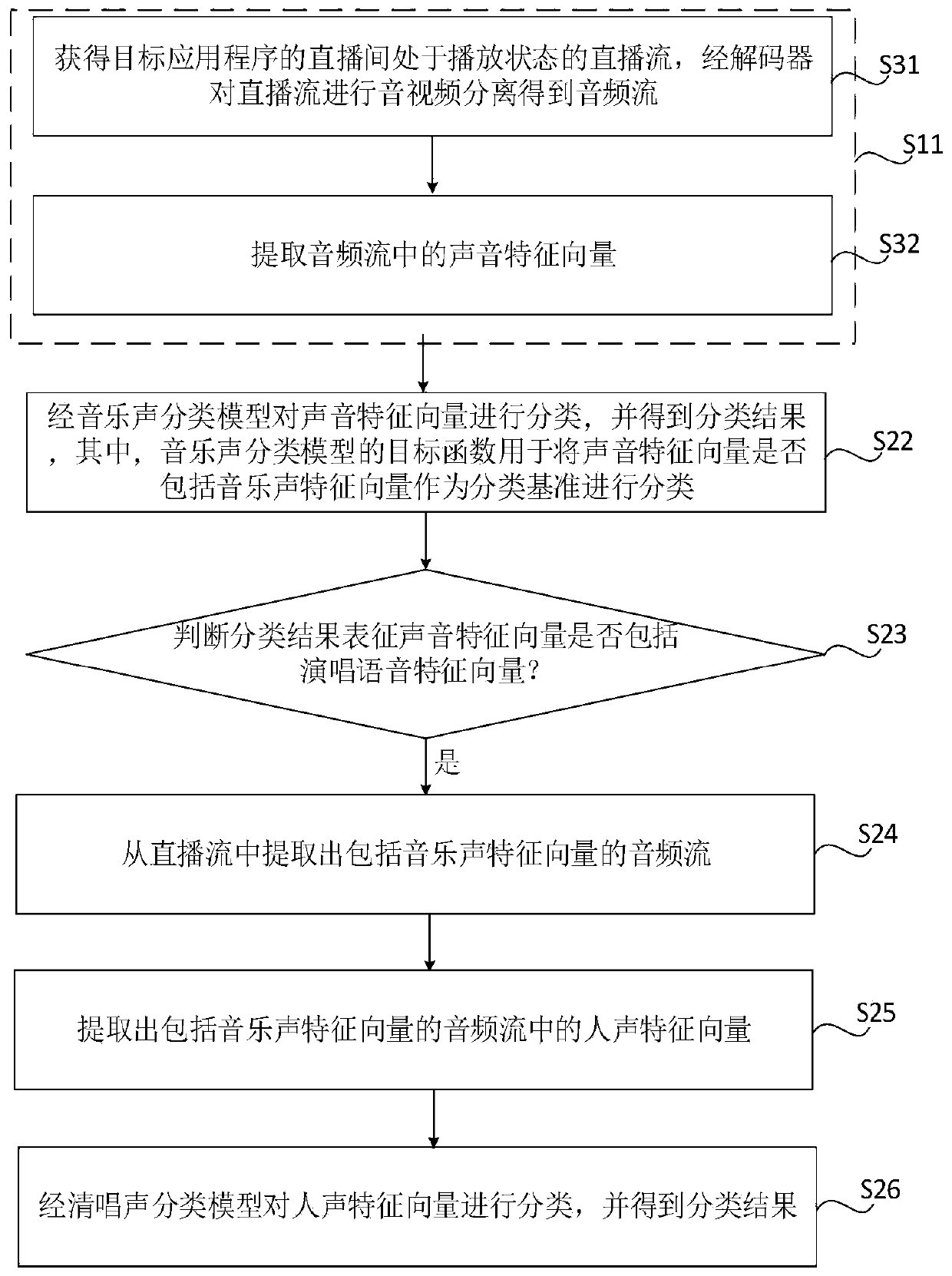

Direct broadcasting room singing identification method and device, server and storage medium

Active | CN111147871A | Accurately determine | The classification result is accurate | Electrophonic musical instruments | Speech analysis | Sound classification | Broadcasting

The invention relates to a direct broadcasting room singing identification method and device, a server and a storage medium, and relates to the field of direct broadcasting. Firstly, a sound feature vector sequence is classified through a music sound classification model, and a classification result is obtained; if the classification result represents that the sound feature vector sequence comprises a music sound feature vector, an audio stream comprising the music sound feature vector is extracted from the direct broadcasting stream; then a human voice feature vector in the audio stream including the music voice feature vector is extracted; and finally, the human voice feature vectors are classified through a singing voice classification model to obtain a classification result. With the above analysis, the sound feature vector sequences are classified through the music sound classification model, and then the human voice feature vectors are classified through the singing sound classification model so that the obtained classification result is more accurate, and whether the anchor in the current direct broadcasting room is singing or not can be determined more accurately.

Owner:BEIJING DAJIA INTERNET INFORMATION TECH CO LTD

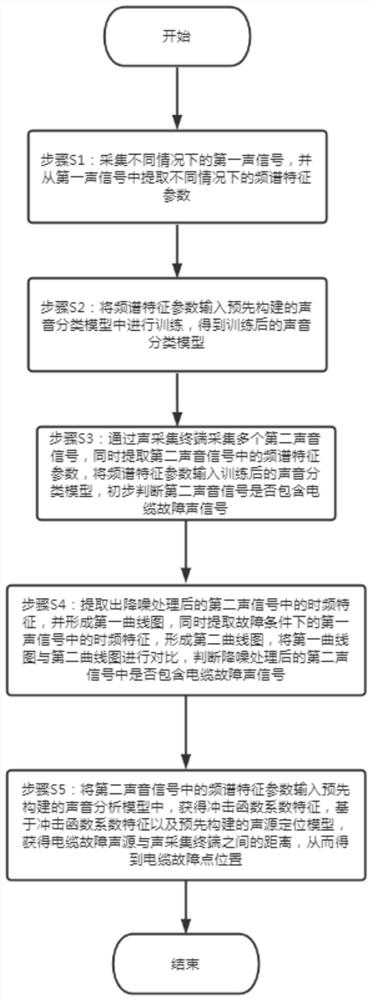

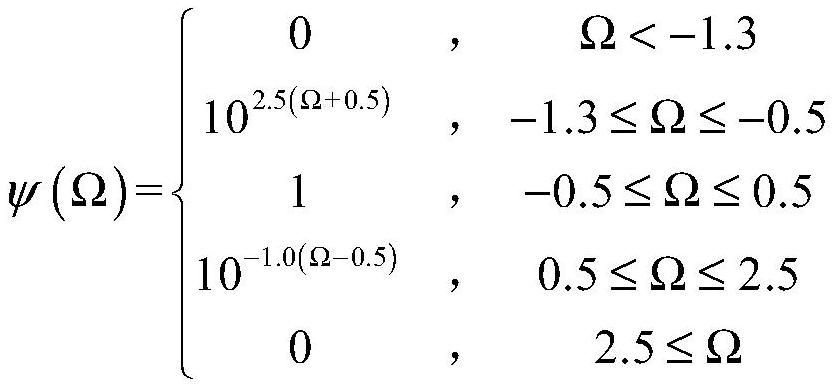

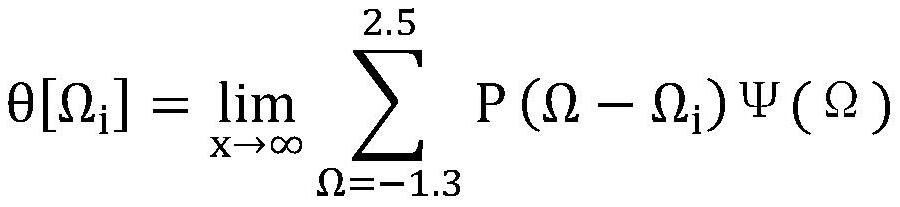

Cable fault point rapid positioning method and device

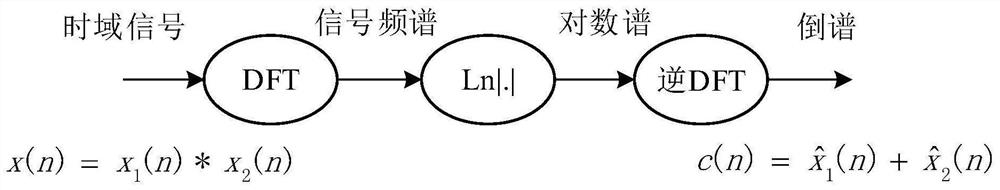

Inactive | CN113466616A | Low cost | Solve the problem of inaccurate distance measurement | Fault location by conductor types | Sound sources | Frequency spectrum

The invention provides a cable fault point rapid positioning method and device. The fault point positioning method comprises the steps of collecting a plurality of second sound signals, extracting spectrum characteristic parameters, inputting the spectrum characteristic parameters into a trained sound classification model, and preliminarily judging whether the second sound signals comprise a cable fault sound signal or not; performing noise reduction processing on the second sound signals through a noise reducer, and judging whether the second sound signals after noise reduction processing contain a cable fault sound signal or not; and if the second sound signals after noise reduction processing contain the cable fault sound signal, inputting the frequency spectrum characteristic parameter in the second sound signals into a pre-constructed sound analysis model to obtain an impact function coefficient characteristic, and based on the impact function coefficient characteristic and a pre-constructed sound source positioning model, obtaining the distance between the cable fault sound source and a sound acquisition terminal, and then obtaining the position of the cable fault point.

Owner:海南电网有限责任公司乐东供电局

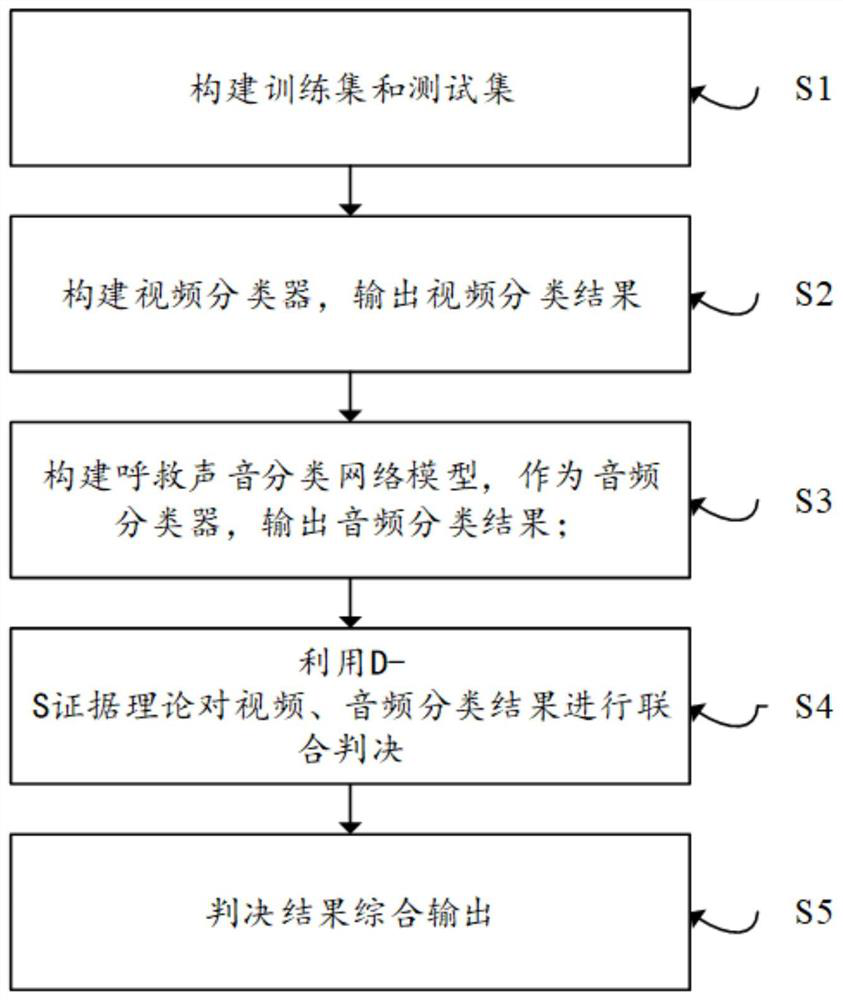

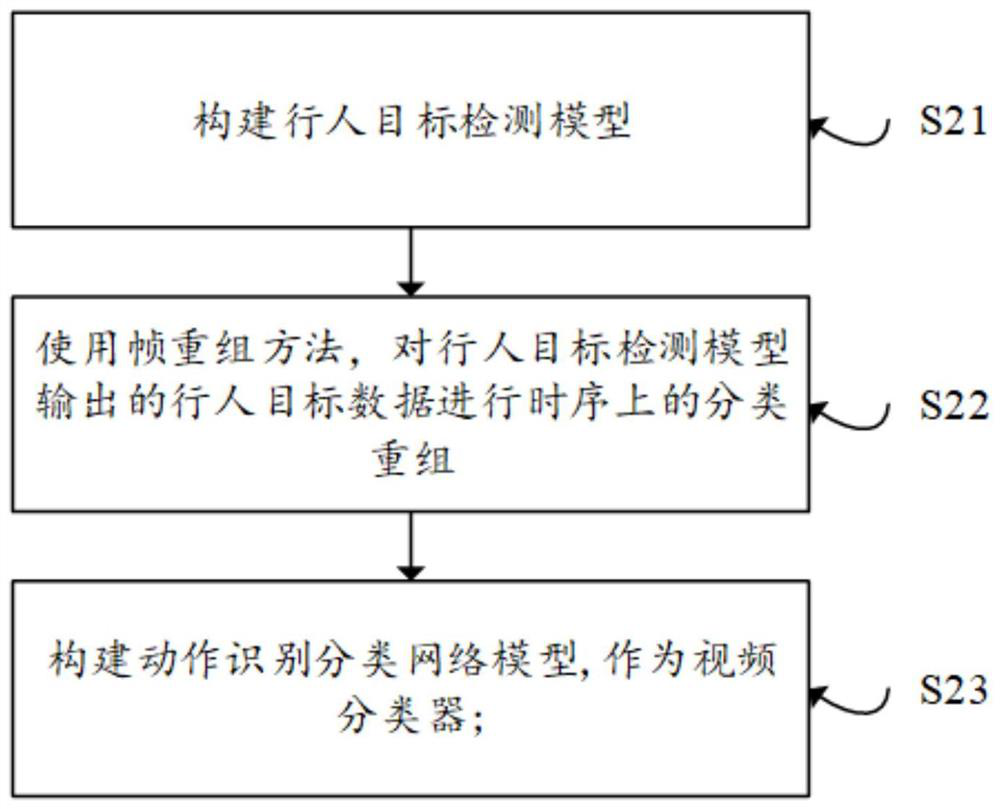

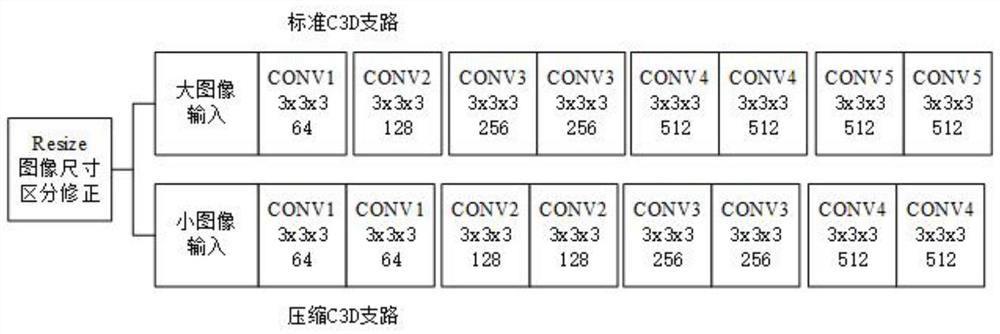

Audio and video combined pedestrian accidental tumble monitoring method based on neural network

Pending | CN113887446A | Improve object detection accuracy | Reduce computing time | Character and pattern recognition | Neural architectures | Video monitoring | Sound classification

The invention provides an audio and video combined pedestrian accidental tumble monitoring method based on a neural network. The method comprises the steps of constructing an initial training set and a test set; constructing a pedestrian target detection model; carrying out time sequence classification recombination on the pedestrian targets output by the pedestrian target detection model by using a frame recombination method; constructing an action recognition classification network model as a video classifier; constructing a call-for-help sound classification network model as an audio classifier; performing joint judgment on video and audio classification results by using a D-S evidence theory; and comprehensively outputting a judgment result. According to the invention, the tumble accident of the pedestrian is monitored by using the audio and video monitoring data, assistance is provided for public safety behavior analysis, and the loss of life and property is reduced.

Owner:黑龙江雨谷科技有限公司
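The joint judgment step in the abstract above relies on D-S (Dempster-Shafer) evidence theory. As a minimal sketch of Dempster's rule of combination for two classifiers, the following assumes a two-hypothesis frame (`fall`, `normal`) and made-up mass values; neither the frame nor the numbers come from the patent.

```python
from itertools import product


def dempster_combine(m1, m2):
    """Combine two mass functions (dict: frozenset of hypotheses -> mass) with Dempster's rule."""
    combined = {}
    conflict = 0.0
    for (a, mass_a), (b, mass_b) in product(m1.items(), m2.items()):
        intersection = a & b
        if intersection:
            combined[intersection] = combined.get(intersection, 0.0) + mass_a * mass_b
        else:
            conflict += mass_a * mass_b  # mass assigned to contradictory evidence
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict; the rule is undefined")
    # Normalise by the non-conflicting mass.
    return {hyp: mass / (1.0 - conflict) for hyp, mass in combined.items()}


FALL = frozenset({"fall"})
NORMAL = frozenset({"normal"})
EITHER = FALL | NORMAL  # mass left on the whole frame expresses uncertainty

# Illustrative (made-up) classifier outputs expressed as mass functions.
video = {FALL: 0.6, NORMAL: 0.1, EITHER: 0.3}
audio = {FALL: 0.7, NORMAL: 0.1, EITHER: 0.2}
fused = dempster_combine(video, audio)
```

When both classifiers lean toward the same hypothesis, the fused mass on that hypothesis exceeds either input, which is why the rule suits joint audio and video decisions.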

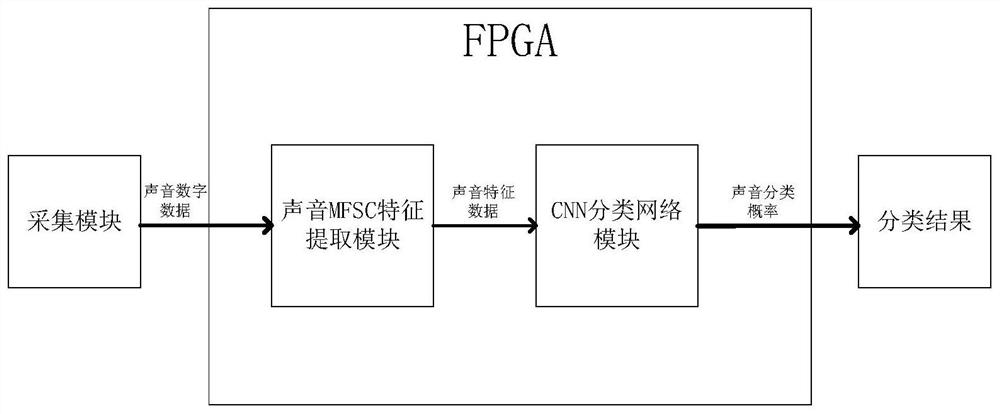

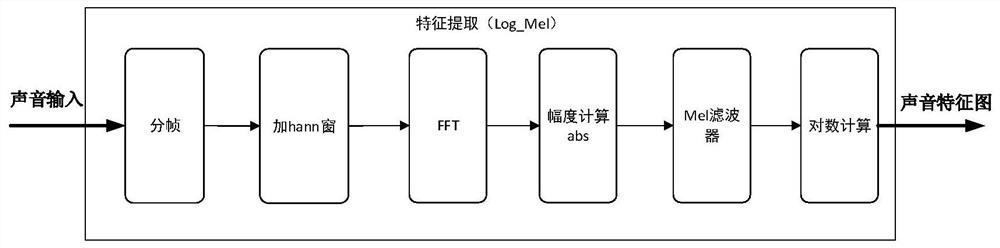

Real-time sound classification method and system based on FPGA

Active | CN112397090A | Reduce power consumption | Low cost | Speech analysis | Neural architectures | Feature extraction | Sound classification

The invention discloses a real-time sound classification method based on an FPGA, wherein the method comprises the steps: carrying out feature extraction of sound data through the FPGA, obtaining an MFSC feature map of the sound data, carrying out calculation of the obtained MFSC feature map through a CNN classification network, and achieving the classification function of collected sounds; and monitoring and classifying the external sound conveniently and quickly anytime and anywhere. The system has the advantages of low power consumption, low cost, portability, real-time performance, high practicability and the like.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

Ambient sound activated device

Active | US10666215B2 | Signal processing | Manually-operated gain control | Environmental sounds | Sound classification

In a device having at least one microphone and one or more speakers, environmental sound may be recorded using the microphone, classified and mixed with source media sound to produce a mixed sound depending on the classification. The mixed sound may then be played over the one or more speakers.

Owner:SONY INTERACTIVE ENTERTAINMENT INC

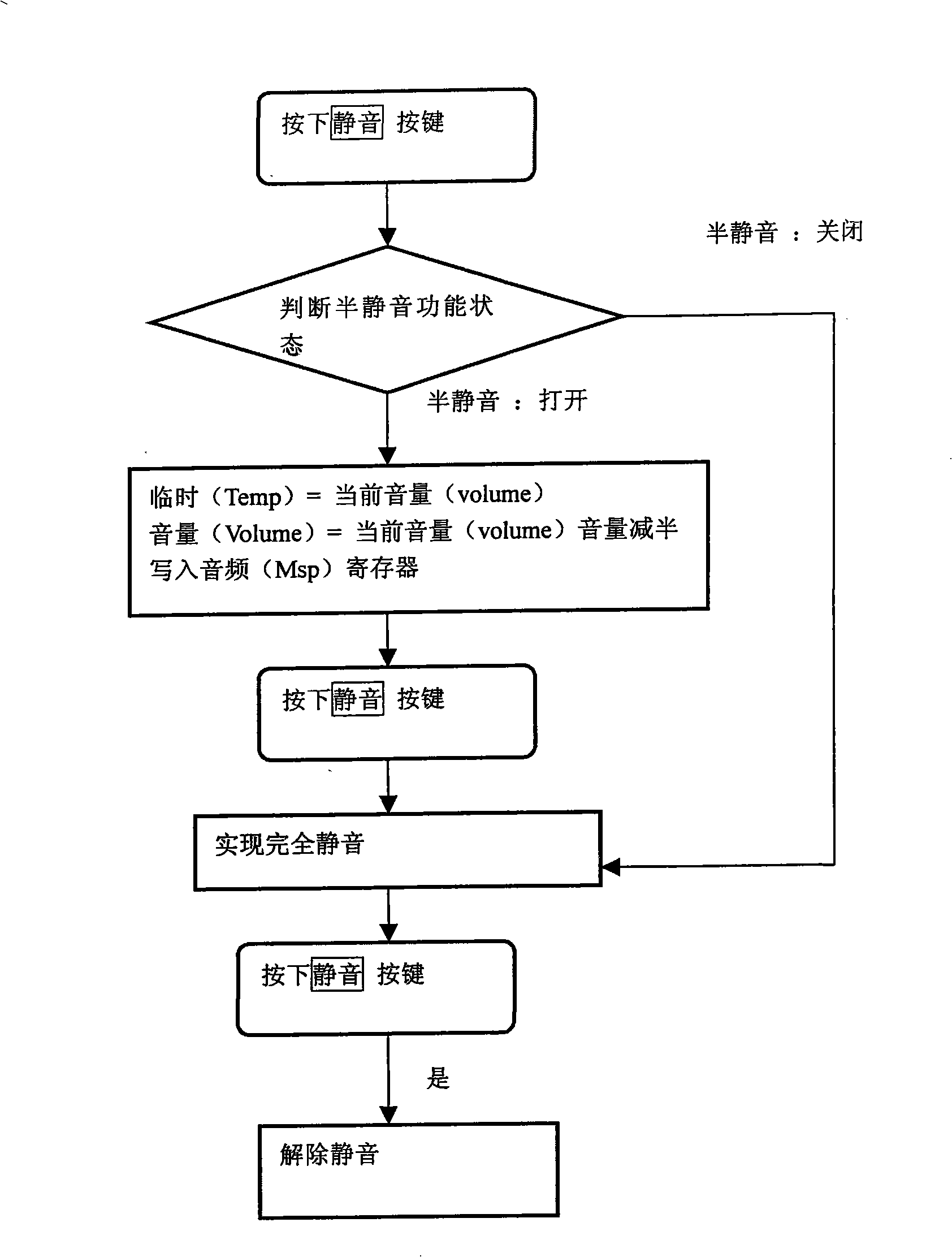

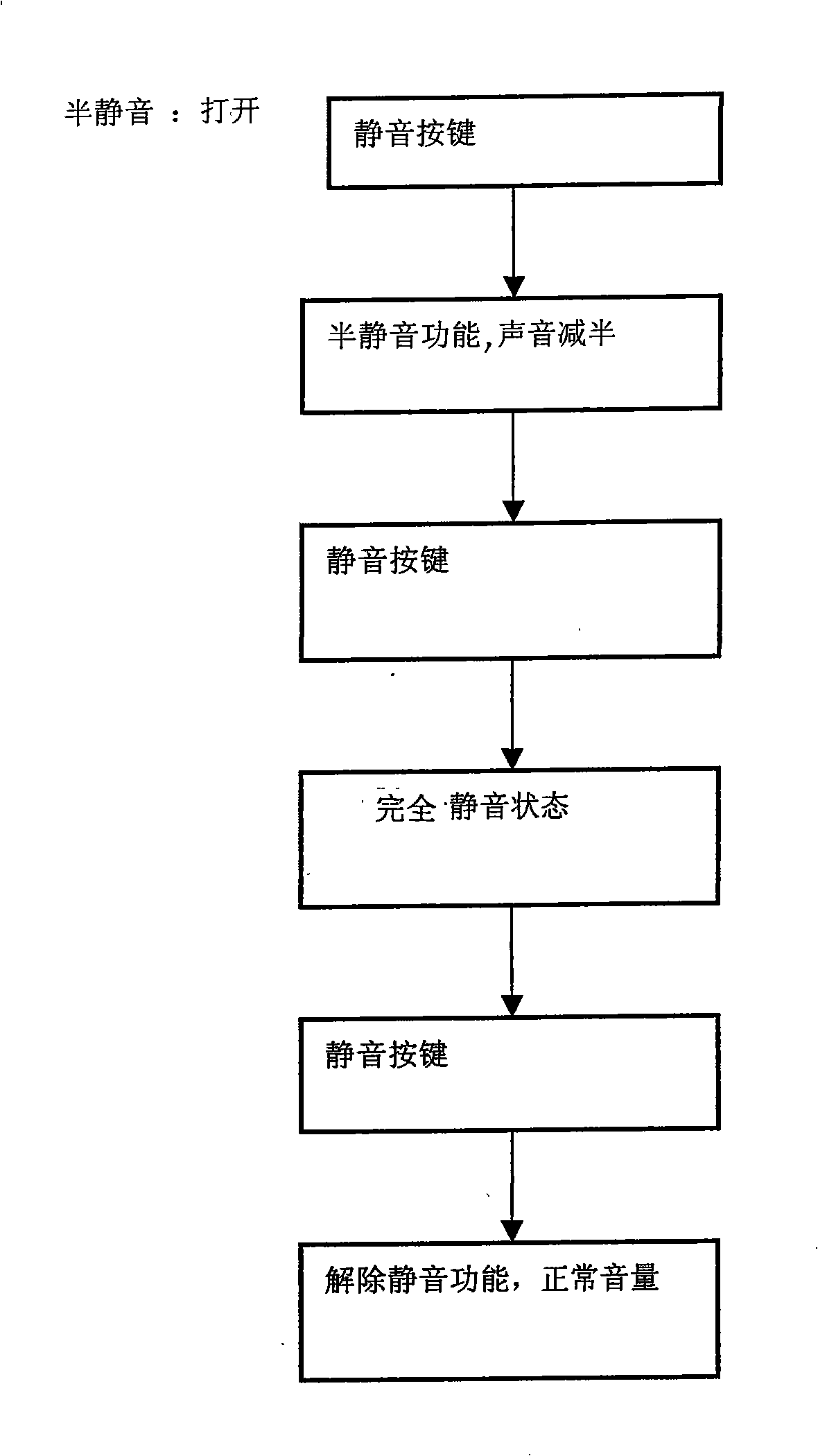

Method for selecting TV half silence playing

Inactive | CN101262578A | Achieve semi-silent state | Achieve complete silence function | Television system details | Color television details | Key pressing | Temporary variable

The invention relates to a method for selecting the semi-mute broadcasting mode of a television. The method comprises the steps as follows: (1) entering a sound classification menu from a view-guiding main menu and configuring a semi-mute interface at the same time; (2) configuring the control of the navigation and direction keys of a TV remote controller and adjusting left navigation keys and right navigation keys corresponding to a channel classification menu; (3) selecting a semi-mute function to be in 'on' state with the semi-mute function started; (4) firstly recording current volume variable data and storing the variable data in the temporary variable and then halving the volume variable data to be read into a corresponding register by a mute key, with the volume instantly halved so as to realize the semi-mute state; pressing the mute key again and clearing the volume variable to zero to be read into the corresponding register, with full mute state; (5) selecting the semi-mute function to be in 'off' state with the semi-mute function not started and then pressing the mute key again to have original mute function and realize the full mute state. The method for selecting a semi-mute broadcasting mode of a television provided can rapidly and conveniently reduce the volume, with convenient use.

Owner:TIANJIN SAMSUNG ELECTRONICS DISPLAY
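The mute-key behaviour in steps (3) to (5) of the abstract above can be sketched as a small state machine. The `TvVolume` class and its state names are hypothetical. The abstract does not say what a further key press does once the set is fully muted, so restoring the stored volume here is an assumption.

```python
class TvVolume:
    """Mute-key handling with an optional semi-mute stage (hypothetical sketch)."""

    def __init__(self, volume, semi_mute_enabled):
        self.volume = volume
        self.semi_mute_enabled = semi_mute_enabled
        self.saved_volume = None  # the "temporary variable" holding the original volume
        self.state = "normal"     # one of "normal", "semi", "full"

    def press_mute(self):
        """Simulate one press of the mute key and return the resulting volume."""
        if self.state == "normal":
            self.saved_volume = self.volume
            if self.semi_mute_enabled:
                self.volume //= 2  # first press halves the volume
                self.state = "semi"
            else:
                self.volume = 0    # semi-mute off: ordinary full mute
                self.state = "full"
        elif self.state == "semi":
            self.volume = 0        # second press clears the volume to zero
            self.state = "full"
        else:
            # Assumption: pressing again while fully muted restores the stored volume.
            self.volume = self.saved_volume
            self.state = "normal"
        return self.volume
```

With semi-mute on and a starting volume of 40, successive presses give 20, then 0, and then (under the stated assumption) 40 again.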

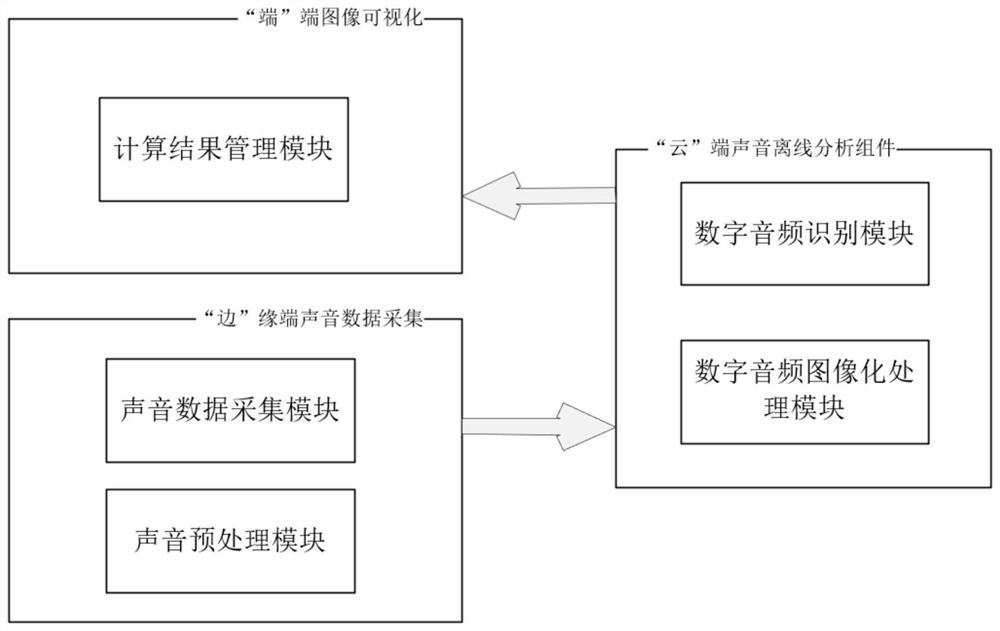

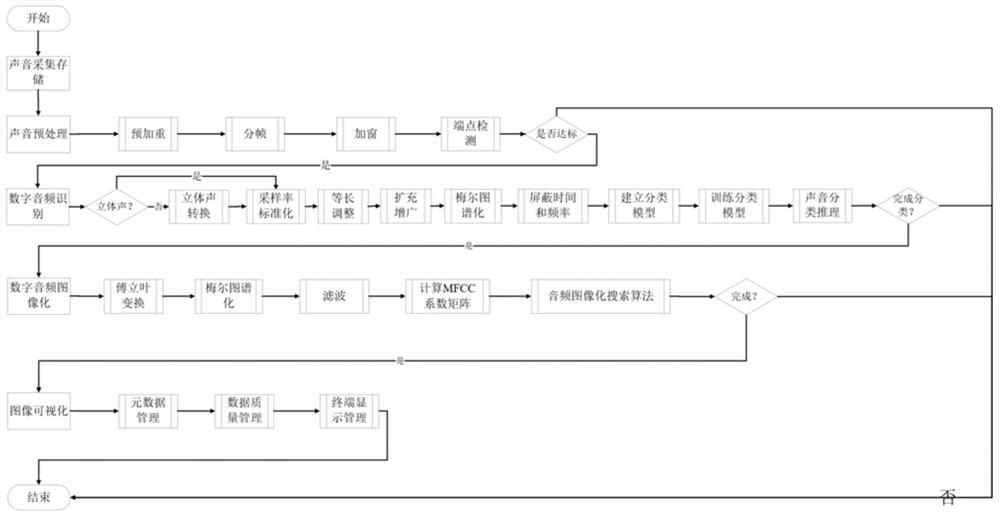

Sound big data analysis and calculation imaging system based on cloud side end

Pending | CN113539298A | Understand the warning meaning | Meet the needs of intelligence | Speech analysis | Character and pattern recognition | Data acquisition | Sound classification

The invention provides a sound big data analysis and calculation imaging system based on a cloud side end. The system comprises a sound data acquisition module, a sound preprocessing module, a digital audio recognition module, a digital audio imaging processing module and a calculation result management module. According to the invention, a new early warning form and method are provided for city safety monitoring, and the intelligent demand of city emergency early warning in digital city construction is satisfied by using a deep learning sound classification model and a sound graphical search algorithm of the cloud.

Owner:CHINA INFORMATION CONSULTING & DESIGNING INST CO LTD

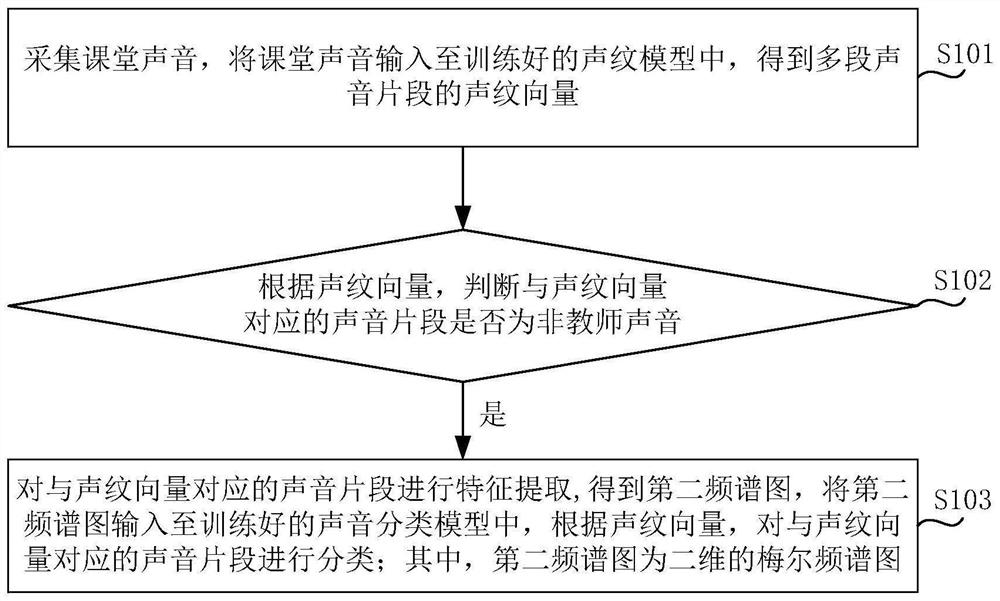

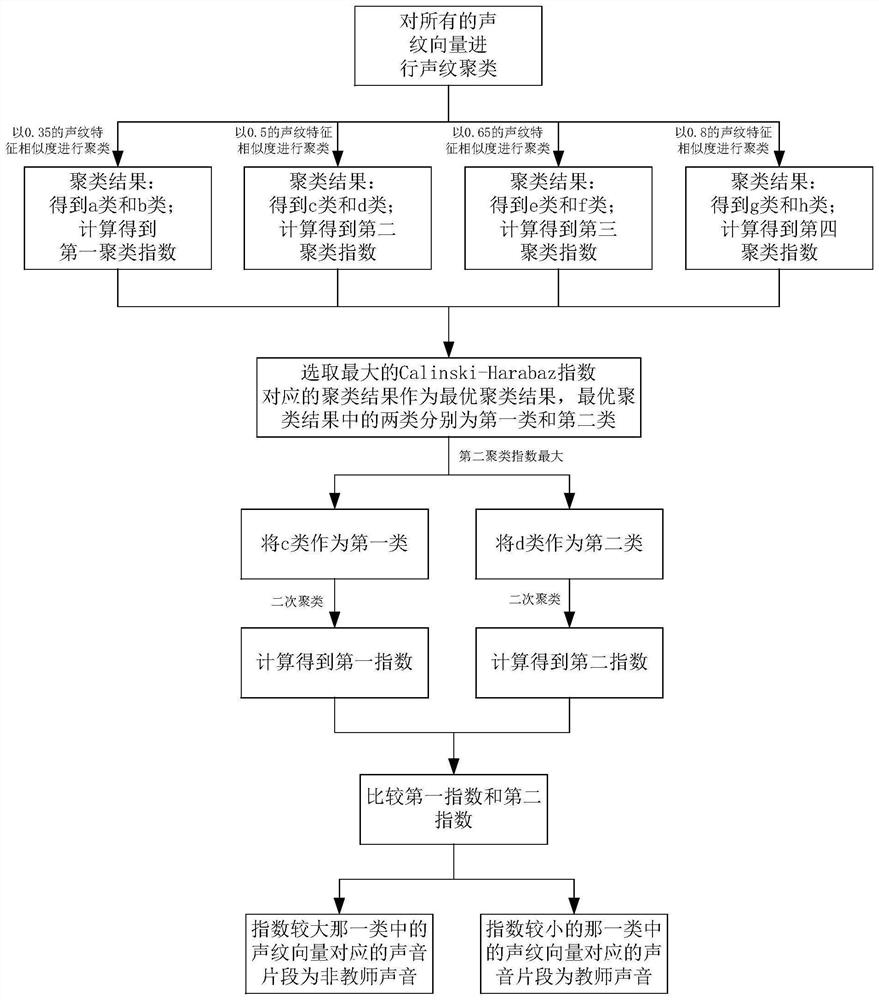

Method and device for distinguishing different sounds in class, equipment and storage medium

Pending | CN114822557A | Speech analysis | Character and pattern recognition | Sound classification | Network structure

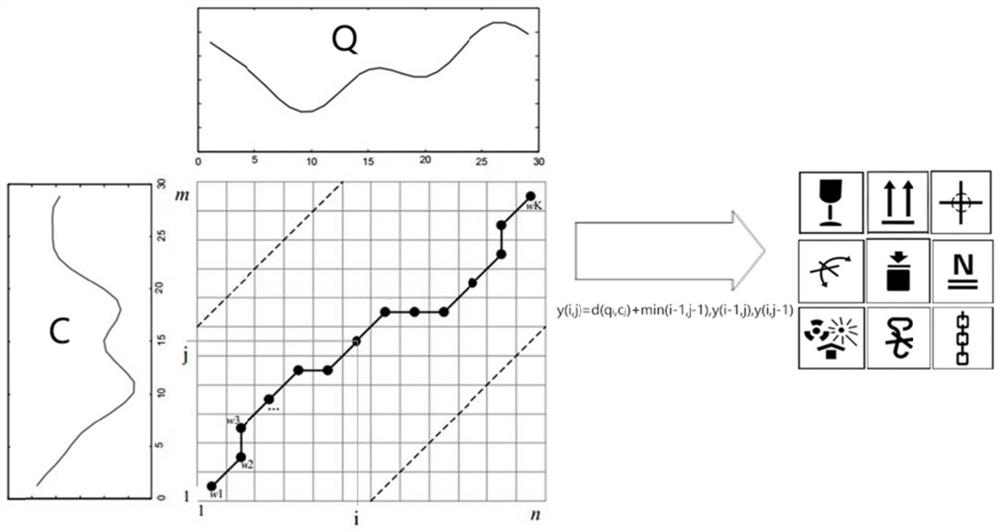

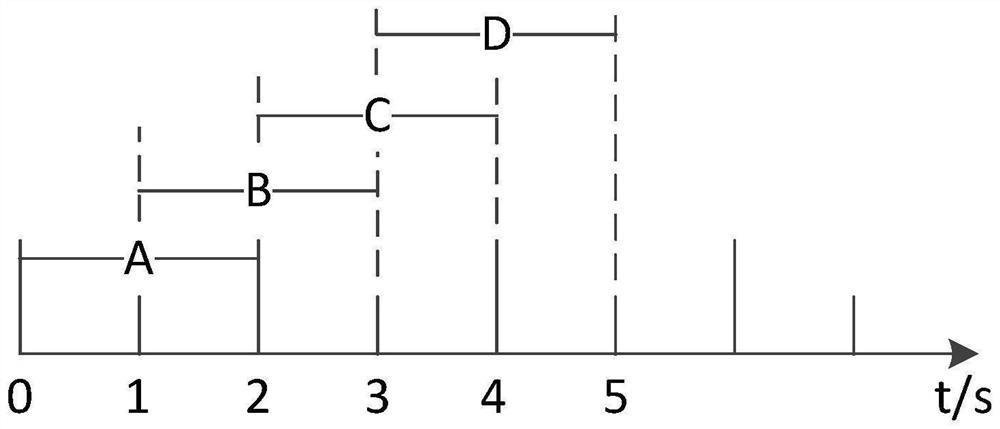

The invention relates to a method and device for distinguishing different sounds in a classroom, equipment and a storage medium, and belongs to the technical field of sound classification. The method comprises the following steps: collecting classroom sounds and inputting them into a trained voiceprint model to obtain voiceprint vectors of a plurality of sound segments; judging, according to each voiceprint vector, whether the corresponding sound segment is a non-teacher sound; and, if so, inputting the voiceprint vector into a trained sound classification model, which classifies the corresponding sound segment according to the voiceprint vector. The sound classification model is trained as follows: extracting Mel spectrum features of each training sample in a training sample set; converting the Mel spectrum features into a two-dimensional Mel spectrogram; and inputting the Mel spectrogram into the sound classification model, which is trained using a VGG11 network structure. The method and the device are able to distinguish different sounds in the classroom.

Owner:BEIJING ZHONGQING MODERN TECH CO LTD
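
The two-stage flow in the abstract above (first decide whether a segment is a non-teacher sound, then classify only those segments) can be sketched as follows. This is an illustration under assumptions, not the method of the patent: the abstract does not say how the non-teacher decision is made, so comparing each voiceprint with an enrolled teacher voiceprint by cosine similarity, the `threshold` value, and all function names are hypothetical. The trained sound classification model is represented by a plain callable.

```python
import math
from typing import Callable, List, Optional, Sequence

Vector = Sequence[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def is_non_teacher(voiceprint: Vector, teacher: Vector, threshold: float = 0.7) -> bool:
    """Assumption: a segment is non-teacher if it is dissimilar to the teacher's voiceprint."""
    return cosine_similarity(voiceprint, teacher) < threshold


def classify_segments(
    voiceprints: List[Vector],
    teacher: Vector,
    classifier: Callable[[Vector], str],
    threshold: float = 0.7,
) -> List[Optional[str]]:
    """Return a label for each non-teacher segment and None for teacher segments."""
    labels: List[Optional[str]] = []
    for vp in voiceprints:
        if is_non_teacher(vp, teacher, threshold):
            labels.append(classifier(vp))   # second stage: sound classification model
        else:
            labels.append(None)             # teacher speech is not classified further
    return labels
```

Gating on the voiceprint first means the classifier only has to separate the non-teacher sound categories, which is the design the abstract describes.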

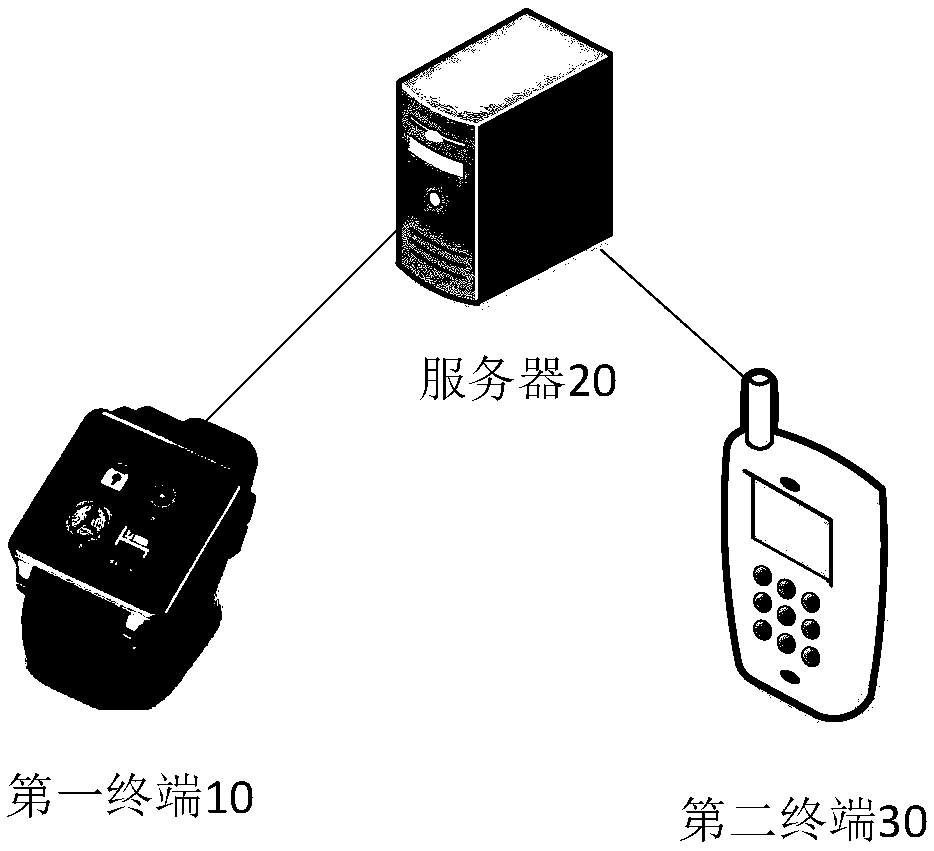

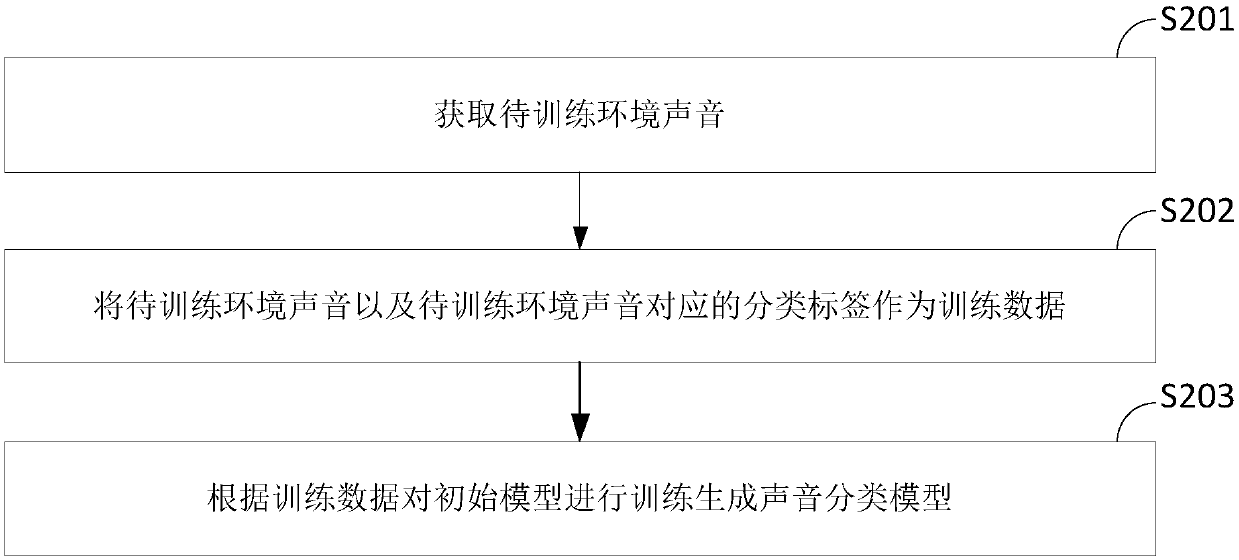

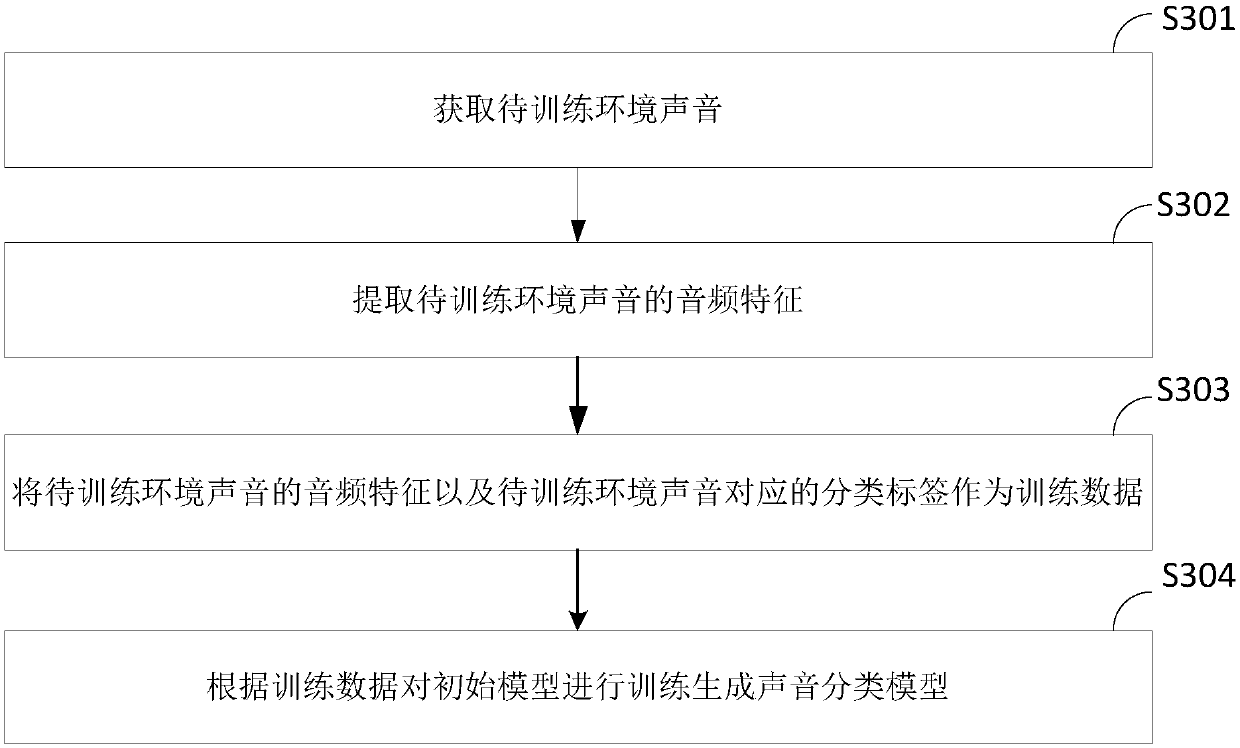

Method and device for realizing alarming

Inactive | CN111243224A | Full security protection | Realize intelligent alarm | Alarms | Speech recognition | Alarm message | Environmental sounds

The embodiment of the invention discloses a method and a device for realizing alarming. In a specific implementation, the method comprises the following steps: acquiring ambient sound of the environment where a user is located; inputting the acquired ambient sound into a pre-trained sound classification model to obtain a classification result for the ambient sound; and, when the classification result indicates a dangerous environment, sending alarm information to a first terminal device used by the user and / or a second terminal device bound to the first terminal device. In other words, when the user is in a dangerous environment, the user does not need to actively trigger the alarm function: the dangerous environment is identified from the sound of the user's current environment, and the alarm information is automatically sent to the first terminal device and / or the second terminal device. This achieves intelligent alarming, reminds the user to pay attention to safety and / or notifies relatives of the user, so that the user's safety is fully protected.

Owner:BEIJING SOGOU TECHNOLOGY DEVELOPMENT CO LTD
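
The alarm flow described above reduces to classifying the ambient sound, checking the result against a set of dangerous classes, and notifying the terminals. The following minimal sketch assumes that the classifier and the message transport are supplied as callables; the label names in `DANGEROUS_LABELS`, the message text, and the function name `handle_ambient_sound` are hypothetical and do not come from the patent.

```python
from typing import Callable, FrozenSet, List, Sequence

# Hypothetical set of classes treated as a "dangerous environment".
DANGEROUS_LABELS: FrozenSet[str] = frozenset({"scream", "glass_breaking", "gunshot"})


def handle_ambient_sound(
    audio: bytes,
    classify: Callable[[bytes], str],
    send: Callable[[str, str], None],
    first_terminal: str,
    bound_terminals: Sequence[str] = (),
    dangerous_labels: FrozenSet[str] = DANGEROUS_LABELS,
) -> List[str]:
    """Classify the sound and alert terminals if it is dangerous.

    Returns the list of terminals that were notified (empty if no alarm).
    """
    label = classify(audio)                  # stands in for the pre-trained model
    if label not in dangerous_labels:
        return []
    message = f"Possible danger detected: {label}"
    notified: List[str] = []
    for terminal in [first_terminal, *bound_terminals]:
        send(terminal, message)              # the user takes no action to trigger this
        notified.append(terminal)
    return notified
```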

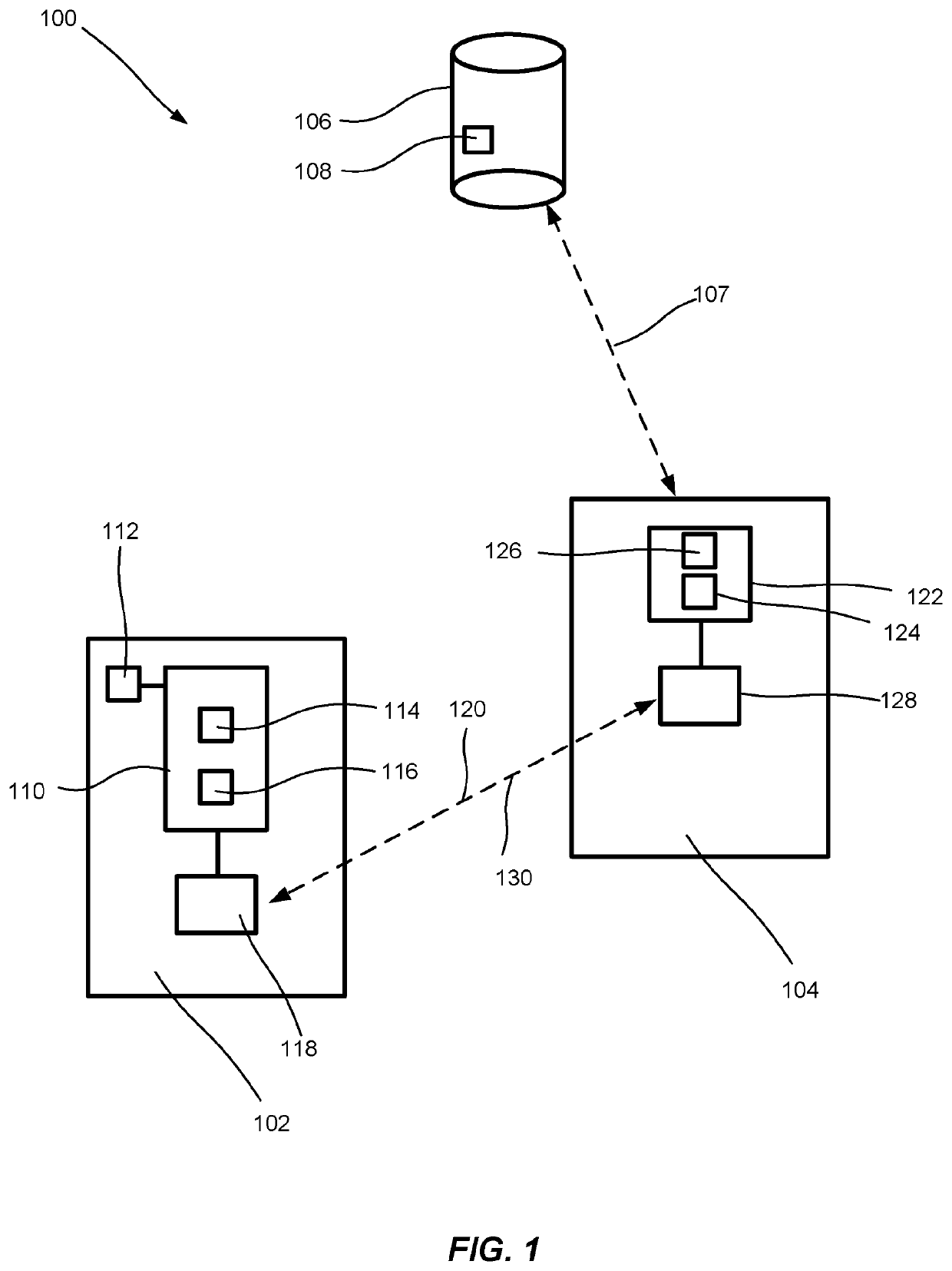

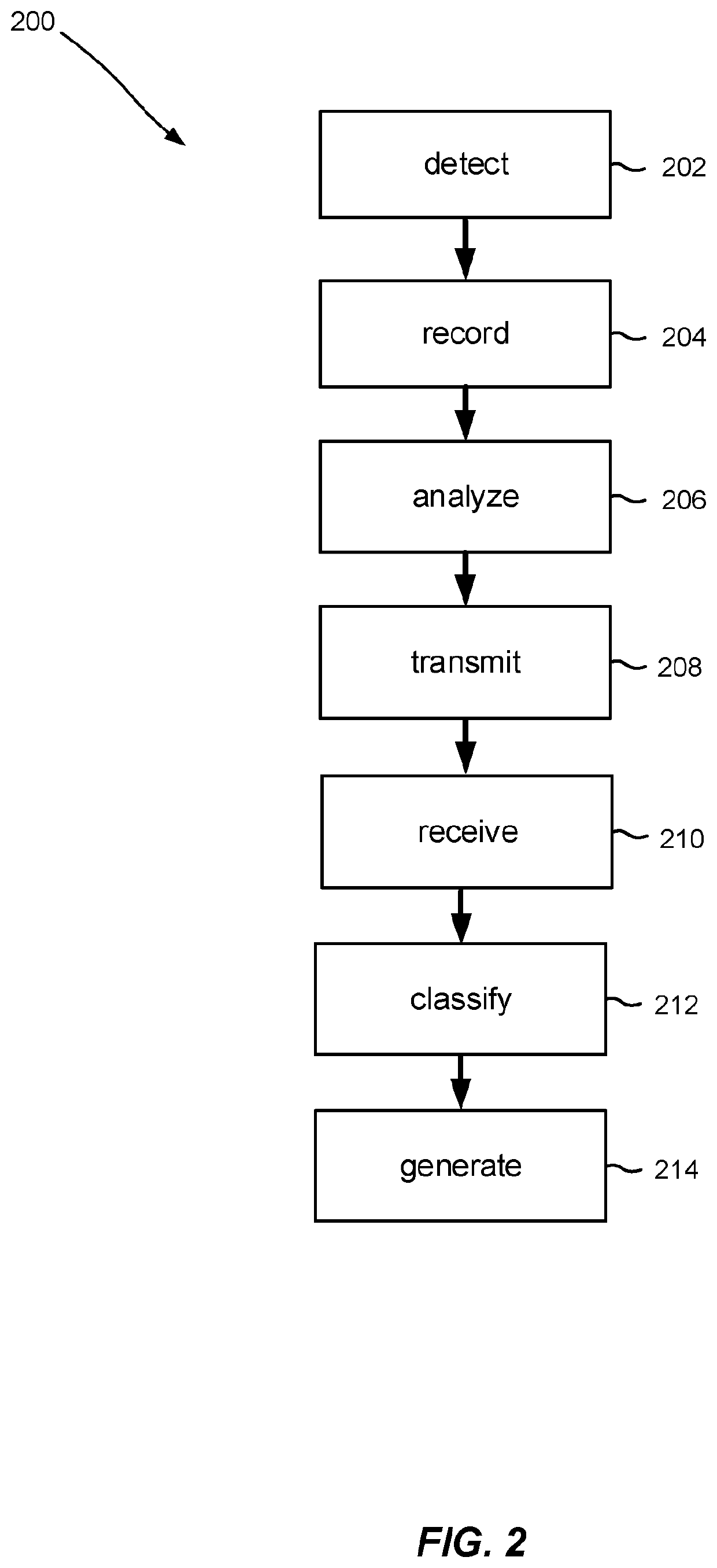

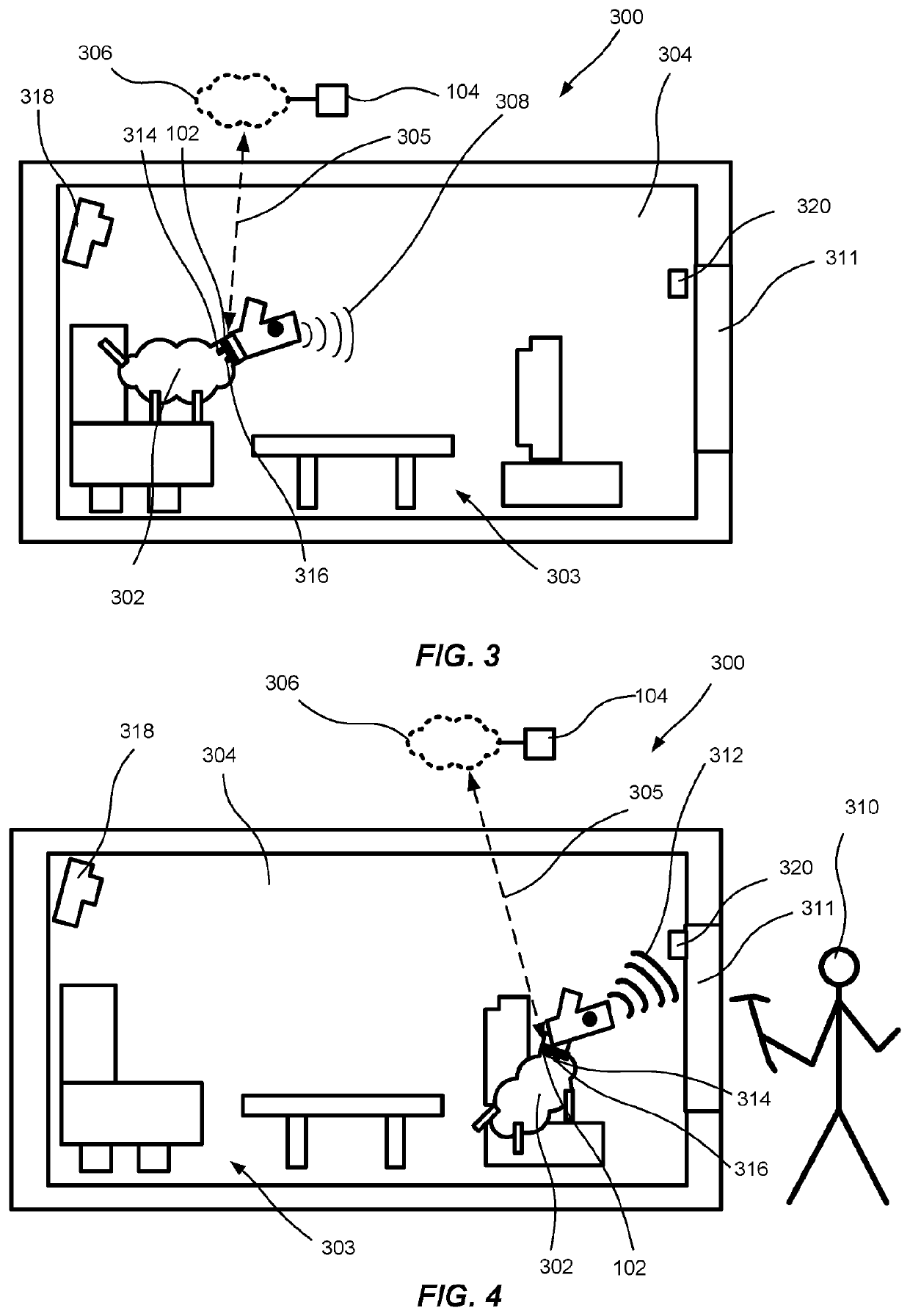

A system and method for generating a status output based on sound emitted by an animal

Active | US20200043309A1 | Security arrangement | Burglar alarm mechanical vibrations actuation | Sound generation | Sound classification

The disclosure relates to a system for generating a status output based on sound emitted by an animal. The system comprises a client (102), a server (104) and a database (106). The database (106) is accessible (107) by the server (104) and comprises historic sound data pertaining to the animal (302) or to animals of the same type as the animal. The client (102) comprises circuitry (110) configured to: detect (202) sound emitted (308, 312) by the animal (302); record (204) the detected sound (308, 312); analyze (206) the recorded sound to detect whether the sound (308, 312) comprises a specific sound characteristic out of a plurality of possible sound characteristics, wherein the sound characteristic includes at least one of intensity, frequency and duration of the detected sound; and transmit (208) the recorded sound to the server (104) in response to detecting that the sound (308, 312) comprises the specific sound characteristic. The server (104) comprises circuitry (122) configured to: receive (210) the recorded sound; classify (212) the recorded sound by comparing one or more sound characteristics of the recorded sound with the historic sound data comprised in the database (106); and generate (214) the status output based on the classification of the recorded sound. A method (200) for generating a status output based on sound emitted by an animal is also provided.

Owner:SONY CORP
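
The client/server split described above can be sketched as a client-side gate on sound characteristics followed by a server-side comparison with historic data. This is a sketch under assumptions only: the threshold values, the nearest-neighbour comparison with crude feature scaling, the label names, and the status strings are all hypothetical, since the abstract does not specify how the comparison or the status output is computed.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class SoundFeatures:
    intensity_db: float
    frequency_hz: float
    duration_s: float


def should_transmit(f: SoundFeatures, min_intensity_db: float = 60.0,
                    min_duration_s: float = 0.5) -> bool:
    """Client side: send the recording only if it shows the chosen characteristic."""
    return f.intensity_db >= min_intensity_db and f.duration_s >= min_duration_s


def classify(f: SoundFeatures, historic: List[Tuple[SoundFeatures, str]]) -> str:
    """Server side: label of the closest historic sound (hypothetical scaling)."""
    def distance(a: SoundFeatures, b: SoundFeatures) -> float:
        return math.sqrt(
            ((a.intensity_db - b.intensity_db) / 10.0) ** 2
            + ((a.frequency_hz - b.frequency_hz) / 100.0) ** 2
            + (a.duration_s - b.duration_s) ** 2
        )
    return min(historic, key=lambda item: distance(f, item[0]))[1]


# Hypothetical mapping from sound class to status output.
STATUS_BY_LABEL: Dict[str, str] = {
    "bark_alert": "animal is alerting to a disturbance",
    "whine_distress": "animal may be in distress",
}


def status_output(label: str) -> str:
    """Generate the status output from the classification result."""
    return STATUS_BY_LABEL.get(label, "unknown status")
```

Filtering on the client keeps quiet or very short sounds off the network, so the server only classifies recordings that already show the characteristic of interest.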

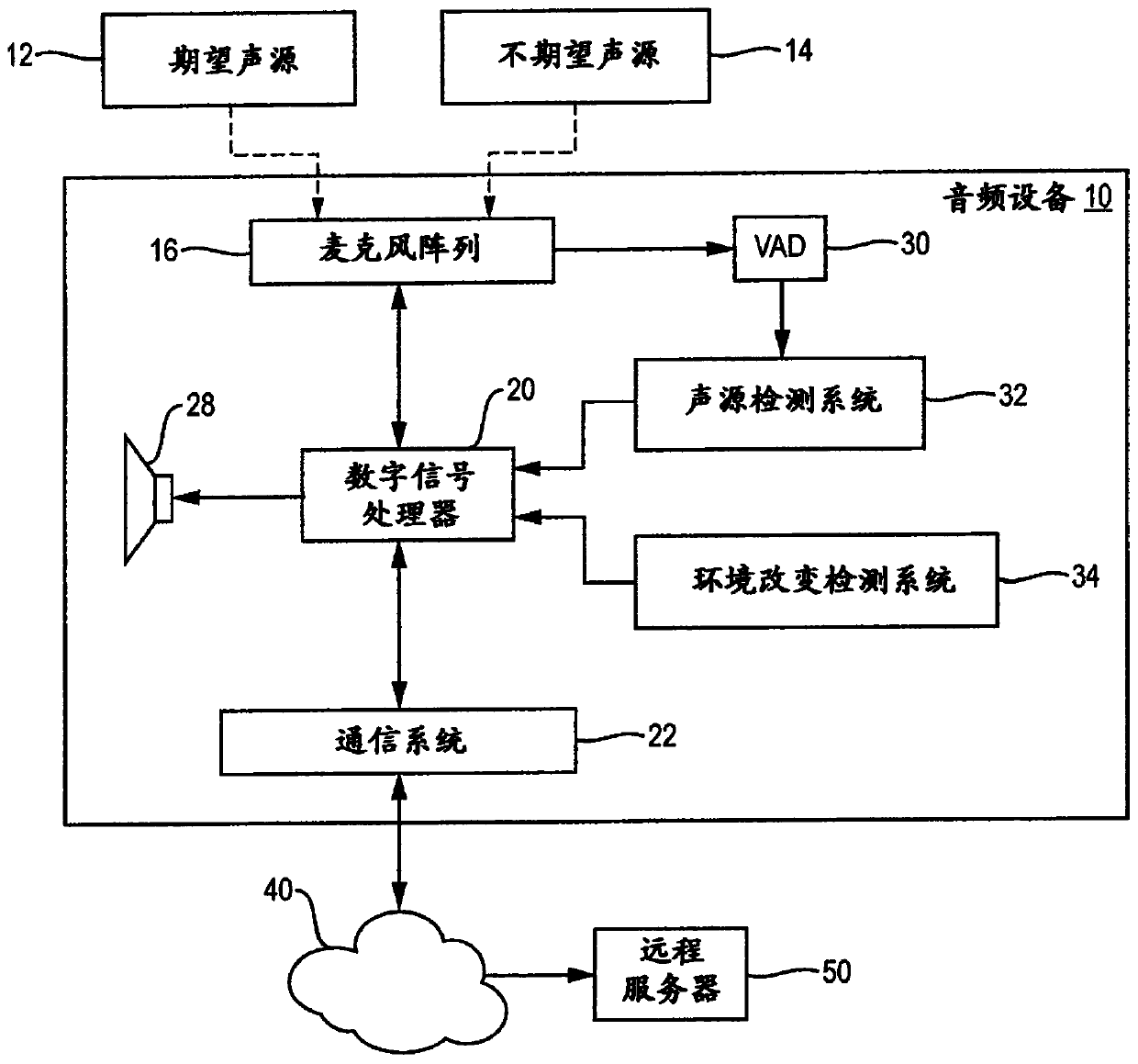

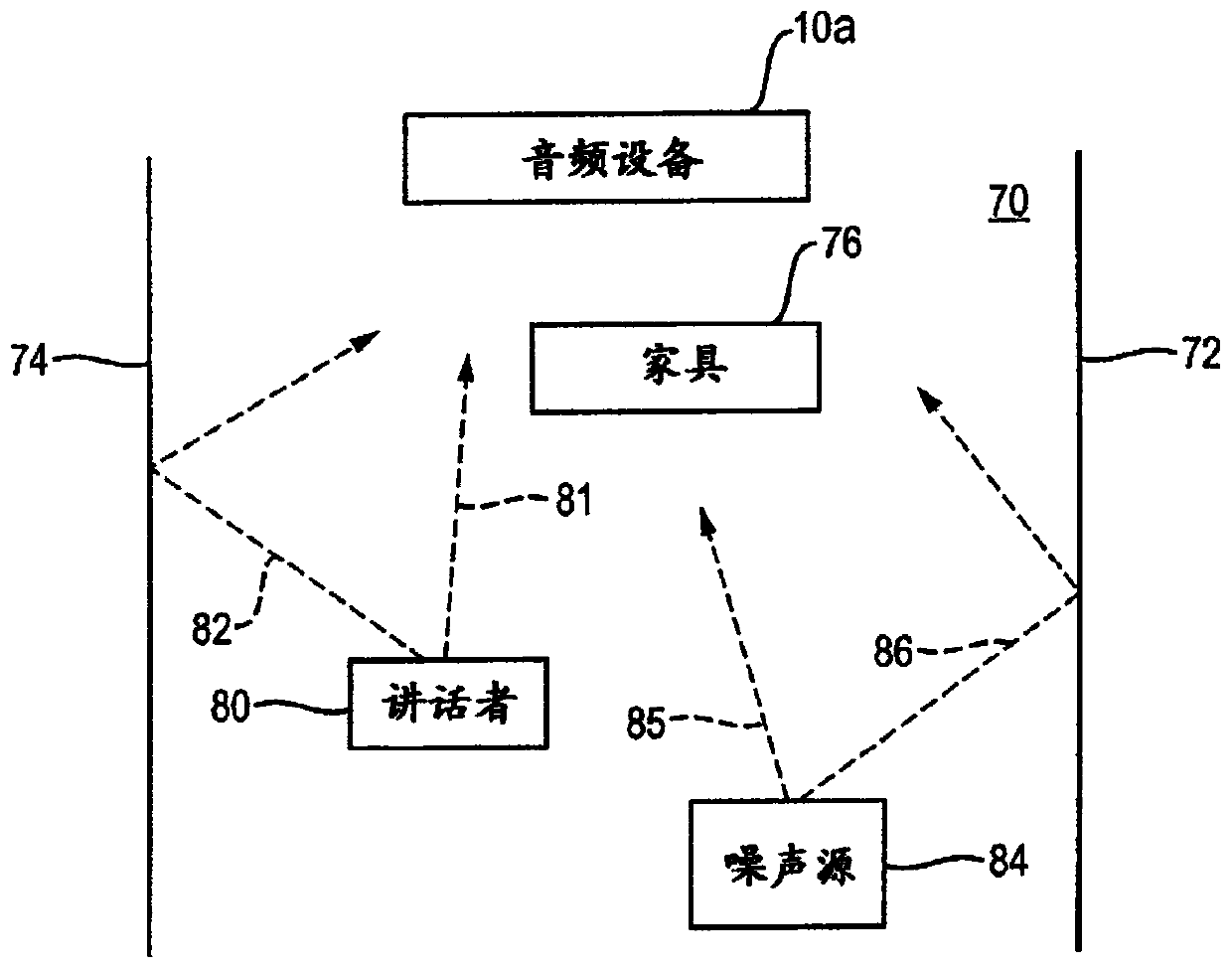

Audio device filter modification

Pending | CN110268470A | Microphones signal combination | Speech recognition | Filter (signal processing) | Sound classification

An audio device has a number of microphones that are configured into a microphone array. An audio signal processing system in communication with the microphone array is configured to derive a plurality of audio signals from the plurality of microphones, use prior audio data to operate a filter topology that processes audio signals so as to make the array more sensitive to desired sounds than to undesired sounds, categorize received sounds as either desired sounds or undesired sounds, and use the categorized received sounds and their categories to modify the filter topology.

Owner:BOSE CORP

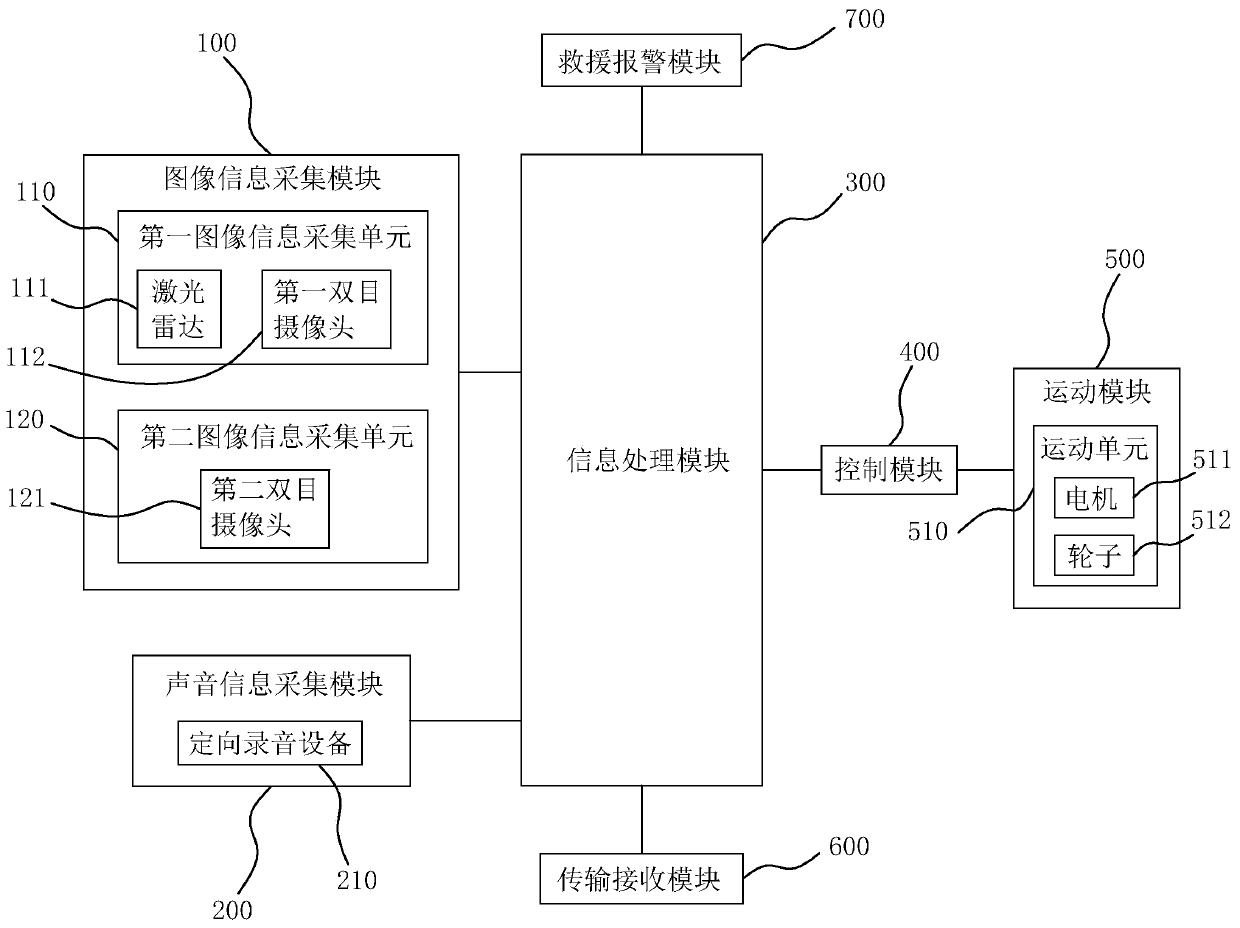

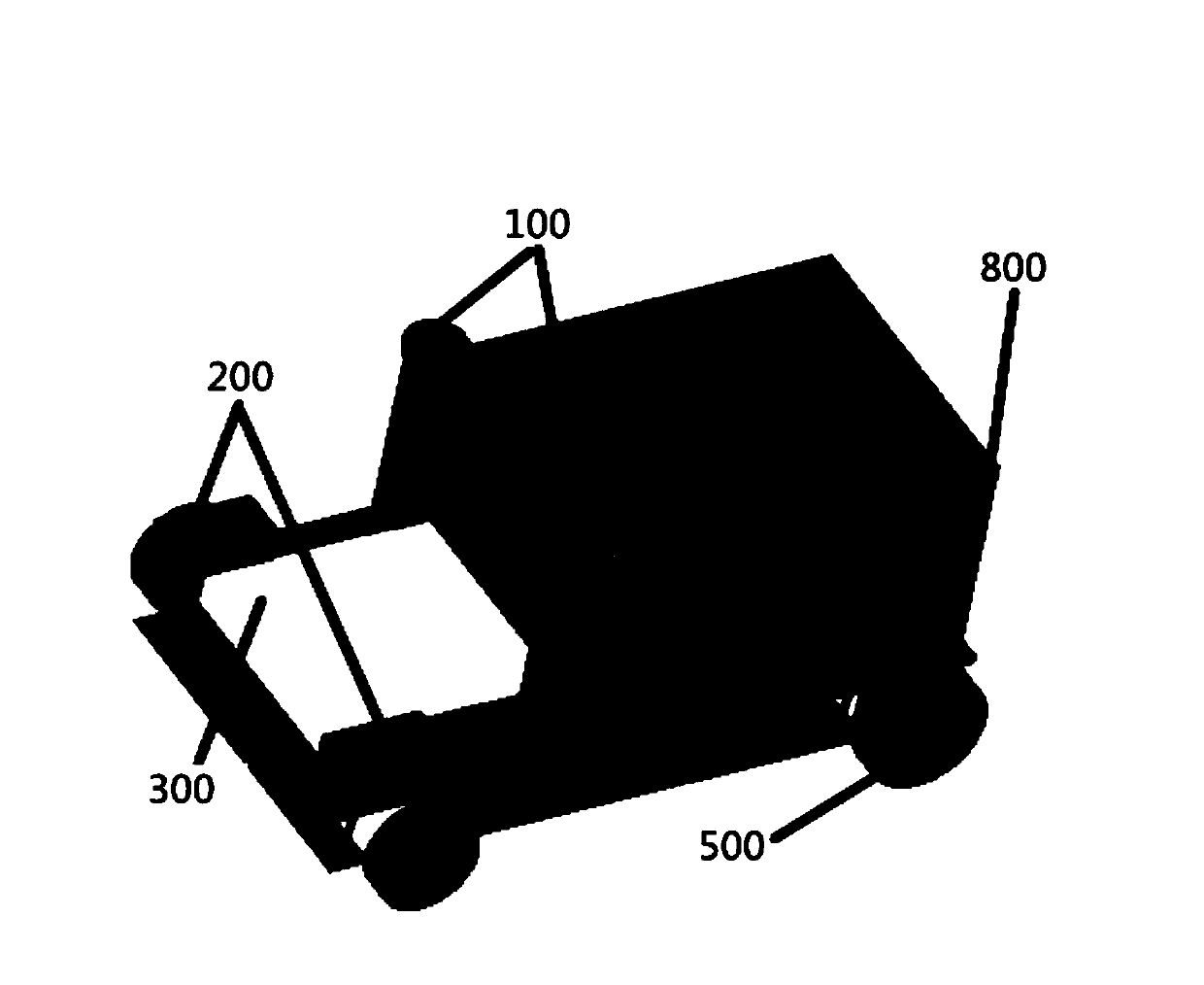

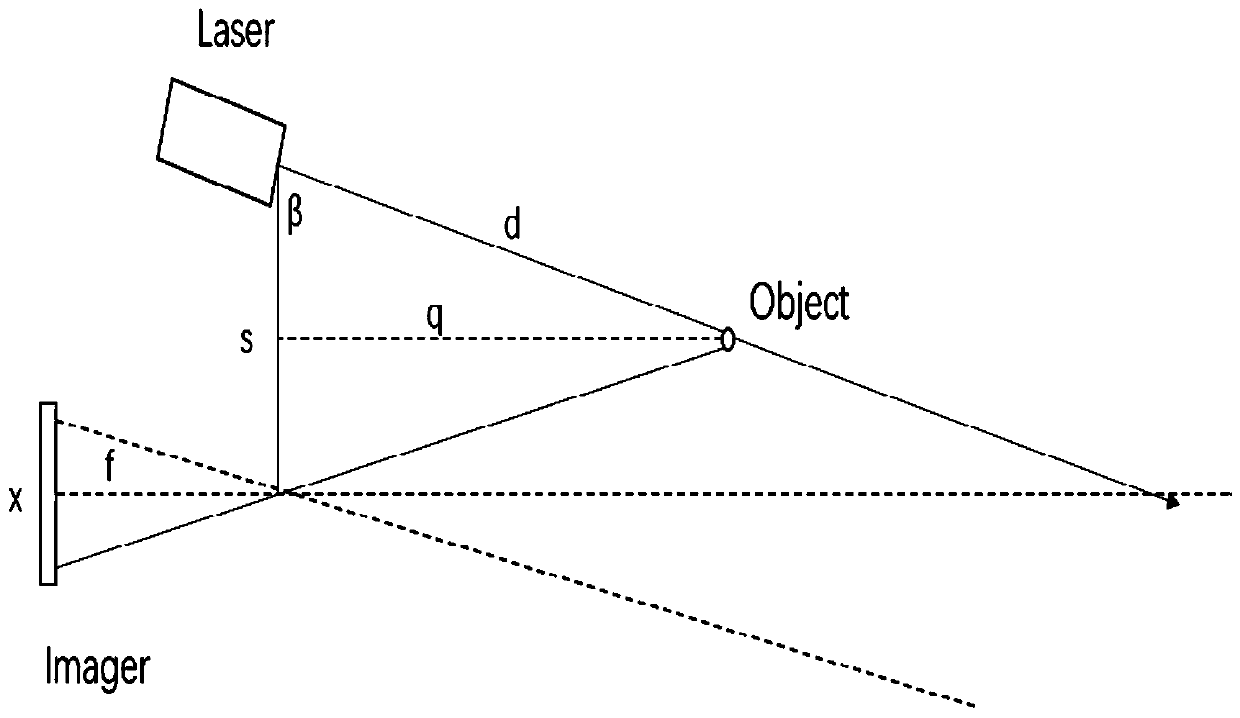

Waterside rescue robot based on SLAM technology and deep learning

Pending | CN110696003A | Fast patrol | Intelligent and efficient | Programme-controlled manipulator | Information processing | Rescue robot

The invention discloses a waterside rescue robot based on SLAM technology and deep learning. The waterside rescue robot comprises an image information acquisition module, a sound information acquisition module, an information processing module, a control module, a motion module, a transmission receiving module and a rescue alarm module. The image information acquisition module collects map information of the surrounding environment and image information of the water surface environment. The sound information acquisition module collects sound information of the water surface environment. The information processing module receives the map information, the image information and the sound information, and performs recognition using SLAM technology on an ROS system together with target detection and sound classification algorithms based on a deep convolutional neural network. The control module outputs a motion control signal. The motion module performs the corresponding action according to the motion control signal. The transmission receiving module handles data transmission. The rescue alarm module is connected to the information processing module and sends a rescue signal. The waterside rescue robot of this embodiment can patrol rapidly, detect a sudden drowning situation and promptly notify rescuers, and is efficient and intelligent.

Owner:WUYI UNIV
