Far-field sound classification method and device

A far-field sound classification method and device in the field of sound technology, applicable to speech analysis, speech recognition, instruments, and related areas. It addresses interference with the target sound and the resulting drop in performance and accuracy of sound classification tasks, with the stated effect of improving accuracy and robustness.

Embodiment Construction

[0041] In order to make the above objects, features and advantages of the present invention more obvious and understandable, the present invention will be described in further detail below in conjunction with the accompanying drawings and specific embodiments.

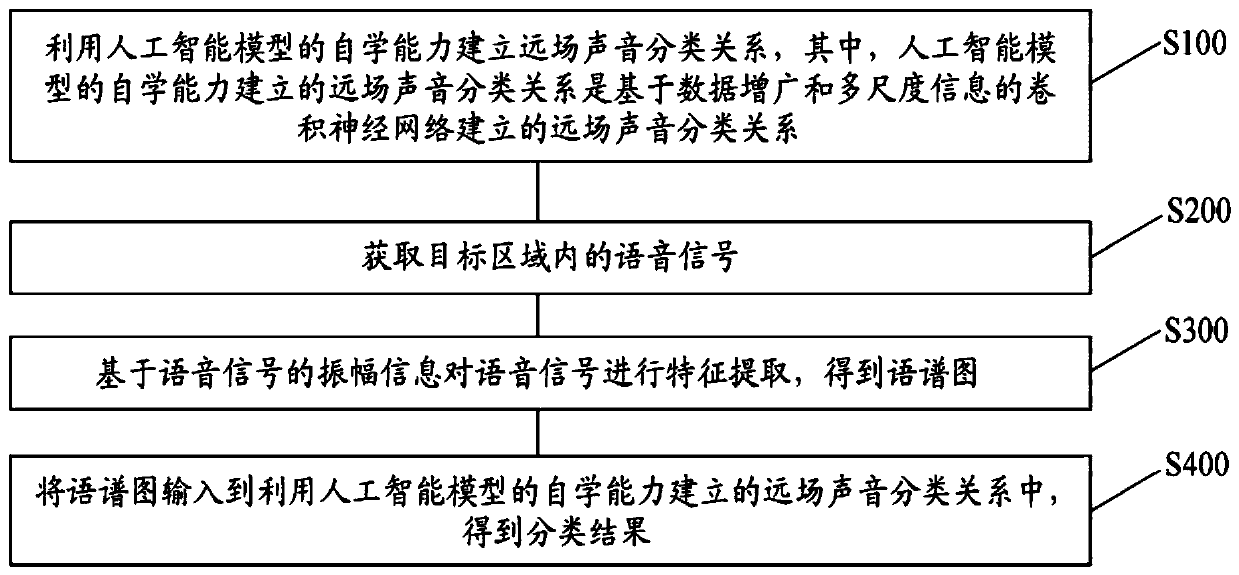

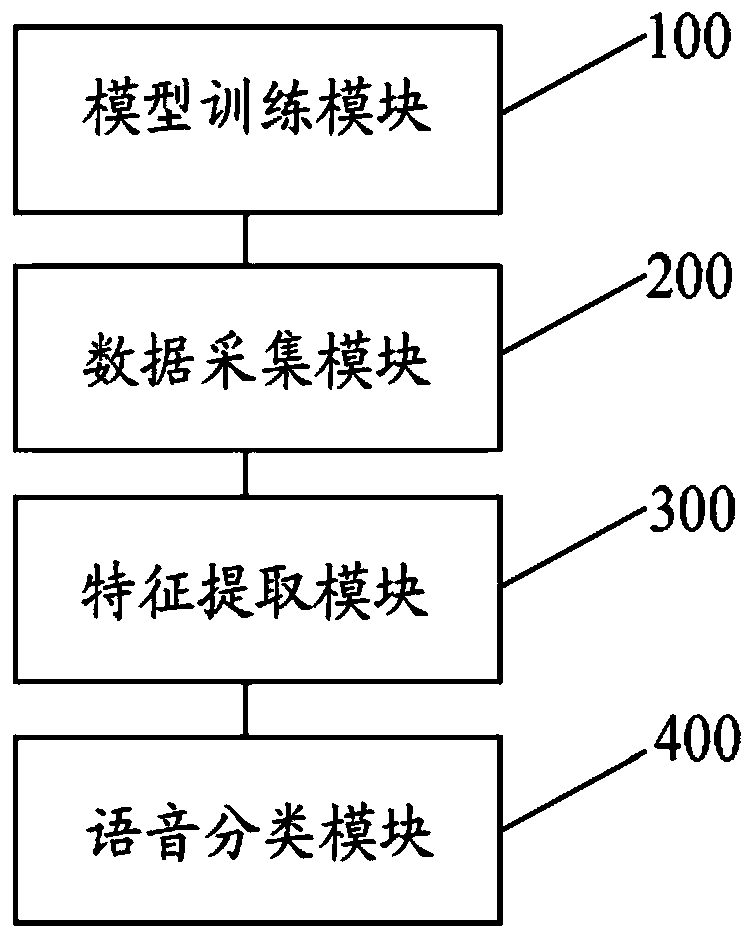

[0042] One of the core concepts of the embodiments of the present invention is to provide a far-field sound classification method, including: using the self-learning ability of an artificial intelligence model to establish a far-field sound classification relationship, wherein this relationship is established by a convolutional neural network based on data augmentation and multi-scale information; obtaining the speech signal in the target area; performing feature extraction on the speech signal based on the amplitude information of the speech signal, an...
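The excerpt above names amplitude-based feature extraction and data augmentation but does not say how either is carried out. The following is a minimal sketch under stated assumptions, not the patented method: it assumes a log-magnitude short-time spectrum as the amplitude feature and additive white noise at a chosen signal-to-noise ratio as the augmentation. The function names, frame length, hop size, and sampling rate are all hypothetical choices made for illustration.

```python
import numpy as np


def log_magnitude_spectrogram(signal, frame_len=400, hop=160, eps=1e-8):
    """Return log-magnitude spectra, one row per frame.

    Assumption: the amplitude feature is a Hann-windowed short-time
    magnitude spectrum. The source text does not specify this.
    """
    signal = np.asarray(signal, dtype=np.float64)
    if signal.size < frame_len:
        # Zero-pad short inputs so that at least one frame exists.
        signal = np.pad(signal, (0, frame_len - signal.size))
    n_frames = 1 + (signal.size - frame_len) // hop
    window = np.hanning(frame_len)
    frames = np.stack([
        signal[i * hop:i * hop + frame_len] * window
        for i in range(n_frames)
    ])
    magnitude = np.abs(np.fft.rfft(frames, axis=1))
    return np.log(magnitude + eps)


def add_noise_at_snr(signal, snr_db, rng):
    """Mix white Gaussian noise into the signal at the requested SNR in dB.

    Assumption: additive noise stands in for the unspecified
    data augmentation mentioned in the source.
    """
    signal = np.asarray(signal, dtype=np.float64)
    noise = rng.standard_normal(signal.size)
    signal_power = np.mean(signal ** 2)
    noise_power = np.mean(noise ** 2)
    scale = np.sqrt(signal_power / (noise_power * 10 ** (snr_db / 10)))
    return signal + scale * noise


# Hypothetical usage: a 1 kHz tone sampled at 16 kHz, augmented at 10 dB SNR.
sample_rate = 16000
t = np.arange(sample_rate) / sample_rate
tone = np.sin(2 * np.pi * 1000 * t)
rng = np.random.default_rng(0)
noisy_tone = add_noise_at_snr(tone, snr_db=10, rng=rng)
features = log_magnitude_spectrogram(noisy_tone)
```

Features of this kind could then be passed to a convolutional classifier. The network architecture and what "multi-scale information" refers to are not described in this excerpt, so they are left out of the sketch.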
