Depth camera hand-eye calibration method based on CALTag and point cloud information

A hand-eye calibration and depth camera technology, applied in image data processing, instruments, computing, and related fields, that addresses the low accuracy and deviation of coordinate transformation capture.


Embodiment Construction

[0060] The present invention will be described in detail below in conjunction with the accompanying drawings and embodiments.

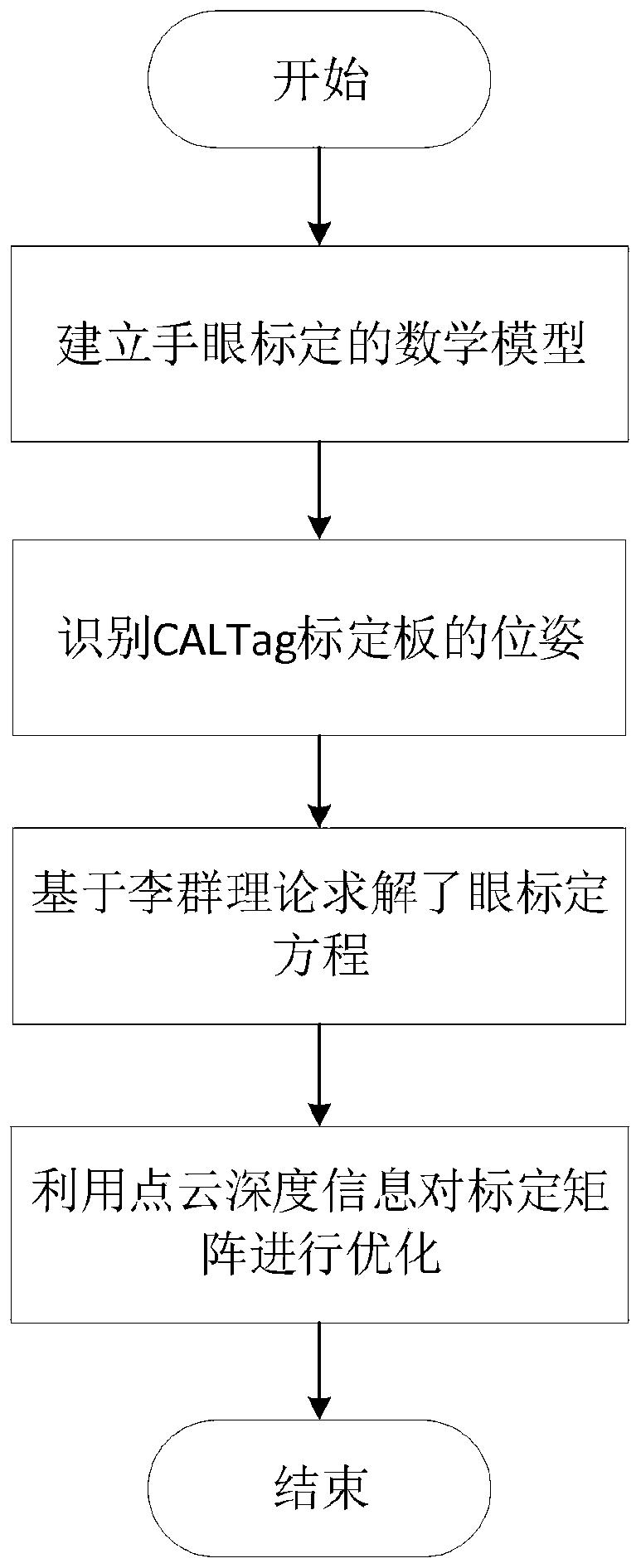

[0061] Referring to Figure 1, a hand-eye calibration method for depth cameras based on CALTag and point cloud information comprises the following steps:

[0062] Step 1): establish the mathematical model of the hand-eye calibration system:

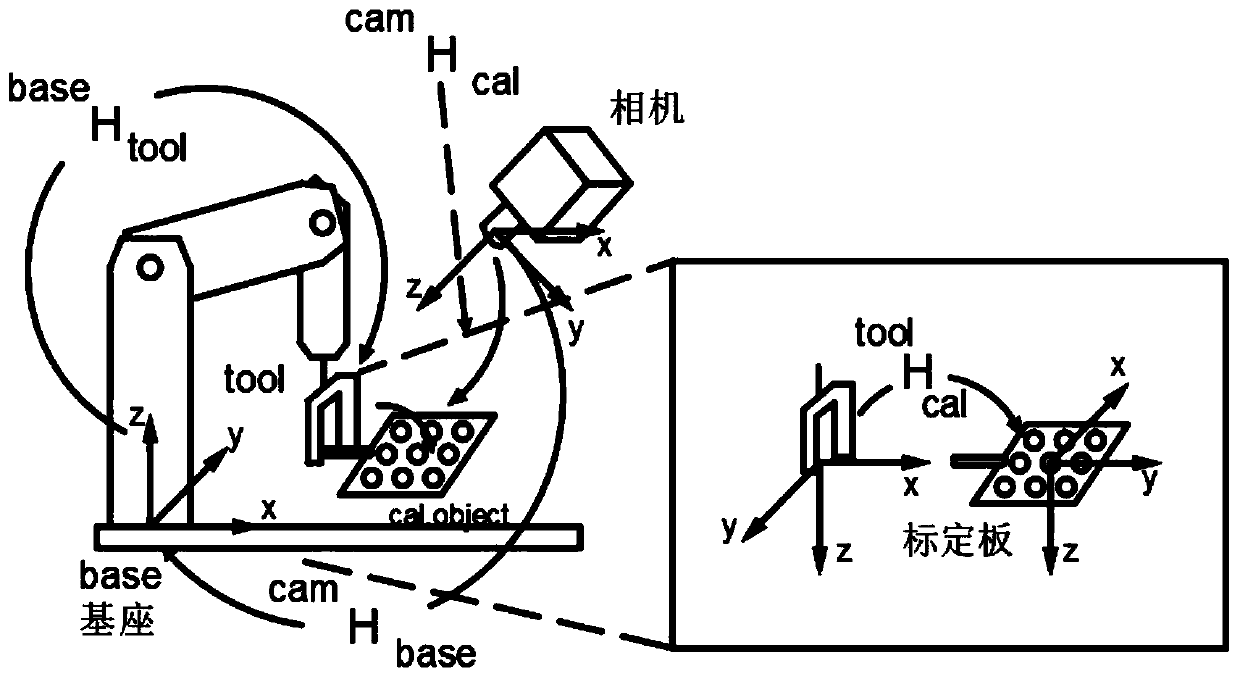

[0063] In a hand-eye calibration system, the hand-eye relationship is divided into two models according to how the camera is mounted relative to the robot: Eye-in-Hand and Eye-to-Hand. In the Eye-in-Hand model, the camera is installed at the end of the robot, so it moves with the end effector while the robot works. In the Eye-to-Hand model, the camera is fixed in the workspace and remains stationary while the robot moves.
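As an illustration only (the patent's own model is given in the figure above and is not reproduced in this text), both mounting models are commonly written as the homogeneous-transform equation $AX = XB$. The notation here is assumed: $E_i$ is the end-effector pose in the robot base frame reported by the controller at station $i$, $C_i$ is the calibration-board pose in the camera frame estimated from the image, and ${}^{a}T_{b}$ maps coordinates from frame $b$ into frame $a$.

$$
\begin{aligned}
&\text{Eye-in-Hand: } E_i\,X\,C_i = Z \;\Rightarrow\; \underbrace{E_j^{-1}E_i}_{A}\,X = X\,\underbrace{C_j\,C_i^{-1}}_{B}, \quad X = {}^{\mathrm{end}}T_{\mathrm{cam}},\; Z = {}^{\mathrm{base}}T_{\mathrm{board}} \\
&\text{Eye-to-Hand: } E_i\,Y = X\,C_i \;\Rightarrow\; \underbrace{E_j\,E_i^{-1}}_{A}\,X = X\,\underbrace{C_j\,C_i^{-1}}_{B}, \quad X = {}^{\mathrm{base}}T_{\mathrm{cam}},\; Y = {}^{\mathrm{end}}T_{\mathrm{board}} \\
&\text{Rotation/translation split: } R_A R_X = R_X R_B, \qquad (R_A - I)\,t_X = R_X t_B - t_A
\end{aligned}
$$

$Z$ and $Y$ are constant but unknown, which is why they cancel when two stations $i \neq j$ are compared. A unique $X$ generally requires at least two relative motions whose rotation axes are not parallel.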

[0064] For a hand-eye system in which the "eye" is fixed externally, that is, the Eye-to-Hand model, a calibration bo...
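For the Eye-to-Hand case, the unknown camera pose $X$ (base frame to camera frame) can be recovered from several robot stations by solving $A X = X B$, with $A = E_j E_i^{-1}$ (relative end-effector motion in the base frame) and $B = C_j C_i^{-1}$ (relative board motion in the camera frame). The sketch below is not the patent's method: it swaps in the standard Park-Martin closed-form least-squares solver and runs it on noise-free synthetic poses. All names (`solve_ax_xb`, `random_pose`, and so on) are hypothetical, and in practice the board poses $C_i$ would come from CALTag detection, which is not modeled here.

```python
import numpy as np


def skew(v):
    """3x3 skew-symmetric matrix [v]x, so that skew(v) @ w == np.cross(v, w)."""
    return np.array([[0.0, -v[2], v[1]],
                     [v[2], 0.0, -v[0]],
                     [-v[1], v[0], 0.0]])


def exp_so3(w):
    """Rodrigues' formula: rotation vector -> 3x3 rotation matrix (identity if ~zero)."""
    theta = np.linalg.norm(w)
    if theta < 1e-12:
        return np.eye(3)
    K = skew(w / theta)
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)


def log_so3(R):
    """3x3 rotation matrix -> rotation vector (assumes rotation angle < pi)."""
    theta = np.arccos(np.clip((np.trace(R) - 1.0) / 2.0, -1.0, 1.0))
    if theta < 1e-12:
        return np.zeros(3)
    v = np.array([R[2, 1] - R[1, 2], R[0, 2] - R[2, 0], R[1, 0] - R[0, 1]])
    return theta / (2.0 * np.sin(theta)) * v


def make_pose(R, t):
    """Assemble a 4x4 homogeneous transform from rotation R and translation t."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


def solve_ax_xb(As, Bs):
    """Park-Martin least-squares solution of A_k X = X B_k (hypothetical helper)."""
    # Rotation: log(R_A) = R_X log(R_B), so fit R_X mapping each beta onto its alpha.
    M = np.zeros((3, 3))
    for A, B in zip(As, Bs):
        M += np.outer(log_so3(B[:3, :3]), log_so3(A[:3, :3]))
    # R_X = (M^T M)^(-1/2) M^T, via the eigendecomposition of the symmetric M^T M.
    evals, evecs = np.linalg.eigh(M.T @ M)
    R_X = evecs @ np.diag(evals ** -0.5) @ evecs.T @ M.T
    # Translation: stack (R_A - I) t_X = R_X t_B - t_A over all pairs, solve by least squares.
    C = np.vstack([A[:3, :3] - np.eye(3) for A in As])
    d = np.concatenate([R_X @ B[:3, 3] - A[:3, 3] for A, B in zip(As, Bs)])
    t_X, *_ = np.linalg.lstsq(C, d, rcond=None)
    return make_pose(R_X, t_X)


def random_pose(rng, max_angle=1.5, max_trans=0.5):
    """Random rigid transform with rotation angle in [0.2, max_angle] rad."""
    axis = rng.normal(size=3)
    axis /= np.linalg.norm(axis)
    angle = rng.uniform(0.2, max_angle)
    return make_pose(exp_so3(angle * axis), rng.uniform(-max_trans, max_trans, size=3))


# Synthetic, noise-free Eye-to-Hand setup (illustrative values only).
rng = np.random.default_rng(0)
X_true = random_pose(rng)                   # base <- camera: the unknown to recover
Y_true = random_pose(rng)                   # end effector <- board: rigid mount, also unknown
Es = [random_pose(rng) for _ in range(6)]   # base <- end effector at each robot station
# Board pose seen by the camera, from E_i Y = X C_i  =>  C_i = X^-1 E_i Y.
Cs = [np.linalg.inv(X_true) @ E @ Y_true for E in Es]

# Relative motions between consecutive stations: A = E_j E_i^-1, B = C_j C_i^-1.
As = [Es[k + 1] @ np.linalg.inv(Es[k]) for k in range(len(Es) - 1)]
Bs = [Cs[k + 1] @ np.linalg.inv(Cs[k]) for k in range(len(Cs) - 1)]

X_est = solve_ax_xb(As, Bs)
print("max abs error:", np.abs(X_est - X_true).max())
```

On noise-free data the printed error should be near machine precision. With real board detections and robot encoder readings the fit is only approximate, so more stations with well-spread rotation axes improve conditioning. OpenCV's `cv2.calibrateHandEye` provides the Tsai, Park, Horaud, Andreff, and Daniilidis solvers for the same problem.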
