Multi-camera data fusion method in monitoring system

A data fusion technology for monitoring systems, applied in the field of computer vision. It addresses problems such as the inability to fuse monitoring images, and improves applicability, imaging accuracy, and image-formation efficiency.


Examples

Embodiment 1

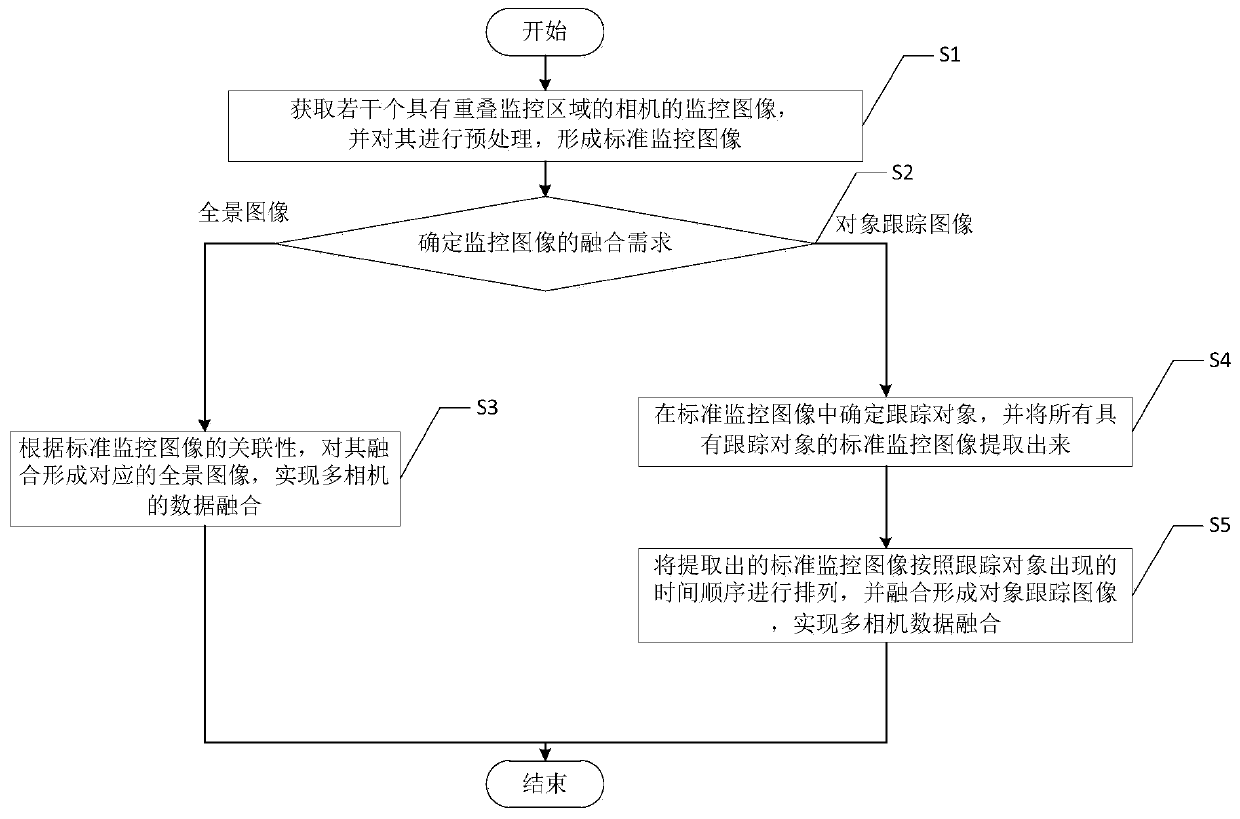

[0050] As shown in Figure 1, a multi-camera data fusion method in a monitoring system includes the following steps:

[0051] S1. Obtain the monitoring images from several cameras whose monitoring areas overlap, and preprocess them to form standard monitoring images;

[0052] S2. Determine the fusion requirement for the monitoring images;

[0053] If the fusion is to form a panoramic image, proceed to step S3;

[0054] If the fusion is to form an object-tracking image, proceed to step S4;

[0055] S3. Fuse the standard monitoring images according to their correlation to form the corresponding panoramic image, thereby achieving multi-camera data fusion;

[0056] S4. Determine the tracked object in the standard monitoring images, extract all standard monitoring images that contain it, and proceed to step S5;

[0057] S5. Arrange the extracted standard monitoring images according to the chronological sequence of the tracking obje...

Embodiment 2

[0059] The preprocessing of the monitoring images in step S1 of Embodiment 1 consists of sequentially applying size standardization, grayscale conversion, binarization, and image denoising to each monitoring image. The monitoring image is resized to 512×512 pixels, which simplifies subsequent image processing and fusion. Grayscale conversion produces a grayscale image, in which each pixel is represented by a brightness (intensity) value ranging from 0 (black) to 255 (white). Each pixel of a grayscale image therefore needs only one byte to store its gray value (also called its intensity or brightness value), and the gray range is 0-255. In a color image, by contrast, the color of each pixel is determined by three components, R, G, and B, each of which can take 256 values (0-255), so a pixel can take on more than 16 million (256 × 256 × 256) colors...
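The four preprocessing stages can be illustrated with a NumPy-only sketch. The source fixes only the order of the stages and the 512×512 target size; the nearest-neighbour resize, the ITU-R BT.601 grayscale weights, the fixed binarization threshold of 128, and the 3×3 median filter used for denoising are all assumptions chosen for illustration, and the function names are hypothetical.

```python
import numpy as np


def resize_nearest(img: np.ndarray, size: int = 512) -> np.ndarray:
    """Size standardization: nearest-neighbour resize to size x size pixels."""
    h, w = img.shape[:2]
    rows = np.arange(size) * h // size   # source row for each output row
    cols = np.arange(size) * w // size   # source column for each output column
    return img[rows[:, None], cols]


def to_gray(img: np.ndarray) -> np.ndarray:
    """Grayscale: weighted R, G, B sum (BT.601 weights, an assumption), 0..255 uint8."""
    if img.ndim == 2:
        return img.astype(np.uint8)
    weights = np.array([0.299, 0.587, 0.114])
    gray = img[..., :3].astype(np.float64) @ weights
    return np.clip(np.rint(gray), 0, 255).astype(np.uint8)


def binarize(gray: np.ndarray, threshold: int = 128) -> np.ndarray:
    """Binarization: pixels >= threshold become 255 (white), the rest 0 (black)."""
    return np.where(gray >= threshold, 255, 0).astype(np.uint8)


def median_denoise(img: np.ndarray, k: int = 3) -> np.ndarray:
    """Denoising: k x k median filter with edge-replicated borders."""
    pad = k // 2
    padded = np.pad(img, pad, mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(padded, (k, k))
    return np.median(windows, axis=(-2, -1)).astype(np.uint8)


def preprocess(img: np.ndarray) -> np.ndarray:
    """S1 pipeline in the stated order: resize -> grayscale -> binarize -> denoise."""
    return median_denoise(binarize(to_gray(resize_nearest(img, 512))))
```

A grayscale pixel fits in one byte (256 levels), while an RGB pixel has 256 ** 3 = 16,777,216 possible colors, which is the "more than 16 million" figure above. Because the median filter runs on a binary image with an odd window size, its output stays strictly 0 or 255.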

Embodiment 3

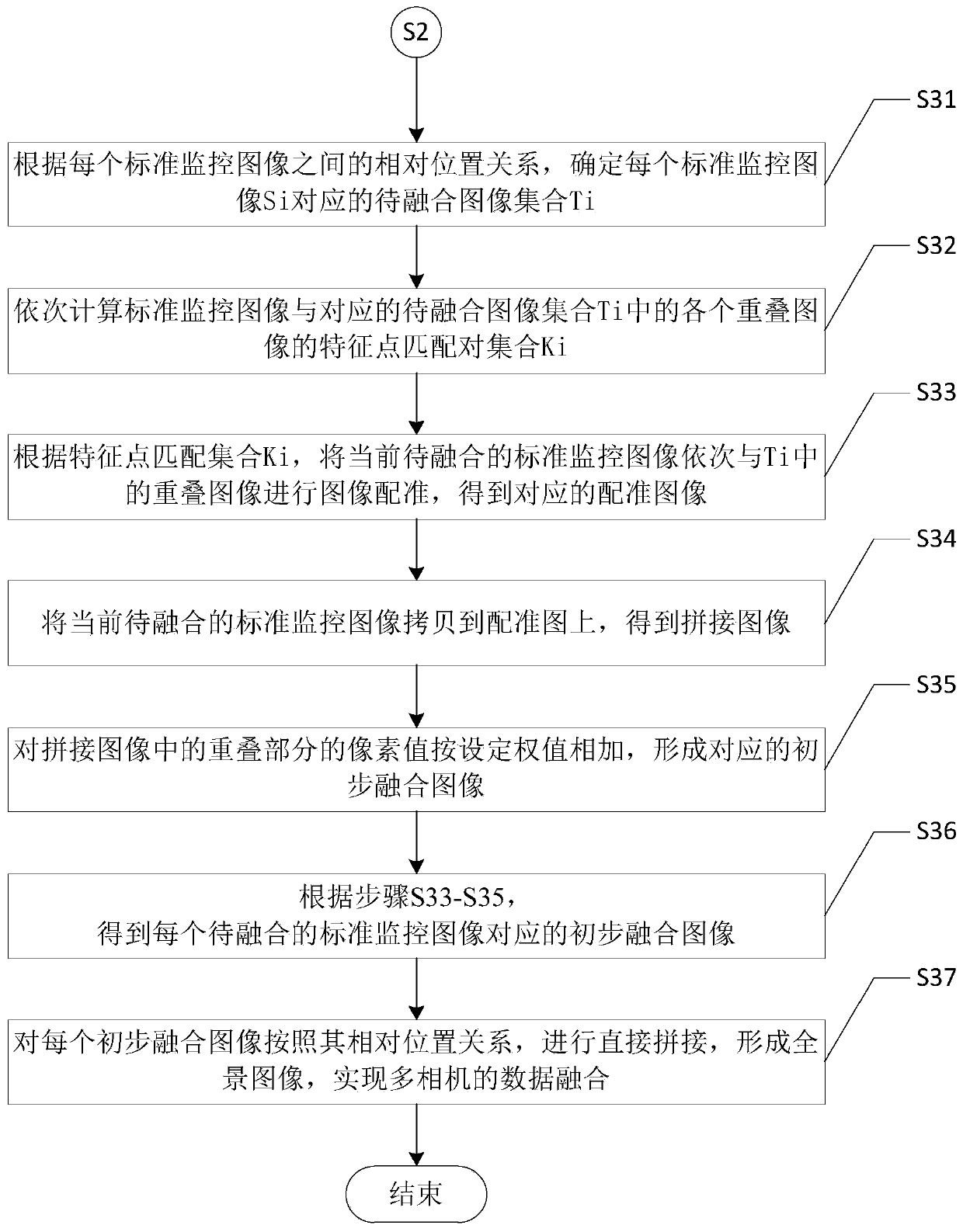

[0061] As shown in Figure 2, step S3 of Embodiment 1 specifically comprises:

[0062] S31. According to the relative positional relationship between the standard monitoring images, determine, for each standard monitoring image $S_i$, the corresponding set of images to be fused $T_i$;

[0063] Here, the subscript $i$ is the label of the standard monitoring image, $i = 1, 2, 3, \dots, I$, where $I$ is the total number of standard monitoring images $S_i$; $T_i = \{T_1, T_2, \dots, T_n, \dots, T_N\}$, where $n$ is the label of an overlapping image that must be fused with the standard monitoring image $S_i$, $n = 1, 2, 3, \dots, N$, and $N$ is the total number of standard monitoring images that must be fused with $S_i$;
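One way to derive the sets $T_i$ from the cameras' relative positions is sketched below. The source does not say how the positional relationship is represented, so this sketch assumes that each camera's monitoring area has been approximated as an axis-aligned rectangle in a shared ground-plane coordinate system, and that two images belong in each other's fusion set when their rectangles overlap with positive area. The names `Rect`, `overlaps`, and `fusion_sets` are hypothetical.

```python
from typing import Dict, List, Tuple

# (x_min, y_min, x_max, y_max) of a camera's monitoring area on a shared ground plane
Rect = Tuple[float, float, float, float]


def overlaps(a: Rect, b: Rect) -> bool:
    """True if two axis-aligned rectangles share a region of positive area."""
    return min(a[2], b[2]) > max(a[0], b[0]) and min(a[3], b[3]) > max(a[1], b[1])


def fusion_sets(areas: Dict[int, Rect]) -> Dict[int, List[int]]:
    """Return T_i for every label i: labels n != i whose areas overlap that of S_i."""
    return {
        i: [n for n in sorted(areas) if n != i and overlaps(areas[i], areas[n])]
        for i in sorted(areas)
    }


if __name__ == "__main__":
    areas = {
        1: (0, 0, 10, 10),
        2: (8, 0, 18, 10),        # overlaps camera 1 and camera 3
        3: (16, 0, 26, 10),
        4: (100, 100, 110, 110),  # isolated camera
    }
    print(fusion_sets(areas))  # {1: [2], 2: [1, 3], 3: [2], 4: []}
```

Here $N$, the size of $T_i$, differs from image to image, and an isolated camera simply gets an empty set and contributes nothing to the panorama.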

[0064] S32. Sequentially compute the feature point matching pair set $K_i$ between the standard monitoring image $S_i$ and each overlapping image in its corresponding set of images to be fused $T_i$;
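The source does not name a feature detector or a matching rule for building $K_i$. As an illustration only, the sketch below assumes that feature descriptors have already been extracted for every standard image and matches them by nearest neighbour in Euclidean distance, keeping a pair only when it passes Lowe's ratio test (a commonly used filter, swapped in here as an assumption). The function names `match_pairs` and `matching_sets` are hypothetical.

```python
from typing import Dict, List, Tuple

import numpy as np


def match_pairs(desc_a: np.ndarray, desc_b: np.ndarray,
                ratio: float = 0.75) -> List[Tuple[int, int]]:
    """Return (index_in_a, index_in_b) pairs that pass Lowe's ratio test."""
    if desc_b.shape[0] < 2:
        return []  # the ratio test needs a second-nearest neighbour
    # Pairwise Euclidean distances, shape (len(desc_a), len(desc_b)).
    d = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=-1)
    pairs = []
    for a_idx, row in enumerate(d):
        order = np.argsort(row)
        best, second = row[order[0]], row[order[1]]
        if best < ratio * second:  # keep only unambiguous matches
            pairs.append((a_idx, int(order[0])))
    return pairs


def matching_sets(descriptors: Dict[int, np.ndarray],
                  t_sets: Dict[int, List[int]],
                  ratio: float = 0.75) -> Dict[int, Dict[int, List[Tuple[int, int]]]]:
    """K_i: for each S_i, the matched pairs with every overlapping image n in T_i."""
    return {
        i: {n: match_pairs(descriptors[i], descriptors[n], ratio) for n in t_i}
        for i, t_i in t_sets.items()
    }
```

The ratio test discards a feature in $S_i$ whose two closest candidates in the overlapping image are nearly equally distant, which tends to remove ambiguous matches on repetitive textures before the matched pairs are used further.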

[0065] S33. According to the feature point matching pair set $K_i$ ...

