A Video Understanding Method Based on a Compression-Excitation Pseudo-3D Network

A pseudo-3D network technique, applied to video understanding based on a compression-excitation pseudo-3D network, addresses the problems of difficult training and difficult extraction of deep features, so as to increase accuracy and robustness, deepen the network, and improve network performance.

Embodiment Construction

[0023] All features disclosed in this specification, or steps in all methods or processes disclosed, may be combined in any manner, except for mutually exclusive features and/or steps.

[0024] Any feature disclosed in this specification (including any appended claims, abstract and drawings), unless expressly stated otherwise, may be replaced by alternative features which are equivalent or serve a similar purpose. That is, unless expressly stated otherwise, each feature is one example only of a series of equivalent or similar features.

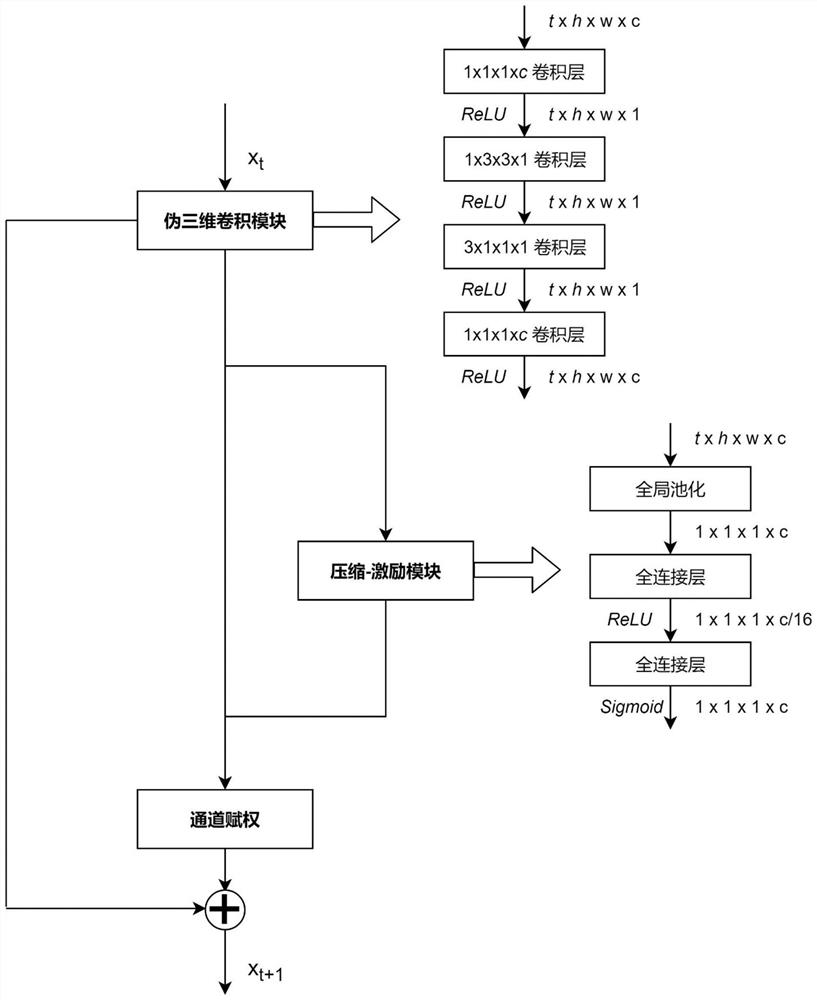

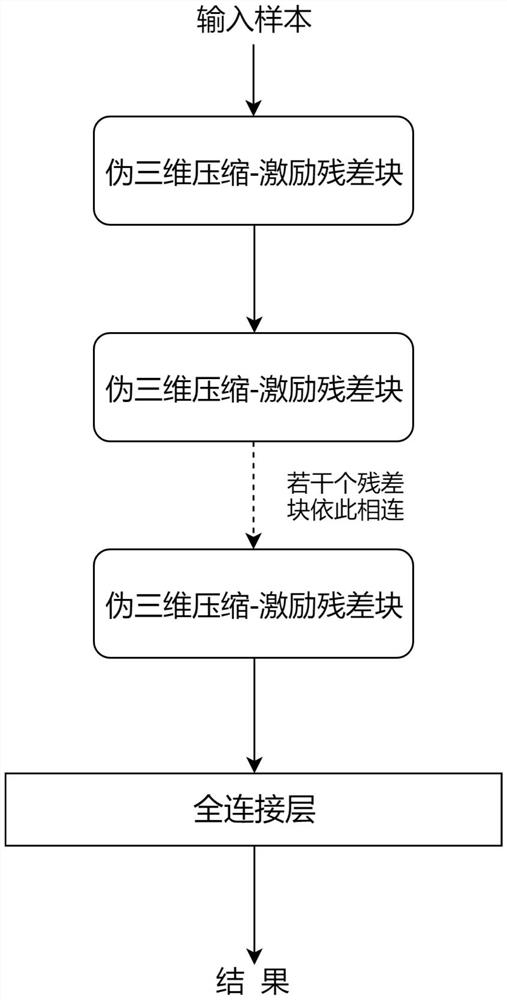

[0025] The video understanding method based on a compression-excitation pseudo-3D network proposed by the present invention is implemented with a pseudo-3D residual network that uses a compression-excitation mechanism, and comprises steps 1-3:

[0026] Step 1: preprocess the original video and input it into the network.

[0027] (1.1) Each training video in the training data is divided into several 4-second long segme...
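
The segmentation in step (1.1) can be illustrated with a minimal Python sketch. It is not the patent's implementation: the function name `split_into_segments`, the frame-index representation, and the choice to drop a trailing segment shorter than 4 seconds are all assumptions made here for illustration, since the source text is truncated before it says how segments are handled further.

```python
def split_into_segments(num_frames, fps, segment_seconds=4.0):
    """Return (start, end) frame-index pairs (end exclusive) for
    consecutive fixed-length segments of a video.

    Assumption: a trailing remainder shorter than one full segment is
    dropped. The source does not say how such a remainder is treated.
    """
    if num_frames < 0 or fps <= 0 or segment_seconds <= 0:
        raise ValueError("num_frames must be >= 0; fps and segment_seconds must be > 0")

    # Number of frames covered by one segment at this frame rate.
    frames_per_segment = int(round(fps * segment_seconds))
    if frames_per_segment == 0:
        raise ValueError("segment is shorter than one frame at this frame rate")

    # Step through the video one full segment at a time.
    last_start = num_frames - frames_per_segment
    return [
        (start, start + frames_per_segment)
        for start in range(0, last_start + 1, frames_per_segment)
    ]


# Hypothetical usage: a 10-second clip at 25 frames per second.
segments = split_into_segments(num_frames=250, fps=25)
```

For the hypothetical 250-frame clip, each segment spans 100 frames, so two full segments are produced and the final 50 frames are left out under the stated assumption.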
