Backdoor attack method for video analysis neural network models

A backdoor attack technique for video analysis neural network models, applied in the field of neural network security. It addresses problems such as interference from the model that lowers the success rate of backdoor attacks on neural networks, and achieves a higher attack success rate, strong practical operability, and reduced interference with the backdoor.

Embodiment Construction

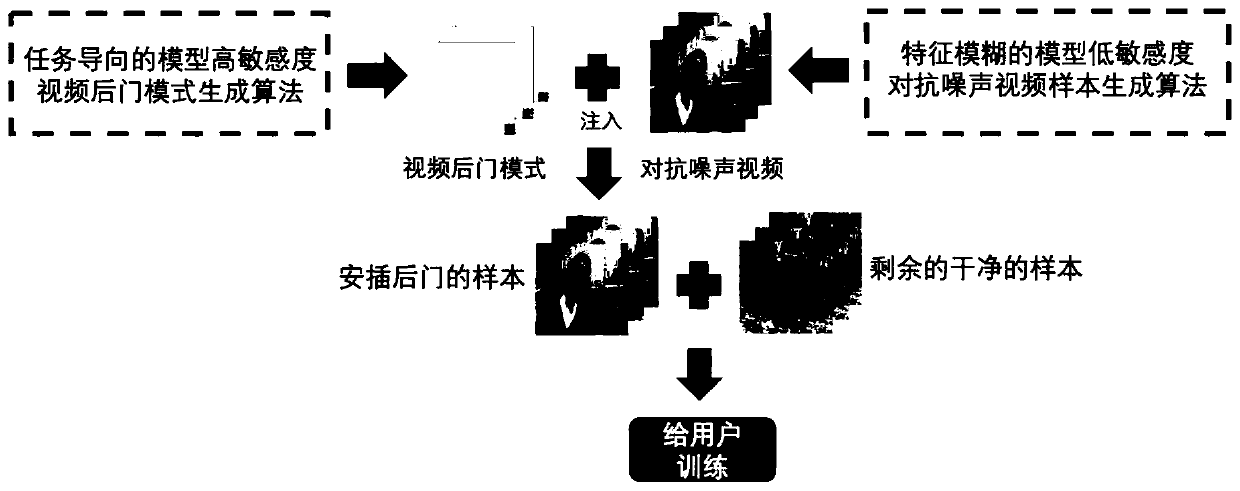

[0048] Step 1: Pre-train a clean model. Given the dataset D used by the attacker and the neural network model structure NN, first train NN normally on D to obtain a clean, well-performing model M that achieves normal prediction accuracy on clean data. (Because different model structures are trained in different ways, the conventional training method for the given structure should be used.)
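Step 1 only requires that M be trained normally and reach normal accuracy on clean data. The sketch below illustrates that requirement with toy stand-ins, all of which are assumptions rather than the patent's setup: D is replaced by synthetic grayscale clips with pixel values in [0, 1] (hypothetical `make_toy_dataset`), and NN is replaced by a one-layer softmax-regression classifier trained with full-batch gradient descent (hypothetical `train_clean_model`). A real video model would use its own conventional training recipe.

```python
import numpy as np


def make_toy_dataset(n_per_class=50, frames=4, height=8, width=8, seed=0):
    """Hypothetical stand-in for the attacker's dataset D: clips of shape (T, H, W) in [0, 1].
    Class 0 clips are dark (around 0.2) and class 1 clips are bright (around 0.8)."""
    rng = np.random.default_rng(seed)
    clips, labels = [], []
    for label, level in enumerate((0.2, 0.8)):
        noise = 0.05 * rng.standard_normal((n_per_class, frames, height, width))
        clips.append(np.clip(level + noise, 0.0, 1.0))
        labels.append(np.full(n_per_class, label))
    return np.concatenate(clips), np.concatenate(labels)


def train_clean_model(clips, labels, num_classes=2, epochs=200):
    """Train a softmax-regression classifier (a one-layer stand-in for NN) on clean clips."""
    n = clips.shape[0]
    feats = clips.reshape(n, -1)
    mean, std = feats.mean(axis=0), feats.std(axis=0) + 1e-8
    z = (feats - mean) / std                # standardize every pixel feature
    lr = 1.0 / z.shape[1]                   # step size scaled by feature count for stability
    W = np.zeros((z.shape[1], num_classes))
    b = np.zeros(num_classes)
    onehot = np.eye(num_classes)[labels]
    for _ in range(epochs):
        logits = z @ W + b
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        grad = (probs - onehot) / n                   # gradient of mean cross-entropy w.r.t. logits
        W -= lr * z.T @ grad
        b -= lr * grad.sum(axis=0)
    return {"W": W, "b": b, "mean": mean, "std": std}


def predict(model, clips):
    """Predicted class index for each clip."""
    z = (clips.reshape(clips.shape[0], -1) - model["mean"]) / model["std"]
    return np.argmax(z @ model["W"] + model["b"], axis=1)


def clean_accuracy(model, clips, labels):
    """Fraction of clean clips classified correctly."""
    return float(np.mean(predict(model, clips) == labels))
```

Training on one draw of the toy data (`seed=0`) and evaluating `clean_accuracy` on a held-out draw (`seed=1`) is the toy analogue of checking that M predicts normally on clean samples before any backdoor is introduced.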

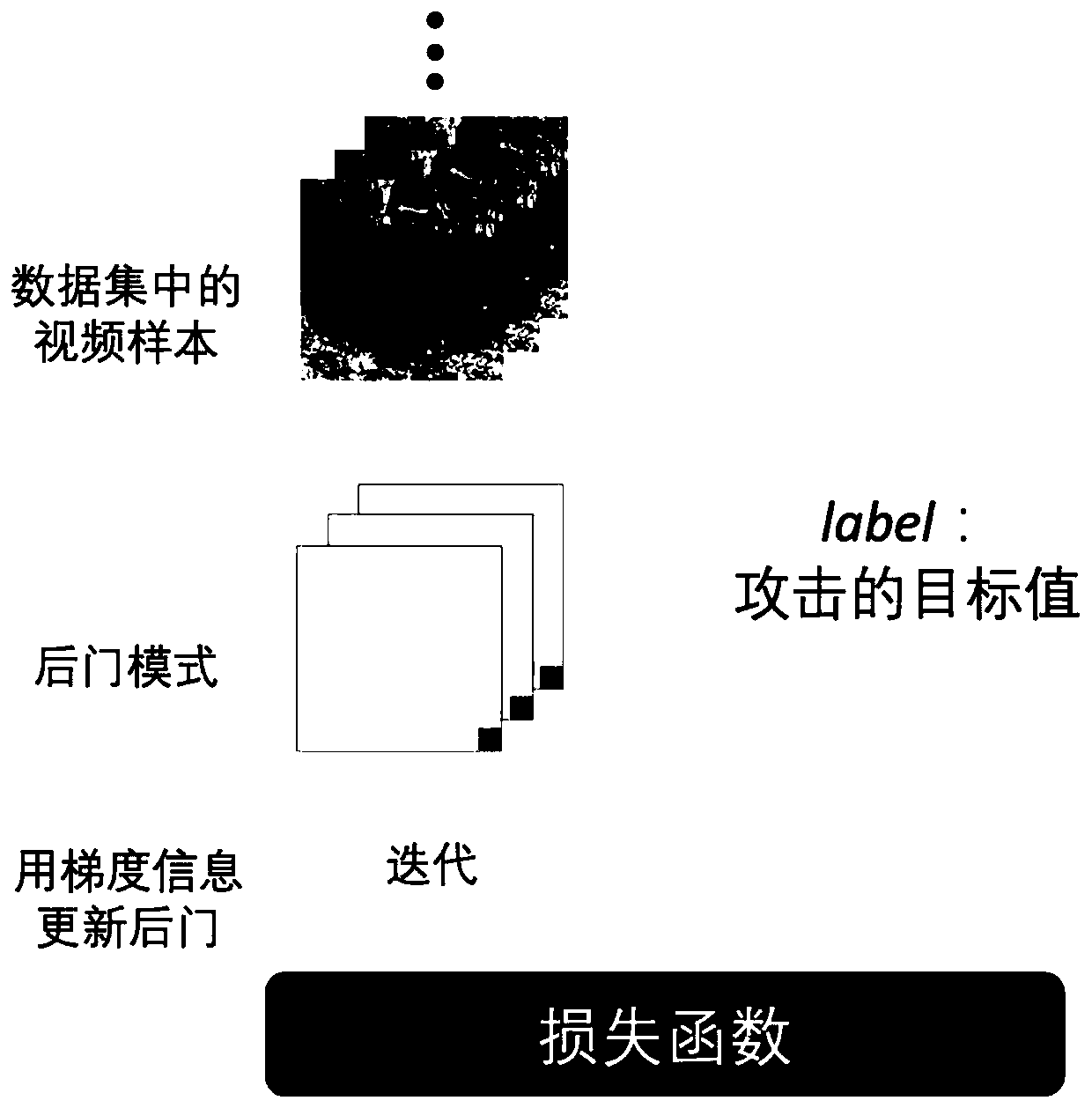

[0049] Step 2: Initialize the backdoor pattern. Specify the size, shape, and position of the backdoor in the video frame, i.e., the mask of the backdoor pattern, mask. Then give every pixel in the backdoor region a valid initial value using random initialization, constant initialization, Gaussian-distribution initialization, uniform-distribution initialization, or a similar method, to obtain the initial backdoor pattern trigger.
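A minimal sketch of Step 2, under assumptions not stated in the source: frames are single-channel with pixel values in [0, 1] (so a "valid" value is anything in that range), the backdoor region is a square, and the same mask is shared by every frame. The helper names `make_mask` and `init_trigger` and the Gaussian parameters (mean 0.5, standard deviation 0.1) are hypothetical; only the names mask and trigger come from the text.

```python
import numpy as np


def make_mask(frame_h, frame_w, size, top, left):
    """Binary mask of shape (H, W): 1 inside a size x size square whose top-left corner is (top, left)."""
    if top < 0 or left < 0 or top + size > frame_h or left + size > frame_w:
        raise ValueError("backdoor region must lie inside the frame")
    mask = np.zeros((frame_h, frame_w), dtype=np.float32)
    mask[top:top + size, left:left + size] = 1.0
    return mask


def init_trigger(mask, method="uniform", value=0.5, seed=0):
    """Initial backdoor pattern: valid pixel values inside the mask, zeros outside it."""
    rng = np.random.default_rng(seed)
    if method == "constant":
        pattern = np.full(mask.shape, value, dtype=np.float32)
    elif method == "gaussian":
        pattern = rng.normal(loc=0.5, scale=0.1, size=mask.shape)  # assumed mean/std
    elif method == "uniform":
        pattern = rng.uniform(0.0, 1.0, size=mask.shape)
    else:
        raise ValueError(f"unknown initialization method: {method}")
    # Clip to the assumed legal pixel range [0, 1] and keep only the masked region.
    return (np.clip(pattern, 0.0, 1.0) * mask).astype(np.float32)
```

For example, `init_trigger(make_mask(8, 8, size=3, top=5, left=5), "constant", value=0.7)` yields a 3 x 3 patch of value 0.7 in the bottom-right corner of an 8 x 8 frame. Clipping matters mainly for the Gaussian case, whose samples can fall outside [0, 1].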

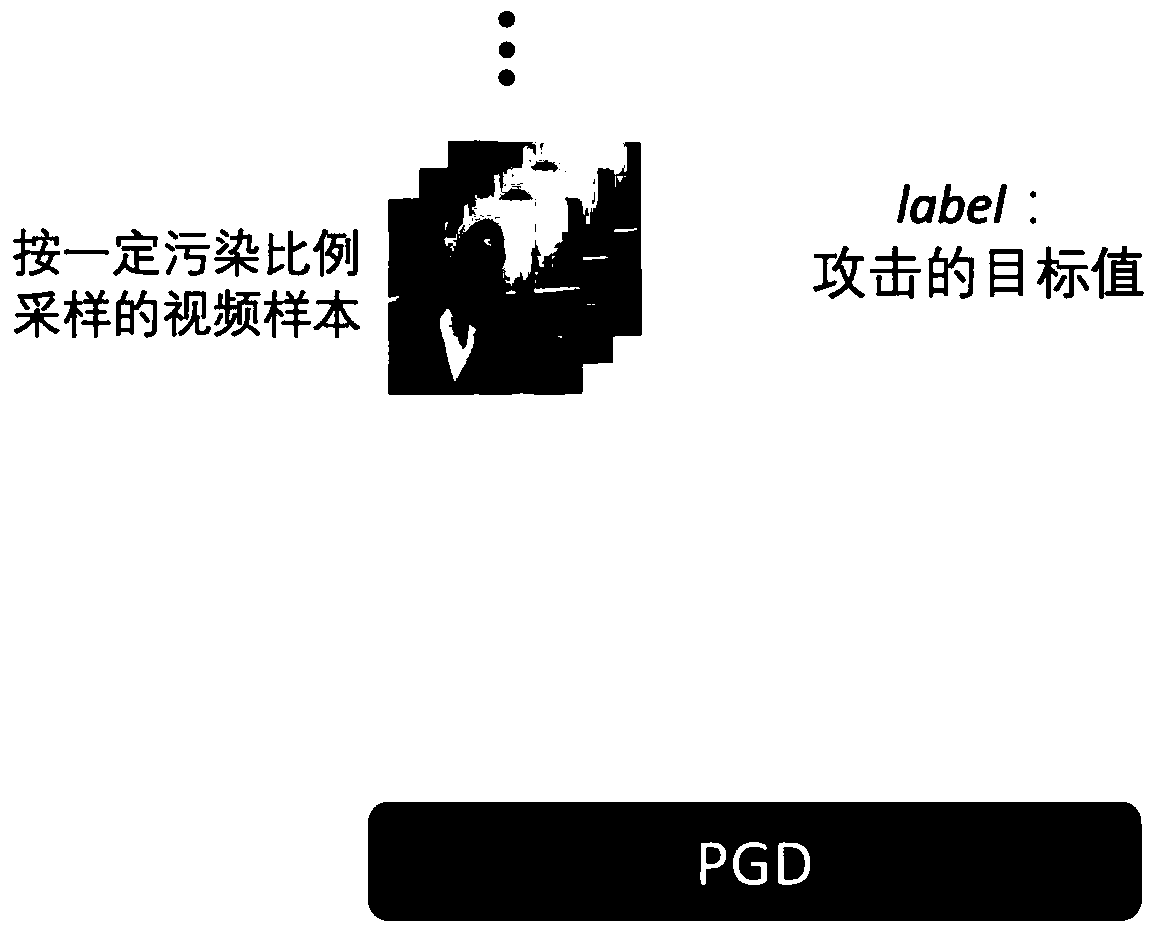

[0050] Step 3: Insert the backdoor pattern into the original video. Take out the video samples in the dat...
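Because the description of Step 3 is truncated, the exact insertion rule is not available here. The sketch below assumes the common mask-blending rule, x' = (1 - mask) * x + mask * trigger, applied to selected frames of a clip of shape (T, H, W); both the rule and the hypothetical helper `insert_trigger` are assumptions rather than the patent's stated procedure. Pixels inside the mask are replaced by the trigger and all other pixels are left unchanged.

```python
import numpy as np


def insert_trigger(clip, mask, trigger, frame_indices=None):
    """Return a copy of `clip` (T, H, W) with `trigger` blended in through `mask`.

    frame_indices: frames to poison; None means every frame (an assumption).
    """
    if clip.shape[1:] != mask.shape or mask.shape != trigger.shape:
        raise ValueError("clip frames, mask and trigger must share the same H x W")
    poisoned = clip.astype(np.float32, copy=True)  # never modify the original sample
    indices = range(clip.shape[0]) if frame_indices is None else frame_indices
    for t in indices:
        # Inside the mask take the trigger pixel; outside it keep the original pixel.
        poisoned[t] = (1.0 - mask) * poisoned[t] + mask * trigger
    return poisoned
```

Returning a copy keeps the clean sample available, which is convenient when clean and trigger-stamped versions of the same clip are both needed, for instance to compare the model's predictions on each.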


© 2025 PatSnap. All rights reserved.