Monocular-view 3D object detection method based on a convolutional neural network

A convolutional-neural-network object detection technique, applied to 3D object detection from a monocular view, that addresses the missing depth information of a single image and achieves high resolution and high accuracy.

Embodiment Construction

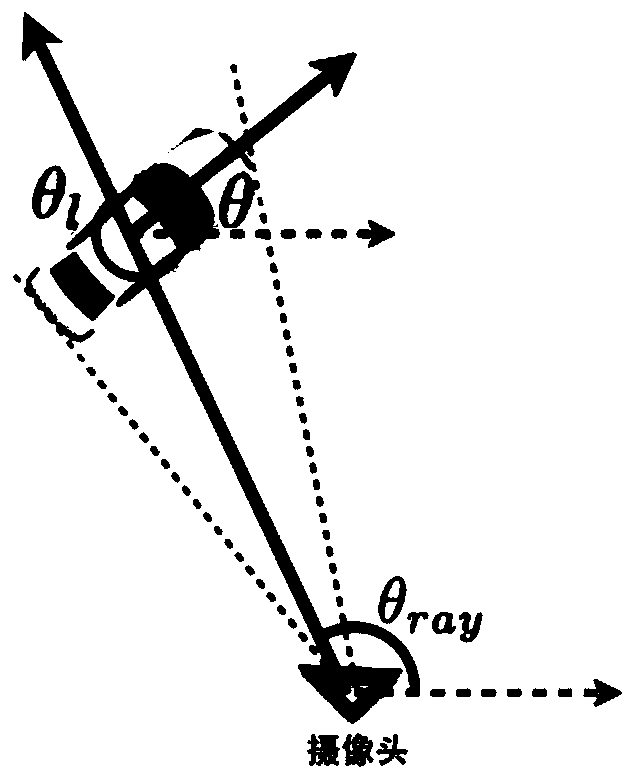

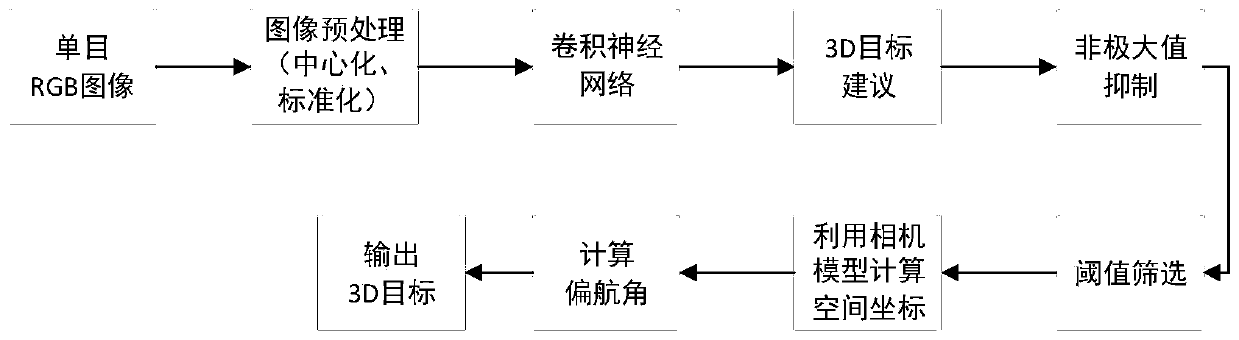

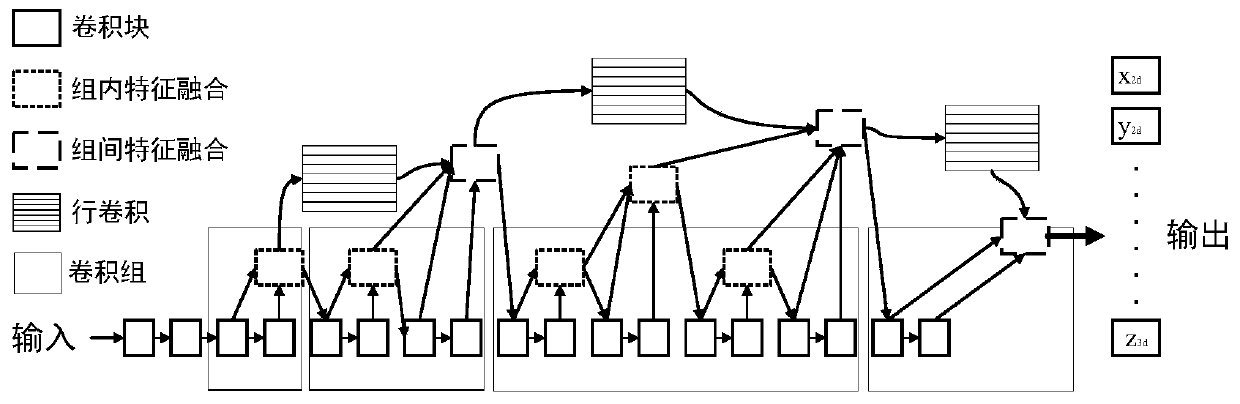

[0049] The method of the present invention will be further described below in conjunction with the accompanying drawings.

[0050] As shown in Figure 2, the monocular-view 3D object detection method based on a convolutional neural network is implemented by the following steps:

[0051] Step (1). The input image is a monocular view captured by a vehicle-mounted camera.

[0052] Step (2). The samples are divided into a training set and a test set. The training-set samples are fed into the convolutional neural network, which is trained by backpropagation. The test-set samples are used to evaluate the generalization ability of the model.
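A minimal sketch of the split described in Step (2). The source does not state the split ratio or how samples are assigned, so the 80/20 ratio, the seeded shuffle, the helper name `split_samples`, and the `frame_XXXX` identifiers are all assumptions made for illustration.

```python
import random


def split_samples(samples, test_fraction=0.2, seed=0):
    """Shuffle the samples and return (training set, test set).

    test_fraction and seed are illustrative assumptions, not values
    taken from the patent text.
    """
    items = list(samples)
    random.Random(seed).shuffle(items)  # seeded, so the split is reproducible
    n_test = int(round(len(items) * test_fraction))
    return items[n_test:], items[:n_test]


# Hypothetical sample identifiers standing in for camera frames.
sample_ids = [f"frame_{i:04d}" for i in range(10)]
train_set, test_set = split_samples(sample_ids)
```

Only `train_set` would be passed to the backpropagation training loop; `test_set` is held out and used solely to measure generalization.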

[0053] Step (3). Center and standardize the R, G, and B channels of the input image: subtract the mean computed over the training set, then divide by the standard deviation computed over the training set:

[0054] $X' = X - X_{mean}$

[0055] $X_s = X' / X_{std}$

[0056] Here, $X$ is the image to be preprocessed, $X_{mean}$ is the mean ...
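The preprocessing in Step (3), subtracting the training-set mean and dividing by the training-set standard deviation, can be sketched with NumPy as follows. This assumes the statistics are one scalar per R, G, B channel and that images are stored as height × width × 3 arrays; the source does not spell out either detail. The function names, the synthetic data, and the small `eps` guard against division by zero are also assumptions, not part of the patent text.

```python
import numpy as np


def channel_stats(train_images):
    """Per-channel mean and standard deviation over a stack of
    training images with shape (N, H, W, 3)."""
    stack = np.asarray(train_images, dtype=np.float64)
    mean = stack.mean(axis=(0, 1, 2))  # shape (3,): one value per channel
    std = stack.std(axis=(0, 1, 2))    # shape (3,)
    return mean, std


def standardize(image, mean, std, eps=1e-8):
    """Center, then scale: X' = X - X_mean, X_s = X' / X_std.

    eps is an added safeguard (an assumption) for channels whose
    standard deviation is zero.
    """
    centered = np.asarray(image, dtype=np.float64) - mean
    return centered / (std + eps)


# Synthetic stand-in for a training set: 8 RGB images of 4 x 6 pixels.
rng = np.random.default_rng(0)
train = rng.integers(0, 256, size=(8, 4, 6, 3))
mean, std = channel_stats(train)
normalized = standardize(train[0], mean, std)
```

The same `mean` and `std` computed on the training set would be reused for test images, so that both sets pass through an identical transformation.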
