944 results about "3d space" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

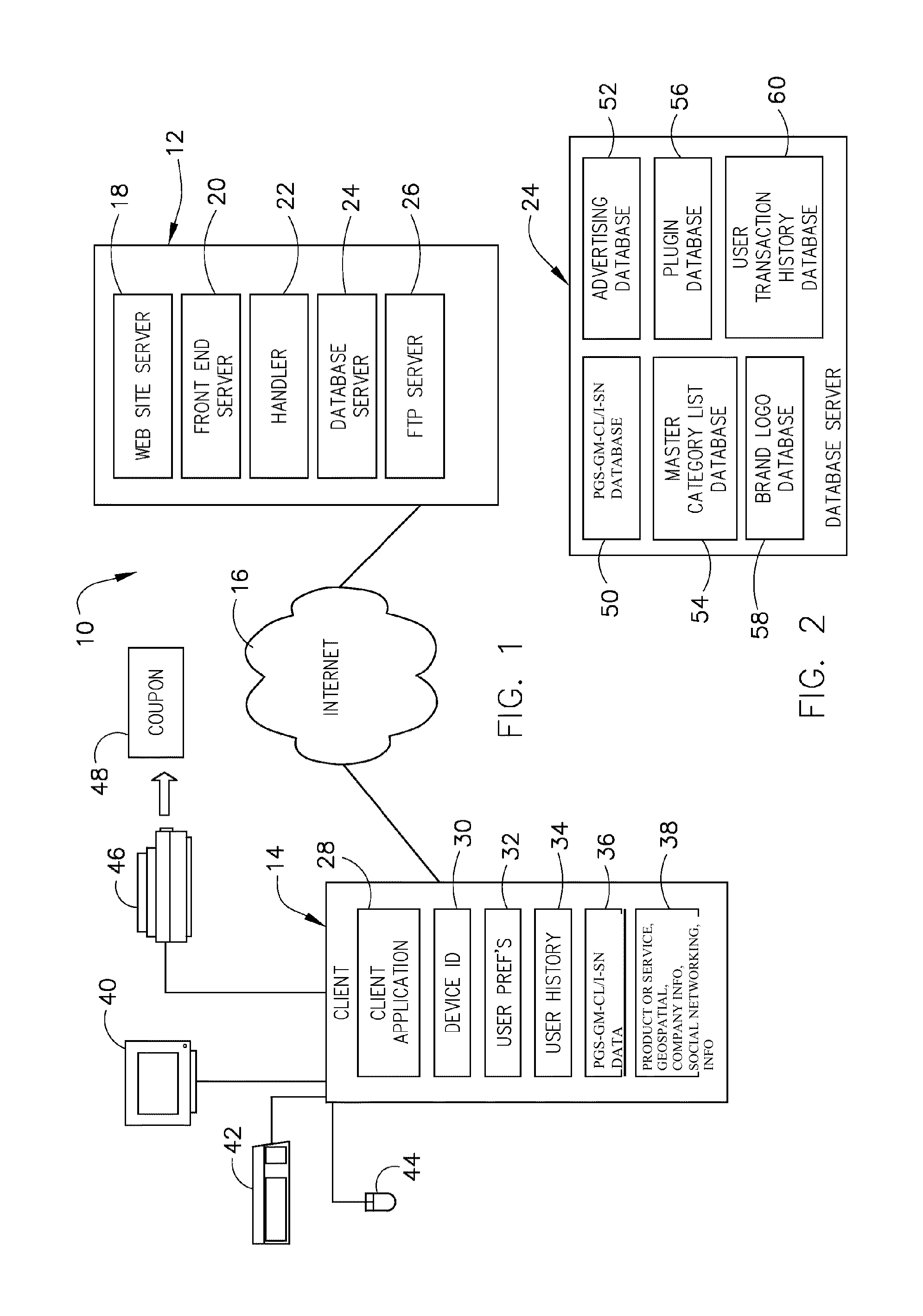

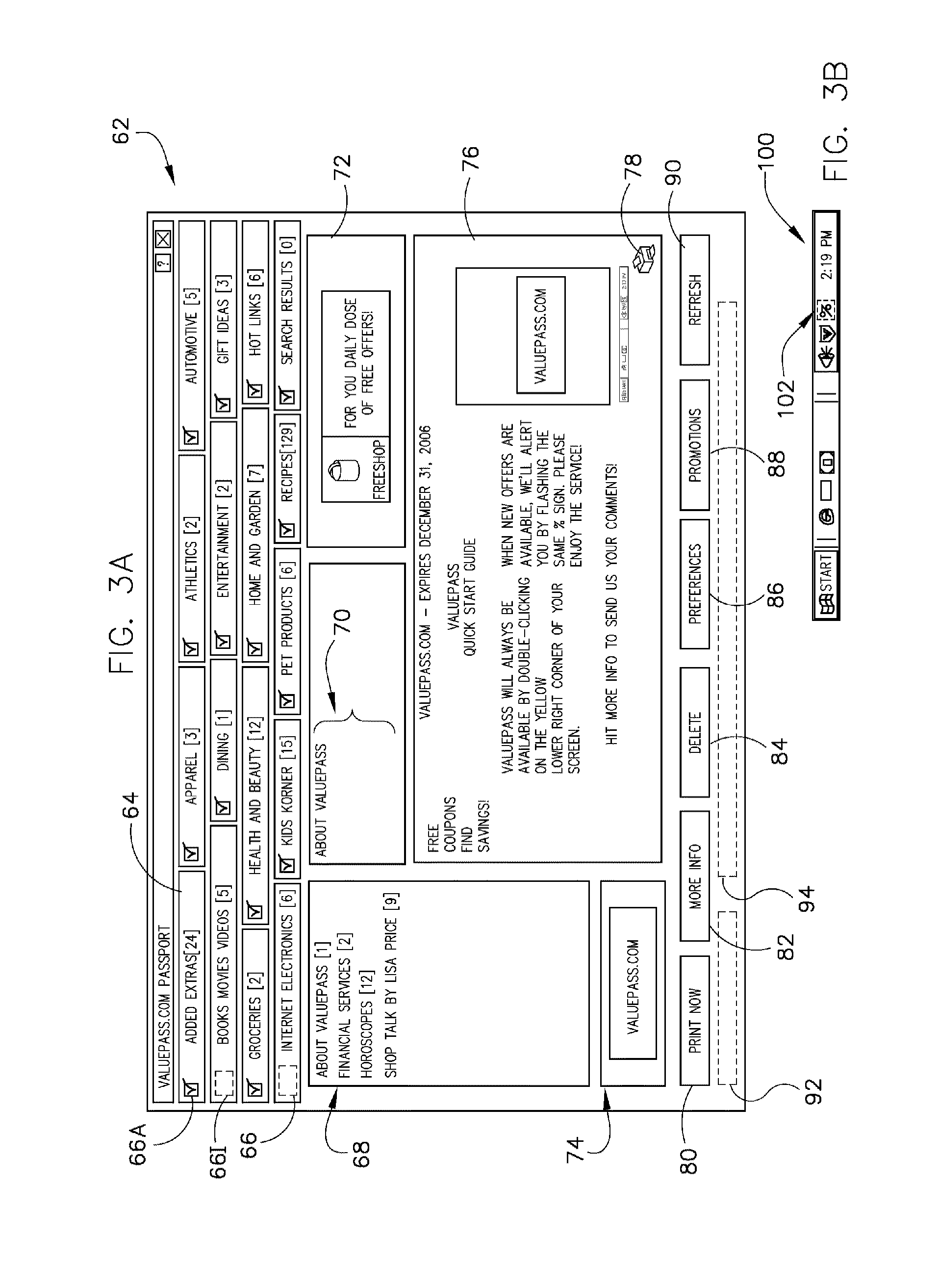

System and method for providing educational related social/geo/promo link promotional data sets for end user display of interactive ad links, promotions and sale of products, goods, and/or services integrated with 3D spatial geomapping, company and local information for selected worldwide locations and social networking

Inactive · US20130073387A1 · Exceptional educational tool; Efficient and economical; Marketing; Local information systems; Data set

A technique, method, apparatus, and system to provide education-related, integrated social networking; real-time geospatial mapping; geo-targeted, location-based technologies including GPS, GIS and multiple points of interest; receiving the current location of a user's electronic or mobile device and multiple points of interest; a cloud-type configuration that stores and handles user data across multiple enterprises; and generation of user behavior data and of ad-link and promotion (“social/geo/promo”) links on a website for education-related products, goods, and/or services. These include education-related social/geo/promo data sets for user-customized visual displays showing 3D map presentations, with correlated, related, broad or alternative categories of social/geo/promo links displayed with web page content for viewing and interaction by an end user.

Owner:HEATH STEPHAN

System and method for providing sports and sporting events related social/geo/promo link promotional data sets for end user display of interactive ad links, promotions and sale of products, goods, gambling and/or services integrated with 3D spatial geomapping, company and local information for selected worldwide locations and social networking

Inactive · US20130073389A1 · Efficient and economical; Cheaply obtain data; Multiple digital computer combinations; Marketing; Web site; Data set

A technique, method, apparatus, and system to provide integrated social networking related to sports and sporting events; real-time geospatial mapping; geo-targeted, location-based technologies including GPS, GIS and multiple points of interest; receiving the current location of a user's electronic or mobile device and multiple points of interest; a cloud-type configuration that stores and handles user data across multiple enterprises; and generation of user behavior data and of ad-link and promotion (“social/geo/promo”) links on a website for sports and sporting-event products, goods, gambling, and/or services. These include sports-related social/geo/promo data sets for user-customized visual displays showing 3D map presentations, with correlated, related, broad or alternative categories of social/geo/promo links displayed with web page content for viewing and interaction by an end user.

Owner:HEATH STEPHAN

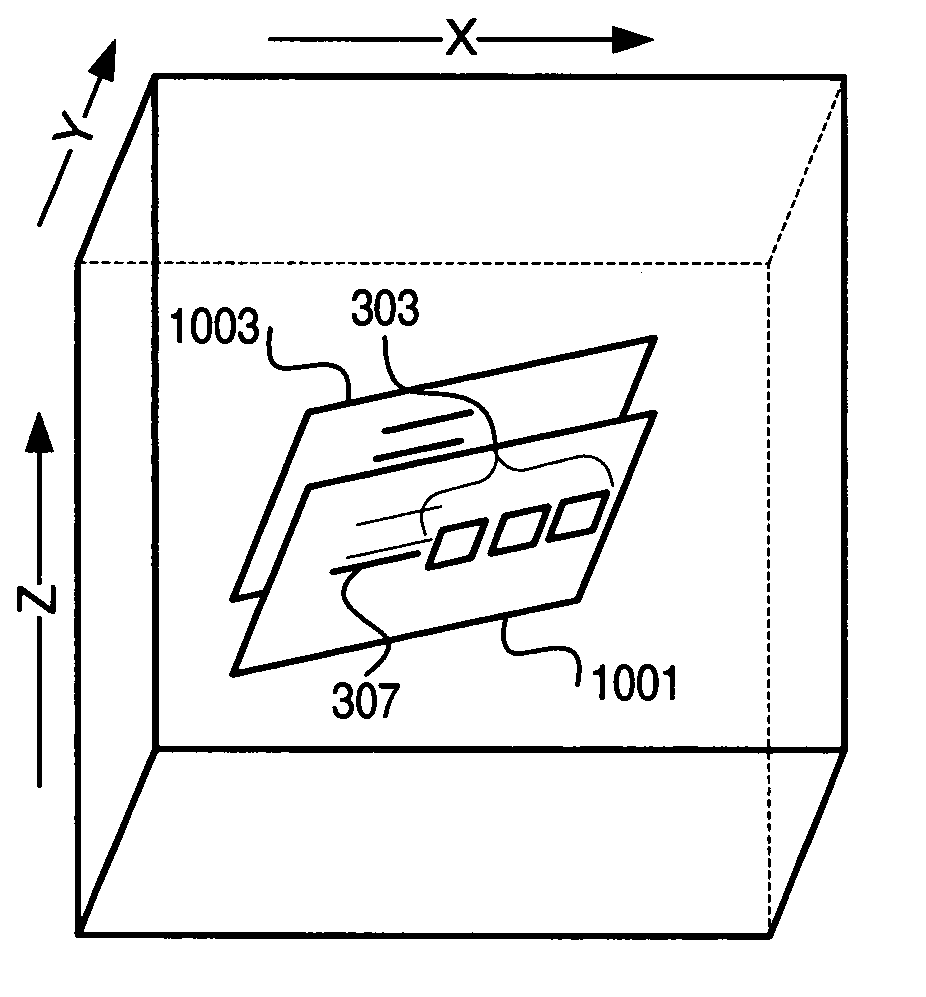

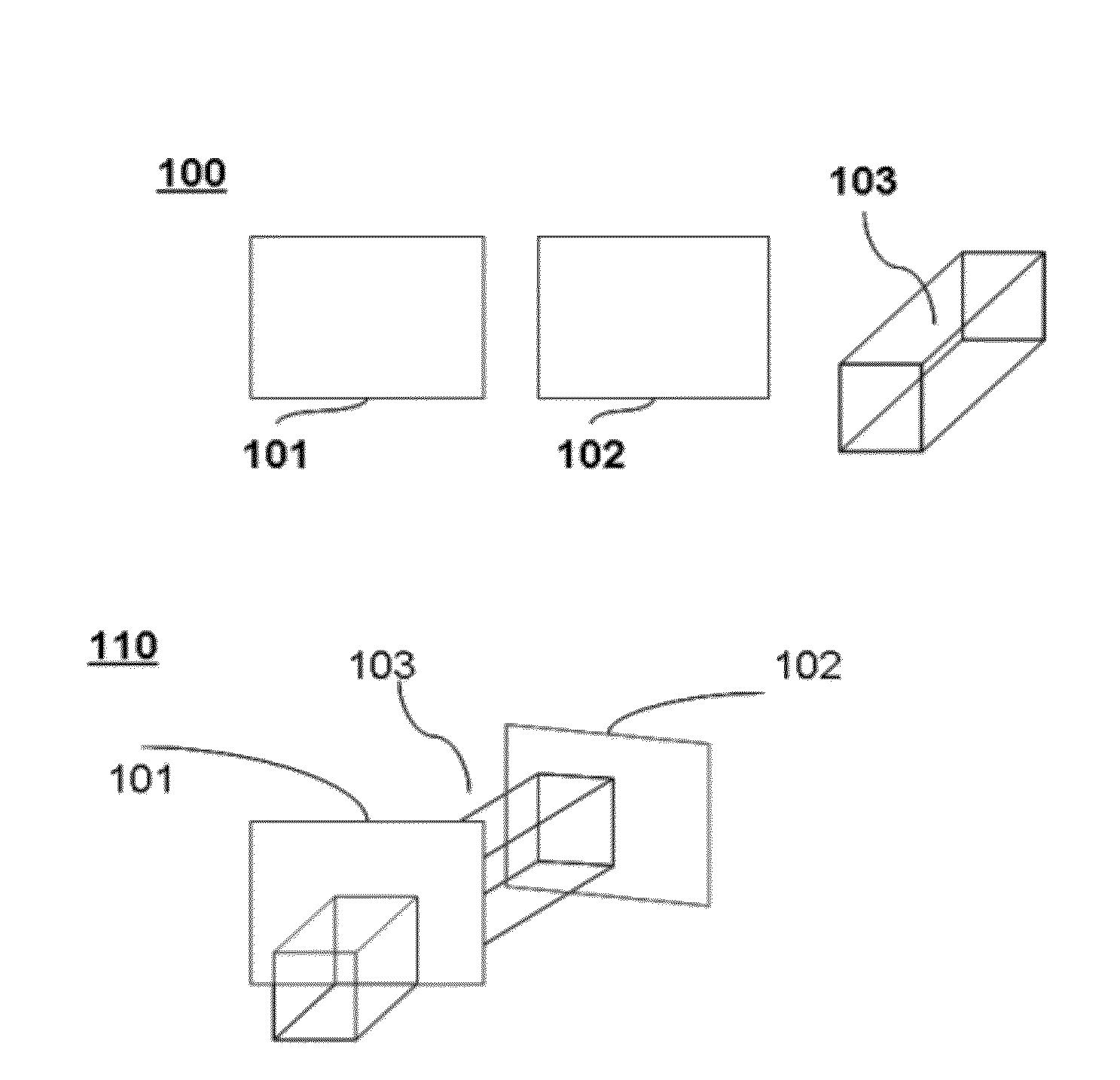

Multi-planar three-dimensional user interface

Owner:MICROSOFT TECH LICENSING LLC

Multi-planar three-dimensional user interface

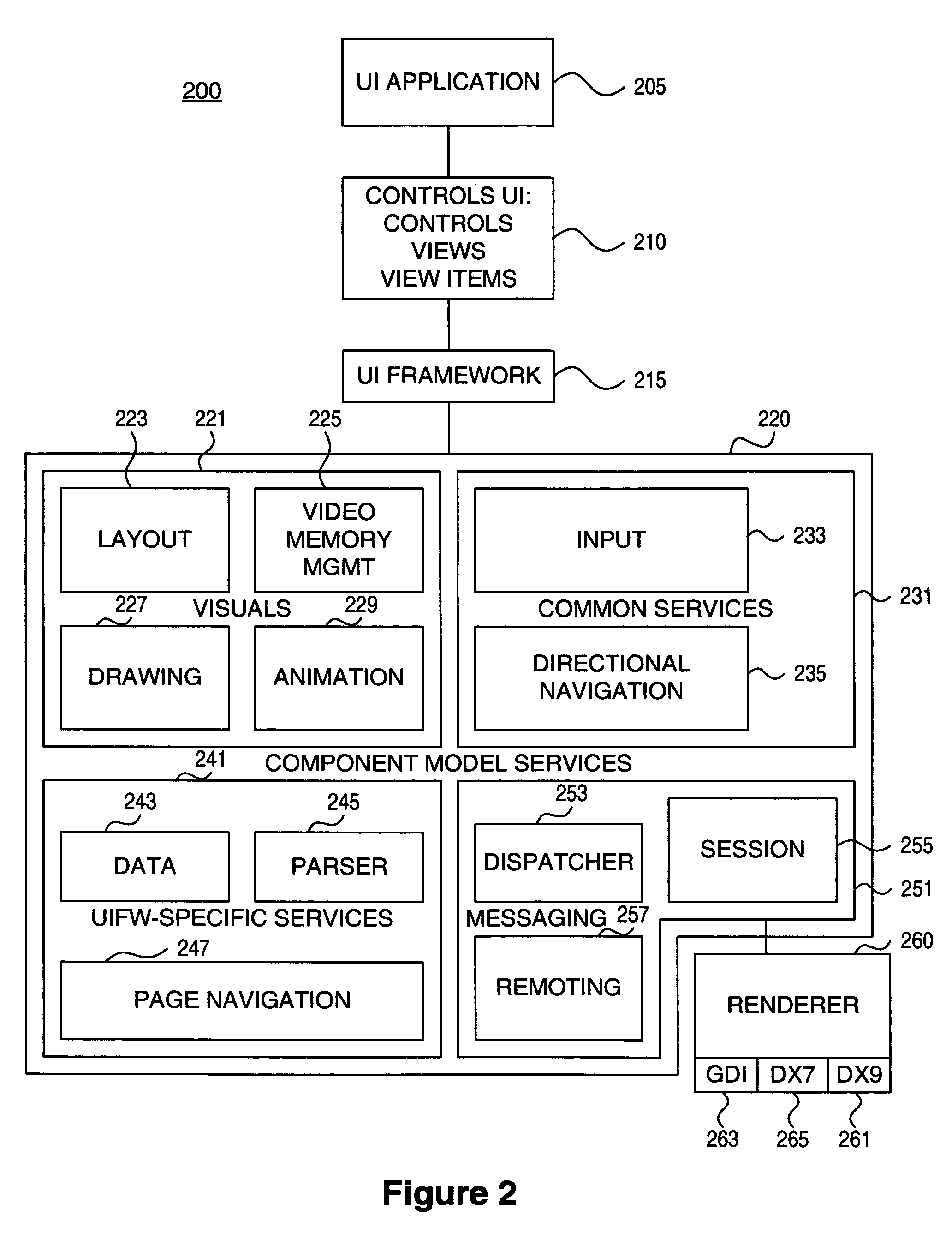

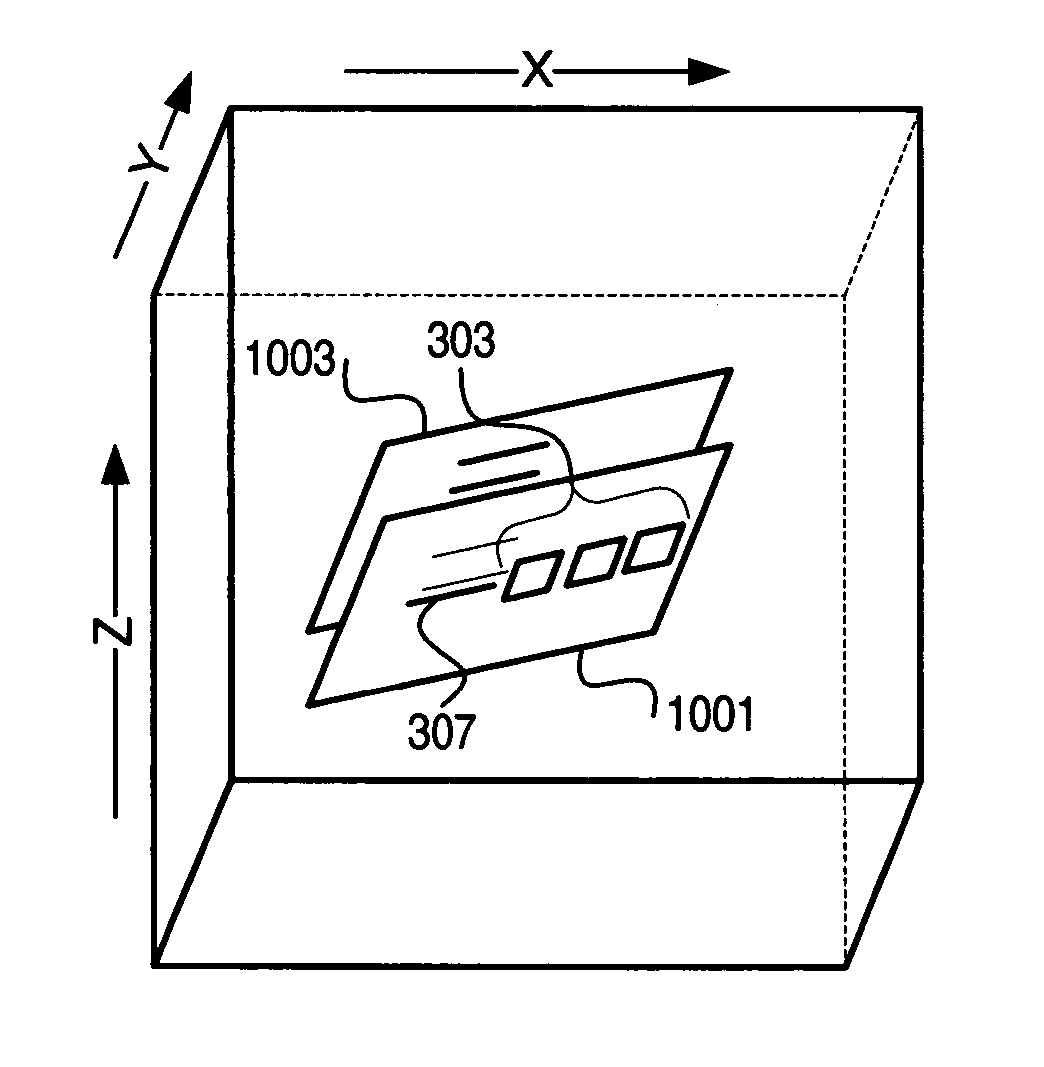

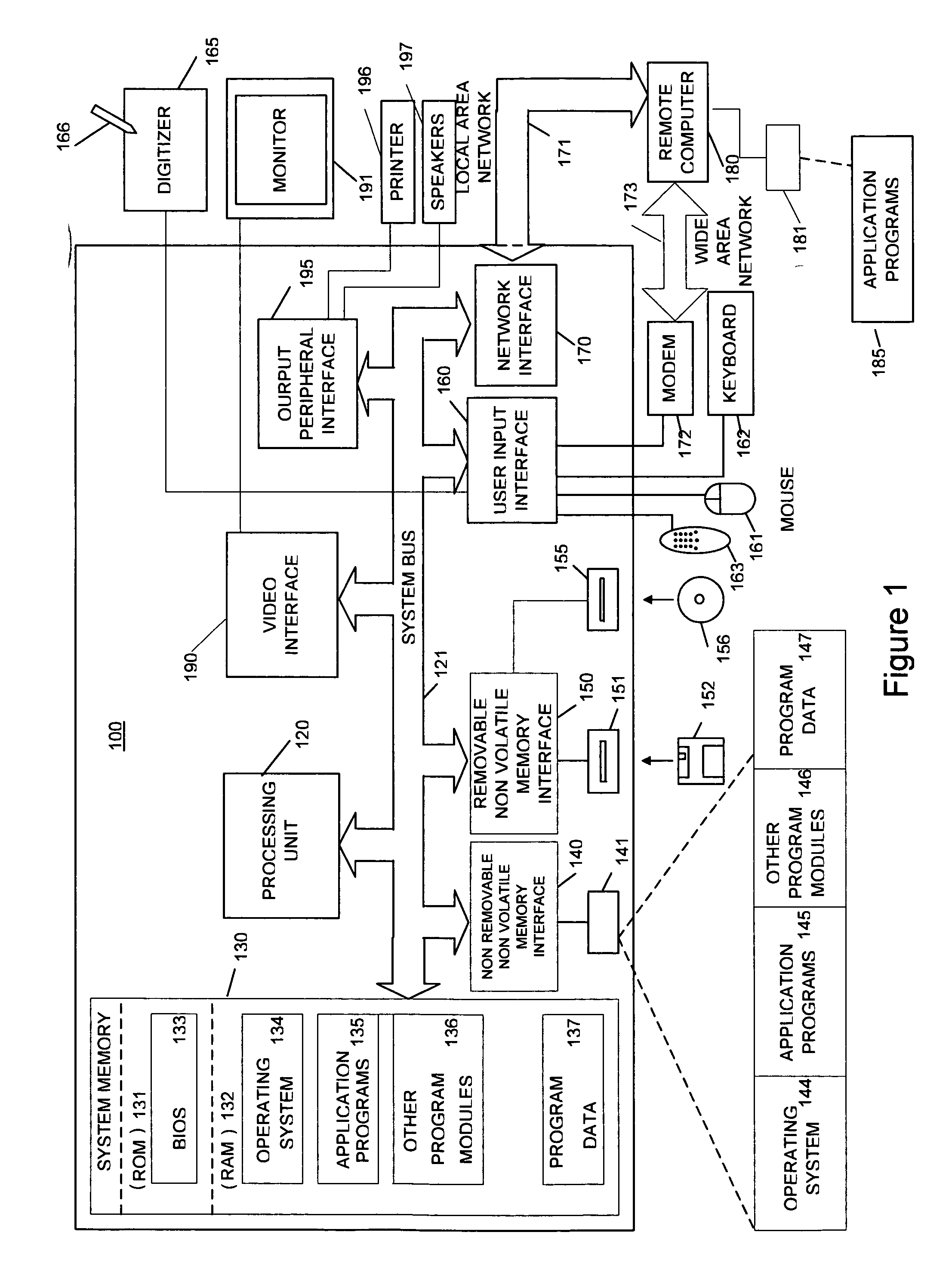

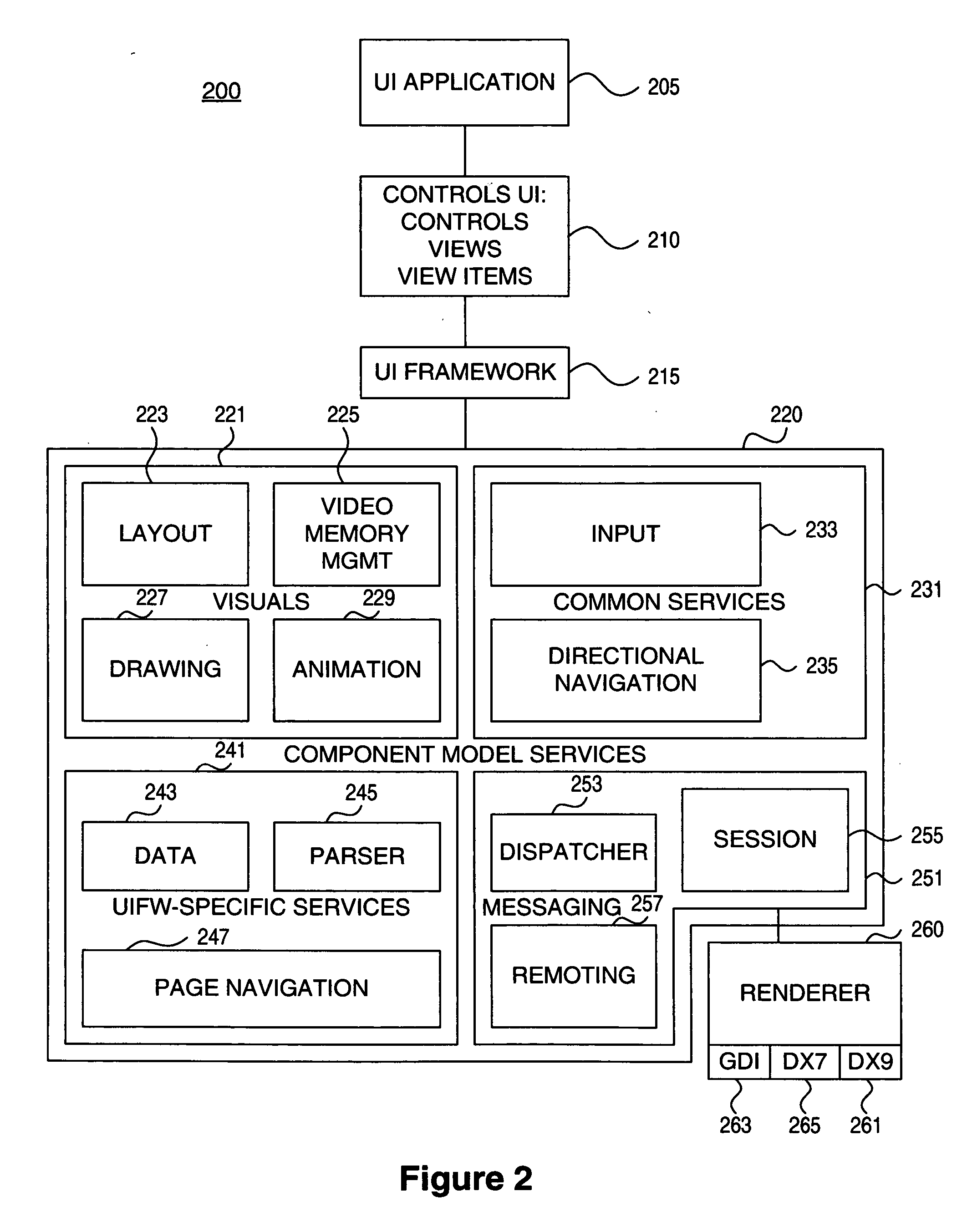

A 10-foot media user interface is described. A computer user interface may be designed primarily as a 10-foot user interface, in which the user controls the computer with a remote control device, rather than as a traditional 2-foot user interface, in which the user controls the computer with a keyboard and mouse from directly in front of the computer. The 10-foot user interface uses 3D space and animations to show the controlling user more clearly which items are being navigated and selected. Using three-dimensional space also increases the screen real estate available for content items, and lets the media user interface move unselected items out of the user's primary view. The user interface may animate movement in three dimensions so that the user can more easily follow, conceptually, their navigation through the interface.

Owner:MICROSOFT TECH LICENSING LLC

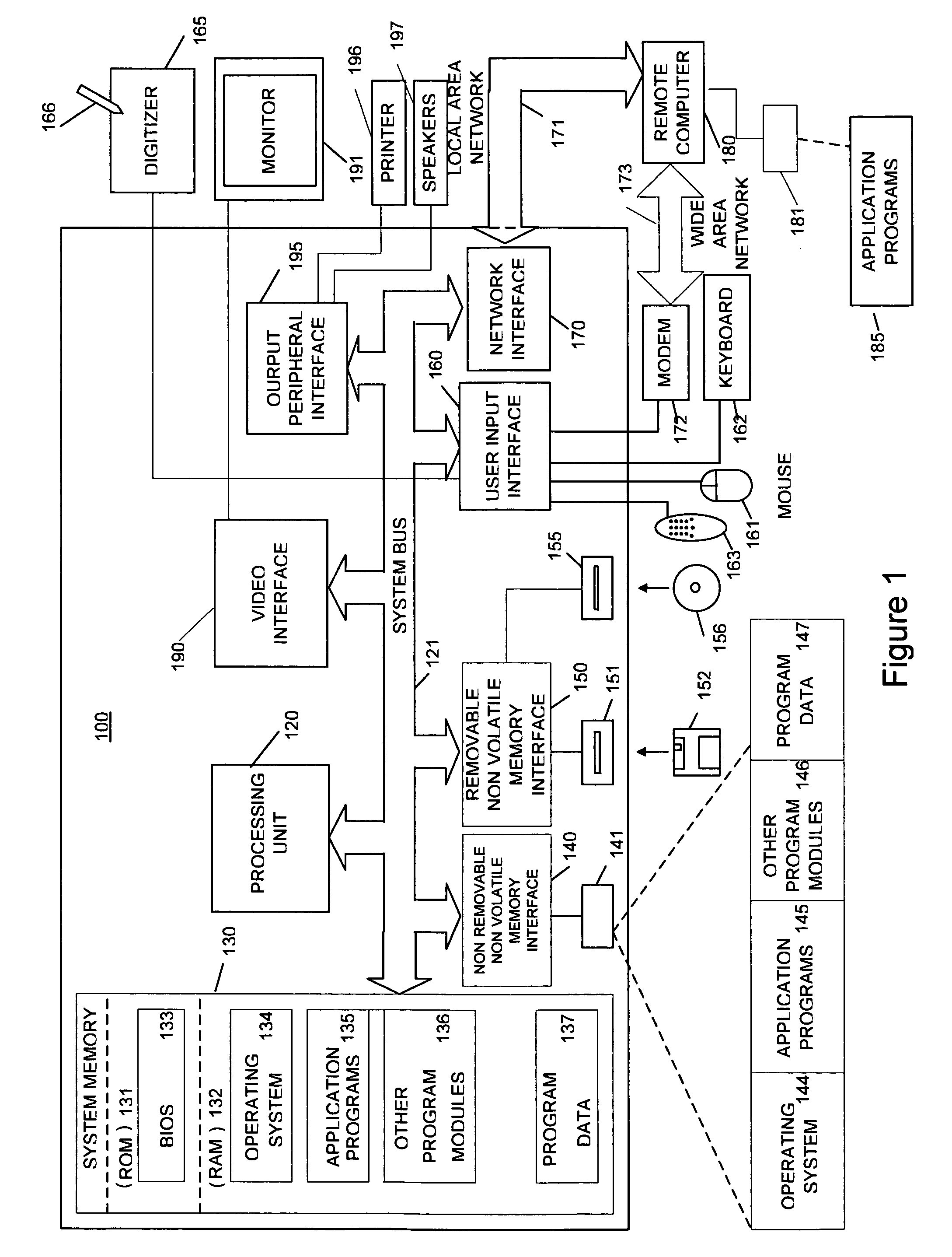

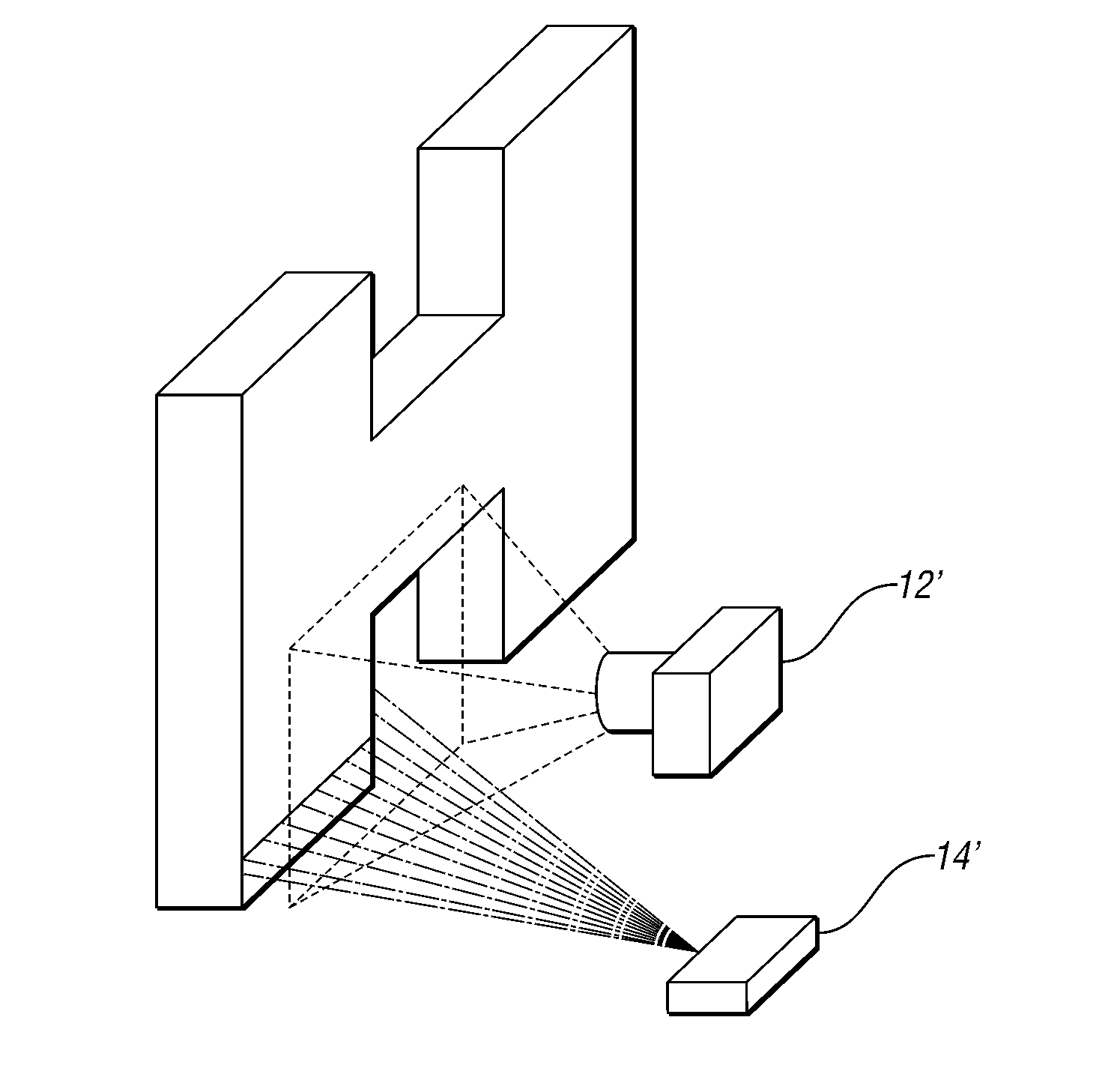

Systems and methods for 2D image and spatial data capture for 3D stereo imaging

Inactive · US20110222757A1 · Facilitate post-production; Large separation; Image enhancement; Image analysis; Virtual camera; Movie camera

Systems and methods for 2D image and spatial data capture for 3D stereo imaging are disclosed. The system uses a cinematography camera and at least one reference, or “witness”, camera spaced apart from the cinematography camera at a distance much greater than the interocular separation, to capture 2D images over an overlapping volume associated with a scene having one or more objects. The captured image data is post-processed to create a depth map, and a point cloud is created from the depth map. The robustness of the depth map and the point cloud allows dual virtual cameras to be placed substantially arbitrarily in the resulting virtual 3D space, which greatly simplifies the addition of computer-generated graphics, animation and other special effects in cinematographic post-processing.

Owner:SHAPEQUEST
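
The depth-map-to-point-cloud step described in the abstract can be illustrated with a minimal Python sketch. It assumes an ideal pinhole camera with hypothetical intrinsics `fx`, `fy`, `cx`, `cy` (the abstract gives none) and a depth map stored as rows of depths in which 0 means "no estimate". It is not the patent's witness-camera pipeline.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]

def depth_map_to_point_cloud(depth: List[List[float]], fx: float, fy: float,
                             cx: float, cy: float) -> List[Point3D]:
    """Back-project each valid depth pixel through an assumed pinhole model."""
    points: List[Point3D] = []
    for v, row in enumerate(depth):          # v: row index (image y)
        for u, z in enumerate(row):          # u: column index (image x)
            if z <= 0.0:                     # skip pixels with no depth estimate
                continue
            x = (u - cx) * z / fx            # invert u = fx * x / z + cx
            y = (v - cy) * z / fy            # invert v = fy * y / z + cy
            points.append((x, y, z))
    return points
```

Each valid pixel becomes one camera-frame 3D point; the resulting cloud is what virtual cameras would later be placed around.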

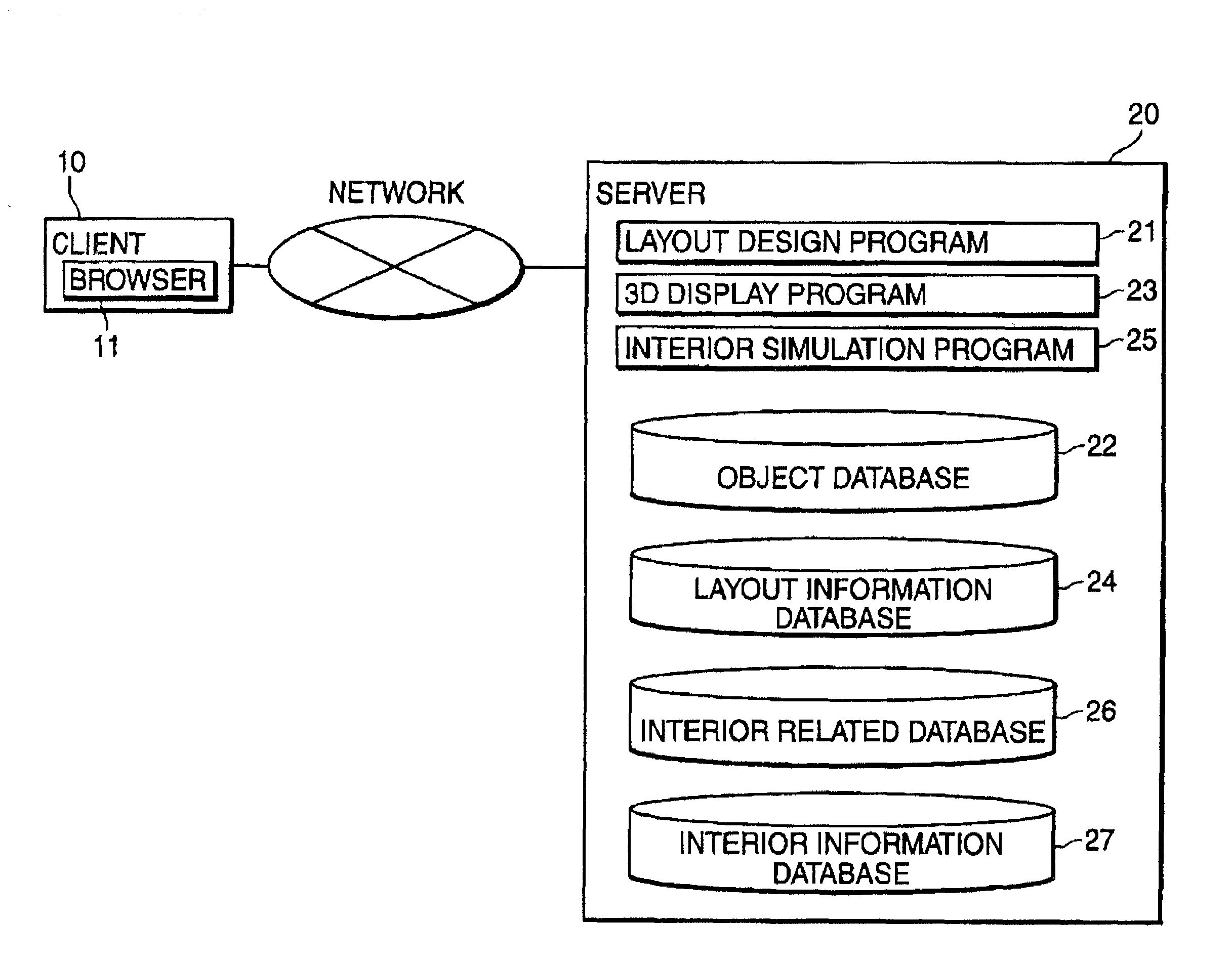

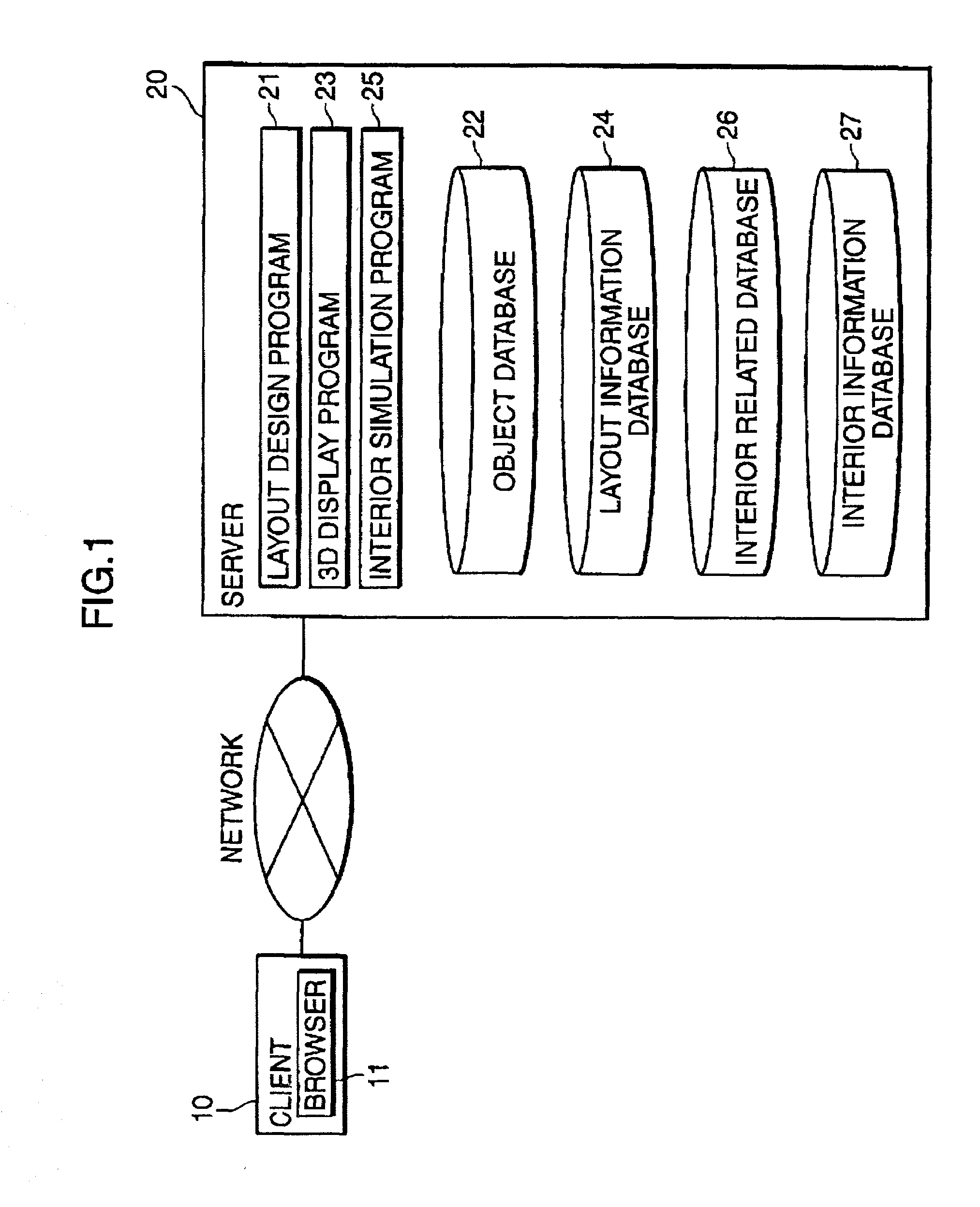

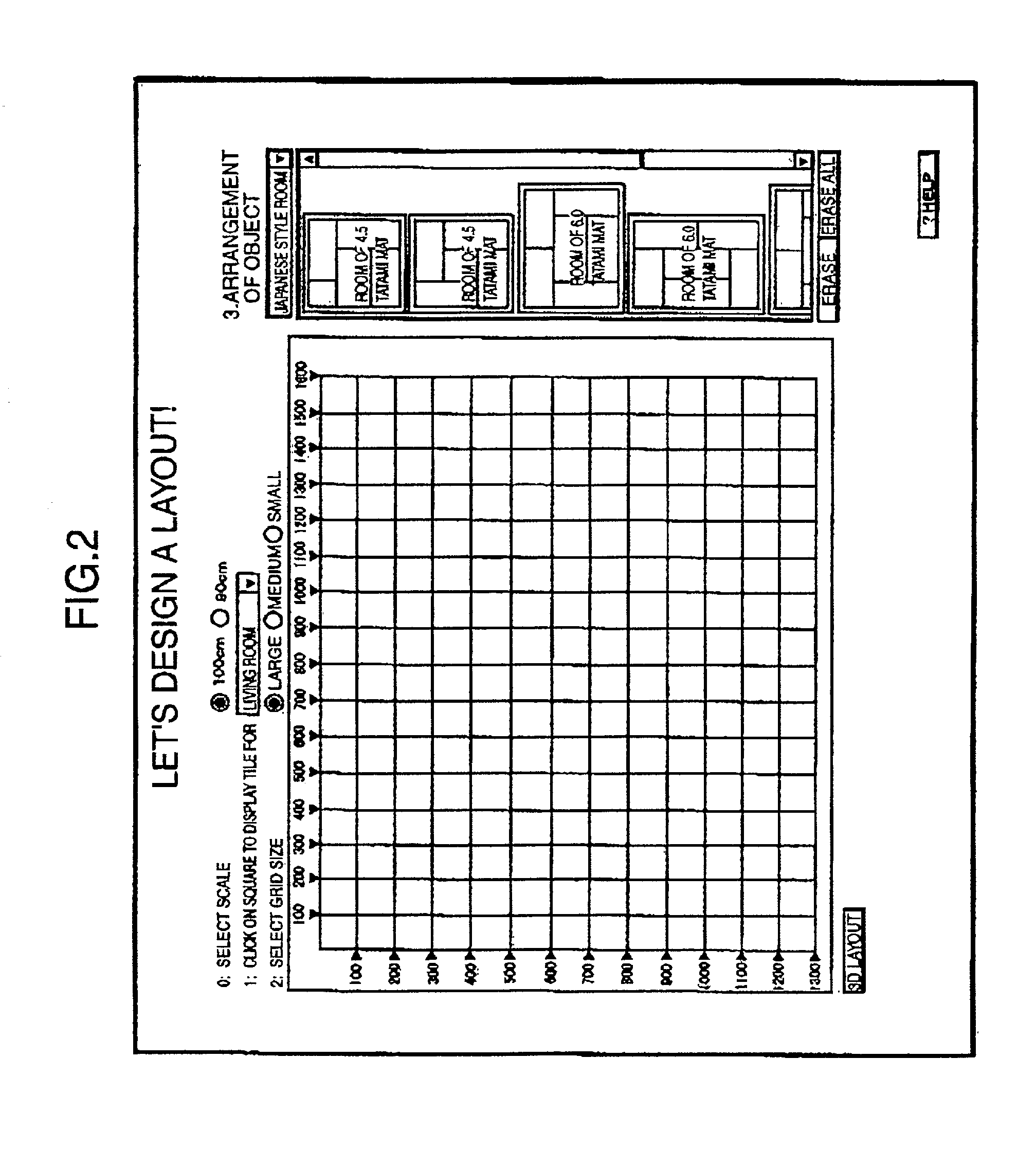

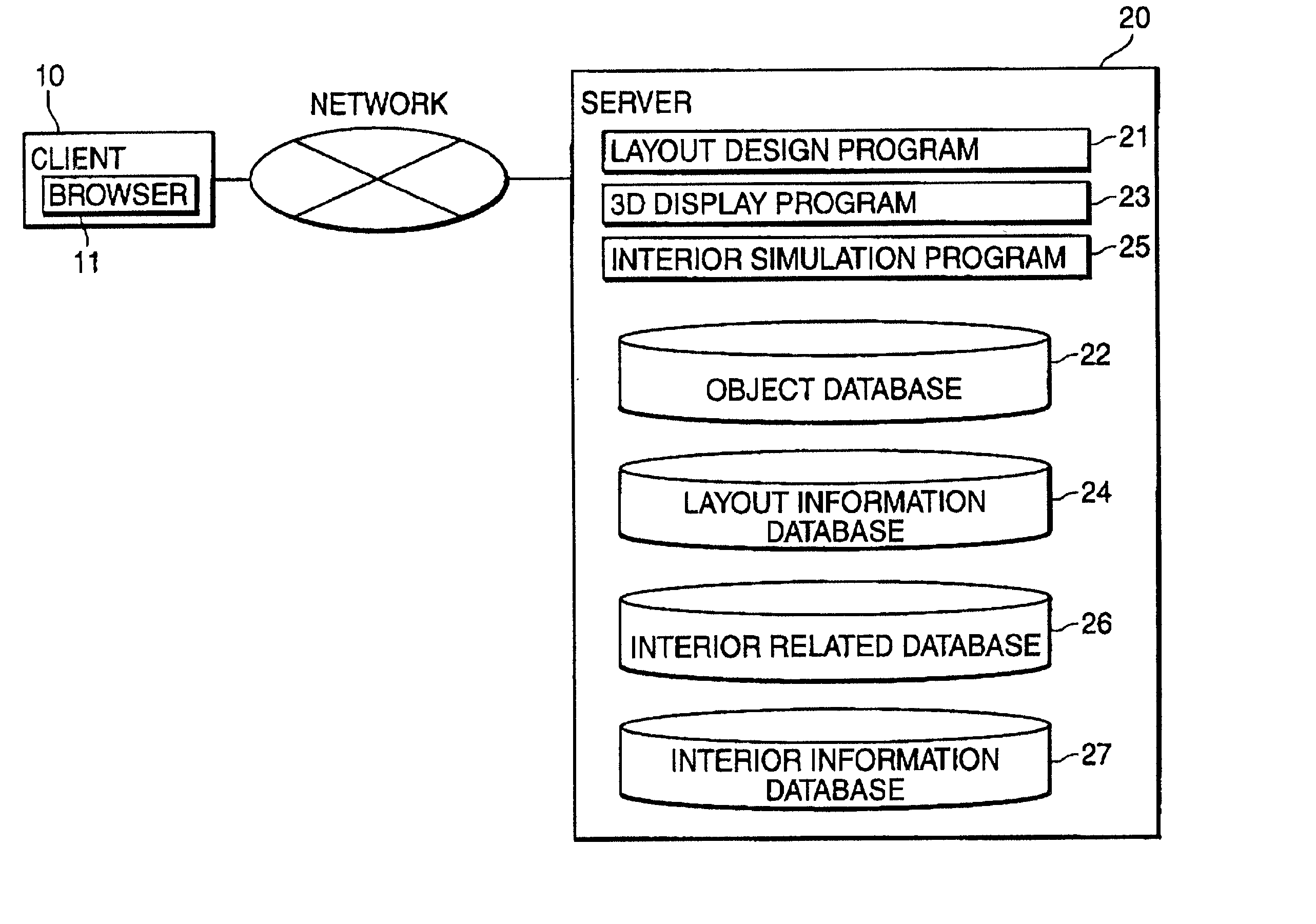

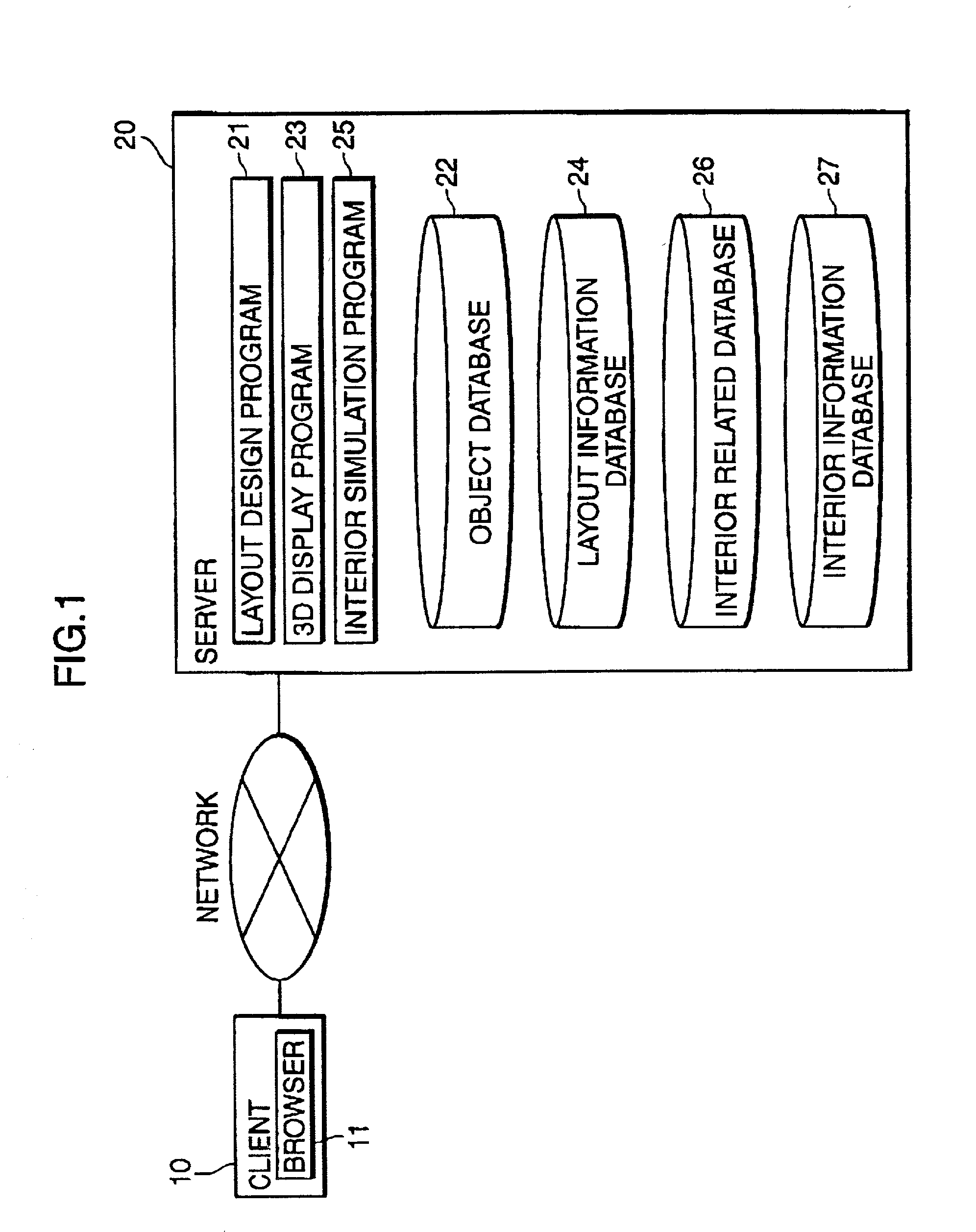

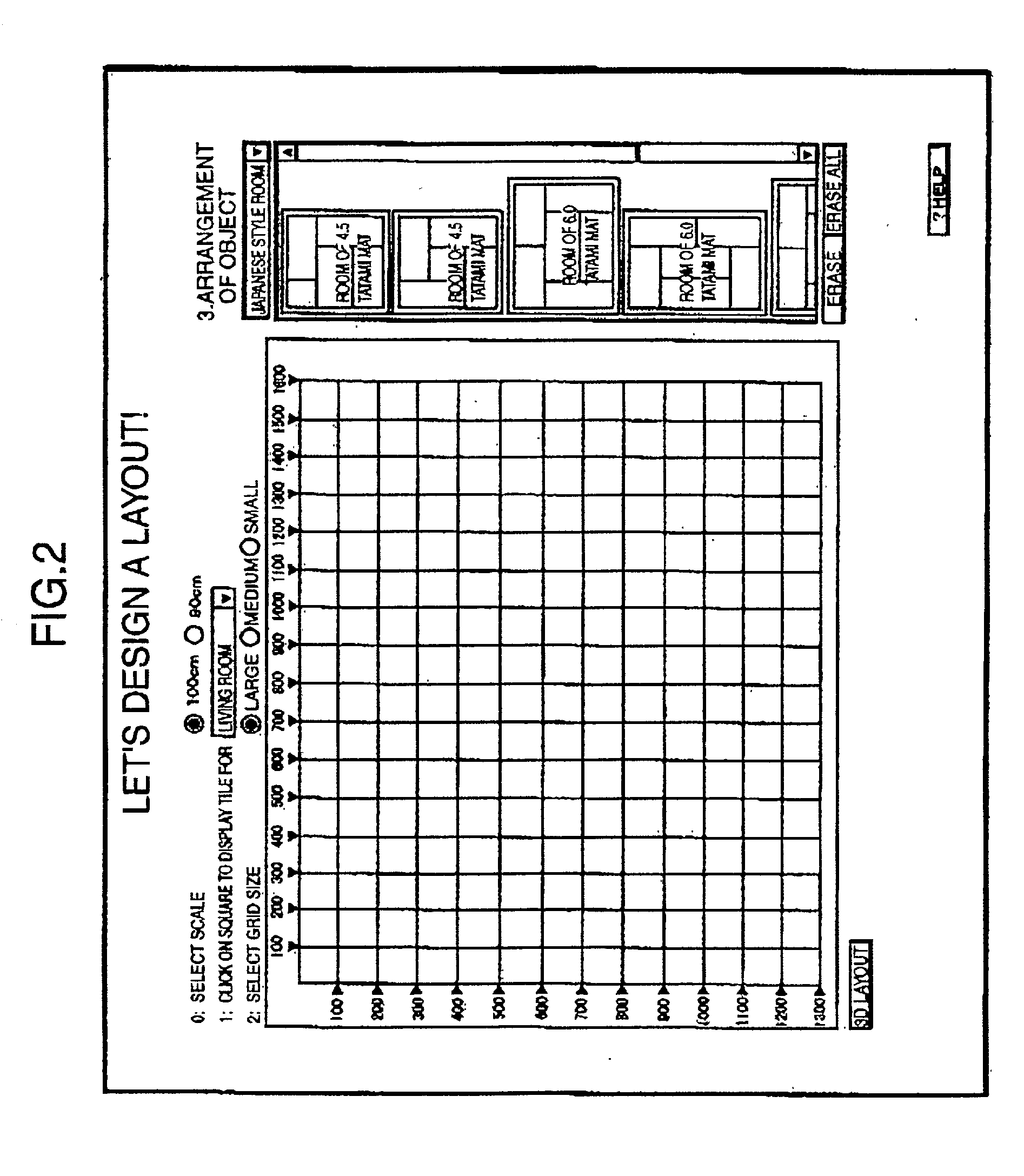

Method for aiding space design using network, system therefor, and server computer of the system

Inactive · US7246044B2 · Simplify the design process; Geometric CAD; Cathode-ray tube indicators; 3D image; Client-side

Provided is an environment that enables a user to carry out 3D space design on a client computer that can access a server computer via a network. The server computer includes a layout design program, which runs on a screen opened by the client computer's browser and lets the user design the layout of a space as a 2D image; an object database, which stores the object data used for layout design for retrieval and extraction; and a 3D display program, which runs on the client computer's browser screen and displays the designed space as a 3D image. The client computer is equipped with a browser capable of executing the layout design program and the 3D display program. Once both programs have been received from the server computer via the network, they can be executed on the browser screen.

Owner:PANASONIC CORP

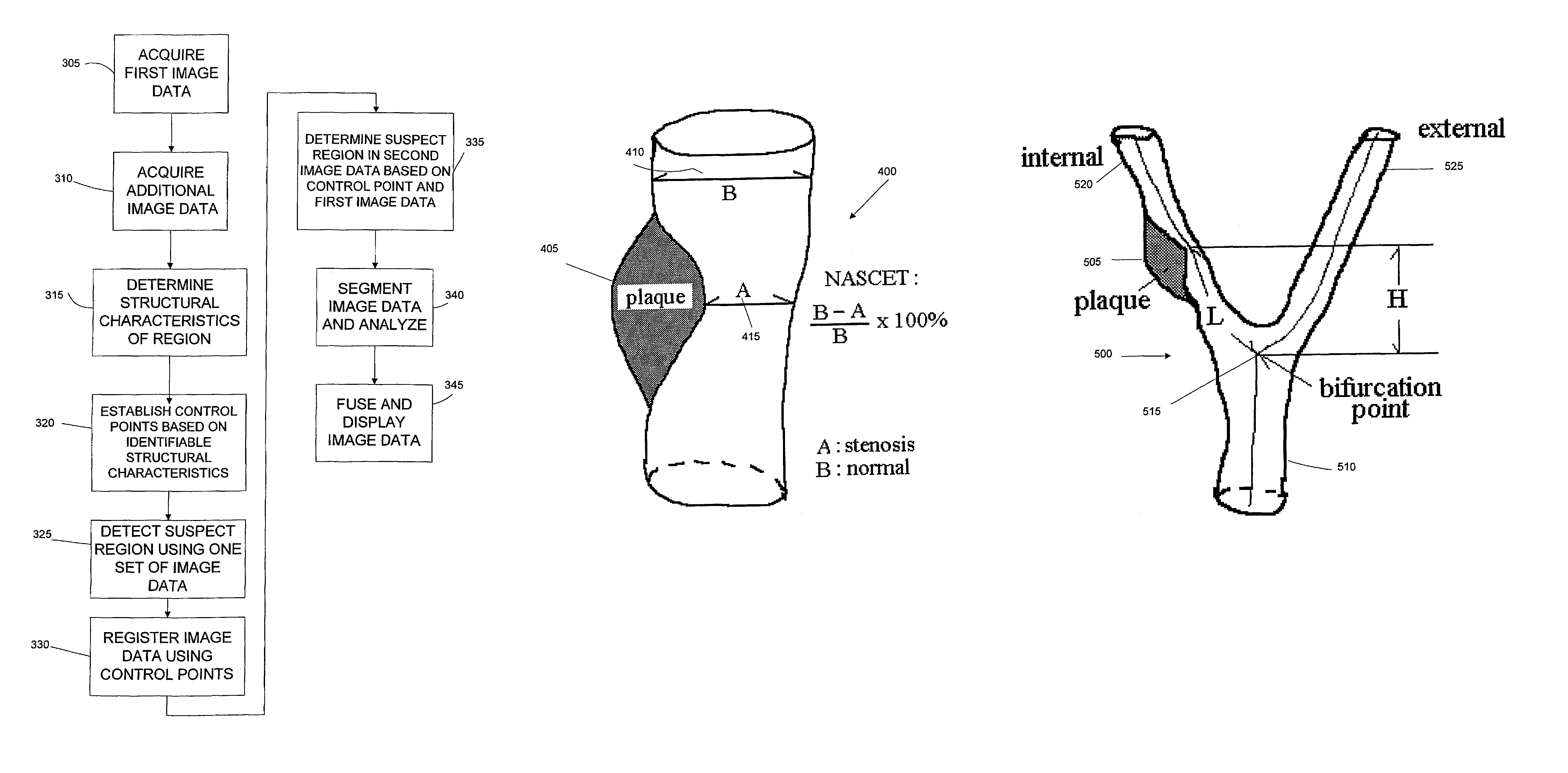

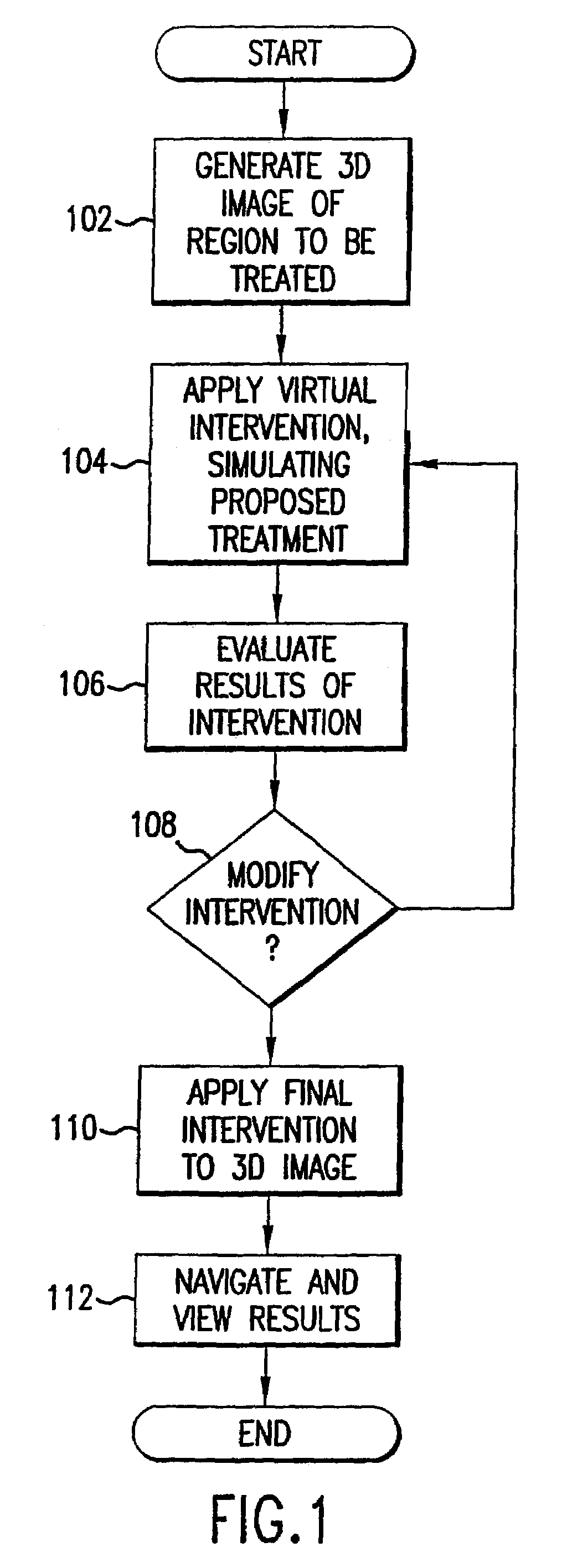

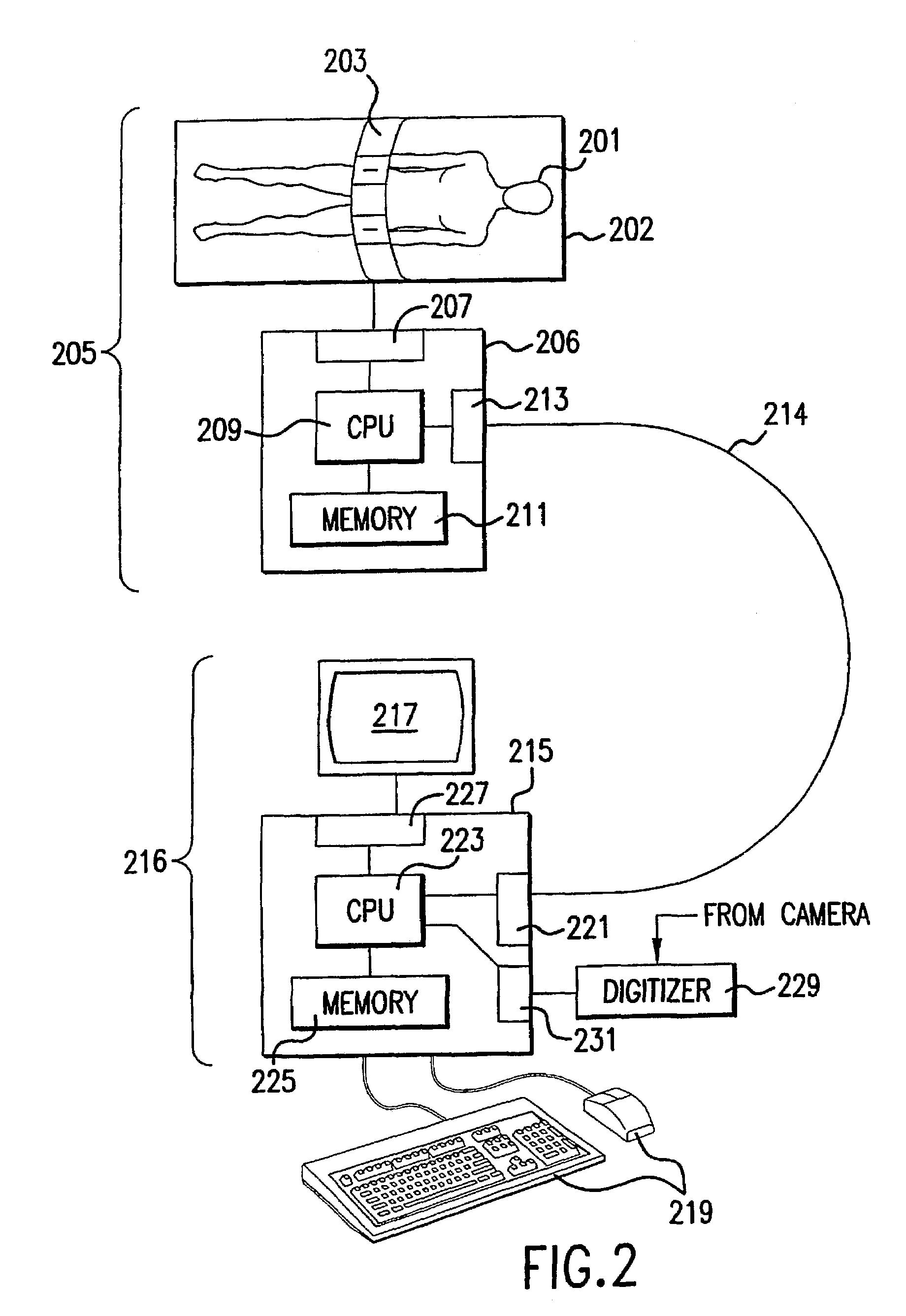

Computer aided treatment planning and visualization with image registration and fusion

A computer-based system and method of visualizing a region using multiple image data sets is provided. The method includes acquiring first volumetric image data of a region and acquiring at least second volumetric image data of the region. The first image data is generally selected so that the structural features of the region are readily visualized. At least one control point is determined in the region using an identifiable structural characteristic discernible in the first volumetric image data. The at least one control point is also located in the at least second image data of the region, so that the first image data and the at least second image data can be registered to one another using the at least one control point. Once the image data sets are registered, the registered first image data and at least second image data can be fused into a common display data set. The multiple image data sets carry different and complementary information that differentiates the structures and the functions in the region, so that image segmentation algorithms and user-interactive editing tools can be applied to obtain the 3D spatial relations of the components in the region. Methods to correct spatial inhomogeneity in MR image data are also provided.

Owner:THE RES FOUND OF STATE UNIV OF NEW YORK
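
Control-point registration of the kind described above can be sketched for the simplest case: a rigid 2D transform (rotation plus translation) estimated in closed form by least squares from matched control points. This is a simplification under stated assumptions. Registering volumetric data would need the 3D analogue (for example the Kabsch or Horn method), and `register_rigid_2d` is a hypothetical helper, not part of the patent.

```python
import math
from typing import List, Tuple

Point2D = Tuple[float, float]

def register_rigid_2d(src: List[Point2D], dst: List[Point2D]) -> Tuple[float, Point2D]:
    """Least-squares rotation angle and translation mapping src points onto dst points."""
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two matched control points")
    n = len(src)
    sx = sum(p[0] for p in src) / n          # source centroid
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n          # target centroid
    dy = sum(q[1] for q in dst) / n
    num = den = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - sx, py - sy            # centered source point
        bx, by = qx - dx, qy - dy            # centered target point
        num += ax * by - ay * bx             # accumulates the sin(theta) component
        den += ax * bx + ay * by             # accumulates the cos(theta) component
    theta = math.atan2(num, den)
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated source centroid onto the target centroid.
    tx = dx - (c * sx - s * sy)
    ty = dy - (s * sx + c * sy)
    return theta, (tx, ty)
```

For example, three control points rotated by 90 degrees and shifted by (2, 3) are recovered as `theta` close to pi/2 and a translation close to (2, 3).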

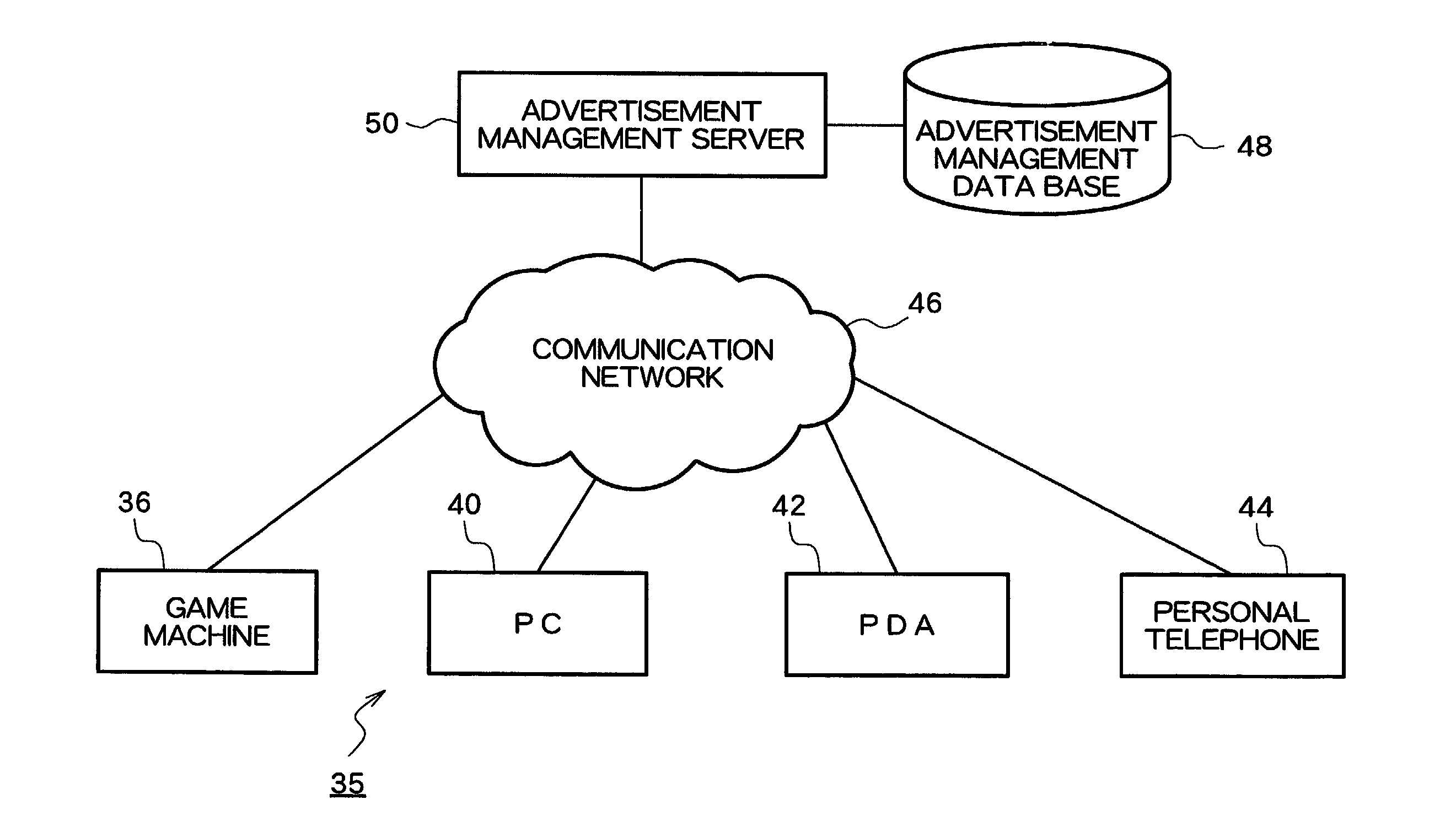

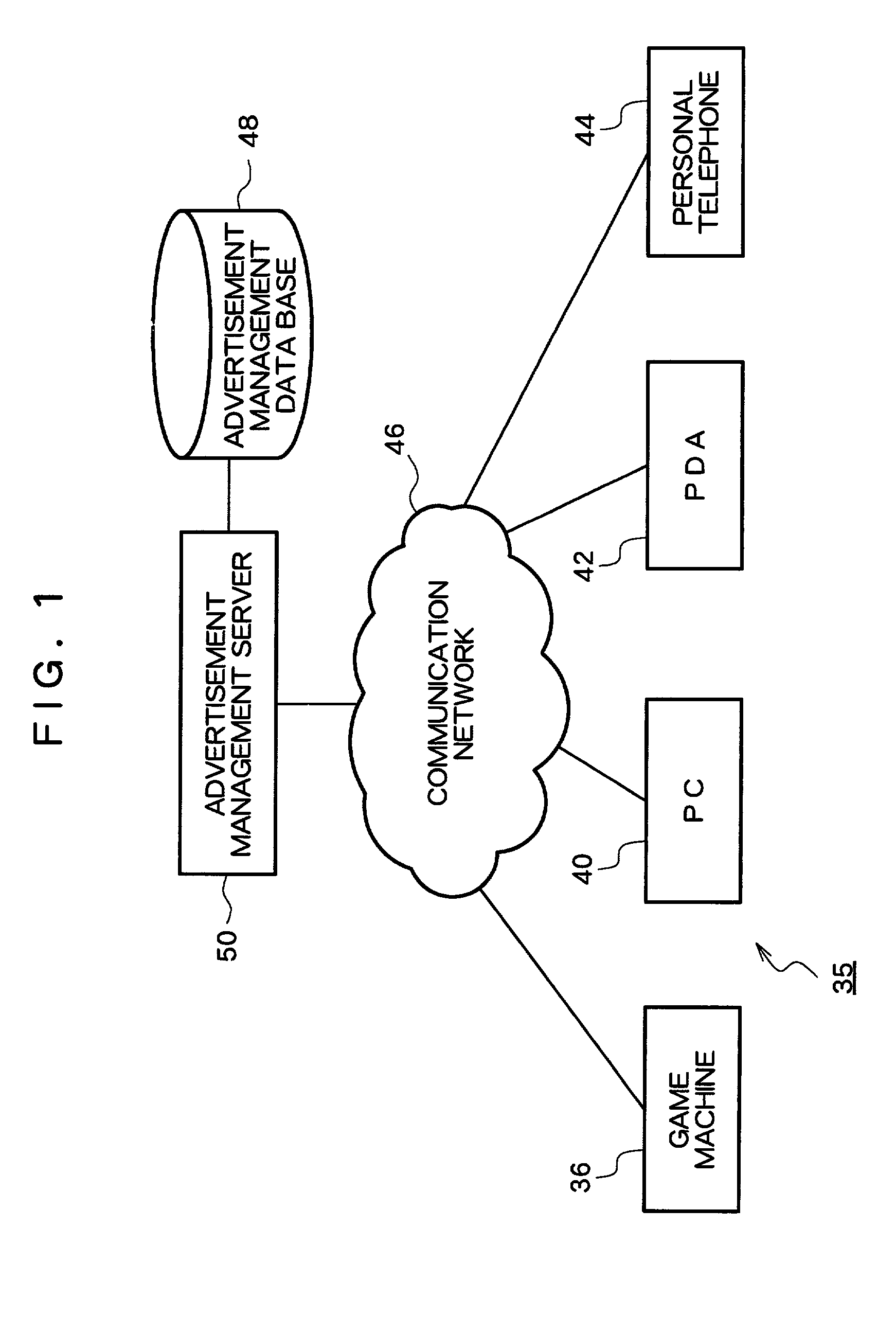

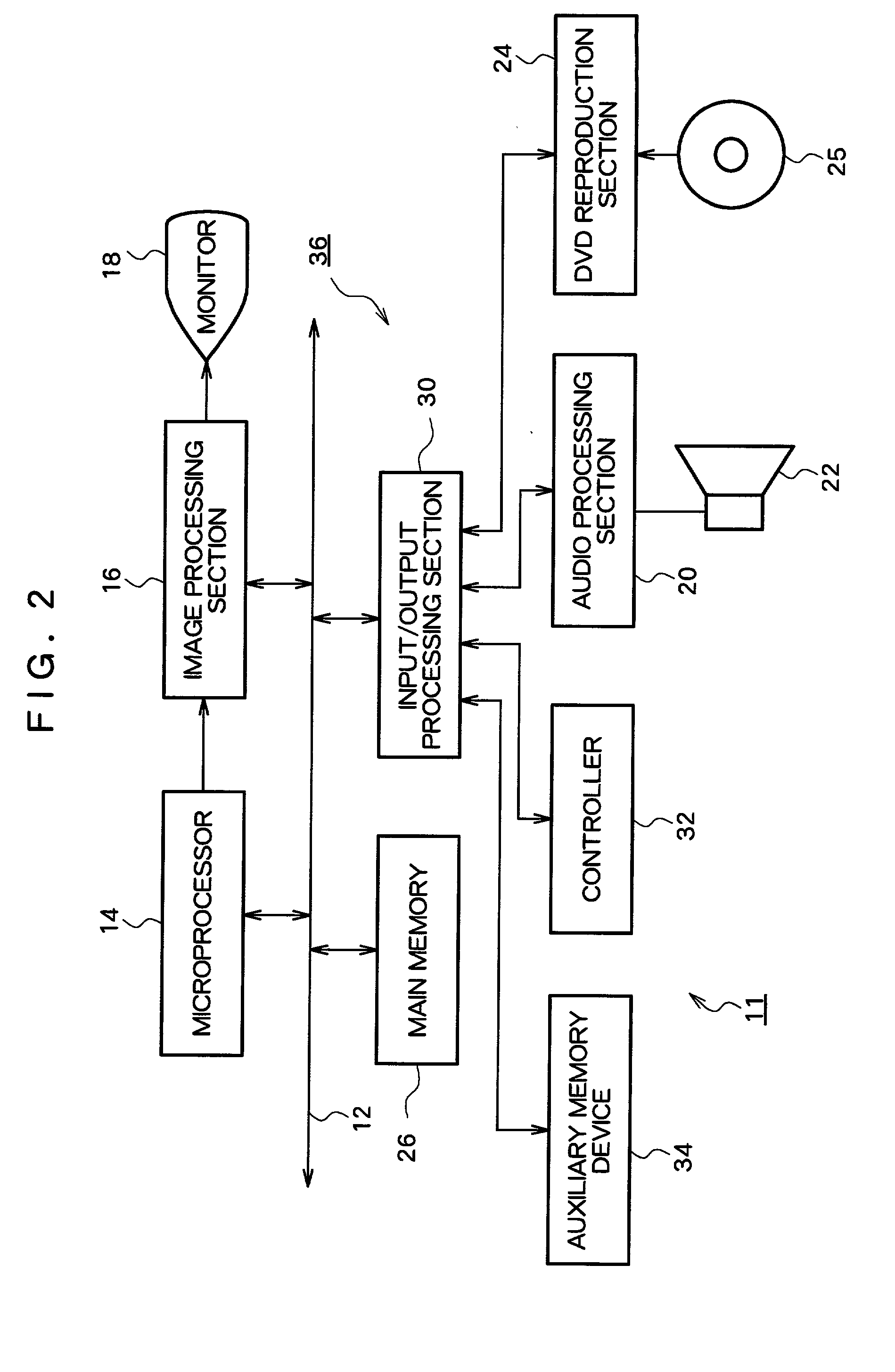

Game advertisement charge system, game advertisement display system, game machine, game advertisement charge method, game advertisement output method, game machine control method and program

To generate reasonable game advertisement charges and to direct a player's attention to a game advertisement, the charge for outputting a game advertisement is calculated from displayed-amount information (e.g., the display time and area) and display-quality information (e.g., the display position on the game screen, whether the advertisement image is clipped, the advertisement's display direction in a vertical 3D space, and so forth). Further, display of an advertisement is limited once that advertisement has been displayed a predetermined amount. Still further, an advertisement display program or data is obtained before a game program or game data, and the game program or game data is obtained while an advertisement is being output based on the advertisement output program or data already obtained. Yet further, the charge for outputting an advertisement is calculated from attribute information, obtained in advance, about the players targeted by the advertisement and from attribute information about the player who is playing the game.

Owner:KONAMI DIGITAL ENTERTAINMENT CO LTD

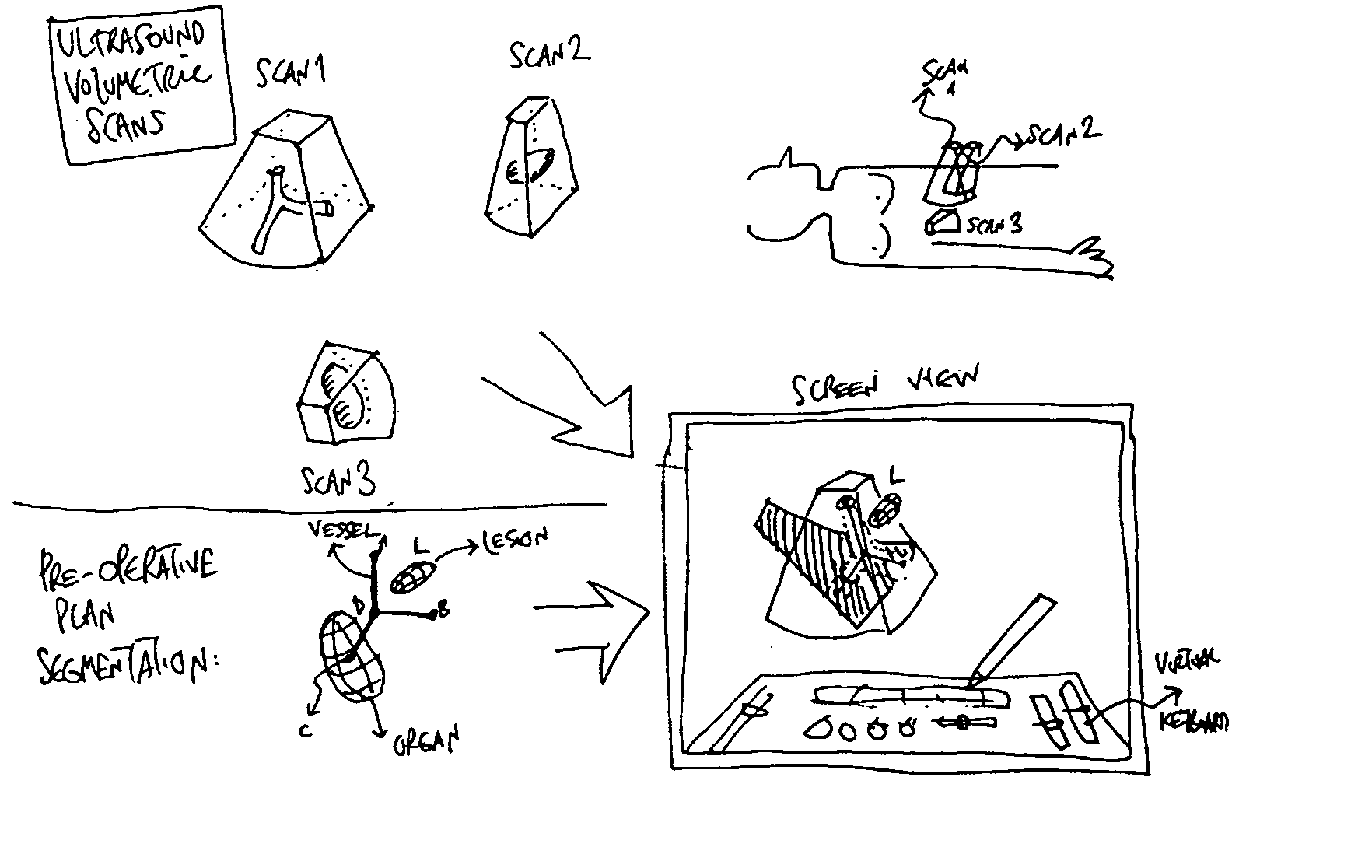

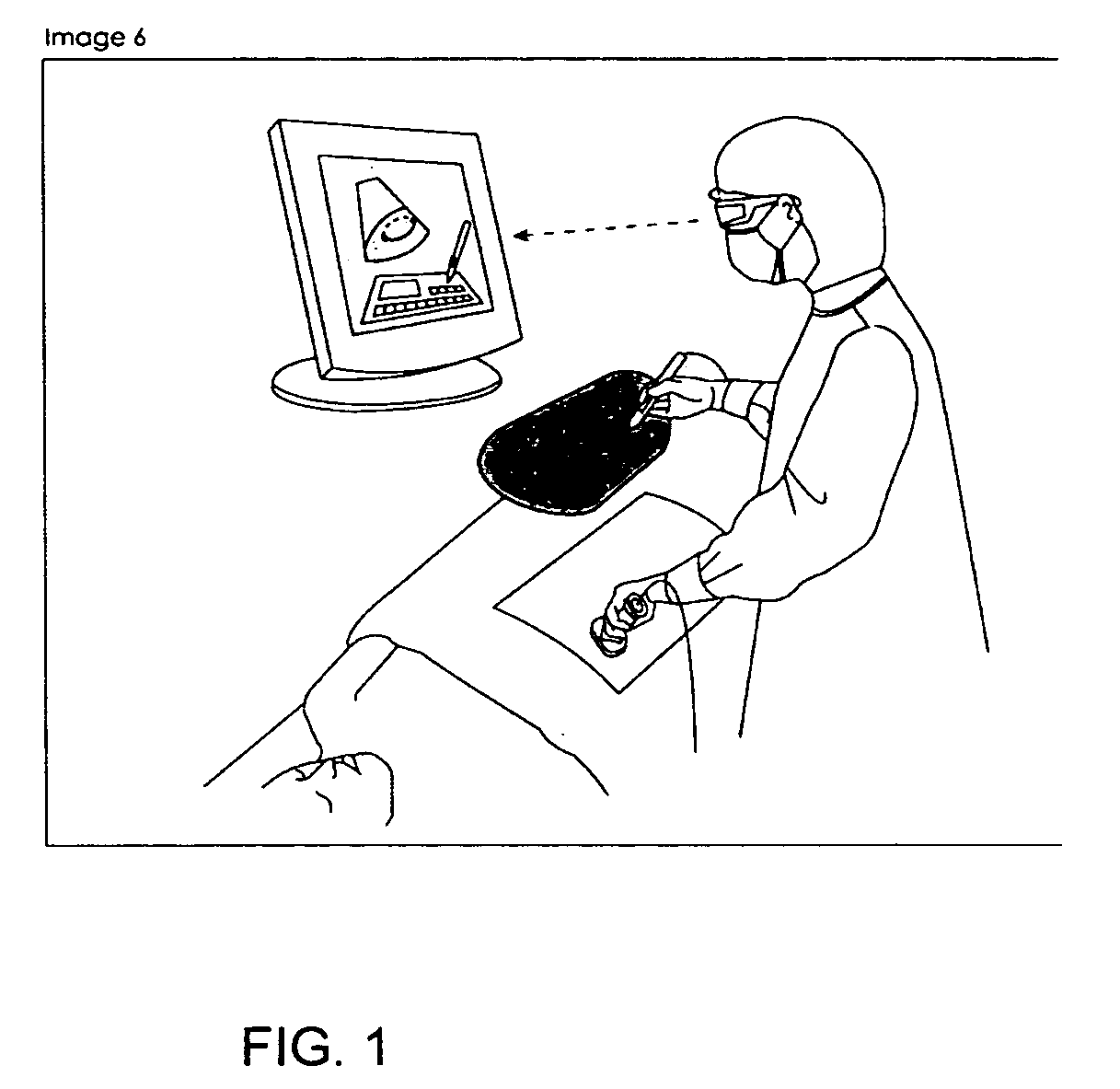

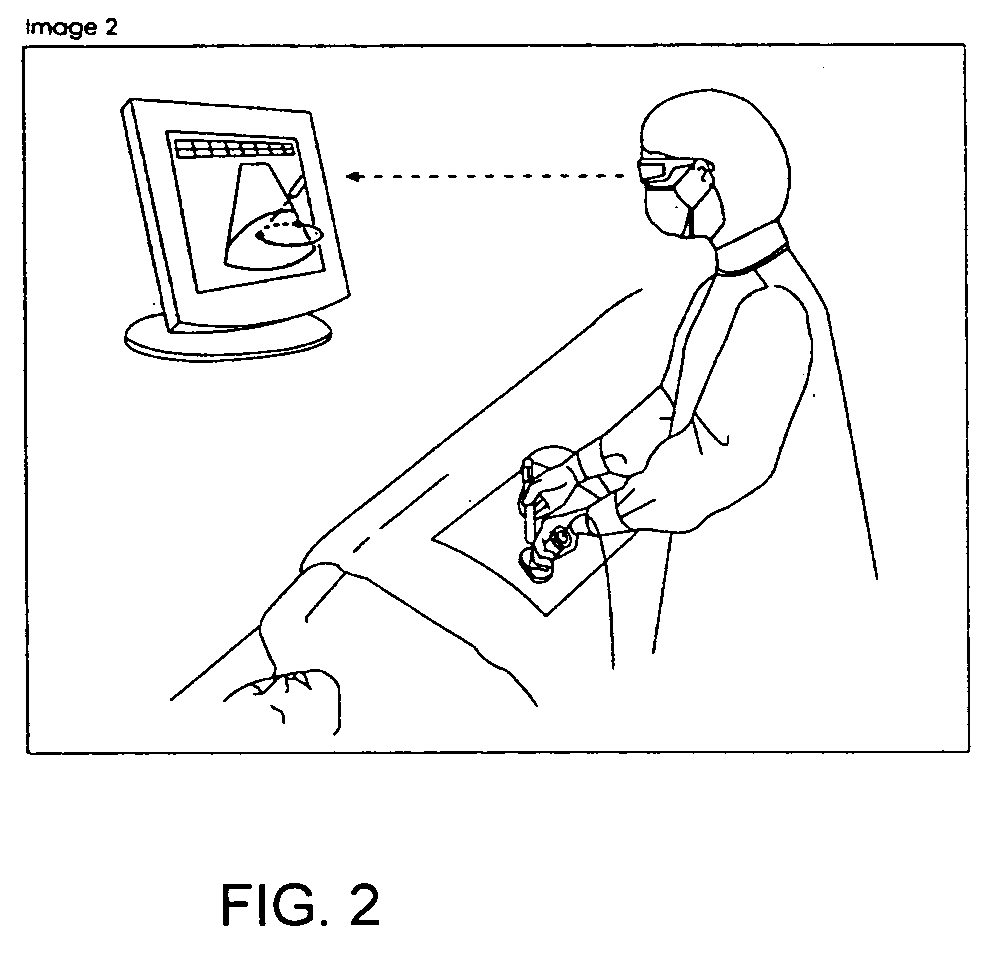

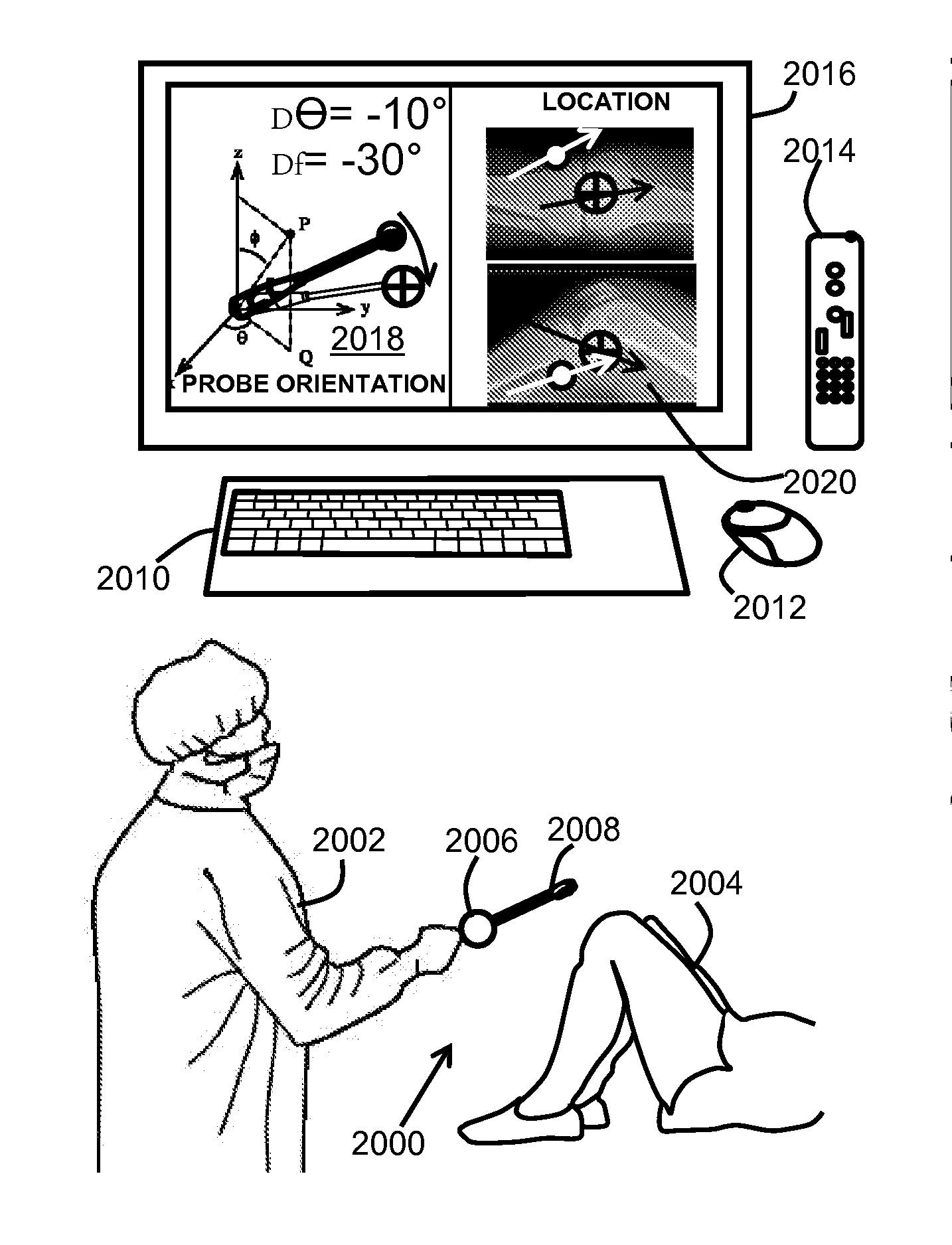

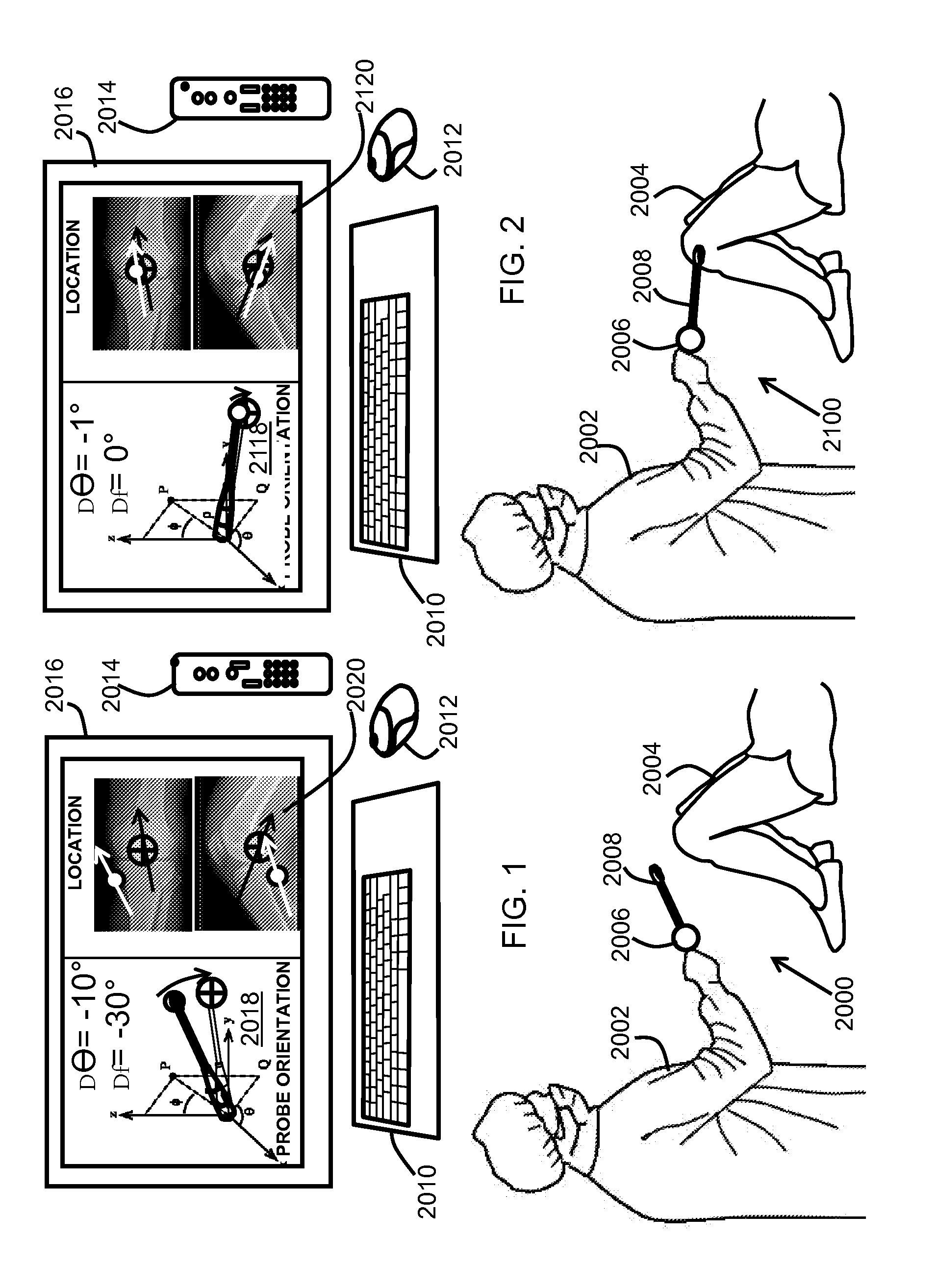

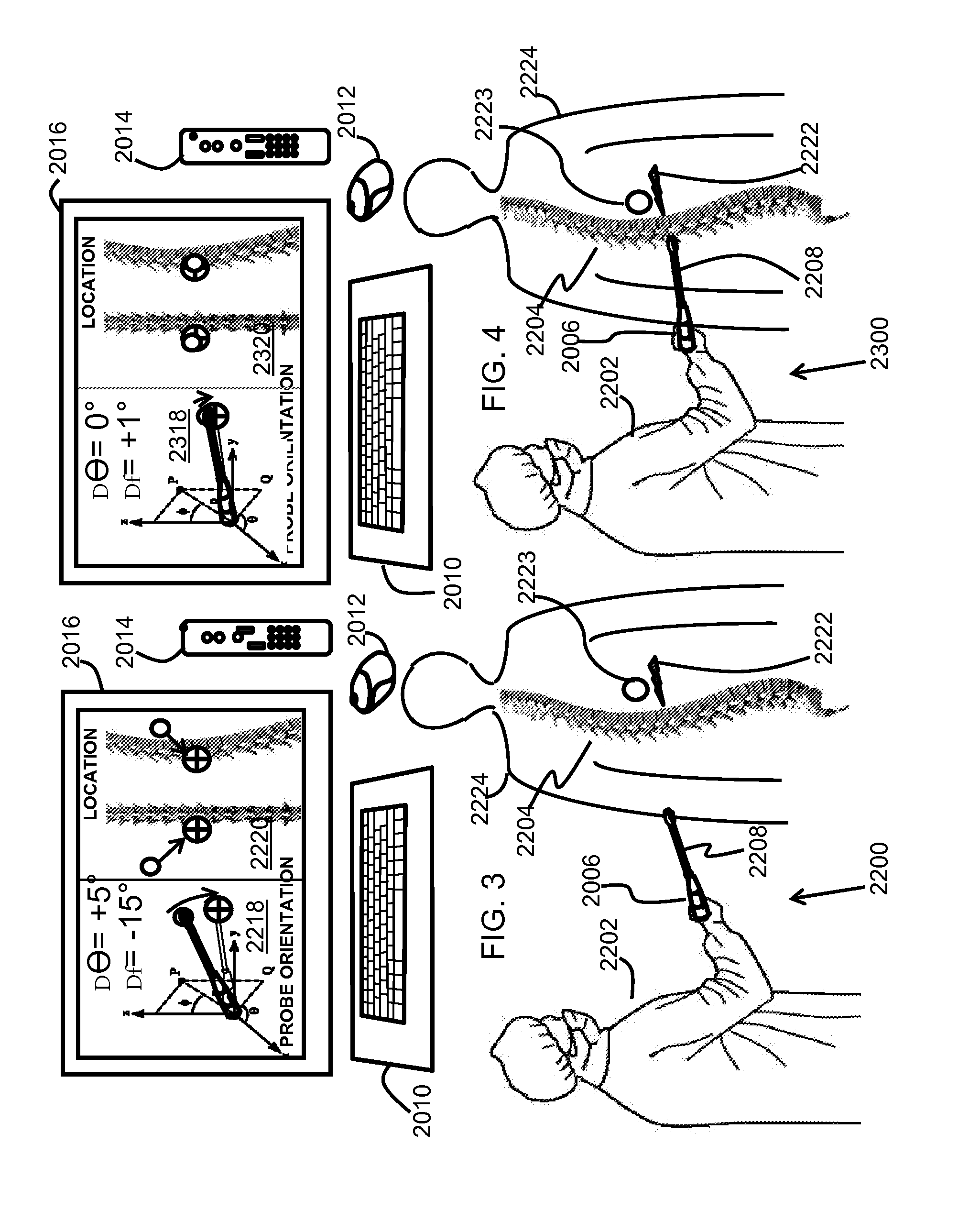

System and method for three-dimensional space management and visualization of ultrasound data ("SonoDEX")

Inactive · US20060020204A1 · Image enhancement; Wave based measurement systems; Imaging processing; Sonification

A system and method for the imaging management of a 3D space where various substantially real-time scan images have been acquired is presented. In exemplary embodiments according to the present invention, a user can visualize images of a portion of a body or object obtained from a substantially real-time scanner not just as 2D images, but as positionally and orientationally located slices within a particular 3D space. In such exemplary embodiments a user can convert such slices into volumes whenever needed, and can process the images or volumes using known image processing and / or volume rendering techniques. Alternatively, a user can acquire ultrasound images in 3D using the techniques of UltraSonar or 4D Ultrasound. In exemplary embodiments according to the present invention, a user can manage various substantially real-time images obtained, either as slices or volumes, and can control their visualization, processing and display, as well as their registration and fusion with other images, volumes and virtual objects obtained or derived from prior scans of the body or object of interest using various modalities.

Owner:BRACCO IMAGING SPA

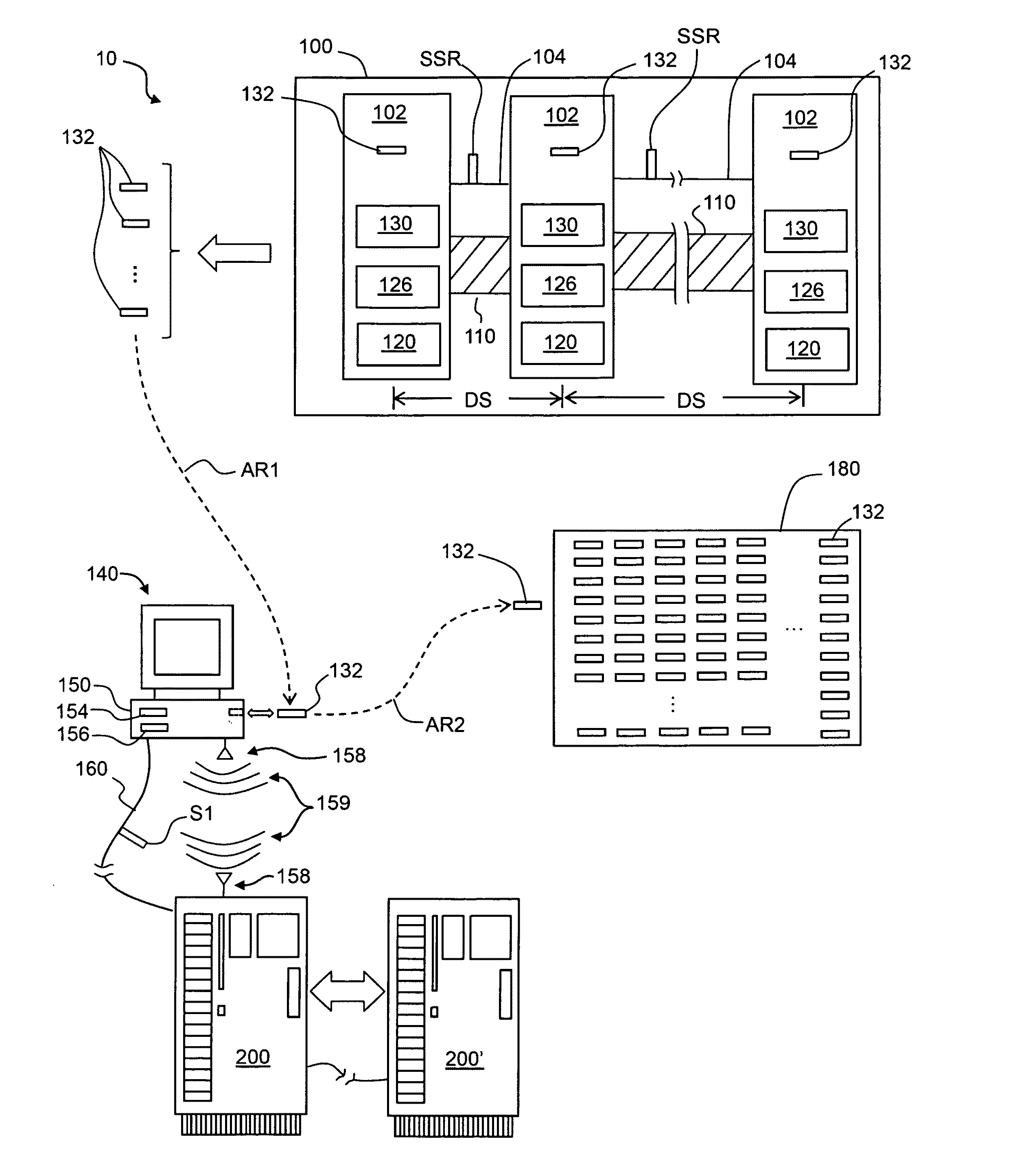

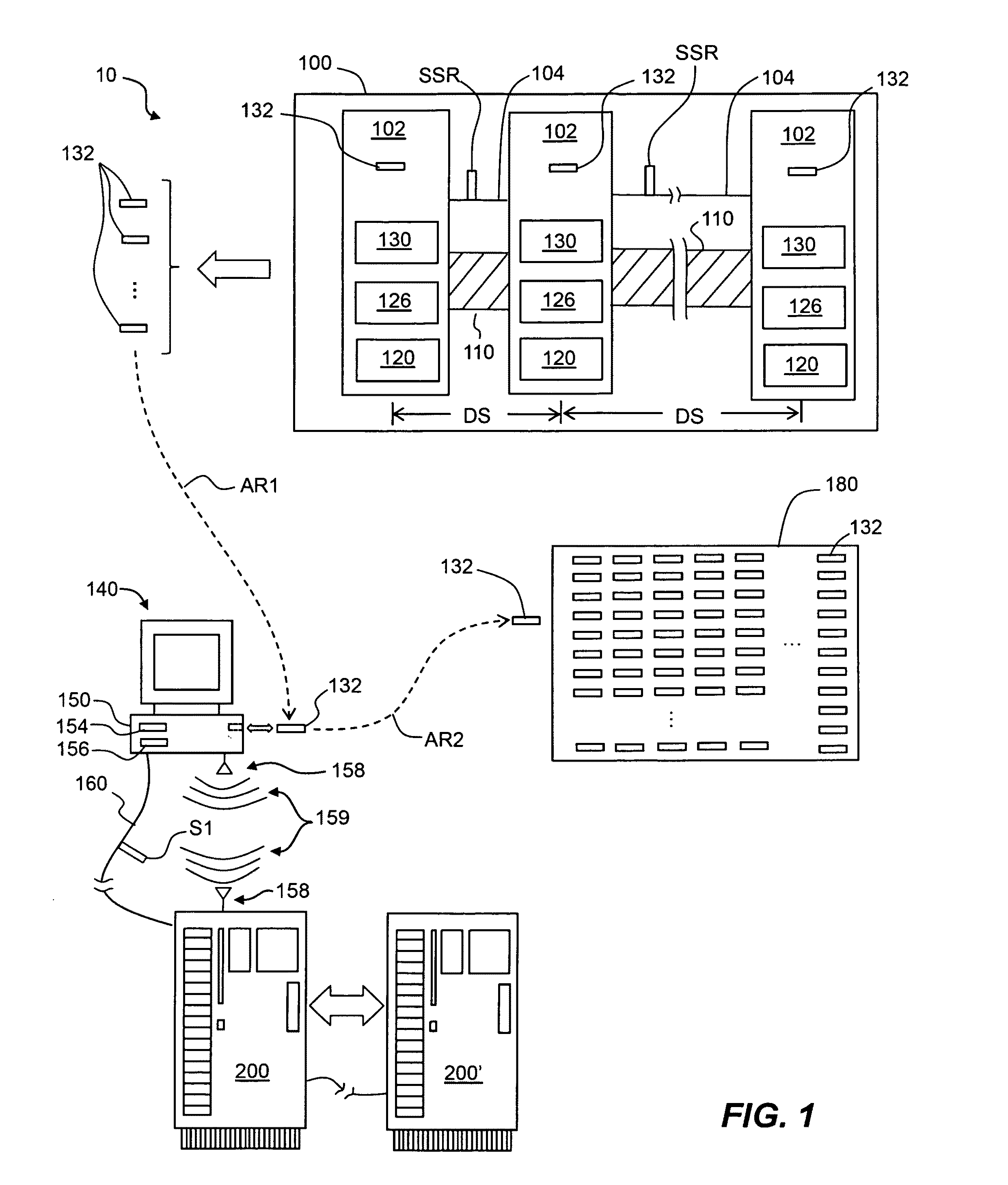

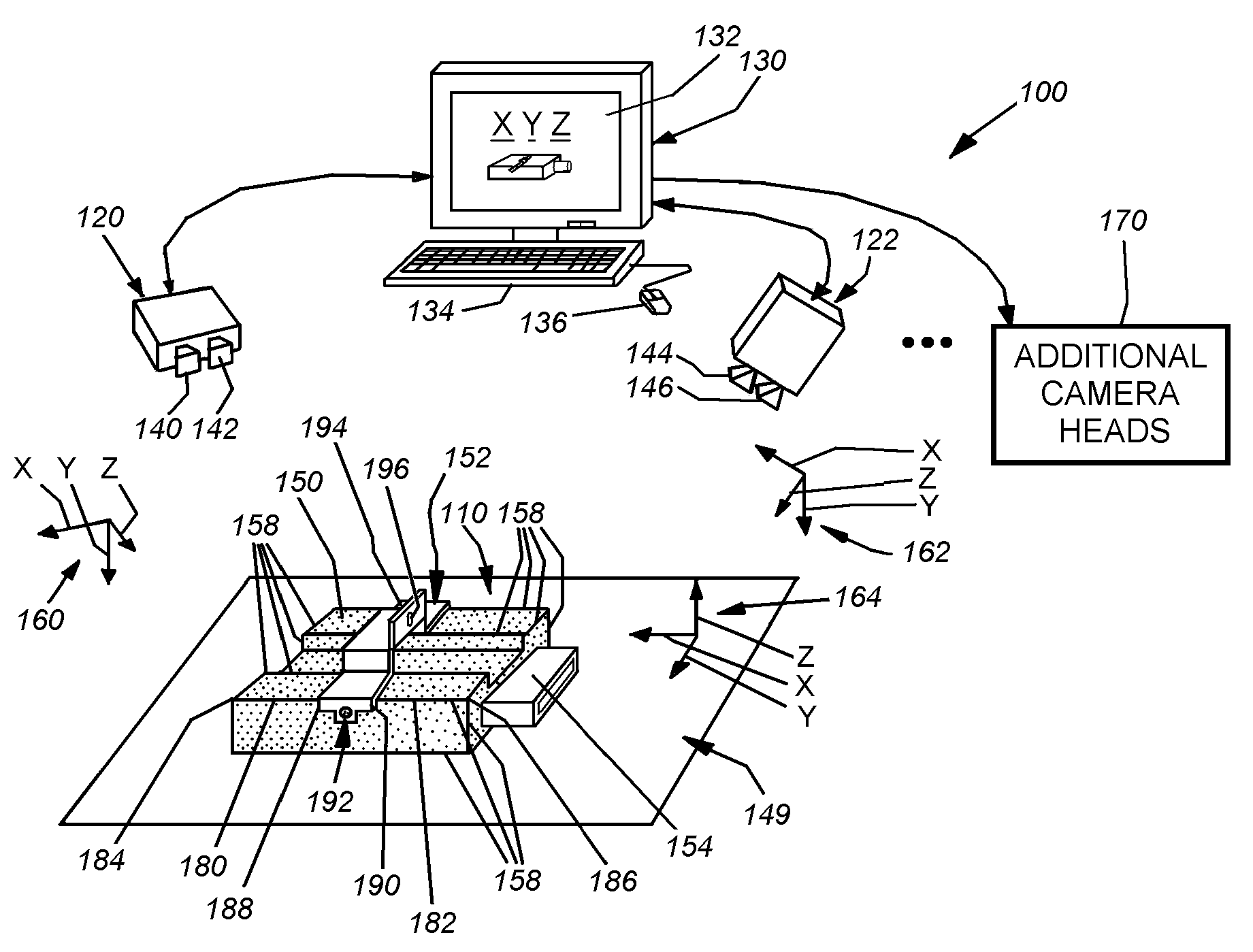

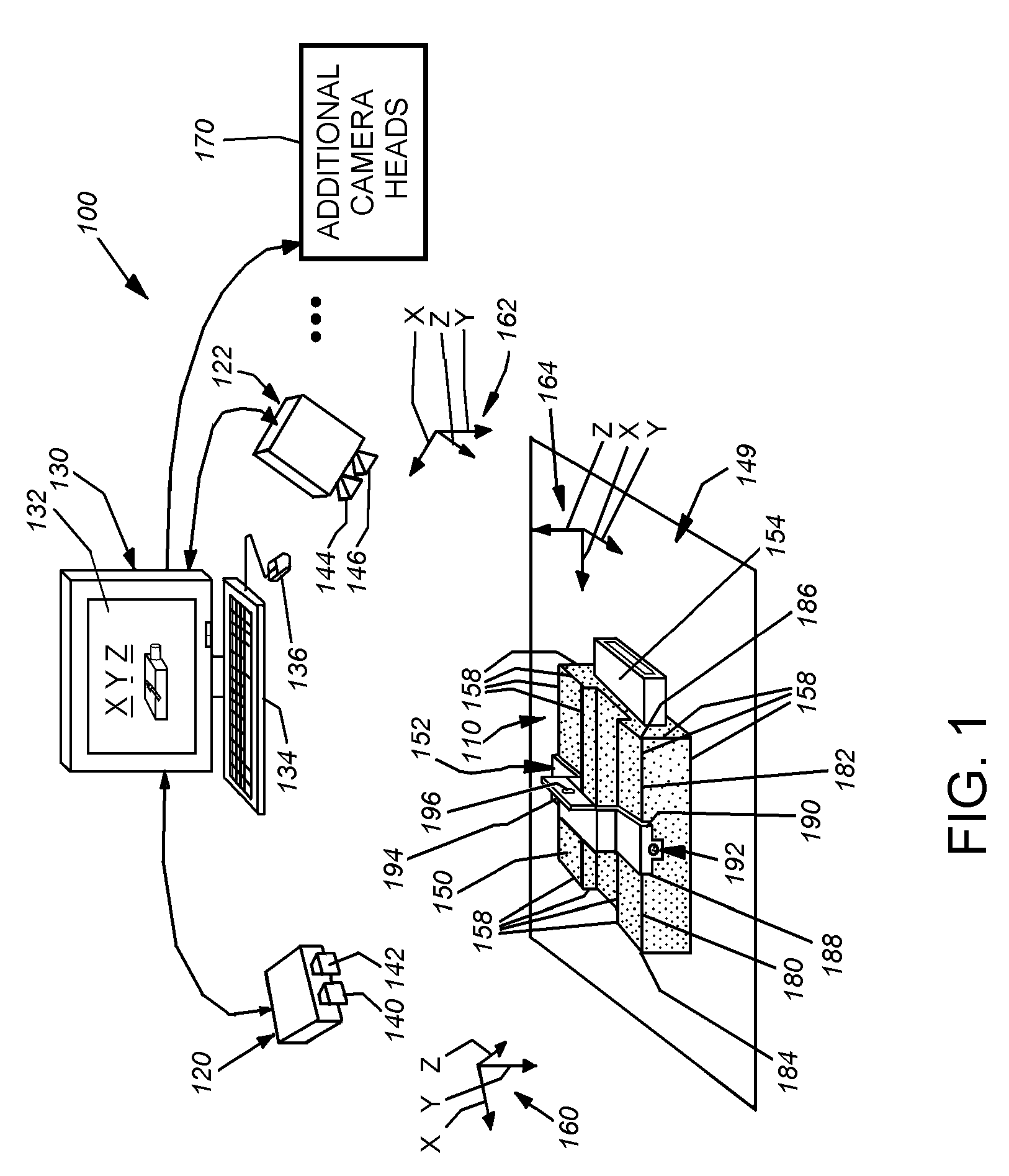

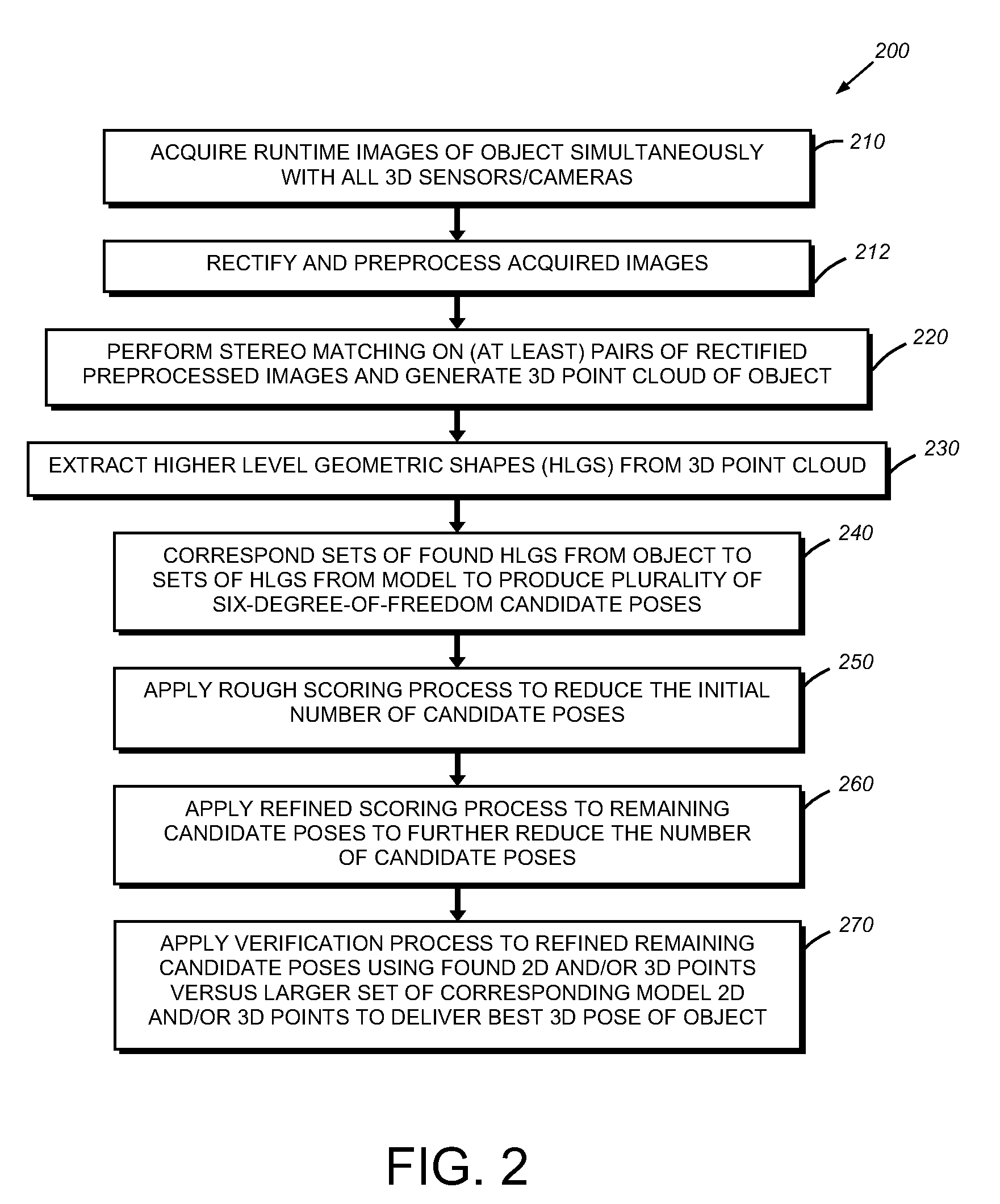

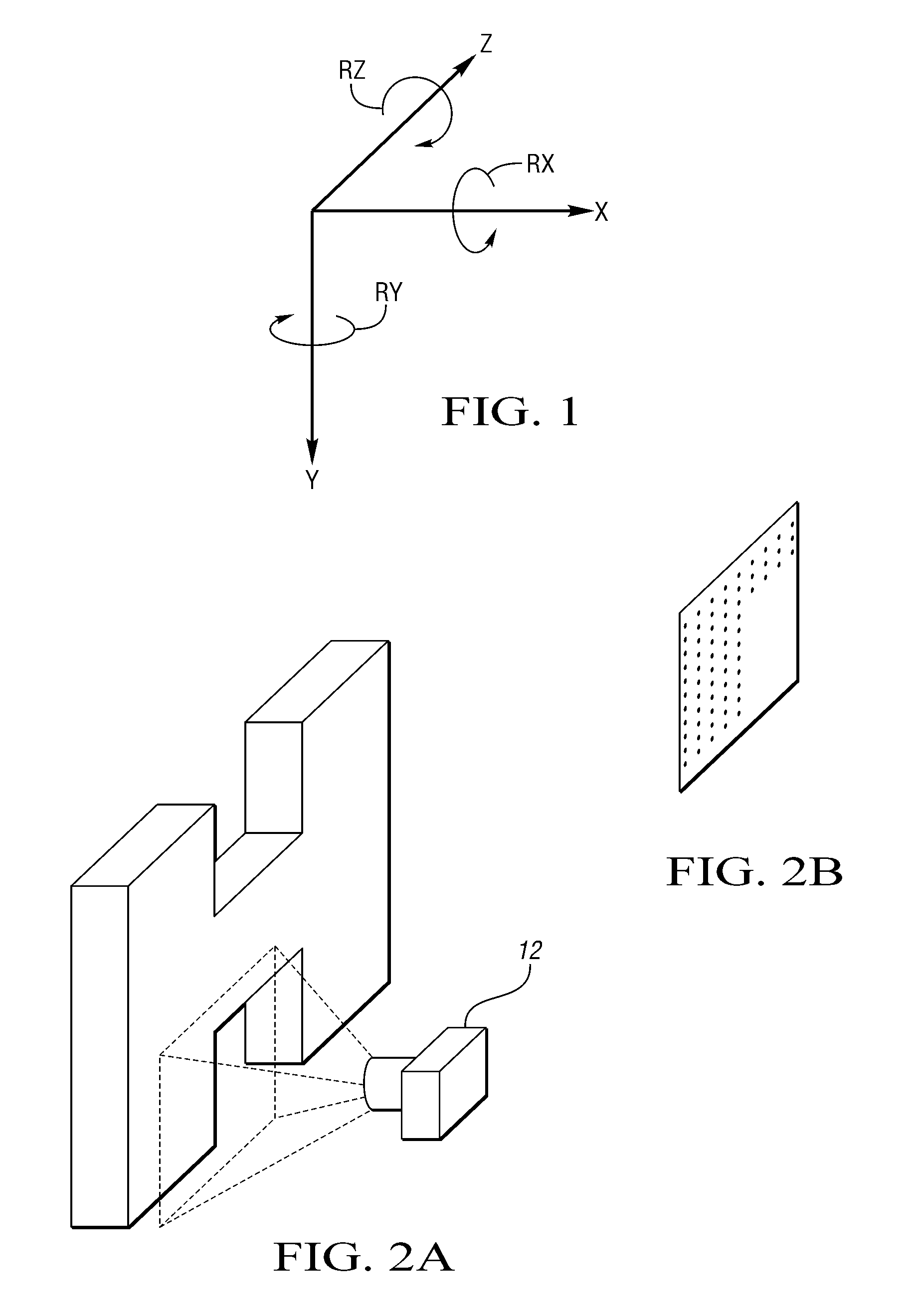

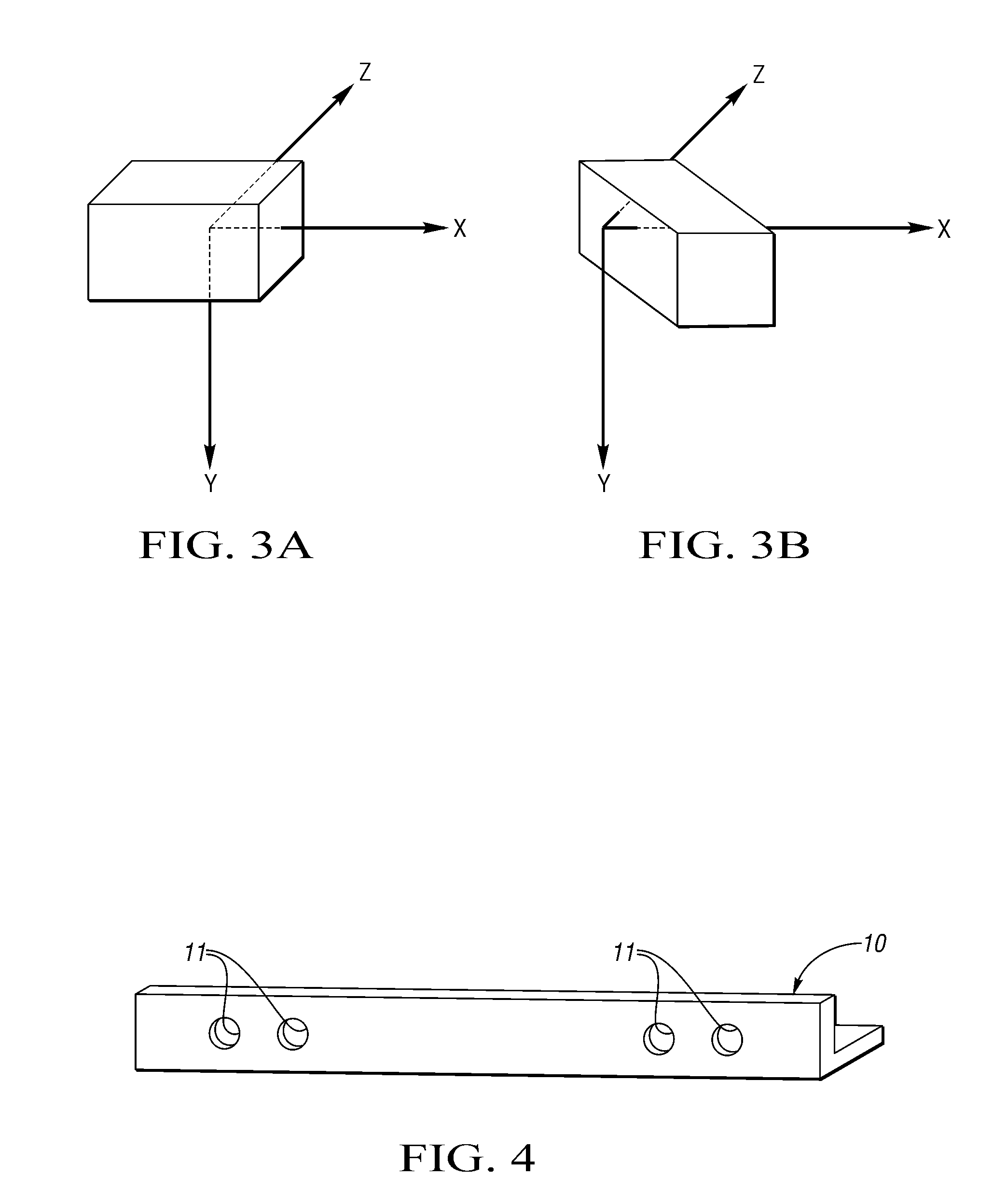

System and method for three-dimensional alignment of objects using machine vision

This invention provides a system and method for determining the three-dimensional alignment of a modeled object or scene. After calibration, a 3D (stereo) sensor system views the object to derive a runtime 3D representation of the scene containing the object. Rectified images from each stereo head are preprocessed to enhance their edge features. A stereo matching process is then performed on at least two (a pair) of the rectified, preprocessed images at a time, by locating a predetermined feature in a first image and then locating the same feature in the other image. 3D points are computed for each pair of cameras to derive a 3D point cloud, which is generated by transforming the 3D points of each camera pair into world 3D space using the world calibration. The amount of 3D data from the point cloud is reduced by extracting higher-level geometric shapes (HLGS), such as line segments. HLGS found at runtime are corresponded to HLGS on the model to produce candidate 3D poses. A coarse scoring process prunes the number of poses, and the remaining candidate poses are then subjected to a further, more refined scoring process. The surviving candidate poses are then verified, for example by fitting found 3D or 2D points of the candidate poses to a larger set of corresponding three-dimensional or two-dimensional model points; the closest match is the best refined three-dimensional pose.

Owner:COGNEX CORP
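
Merging each camera pair's 3D points into one world-frame cloud amounts to applying that pair's calibrated rotation and translation. The sketch below assumes the world calibration is given per pair as a 3x3 rotation matrix `rot` and a translation `trans`, so that x_world = R·x_cam + t; the dictionary layout and helper names are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Sequence[Sequence[float]]

def to_world(p: Vec3, rot: Mat3, trans: Vec3) -> Vec3:
    """Apply x_world = R @ x_cam + t to a single point."""
    return tuple(
        sum(rot[i][j] * p[j] for j in range(3)) + trans[i] for i in range(3)
    )

def merge_point_clouds(per_pair: Dict[str, List[Vec3]],
                       calib: Dict[str, Tuple[Mat3, Vec3]]) -> List[Vec3]:
    """Merge per-camera-pair point lists into one cloud in world coordinates."""
    cloud: List[Vec3] = []
    for pair_id, points in per_pair.items():
        rot, trans = calib[pair_id]          # world calibration for this camera pair
        cloud.extend(to_world(p, rot, trans) for p in points)
    return cloud
```

Keeping the transform per pair means that adding another stereo head only requires one more calibration entry.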

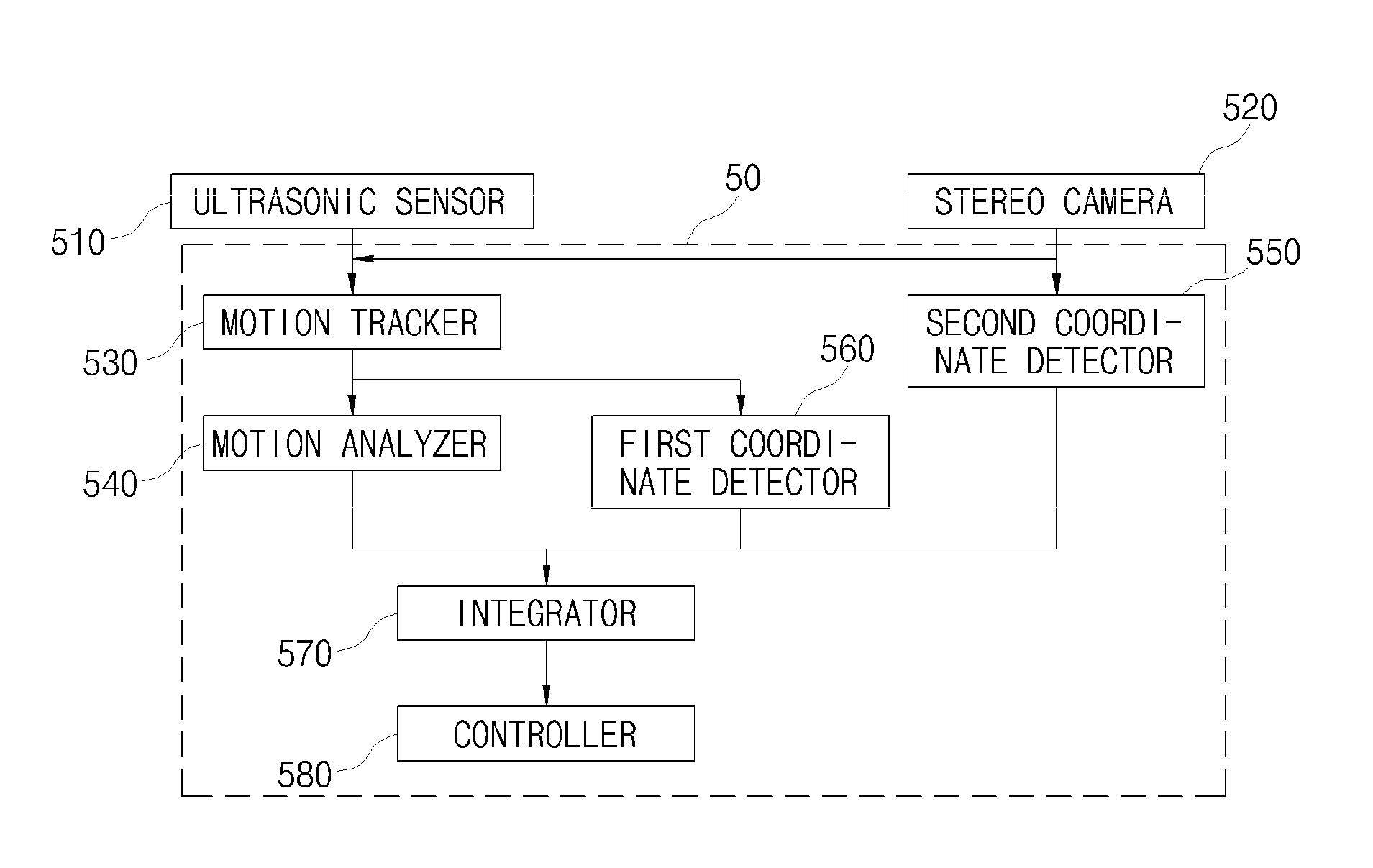

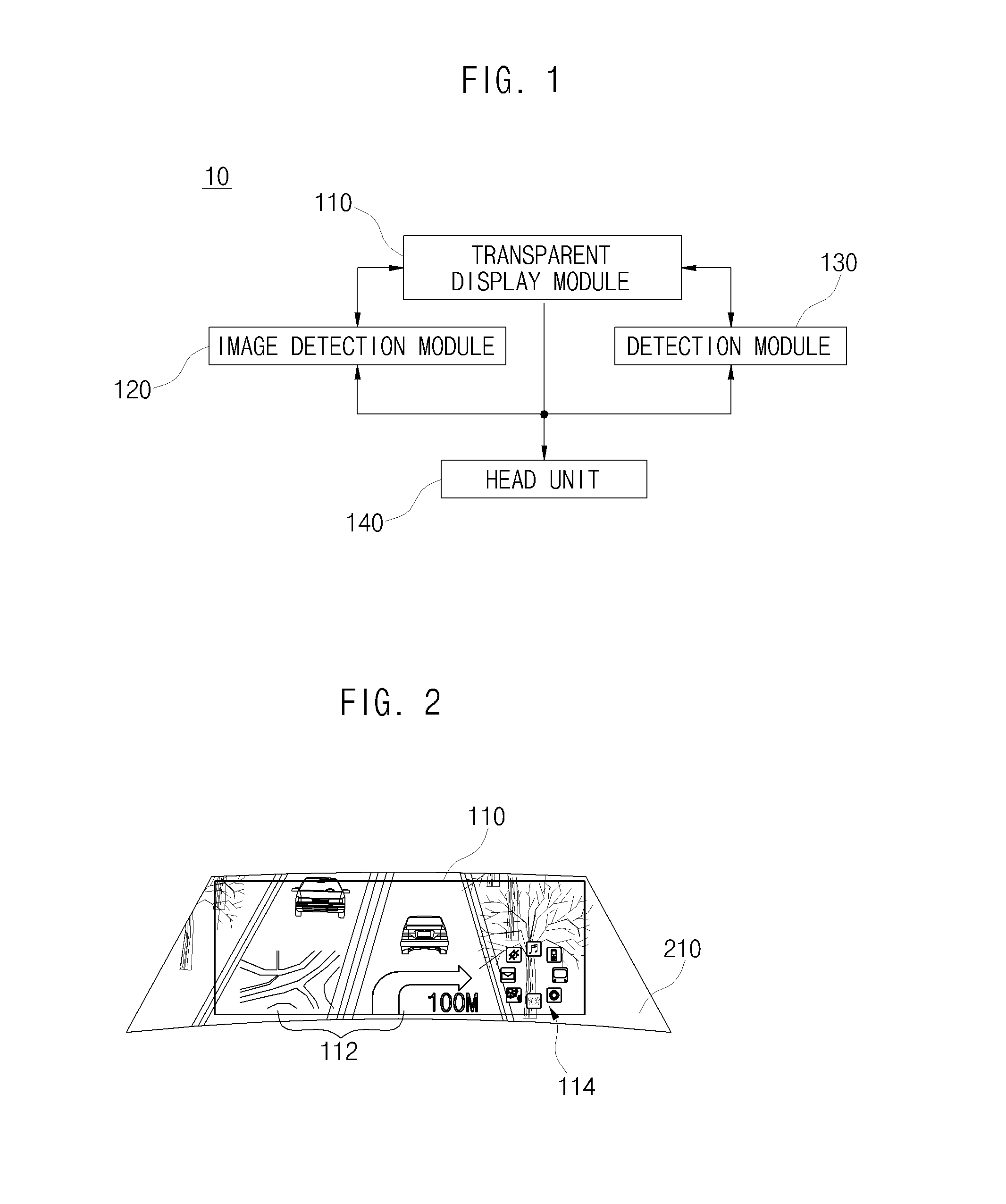

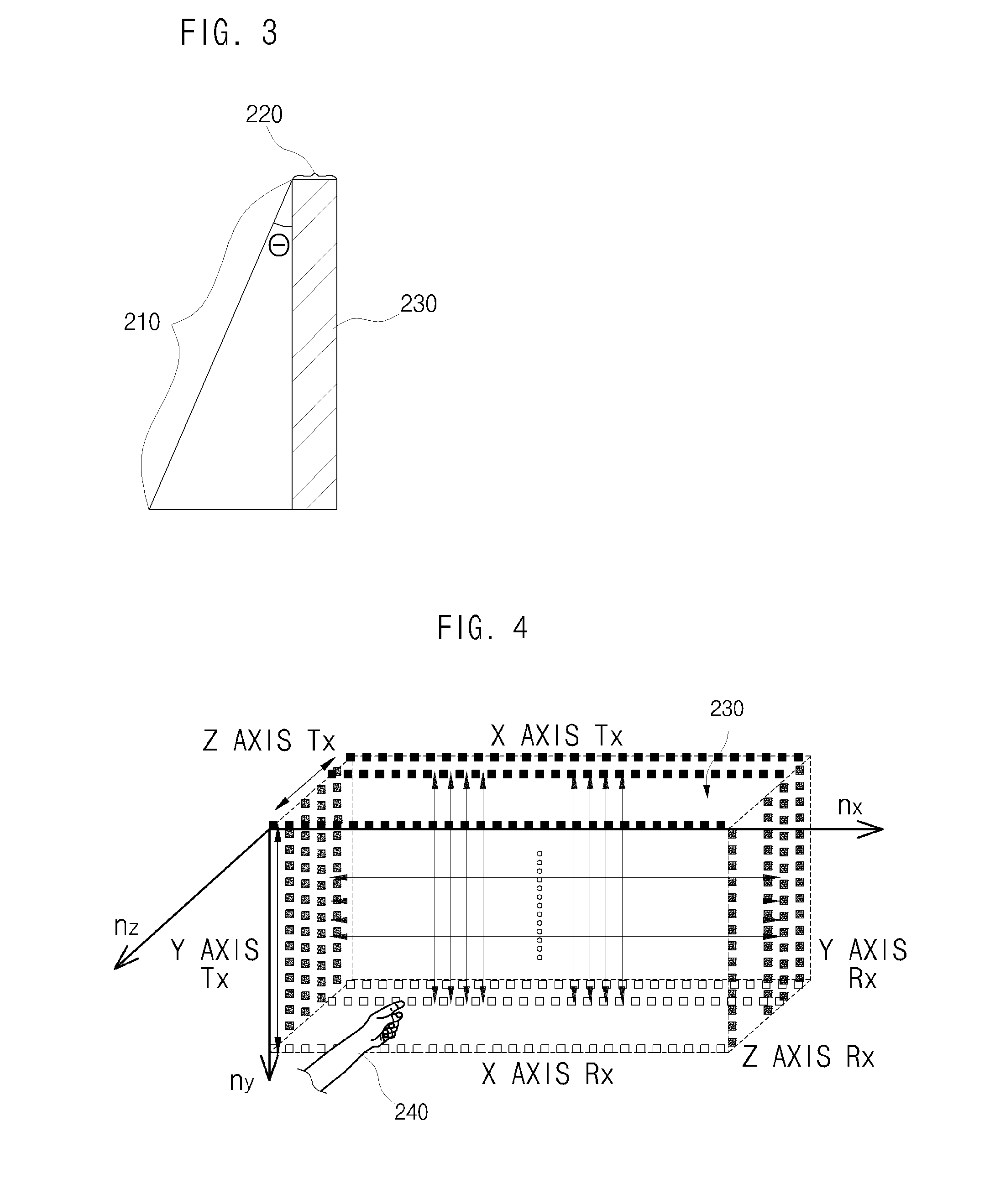

Apparatus and method of user interface for manipulating multimedia contents in vehicle

Inactive · US20110260965A1 · Safely and efficiently manipulating; Cathode-ray tube indicators; Input/output processes for data processing; In vehicle; Image detection

Disclosed are an apparatus and a method of a user interface for manipulating multimedia contents in a vehicle. An apparatus of a user interface for manipulating multimedia contents in a vehicle, according to an embodiment of the present invention, includes: a transparent display module that displays an image including one or more multimedia objects; an ultrasonic detection module that detects a user indicating means with an ultrasonic sensor in a 3D space close to the transparent display module; an image detection module that tracks and photographs the user indicating means; and a head unit that judges whether any of the multimedia objects has been selected by the user indicating means, using information received from at least one of the image detection module and the ultrasonic detection module, and performs a control corresponding to the selected multimedia object.

Owner:ELECTRONICS & TELECOMM RES INST

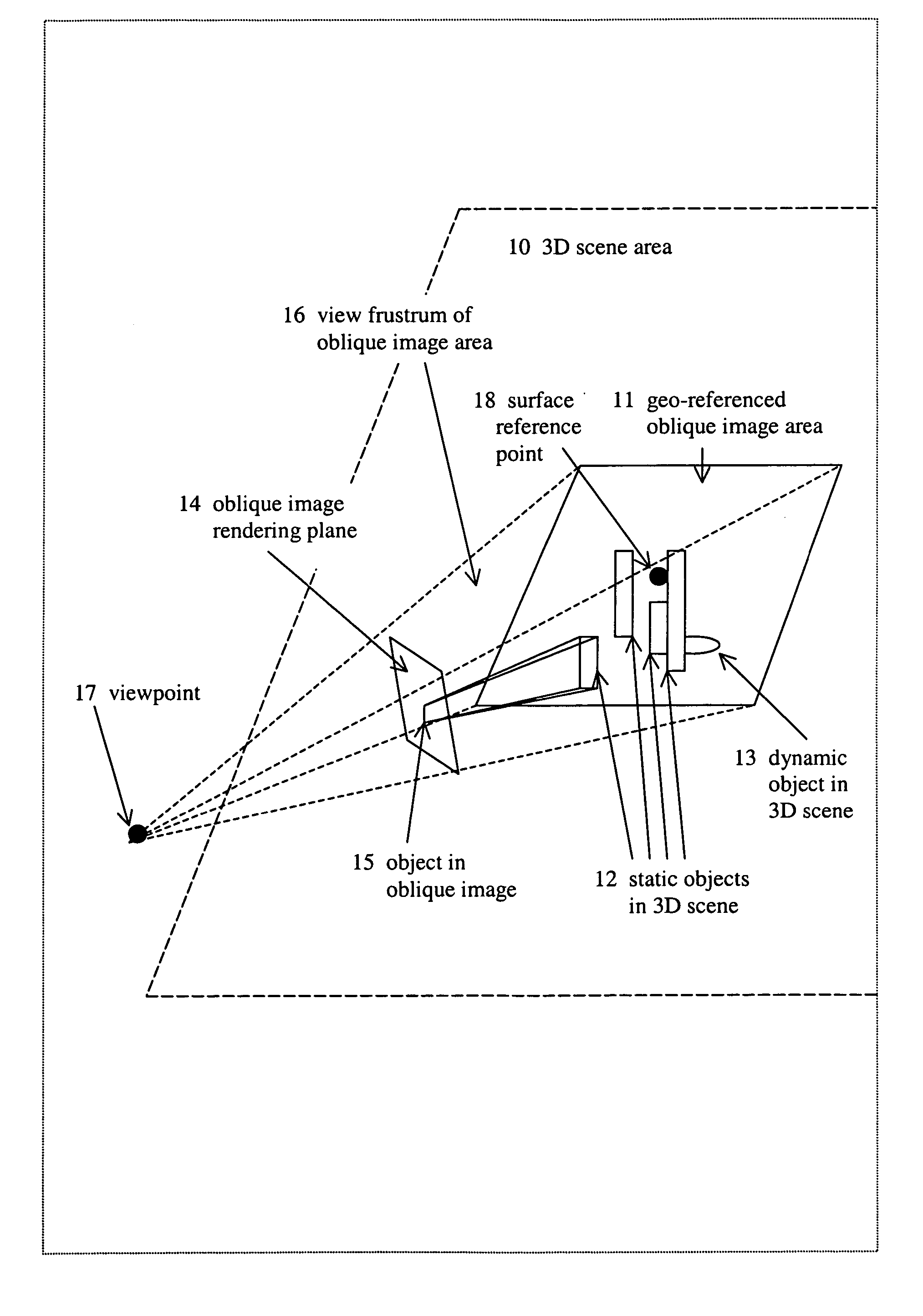

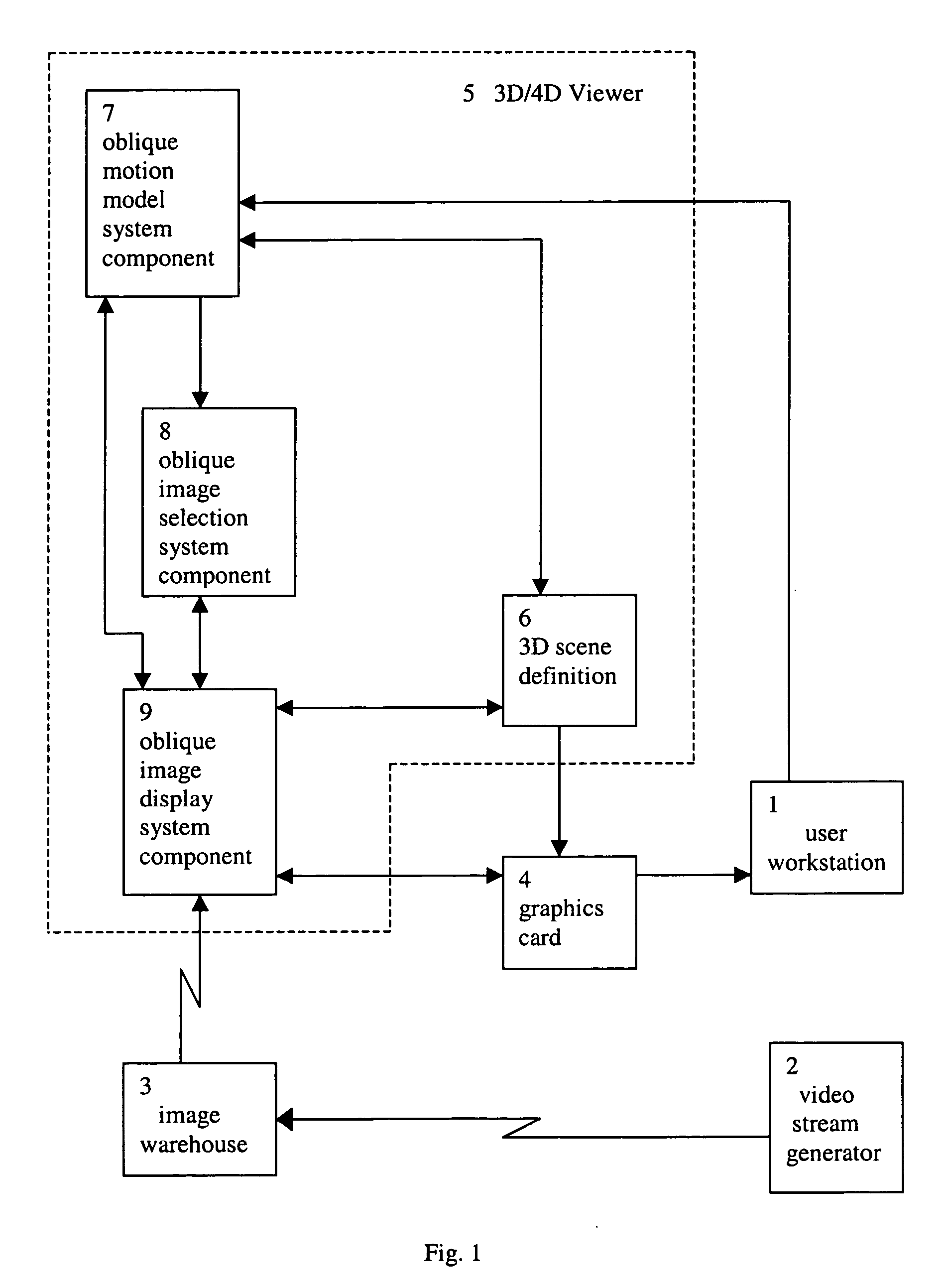

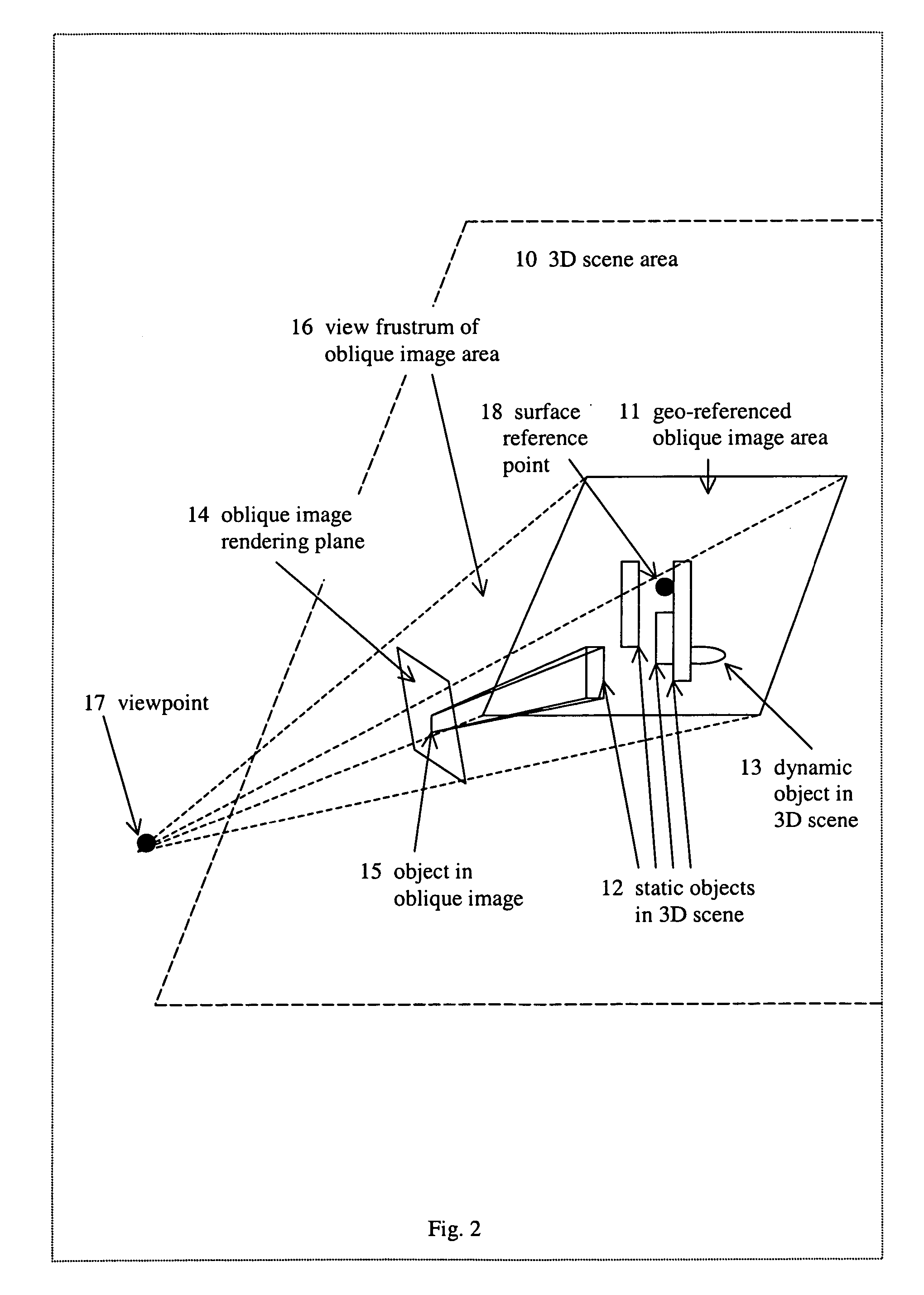

System for viewing a collection of oblique imagery in a three or four dimensional virtual scene

A system for selecting oblique images from a collection of geo-referenced oblique images and viewing them within the context of a virtual, three- or four-dimensional (3D space and time) geographic scene, providing the ability to analyze and interact with the oblique image being viewed. The system automatically selects and displays the best fit oblique image from an image warehouse based on the user's current 3D / 4D viewpoint, and continuously maintains the geo-registration of the oblique image as the user adjusts the viewpoint.

Owner:BALFOUR TECH
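
One plausible reading of "best fit" is the stored oblique image whose capture direction is angularly closest to the user's current view direction. The sketch below makes exactly that assumption. It ignores footprint coverage, resolution and capture time, which a real selector would likely weigh, and names such as `ObliqueImage` and `best_fit_image` are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]

@dataclass
class ObliqueImage:
    image_id: str
    look_dir: Vec3    # camera viewing direction when the image was captured

def _unit(v: Vec3) -> Vec3:
    n = math.sqrt(sum(c * c for c in v))
    if n == 0.0:
        raise ValueError("direction vector must be non-zero")
    return (v[0] / n, v[1] / n, v[2] / n)

def best_fit_image(images: List[ObliqueImage], view_dir: Vec3) -> Optional[ObliqueImage]:
    """Return the image whose look direction makes the smallest angle with view_dir."""
    if not images:
        return None
    vd = _unit(view_dir)

    def score(img: ObliqueImage) -> float:
        ld = _unit(img.look_dir)
        return sum(a * b for a, b in zip(ld, vd))   # cosine of the angle between directions

    return max(images, key=score)
```

Re-running the selection whenever the viewpoint changes would give the continuous "best fit" behavior the abstract describes, under this simplified scoring.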

Spatial browsing approach to multimedia information retrieval

Inactive · US6281898B1 · Improve organization; Television system details; Color television details; Information space; Computer graphics (images)

A three dimensional user interface allows browsing of a database displayed as a three dimensional information space. The data is organized along three axes. A current plane or layer of data is summarized on an information landscape, with different planes being selectable using a tower that is located at the intersection of the three axes. A control wall with incorporated tools is used to formulate database queries. A preview wall previews selected data. The preview wall also provides transition between previewing the searches in the 3D space and actual viewing of the programming in full screen 2D display. Application to TV programming data is shown.

Owner:US PHILIPS CORP

3D imaging and processing system including at least one 3D or depth sensor which is continually calibrated during use

Inactive · US20130329012A1 · Low cost; Simplify use; Image enhancement; Image analysis; 3D sensor; Observational error

3D imaging and processing methods and systems including at least one 3D or depth sensor that is continuously calibrated during use are provided. In one embodiment, a calibration apparatus or object is continuously visible in the field of view of each 3D sensor. In another embodiment, such a calibration apparatus is not needed. Continuously calibrated 3D sensors improve the accuracy and reliability of depth measurements. The calibration system and method can be used to ensure the accuracy of measurements made with any of a variety of 3D sensor technologies. To reduce the cost of implementation, the invention can be used with inexpensive, consumer-grade 3D sensors to correct measurement errors and other deviations of measurements from the true location and orientation of an object in 3D space.

Owner:LIBERTY REACH
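
Correcting measurement deviations against a calibration object can be illustrated by fitting a linear correction from the sensor's measured depths to the object's known depths. This is a minimal sketch assuming the error is a simple scale plus offset along the depth axis; the patent does not state its correction model, and both function names are hypothetical.

```python
from typing import List, Tuple

def fit_depth_correction(measured: List[float], reference: List[float]) -> Tuple[float, float]:
    """Least-squares fit of reference ~= a * measured + b (assumed linear error model)."""
    n = len(measured)
    if n != len(reference) or n < 2:
        raise ValueError("need at least two paired samples")
    mx = sum(measured) / n
    my = sum(reference) / n
    sxx = sum((x - mx) ** 2 for x in measured)
    if sxx == 0:
        raise ValueError("measured depths must not all be equal")
    sxy = sum((x - mx) * (y - my) for x, y in zip(measured, reference))
    a = sxy / sxx           # scale correction
    b = my - a * mx         # offset correction
    return a, b

def correct_depth(z: float, a: float, b: float) -> float:
    """Apply the fitted correction to a raw depth reading."""
    return a * z + b
```

Refitting `a` and `b` each time the calibration object is observed is one simple way to make the correction continuous, as the abstract describes.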

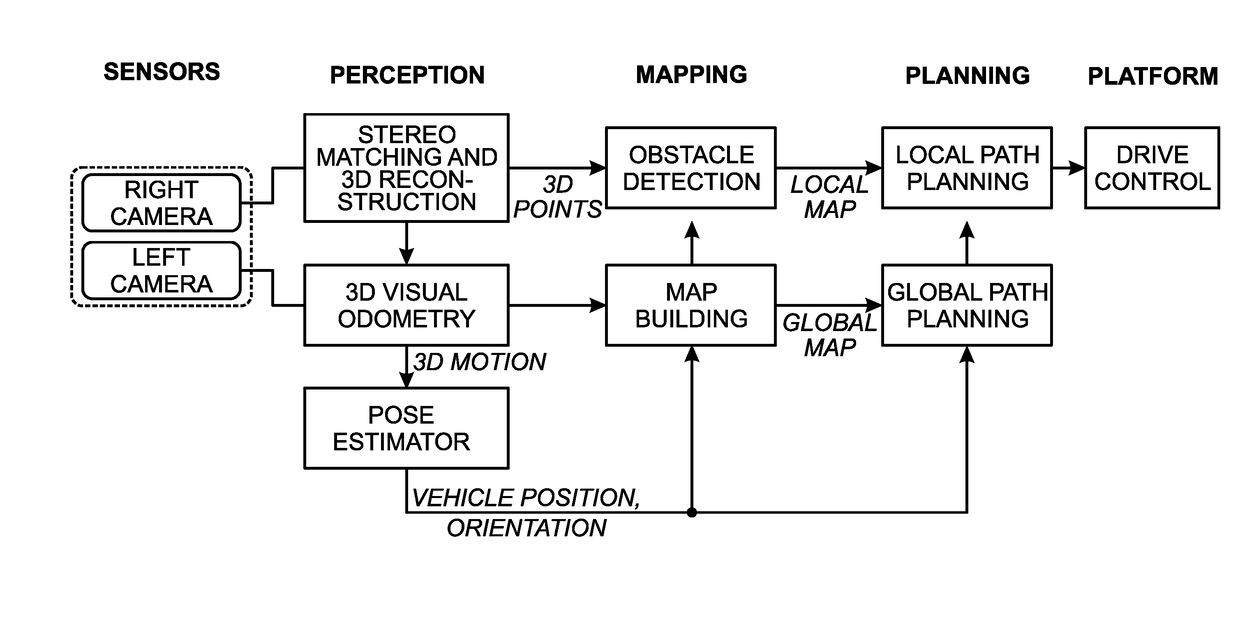

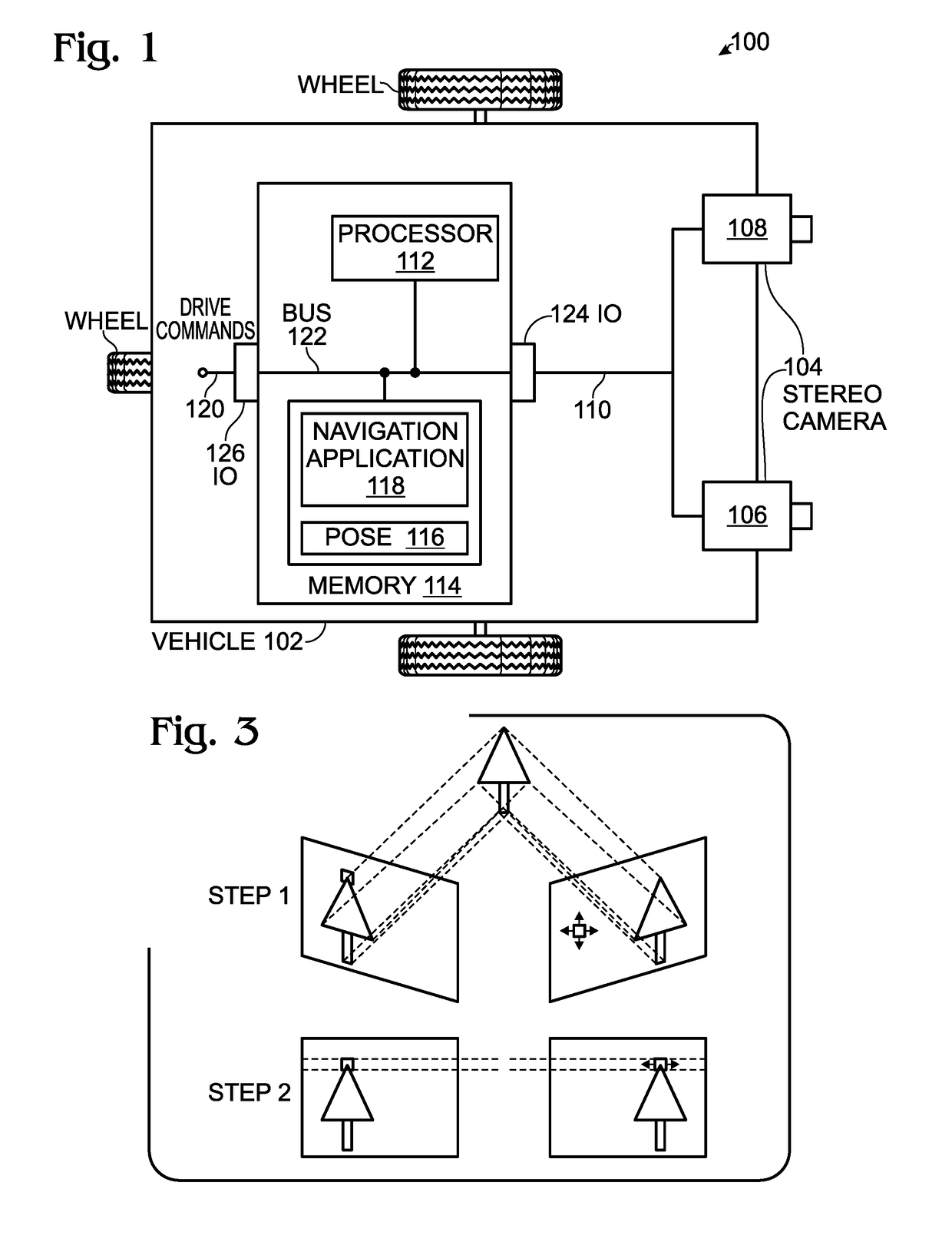

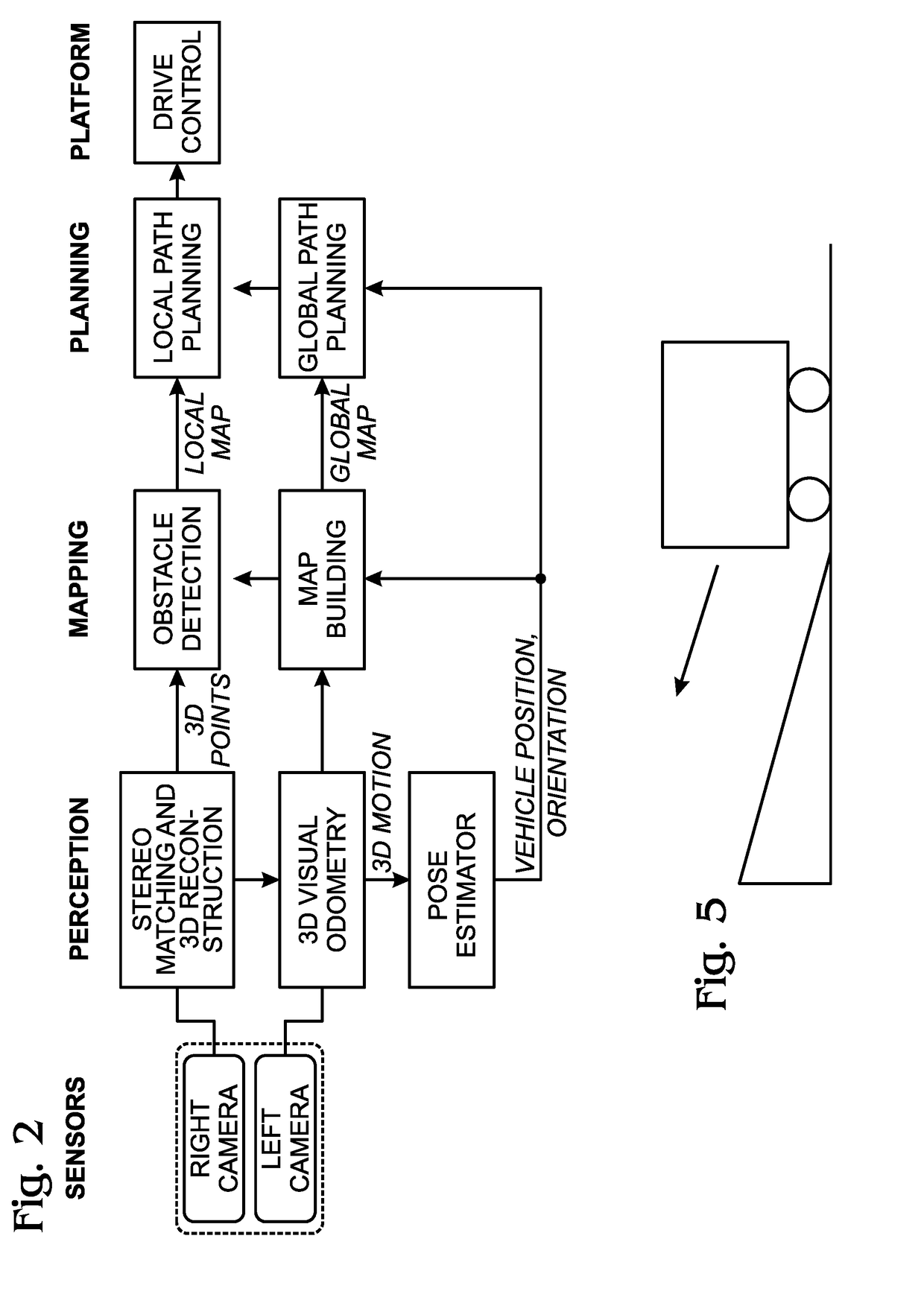

Autonomous Navigation using Visual Odometry

A system and method are provided for autonomously navigating a vehicle. The method captures a sequence of image pairs using a stereo camera. A navigation application stores a vehicle pose (a history of vehicle positions). The application detects a plurality of matching feature points in a first matching image pair, and determines a plurality of corresponding object points in three-dimensional (3D) space from the first image pair. A plurality of feature points are tracked from the first image pair to a second image pair, and the plurality of corresponding object points in 3D space are determined from the second image pair. From this, a vehicle pose transformation is calculated using the object points from the first and second image pairs. The rotation angle and translation are determined from the vehicle pose transformation. If the rotation angle or translation exceeds a minimum threshold, the stored vehicle pose is updated.

Owner:SHARP KK
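
The threshold test at the end of the abstract can be sketched as follows, assuming the pose transformation is available as a 3x3 rotation matrix and a translation vector. The rotation angle follows from the identity trace(R) = 1 + 2 cos θ; the threshold values used below are hypothetical.

```python
import math
from typing import Sequence, Tuple

Mat3 = Sequence[Sequence[float]]
Vec3 = Tuple[float, float, float]

def rotation_angle(rot: Mat3) -> float:
    """Rotation angle (radians) of a 3x3 rotation matrix, using trace = 1 + 2*cos(theta)."""
    trace = rot[0][0] + rot[1][1] + rot[2][2]
    cos_theta = max(-1.0, min(1.0, (trace - 1.0) / 2.0))   # clamp floating-point noise
    return math.acos(cos_theta)

def should_update_pose(rot: Mat3, trans: Vec3,
                       min_angle: float, min_translation: float) -> bool:
    """Update the stored pose only if rotation or translation exceeds its threshold."""
    moved = math.sqrt(sum(t * t for t in trans))
    return rotation_angle(rot) > min_angle or moved > min_translation
```

For instance, `should_update_pose` with an identity rotation, a translation of (0.01, 0, 0), `min_angle=0.05` and `min_translation=0.1` returns `False`, because neither threshold is exceeded; skipping such tiny updates keeps noise from accumulating in the stored pose.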

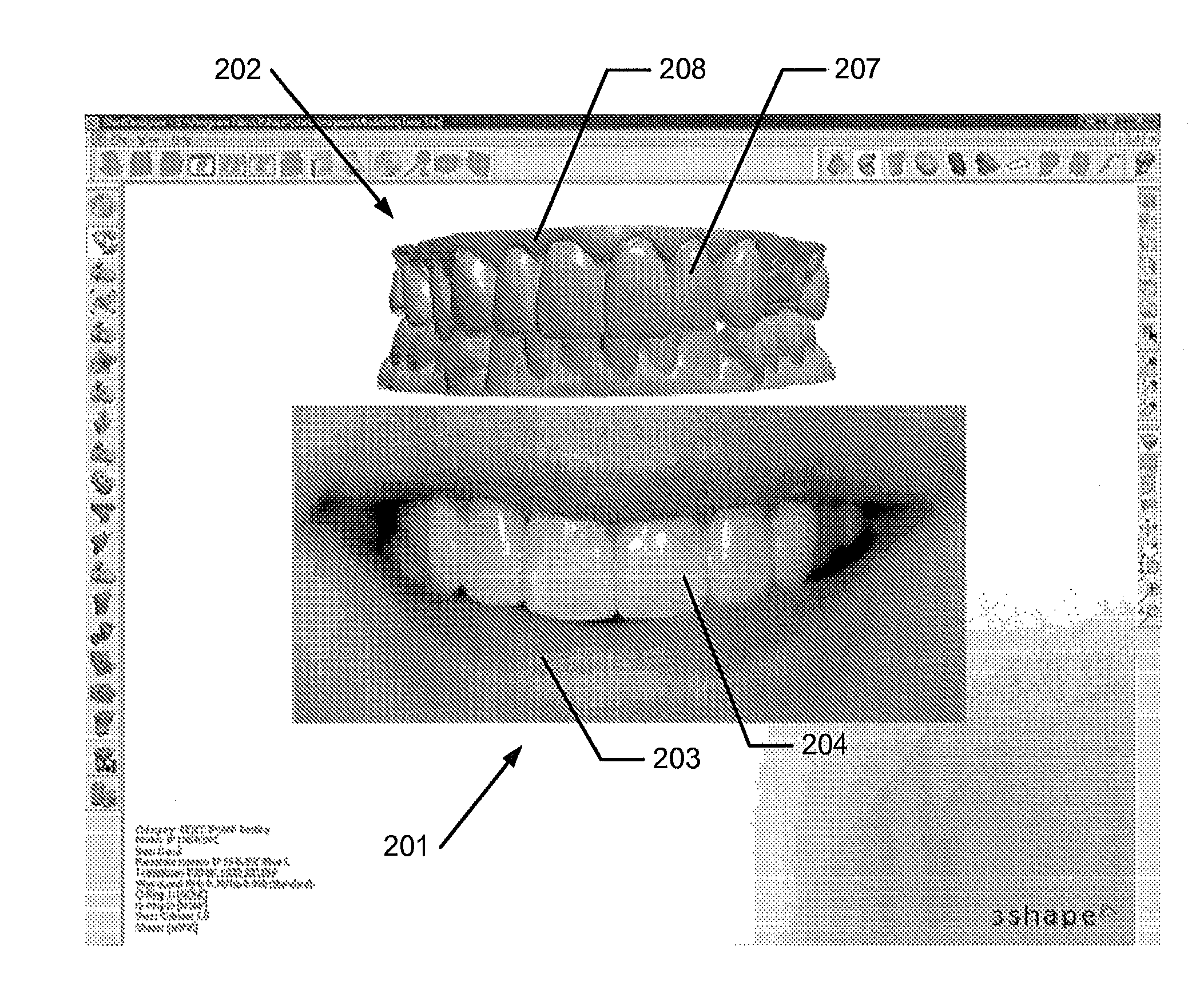

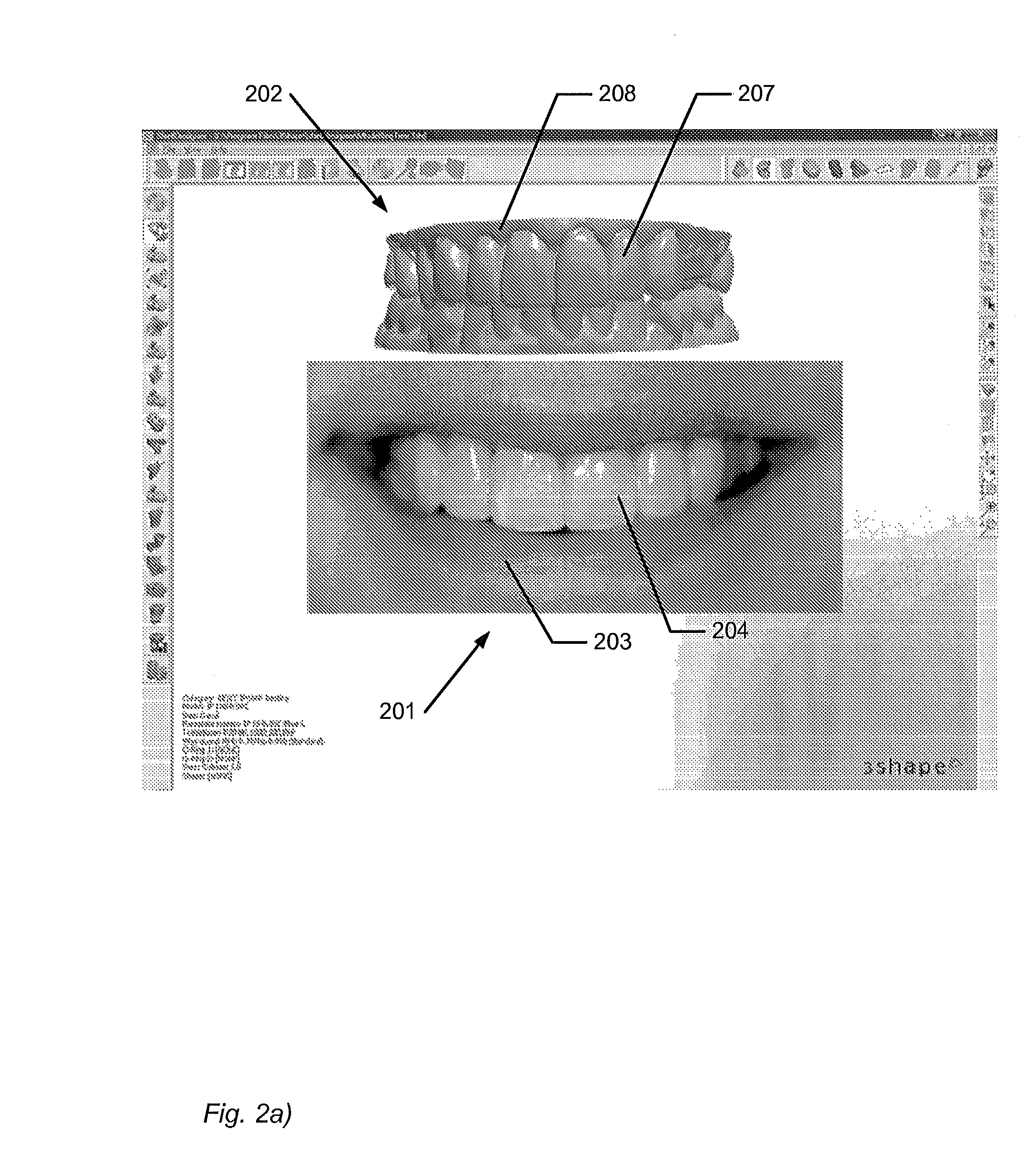

2D image arrangement

Active · US20130218530A1 · Good for comparison; Detection is slight; Impression caps; Additive manufacturing apparatus; Viewpoints; Computer graphics (images)

Disclosed is a method of designing a dental restoration for a patient, wherein the method includes providing one or more 2D images, where at least one 2D image includes at least one facial feature; providing a 3D virtual model of at least part of the patient's oral cavity; arranging at least one of the one or more 2D images relative to the 3D virtual model in a virtual 3D space such that the 2D image and the 3D virtual model are aligned when viewed from a viewpoint, whereby the 3D virtual model and the 2D image are both visualized in the 3D space; and modeling a restoration on the 3D virtual model, where the restoration is designed to fit the facial feature of the at least one 2D image.

Owner:3SHAPE AS

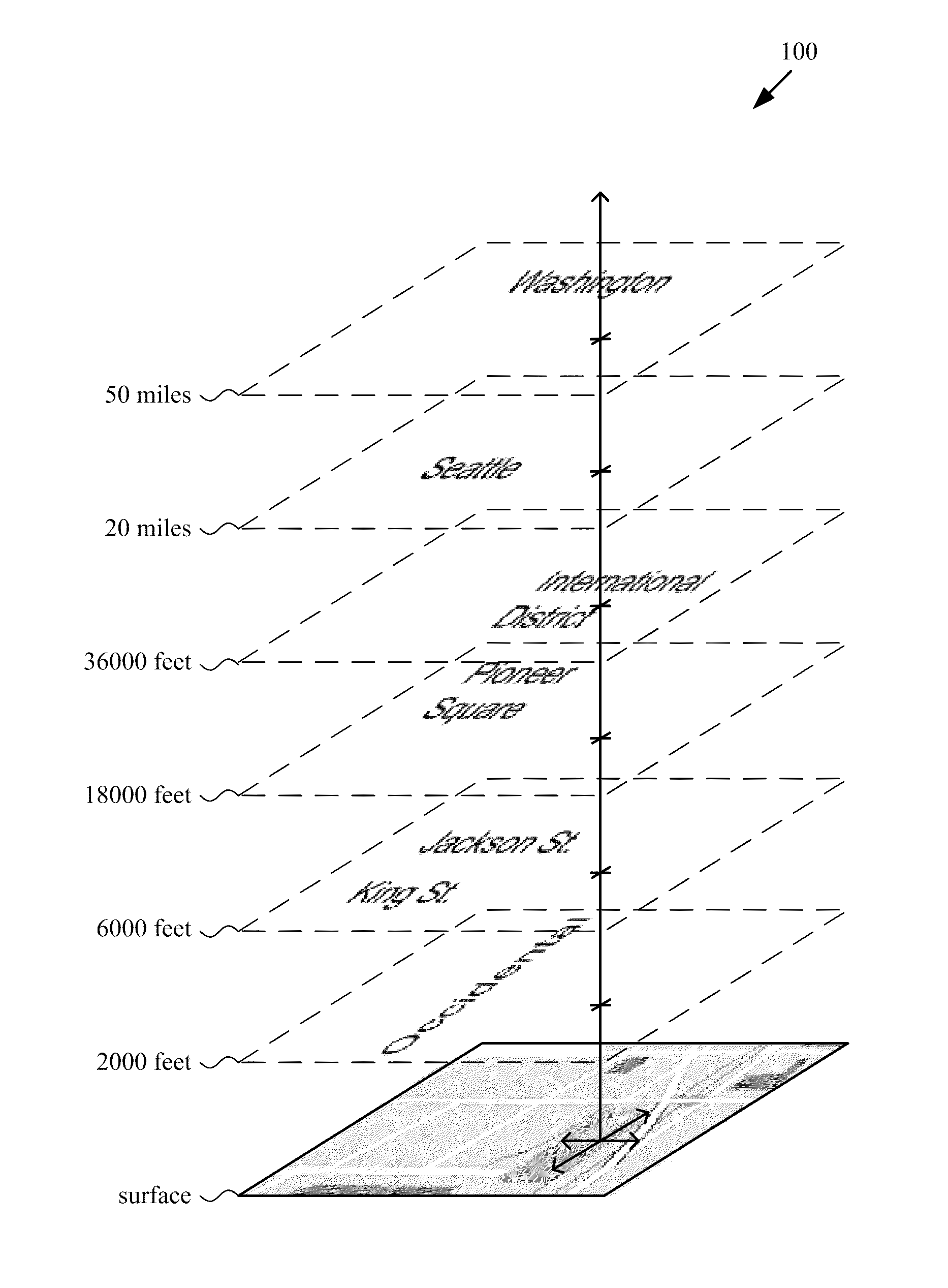

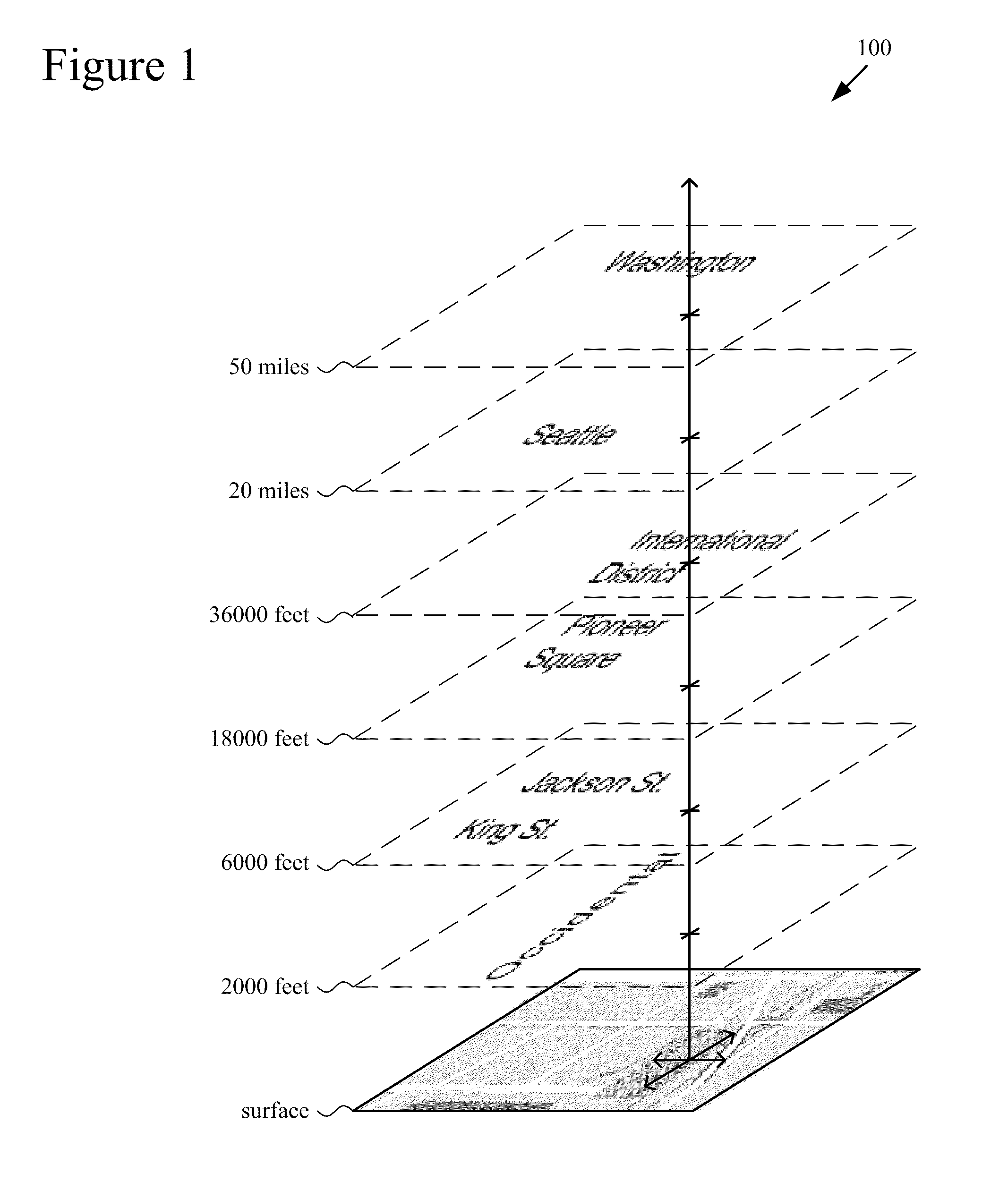

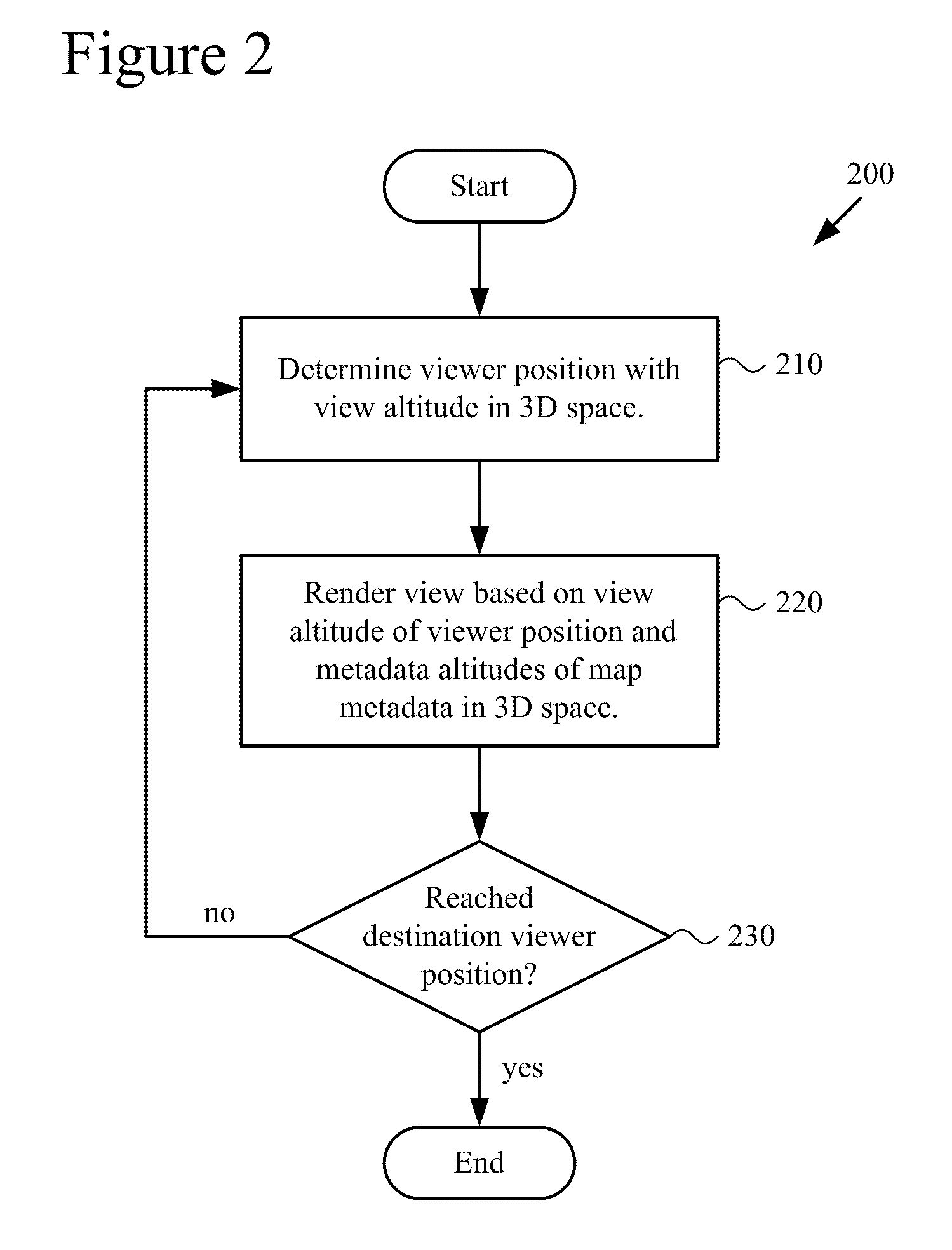

3D layering of map metadata

Active · US20120019513A1 · Good effect; Improve experience; Maps/plans/charts; Vehicle position indication; Parallax; Surface layer

Techniques and tools are described for rendering views of a map in which map metadata elements are layered in 3D space through which a viewer navigates. Layering of metadata elements such as text labels in 3D space facilitates parallax and smooth motion effects for zoom-in, zoom-out and scrolling operations during map navigation. A computing device can determine a viewer position that is associated with a view altitude in 3D space, then render for display a map view based upon the viewer position and metadata elements layered at different metadata altitudes in 3D space. For example, the computing device places text labels in 3D space above features associated with the respective labels, at the metadata altitudes indicated for the respective labels. The computing device creates a map view from points of the placed labels and points of a surface layer of the map that are visible from the viewer position.

Owner:MICROSOFT TECH LICENSING LLC
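
The parallax effect comes from perspective projection: a label placed higher in 3D space is closer to the viewer, so it shifts farther on screen for the same scroll. The sketch assumes a viewer looking straight down through a pinhole camera with focal length `focal`; `project_label` is a hypothetical helper, not the patent's renderer.

```python
from typing import Tuple

def project_label(label_xy: Tuple[float, float], label_alt: float,
                  viewer_xy: Tuple[float, float], view_alt: float,
                  focal: float = 1.0) -> Tuple[float, float]:
    """Screen position of a label at label_alt for a viewer looking straight down."""
    depth = view_alt - label_alt          # distance from the eye to the label's plane
    if depth <= 0:
        raise ValueError("label must be below the viewer")
    return (focal * (label_xy[0] - viewer_xy[0]) / depth,
            focal * (label_xy[1] - viewer_xy[1]) / depth)
```

With the viewer at altitude 100 scrolled 10 units along x, a ground label (altitude 0) at the origin lands at screen x = -0.1, while a label at altitude 50 lands at x = -0.2, twice as far, which is the depth cue the layering produces.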

Method for aiding space design using network, system therefor, and server computer of the system

Provided is an environment which enables a user to implement 3D space design on a computer accessible to a server computer via a network. The server computer includes a layout design program which is operable on a screen activated by a browser of the client computer to enable the user to implement space design of a layout in the terms of a 2D image, an object database which stores object data used for layout design for retrieval and extraction, and a 3D display program which is operable on the browser screen of the client computer to display the designed space in the terms of a 3D image. The client computer is equipped with the browser capable of executing programs of the layout design program and the 3D display program. Upon receiving the layout design program and the 3D display program from the server computer via the network, the programs of the layout design program and the 3D display program are executable on the browser screen.

Owner:PANASONIC CORP

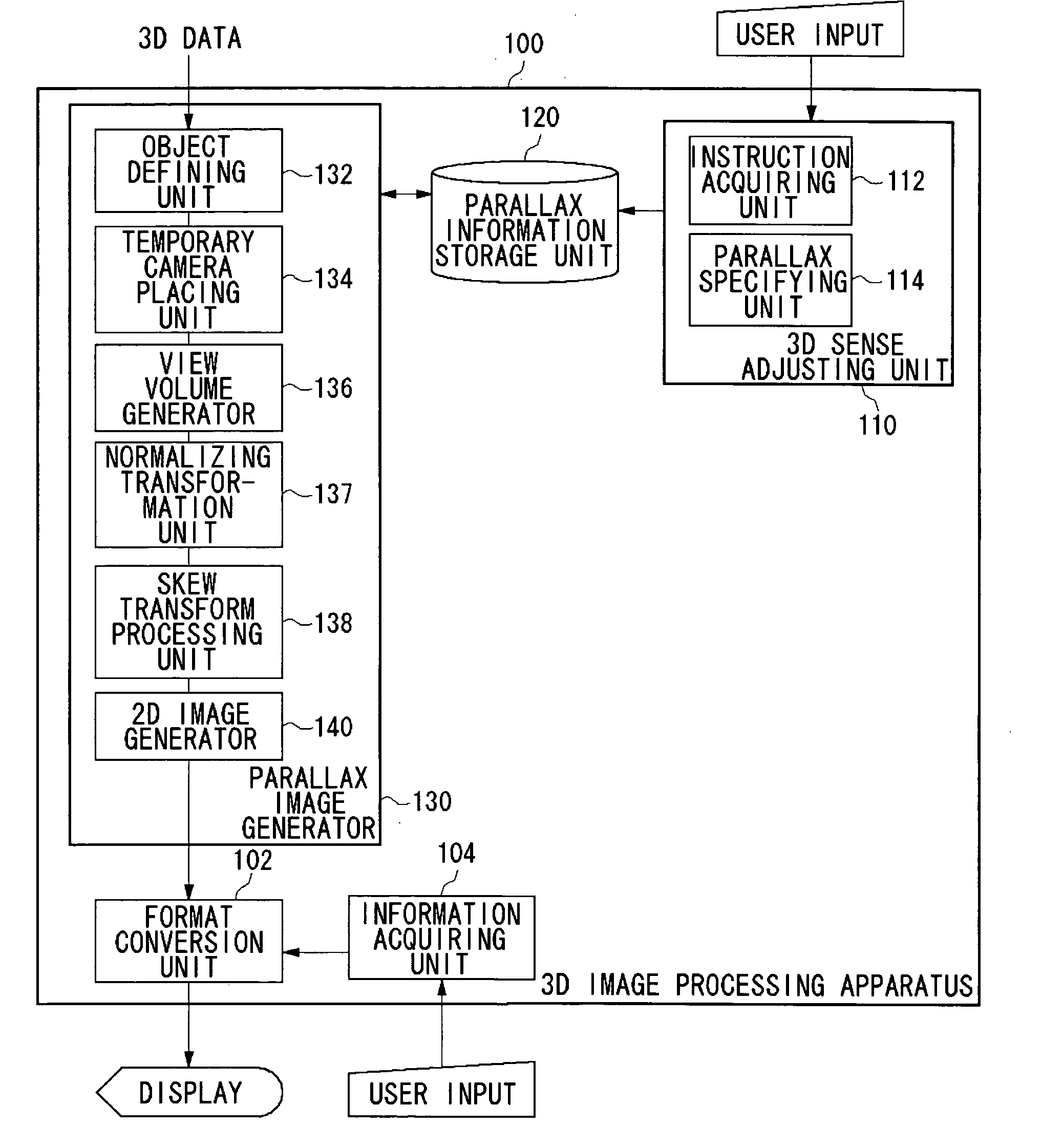

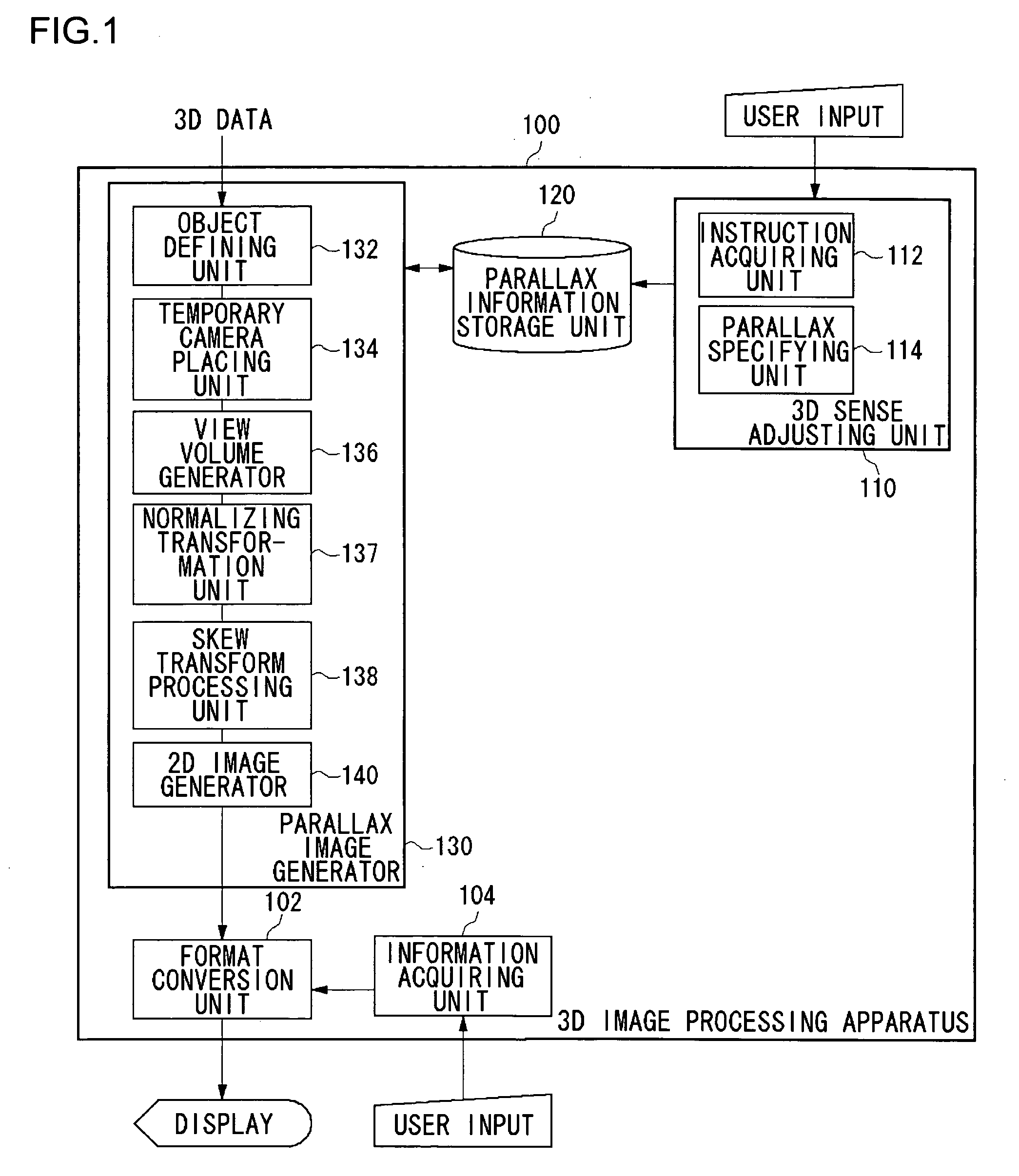

Method and apparatus for processing three-dimensional images

Inactive · US20050253924A1 · Easy to handle; Increase the compression ratio; Stereoscopic systems; Parallax; Computer graphics (images)

A 3D image processing apparatus first generates, from a single temporary camera placed in a virtual 3D space, a combined view volume that contains the view volumes set respectively by a plurality of real cameras. The apparatus then performs a skewing transformation on the combined view volume to acquire a view volume for each of the real cameras. Finally, the two view volumes acquired for the real cameras are projected onto a projection plane to produce 2D images having parallax. Because the 2D images that serve as base points for a parallax image can be produced with the temporary camera alone, by acquiring a view volume for each real camera, the processing needed to actually place the real cameras can be skipped, which speeds up processing as a whole.

Owner:SANYO ELECTRIC CO LTD

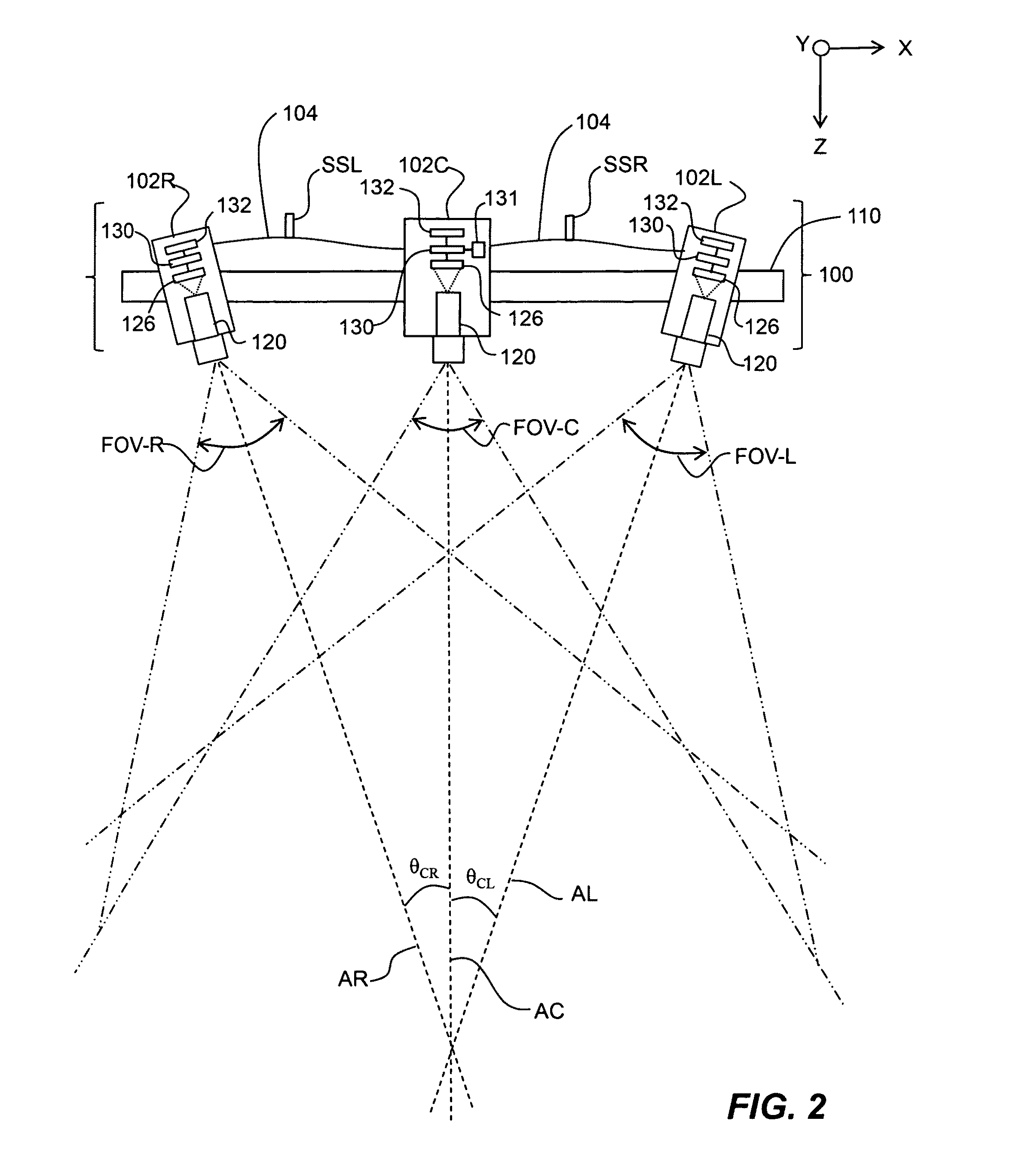

Methods and apparatus for practical 3D vision system

Active · US20070081714A1 · Facilitates finding patterns; High match score; Character and pattern recognition; Camera lens; Machine vision

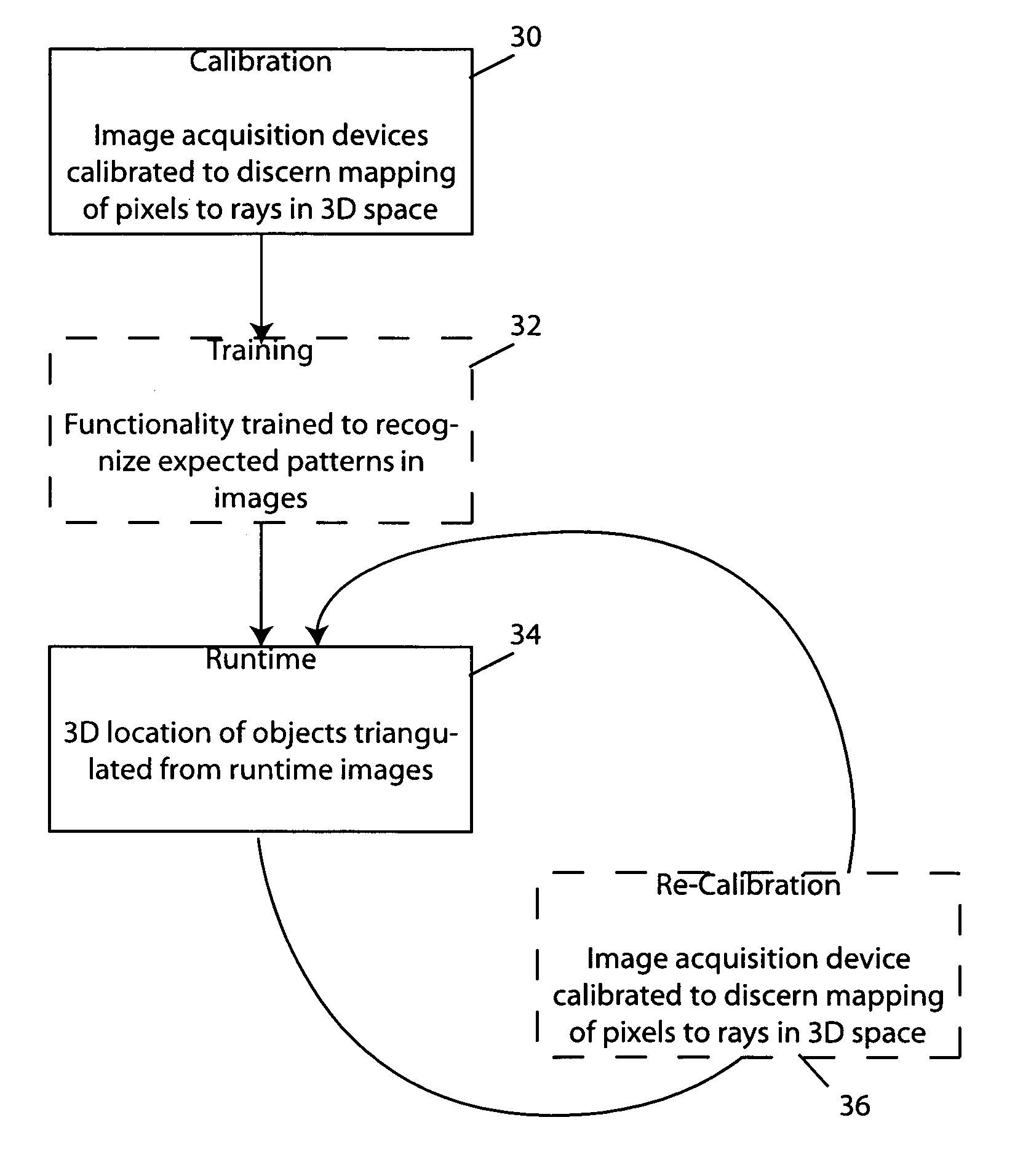

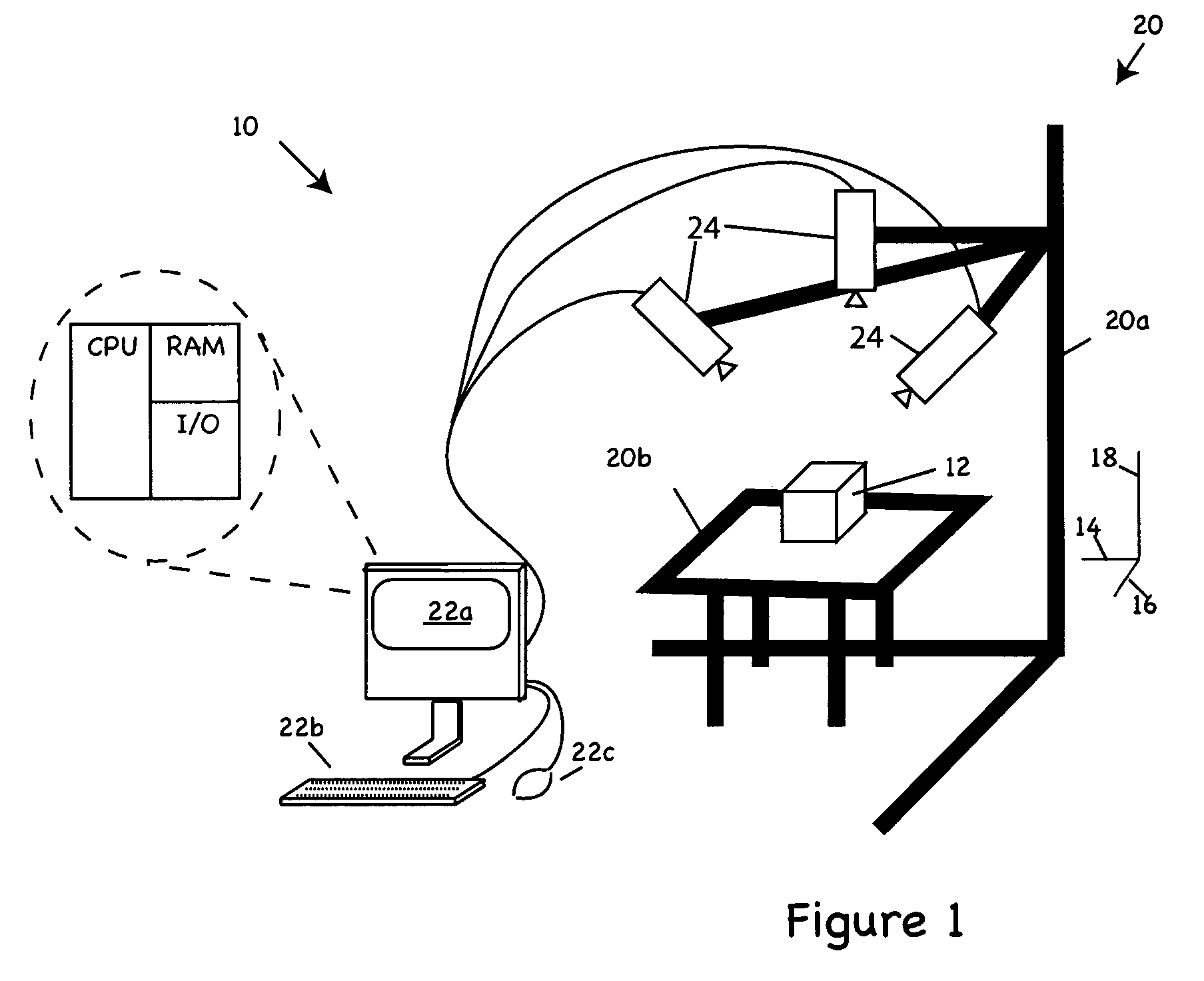

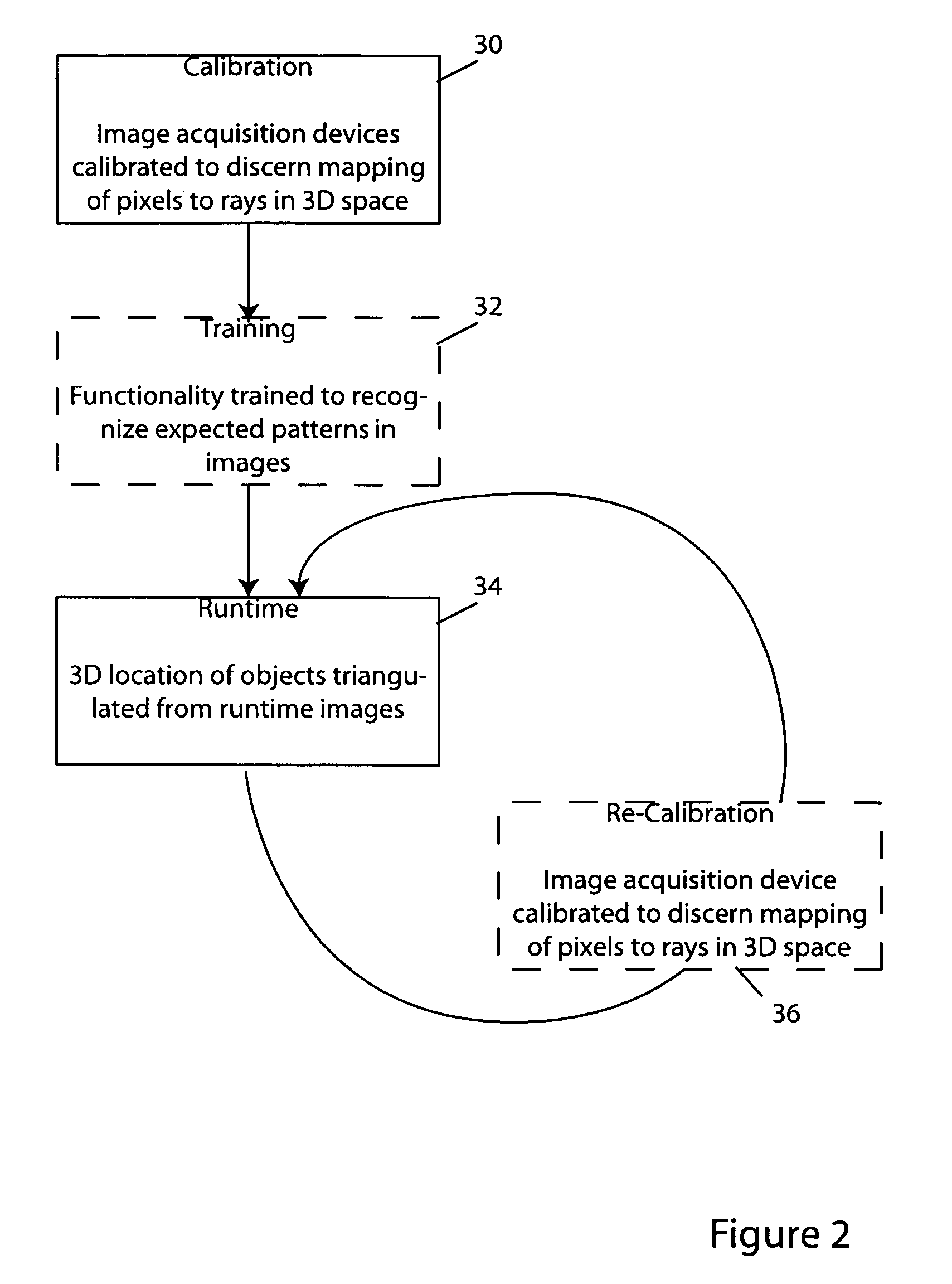

The invention provides, inter alia, methods and apparatus for determining the pose (e.g., position along the x-, y- and z-axes, plus pitch, roll and yaw, or one or more characteristics of that pose) of an object in three dimensions by triangulating data gleaned from multiple images of the object. For example, in one aspect, the invention provides a method for 3D machine vision in which, during a calibration step, multiple cameras disposed to acquire images of the object from different viewpoints are calibrated to discern a mapping function that identifies the rays in 3D space, emanating from each camera's lens, that correspond to pixel locations in that camera's field of view. In a training step, functionality associated with the cameras is trained to recognize expected patterns in images to be acquired of the object. A runtime step triangulates the locations in 3D space of one or more of those patterns from the pixel-wise positions of those patterns in images of the object and from the mappings discerned during the calibration step.

Owner:COGNEX TECH & INVESTMENT
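
The calibration mapping and runtime triangulation can be sketched with two small helpers. `pixel_ray` assumes an ideal pinhole camera with hypothetical intrinsics, standing in for the patent's calibrated mapping function. Because real rays rarely meet exactly, `triangulate_midpoint` returns the midpoint of the shortest segment between two rays; this is a common textbook choice, assumed here rather than taken from the patent.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]

def pixel_ray(u: float, v: float, fx: float, fy: float, cx: float, cy: float) -> Vec3:
    """Camera-frame ray direction through pixel (u, v) for an assumed pinhole model."""
    return ((u - cx) / fx, (v - cy) / fy, 1.0)

def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def triangulate_midpoint(o1: Vec3, d1: Vec3, o2: Vec3, d2: Vec3) -> Vec3:
    """Midpoint of the shortest segment between rays o1 + s*d1 and o2 + t*d2."""
    w0 = (o1[0] - o2[0], o1[1] - o2[1], o1[2] - o2[2])
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel; no unique triangulation")
    s = (b * e - c * d) / denom   # parameter of the closest point on ray 1
    t = (a * e - b * d) / denom   # parameter of the closest point on ray 2
    p1 = tuple(o1[i] + s * d1[i] for i in range(3))
    p2 = tuple(o2[i] + t * d2[i] for i in range(3))
    return tuple((p1[i] + p2[i]) / 2.0 for i in range(3))
```

For example, rays from cameras at (-1, 0, 0) and (1, 0, 0) aimed along (1, 0, 5) and (-1, 0, 5) triangulate to (0, 0, 5). Ray directions from `pixel_ray` are in each camera's own frame and would need rotating into a shared world frame before triangulation.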

Prosthetic hip installation system

A prosthetic hip installation system comprising a reamer, an impactor, a tracking element, and a remote system. The tracking element can be integrated into the reamer or impactor for providing tracking data on the position or orientation. Alternatively, the tracking element can be housed in a separate module that can be coupled to either the reamer or impactor. The tracking element will couple to a predetermined location. Points in 3D space can be registered to provide a frame of reference for the tracking element or when the tracking element is moved from tool to tool. The tracking element sends data from the reamer or impactor wirelessly. The remote system receives the tracking data and can further process the data. A display on the remote system can support placement and orientation of the tool to aid in the installation of the prosthetic component.

Owner:ORTHOSENSOR

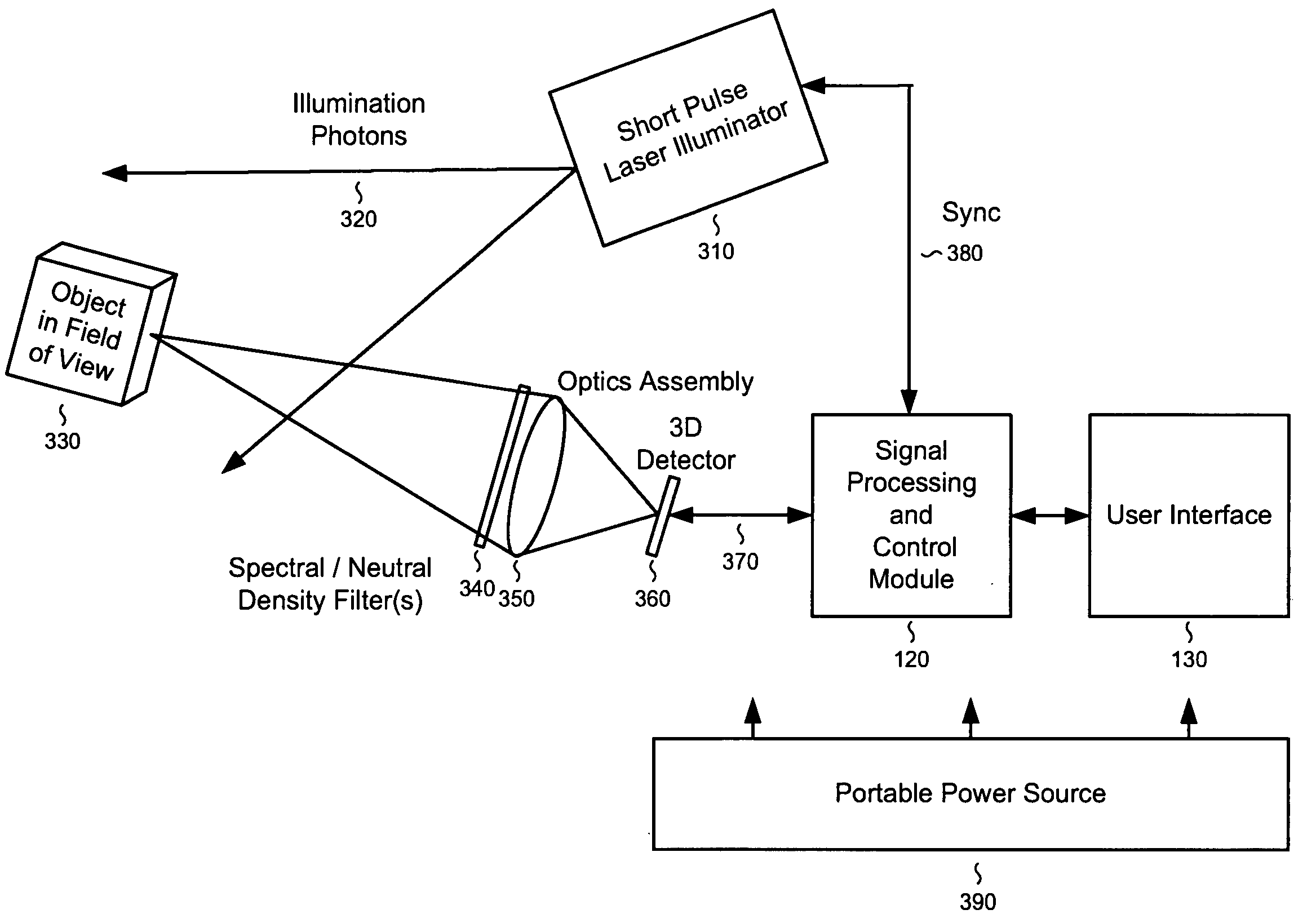

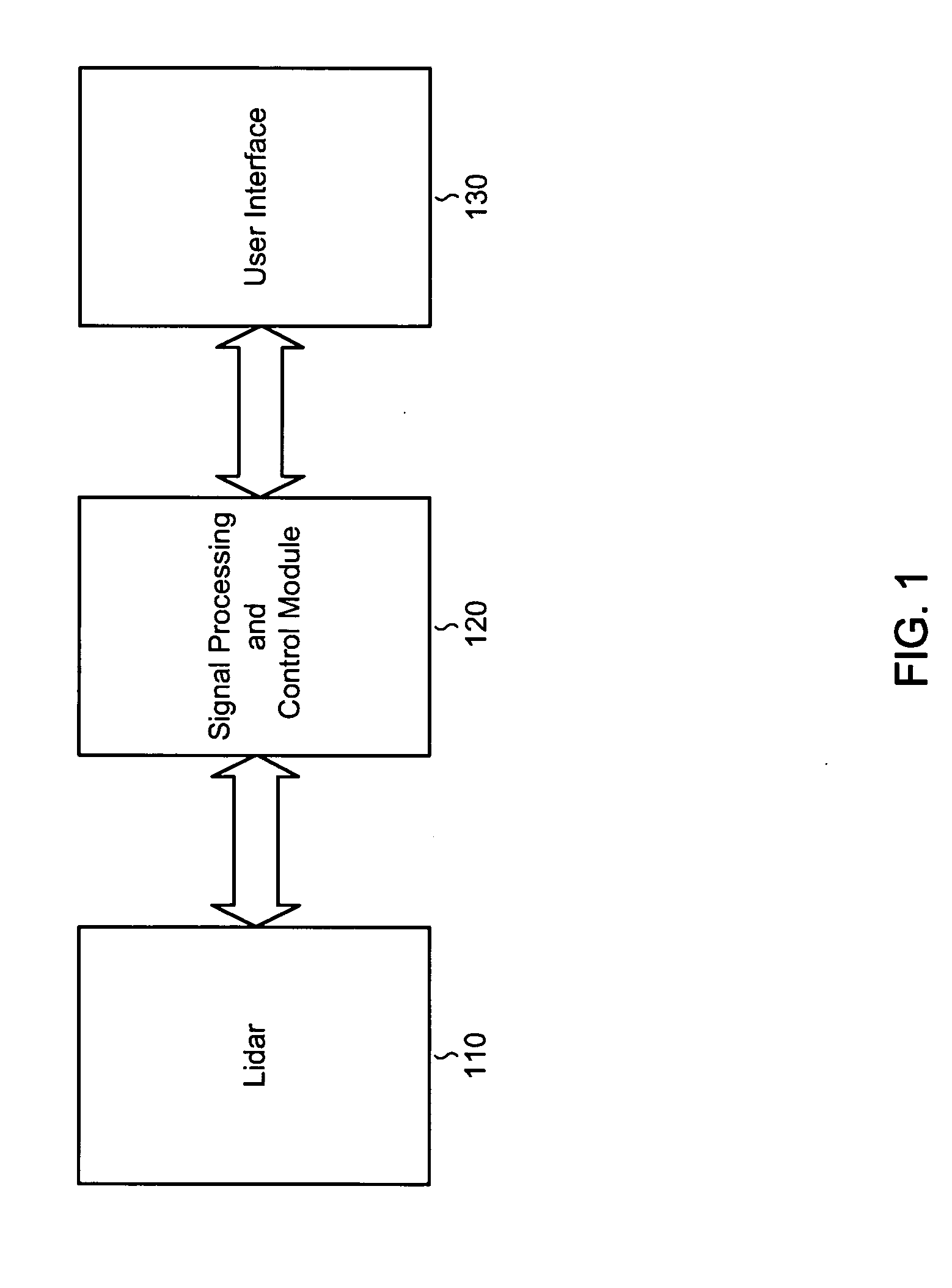

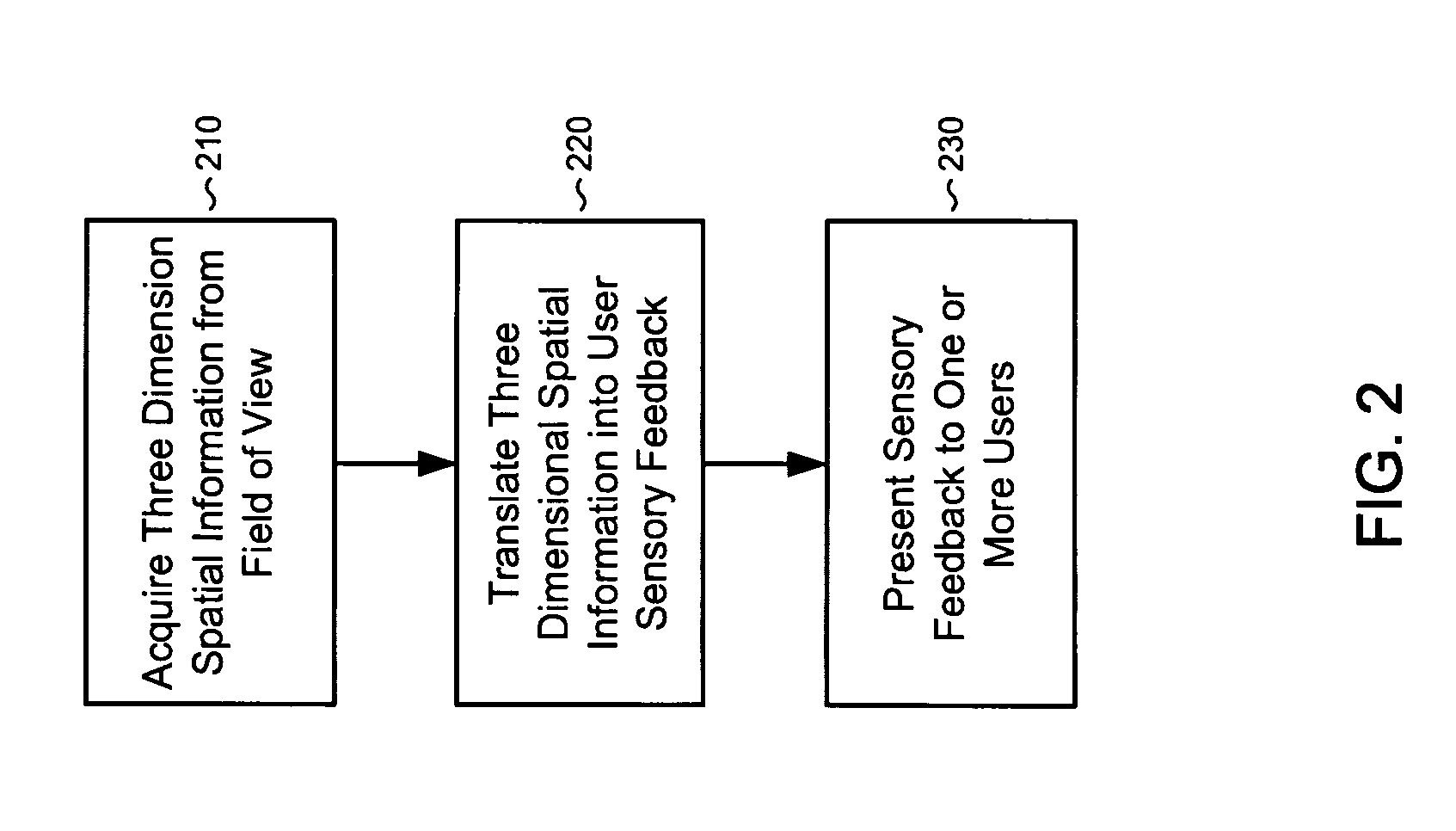

Systems and methods for laser radar imaging for the blind and visually impaired

Inactive · US20080309913A1 · Improve accuracy; Accurate information; Optical rangefinders; Solid-state devices; Visually impaired; Radar systems

A 3D imaging ladar system comprises a solid-state laser and Geiger-mode avalanche photodiodes, using a scanning imaging system together with a user interface to provide 3D spatial object information for vision augmentation for the blind. Depth and located-object information is presented acoustically by (1) generating an audio acoustic field that presents depth as amplitude and the audio image as a 2D location; (2) holographic acoustical imaging for a 3D sweep of the acoustic field; or (3) a 2D acoustic sweep combined with acoustic frequency information to create a 3D presentation. Also provided is a system that fuses data derived from a three-dimensional imaging ladar system with information from visible, ultraviolet, or infrared camera systems, and presents the information acoustically in a four- or five-dimensional acoustical format, using three-dimensional acoustic position information together with frequency and modulation to represent color, texture, or object recognition information.

Owner:FALLON JAMES JOHN

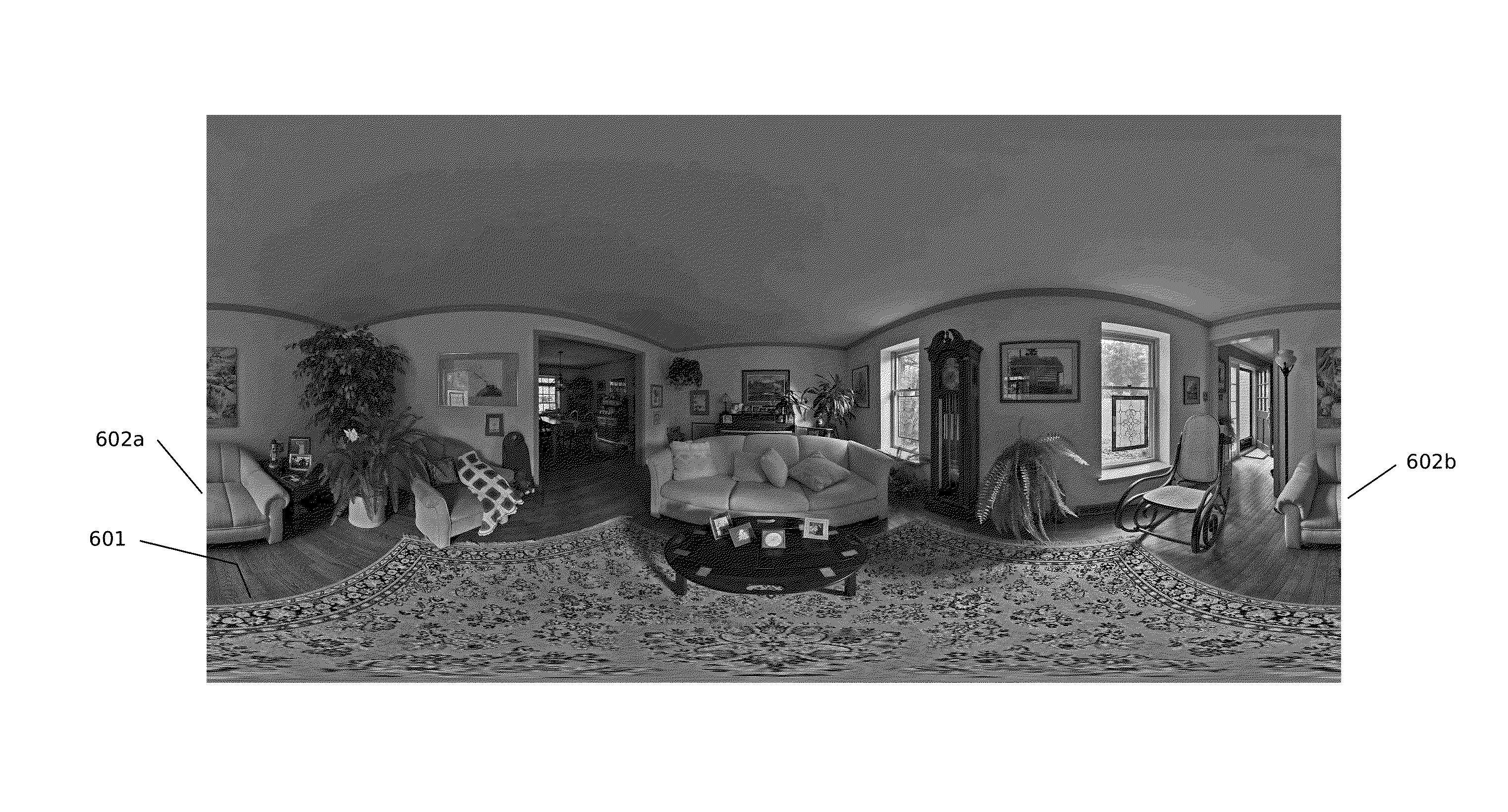

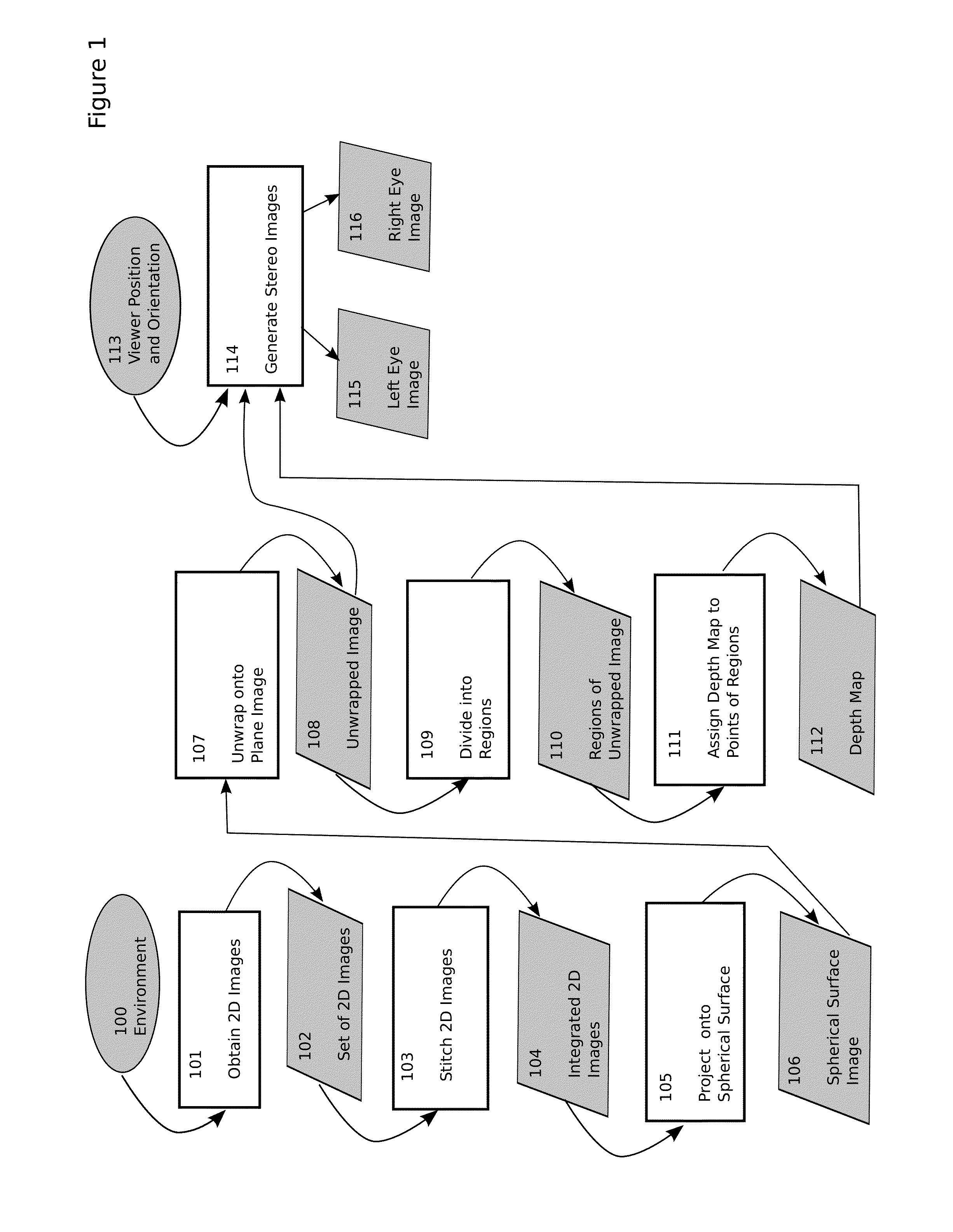

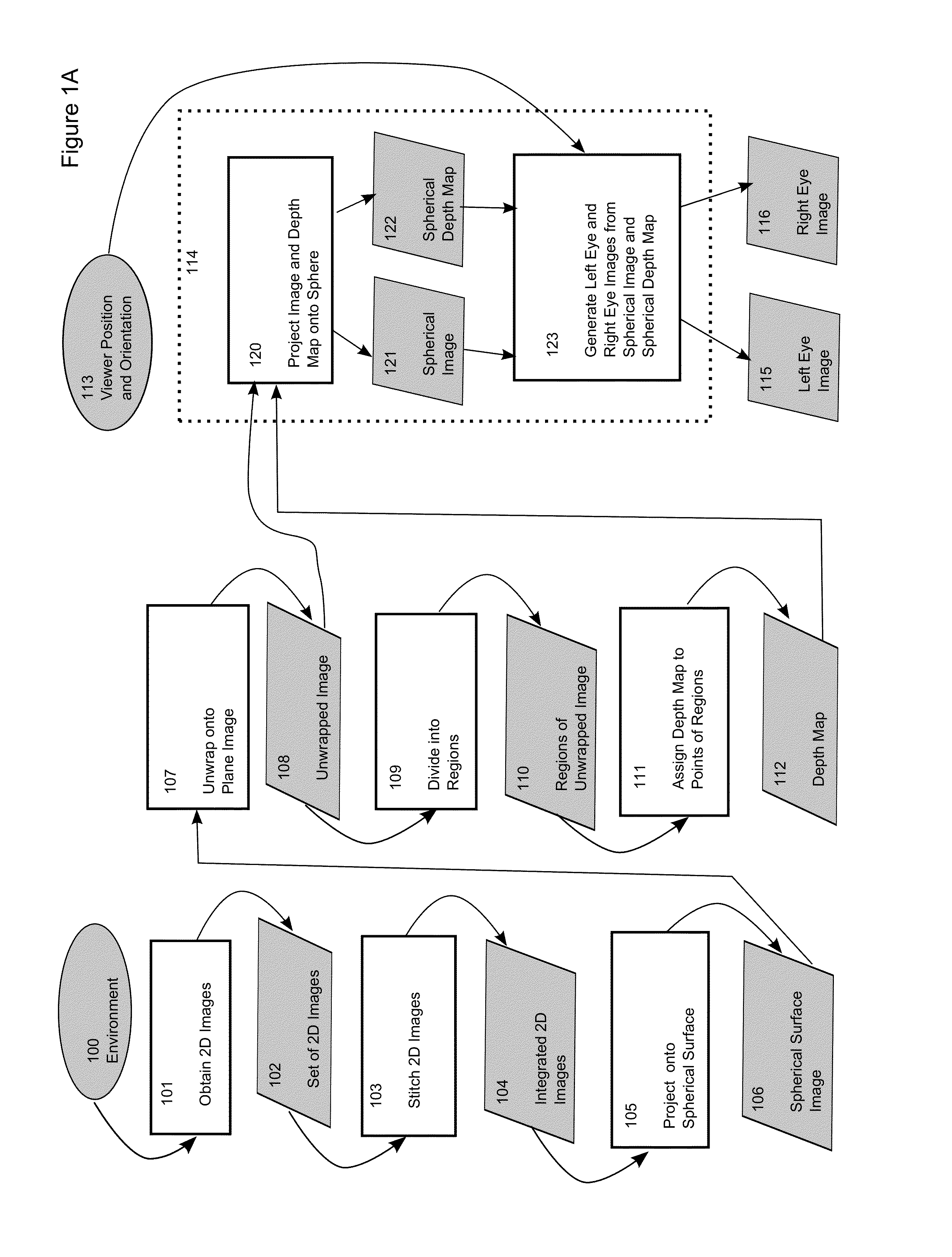

Method for creating 3D virtual reality from 2D images

A method that enables creation of a 3D virtual reality environment from a series of 2D images of a scene. Embodiments map 2D images onto a sphere to create a composite spherical image, divide the composite image into regions, and add depth information to the regions. Depth information may be generated by mapping regions onto flat or curved surfaces, and positioning these surfaces in 3D space. Some embodiments enable inserting, removing, or extending objects in the scene, adding or modifying depth information as needed. The final composite image and depth information are projected back onto one or more spheres, and then projected onto left and right eye image planes to form a 3D stereoscopic image for a viewer of the virtual reality environment. Embodiments enable 3D images to be generated dynamically for the viewer in response to changes in the viewer's position and orientation in the virtual reality environment.

Owner:LEGEND FILMS INC
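
Mapping 2D images onto a sphere requires a pixel-to-direction convention. The sketch below assumes an equirectangular composite image (longitude across the width, latitude down the height) and a y-up frame that looks along +z at the image center. The abstract does not specify the projection, so this is one common choice, not the patent's.

```python
import math
from typing import Tuple

def equirect_to_direction(u: float, v: float, width: int, height: int) -> Tuple[float, float, float]:
    """Unit view direction for image coordinates (u, v) of an assumed equirectangular panorama."""
    lon = (u / width) * 2.0 * math.pi - math.pi     # -pi at the left edge, +pi at the right edge
    lat = math.pi / 2.0 - (v / height) * math.pi    # +pi/2 at the top, -pi/2 at the bottom
    return (math.cos(lat) * math.sin(lon),          # x: to the right
            math.sin(lat),                          # y: up
            math.cos(lat) * math.cos(lon))          # z: forward
```

Scaling a direction by a depth value assigned to its region gives a 3D point, which is how depth-annotated regions can be positioned around the viewer in 3D space.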

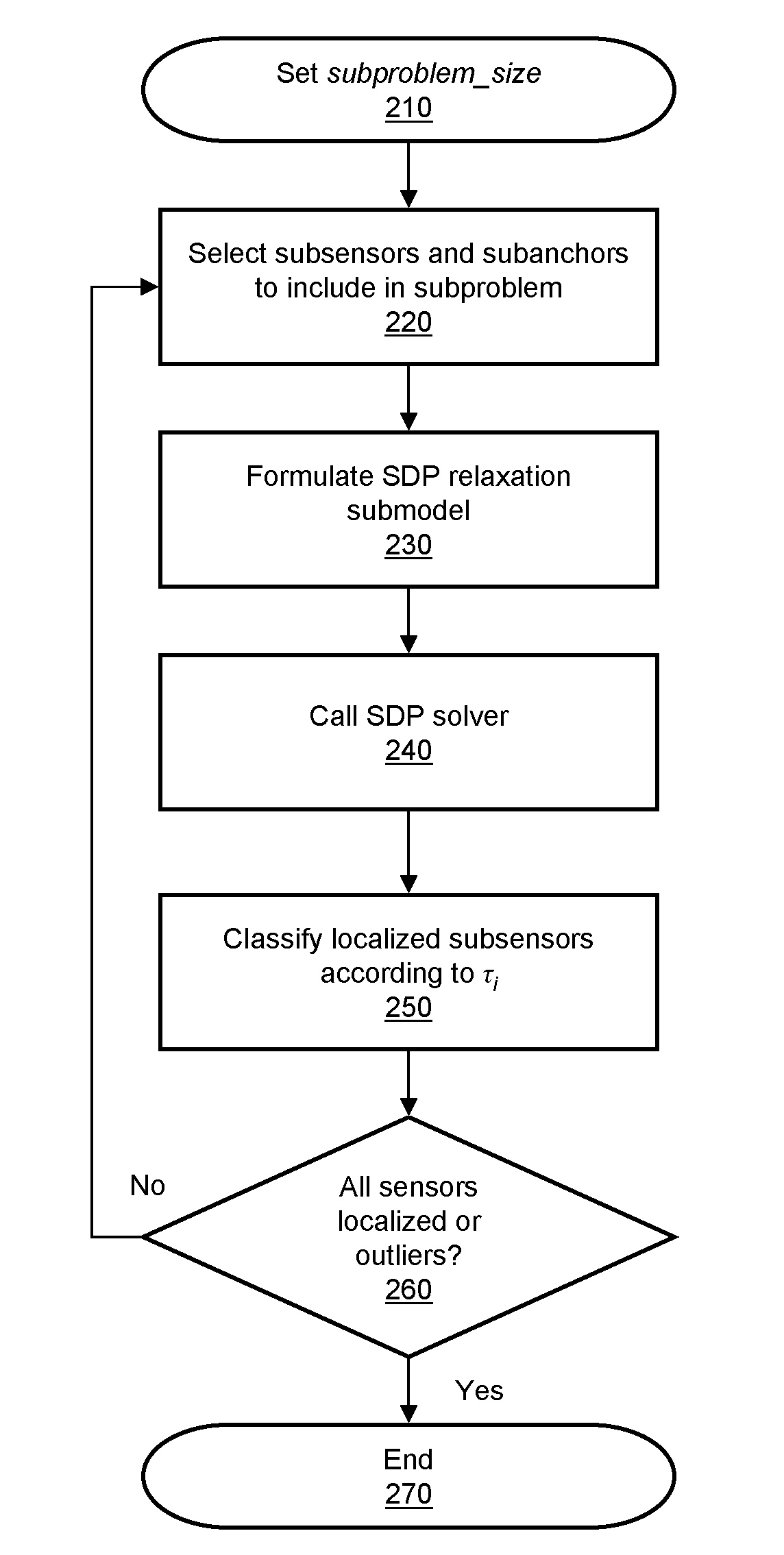

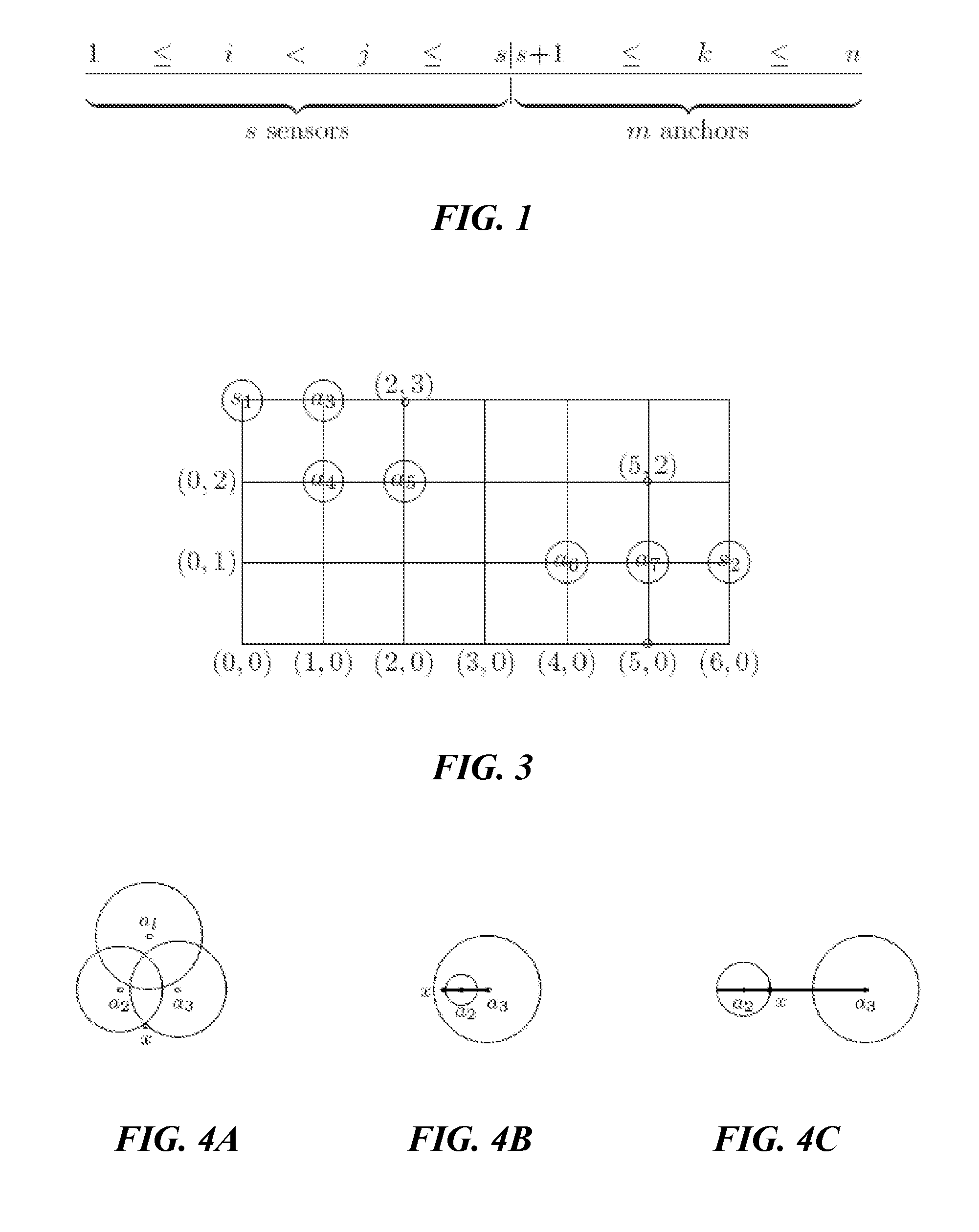

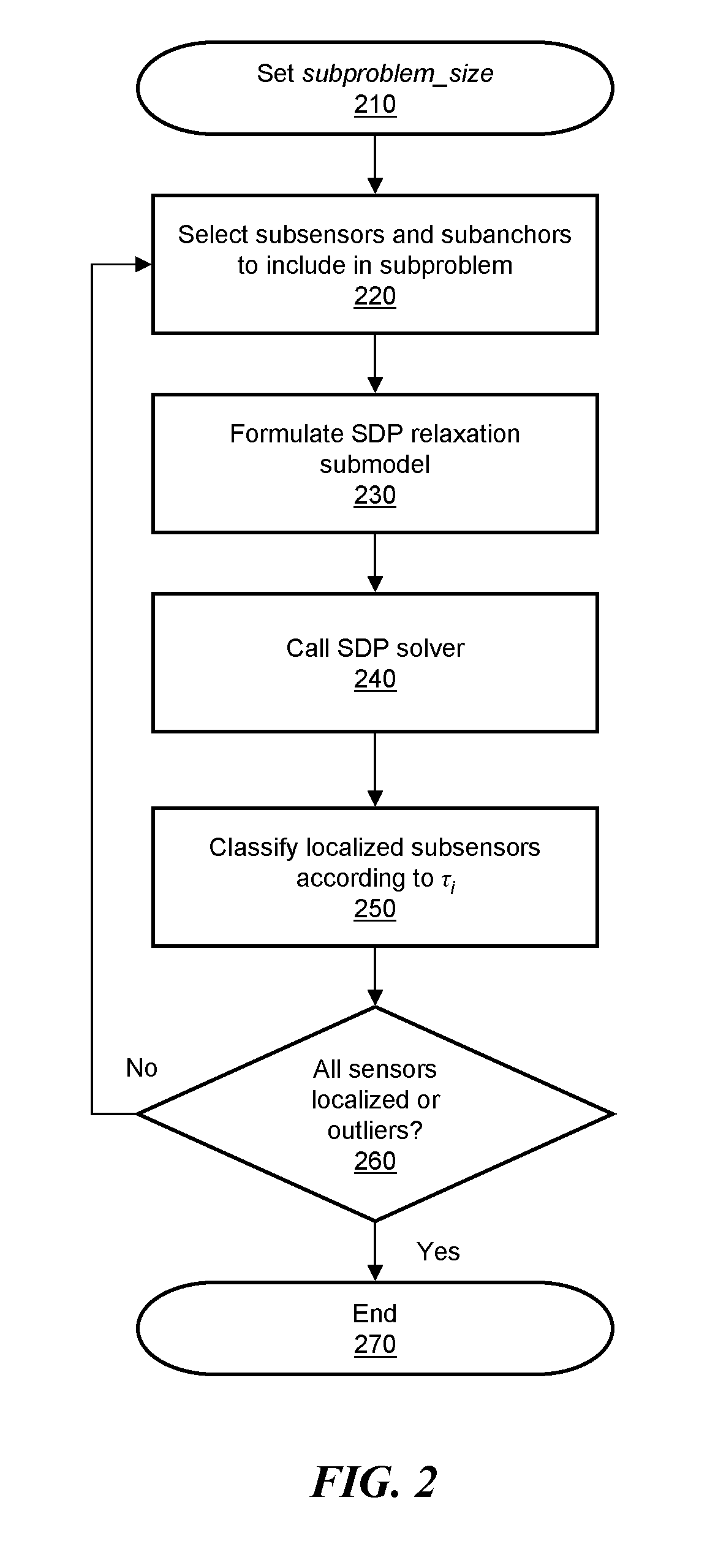

Scalable sensor localization for wireless sensor networks

Active · US20070005292A1 · Error minimization; Solve small or large problems more accurately; Network traffic/resource management; Network topologies; Wireless sensor networking; Computer science

Adaptive rule-based methods to solve localization problems for ad hoc wireless sensor networks are disclosed. A large problem may be solved as a sequence of very small subproblems, each of which is solved by semidefinite programming relaxation of a geometric optimization model. The subproblems may be generated according to a set of sensor/anchor selection rules and a priority list. The methods scale well and provide improved positioning accuracy. A dynamic version may be used to estimate the locations of moving sensors in a real-time environment; it may use dynamic distance-measurement updates among sensors and uses subproblem solving for static sensor localization. Methods to deploy sensor localization algorithms in clustered distributed environments are also provided, permitting application to arbitrarily large networks. In addition, the methods may be used to solve sensor localization in 2D or 3D space. A preprocessor may be used to localize networks without absolute position information.

Owner:THE BOARD OF TRUSTEES OF THE LELAND STANFORD JUNIOR UNIV
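
The abstract solves each subproblem by semidefinite programming relaxation, which is too involved for a short example. As a deliberately simpler stand-in, the sketch below estimates one sensor's 2D position from distances to at least three known anchors by linearized least squares: subtracting the first anchor's circle equation from each of the others leaves equations that are linear in x and y. It is not the patented SDP method, and `trilaterate_2d` is a hypothetical name.

```python
from typing import List, Tuple

Point2D = Tuple[float, float]

def trilaterate_2d(anchors: List[Point2D], dists: List[float]) -> Point2D:
    """Linearized least-squares position estimate from distances to known anchors."""
    if len(anchors) < 3 or len(anchors) != len(dists):
        raise ValueError("need at least three anchors with matching distances")
    (x0, y0), r0 = anchors[0], dists[0]
    # Each remaining anchor i gives one linear equation a*x + b*y = c,
    # obtained by subtracting anchor 0's circle equation from anchor i's.
    rows = []
    for (xi, yi), ri in zip(anchors[1:], dists[1:]):
        a = 2.0 * (xi - x0)
        b = 2.0 * (yi - y0)
        c = r0 ** 2 - ri ** 2 + xi ** 2 - x0 ** 2 + yi ** 2 - y0 ** 2
        rows.append((a, b, c))
    # Solve the 2x2 normal equations (A^T A) p = A^T c by Cramer's rule.
    saa = sum(a * a for a, _, _ in rows)
    sab = sum(a * b for a, b, _ in rows)
    sbb = sum(b * b for _, b, _ in rows)
    sac = sum(a * c for a, _, c in rows)
    sbc = sum(b * c for _, b, c in rows)
    det = saa * sbb - sab * sab
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear; position is ambiguous")
    x = (sac * sbb - sab * sbc) / det
    y = (saa * sbc - sab * sac) / det
    return x, y
```

With anchors at (0, 0), (4, 0) and (0, 4) and exact distances to (1, 2), the estimate is (1, 2); with noisy distances, the extra anchors are averaged in the least-squares sense.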

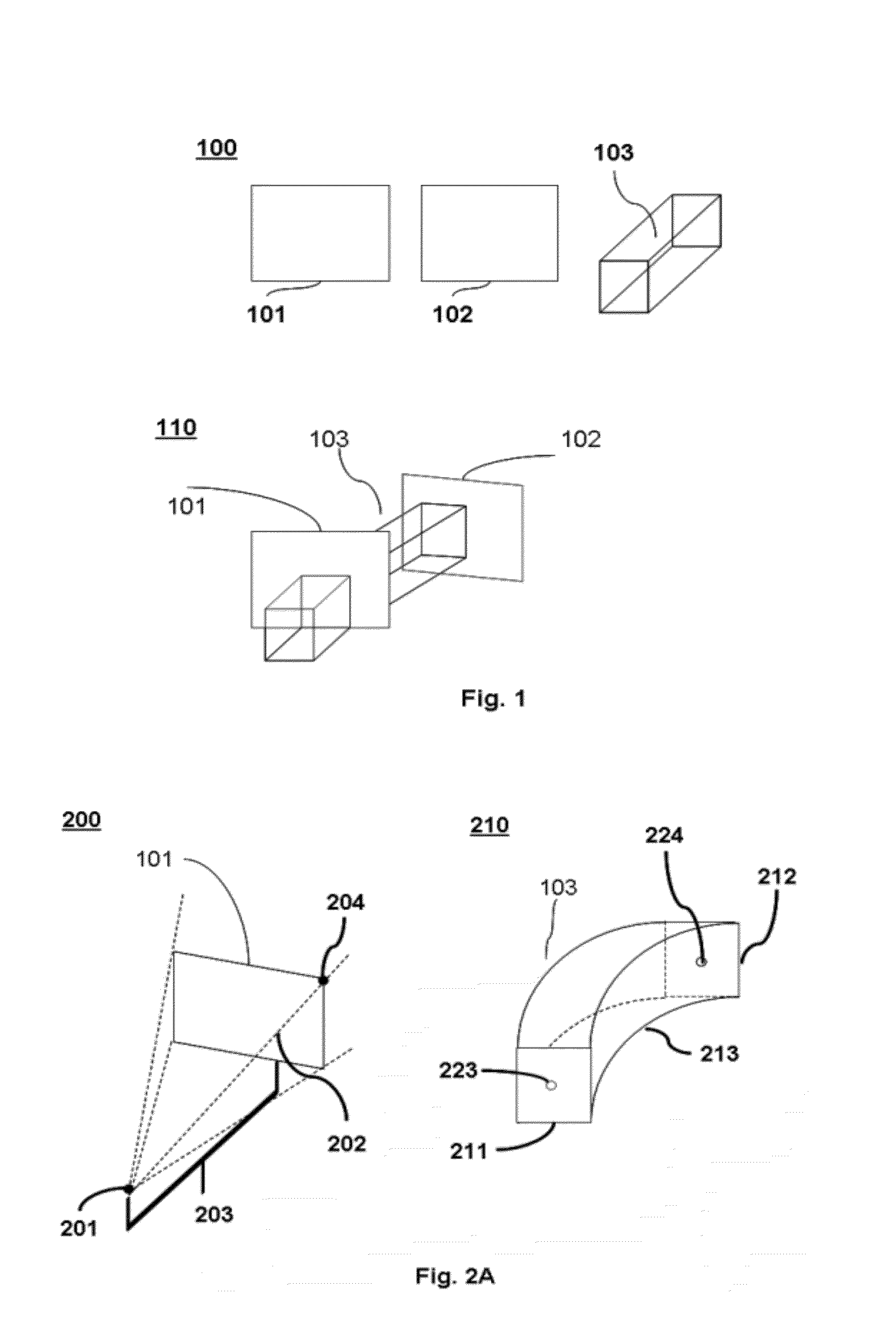

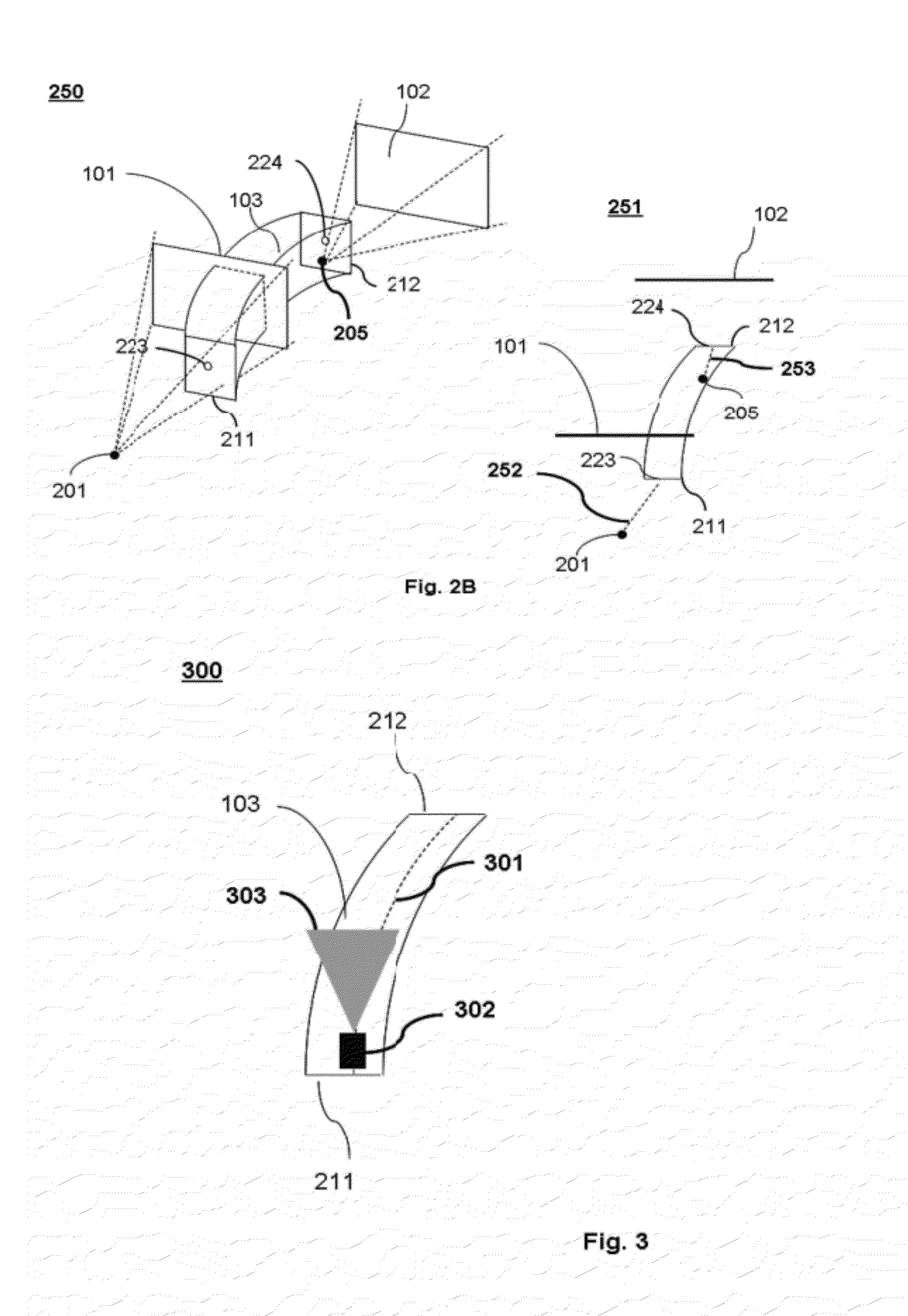

Generating Three-Dimensional Virtual Tours From Two-Dimensional Images

Active · US20120099804A1 · Increase impression; Good effect; Character and pattern recognition; Web data navigation; User input; Virtual camera

Interactive three-dimensional (3D) virtual tours are generated from ordinary two-dimensional (2D) still images such as photographs. Two or more 2D images are combined to form a 3D scene, which defines a relationship among the 2D images in 3D space. 3D pipes connect the 2D images with one another according to defined spatial relationships and for guiding virtual camera movement from one image to the next. A user can then take a 3D virtual tour by traversing images within the 3D scene, for example by moving from one image to another, either in response to user input or automatically. In various embodiments, some or all of the 2D images can be selectively distorted to enhance the 3D effect, and thereby reinforce the impression that the user is moving within a 3D space. Transitions from one image to the next can take place automatically without requiring explicit user interaction.

Owner:3DITIZE SL

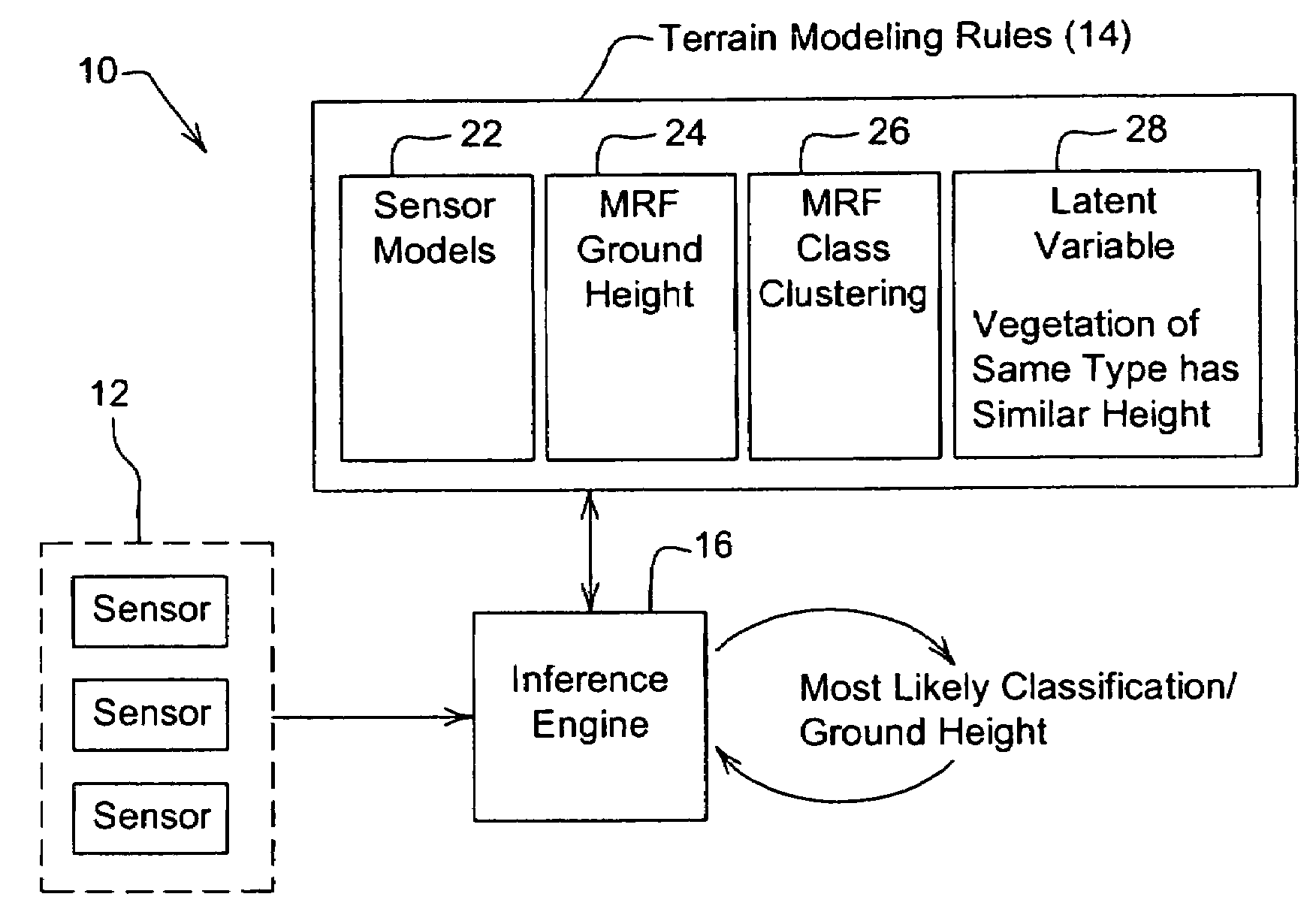

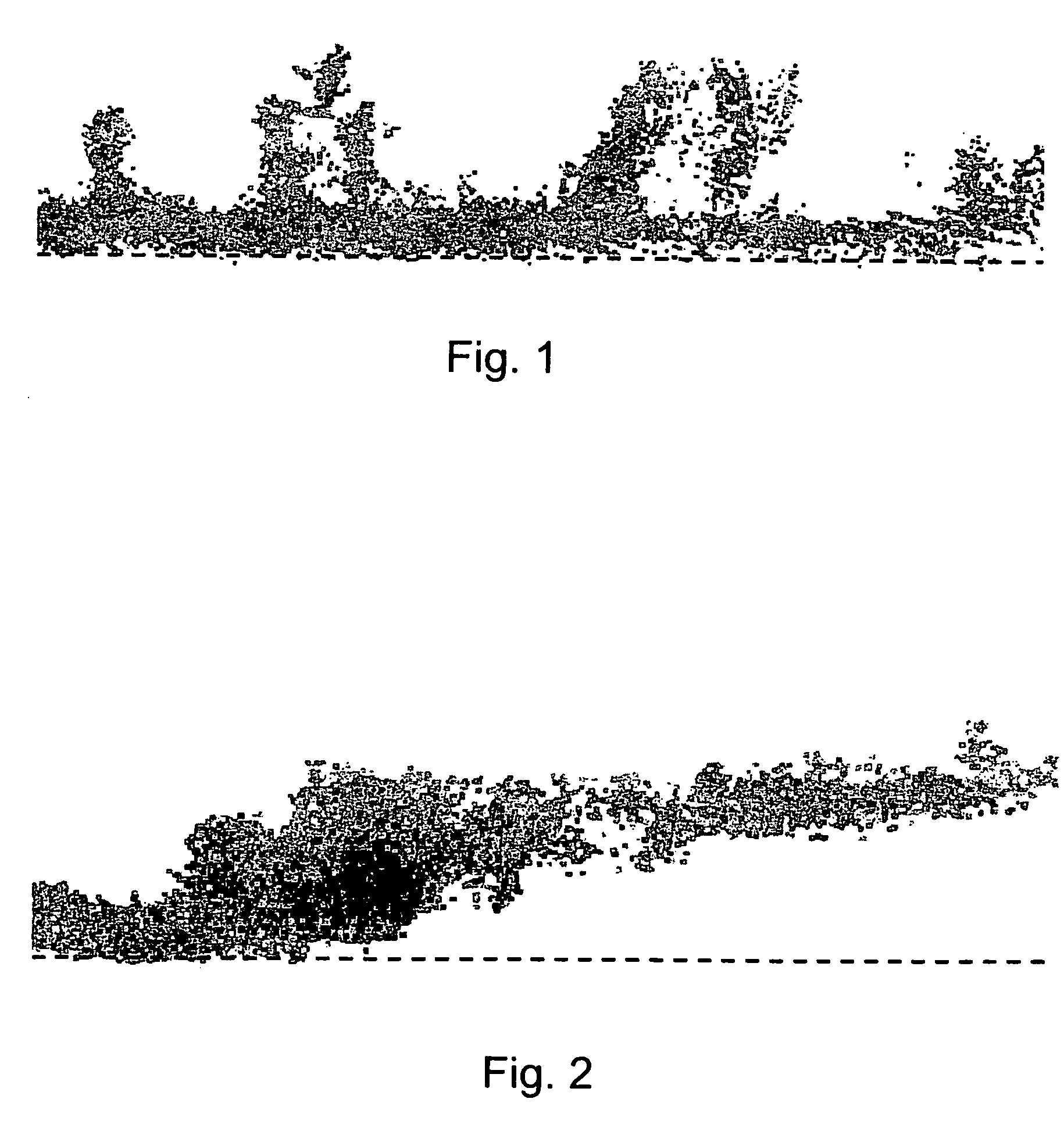

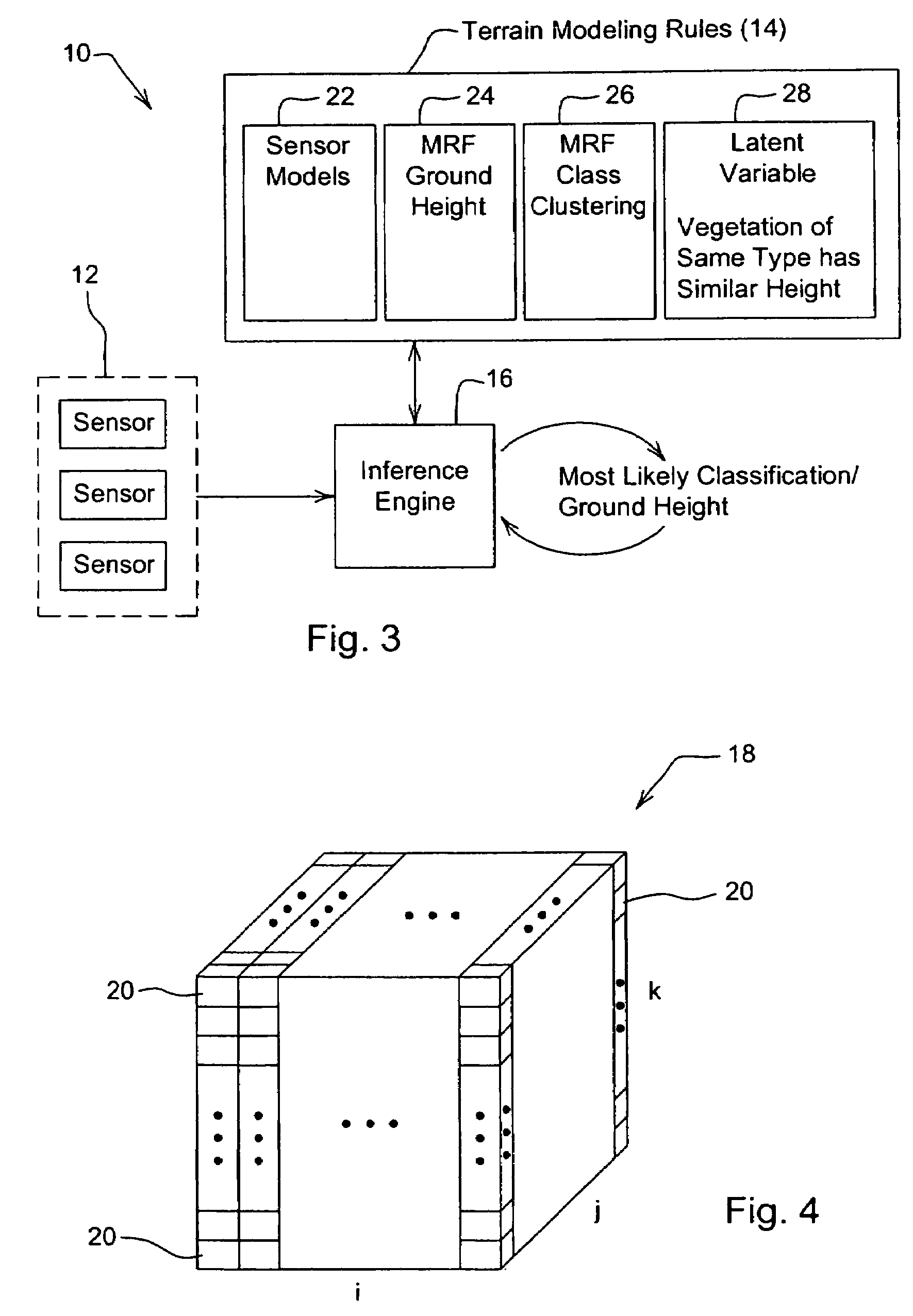

System and method for generating a terrain model for autonomous navigation in vegetation

Active · US20070280528A1 · Stable and robust navigation; Accurate estimate; Vehicle position/course/altitude control; Vehicles; Probabilistic method; Terrain

The disclosed terrain model is a generative, probabilistic approach to modeling terrain that exploits the 3D spatial structure inherent in outdoor domains and an array of noisy but abundant sensor data to simultaneously estimate ground height, vegetation height and classify obstacles and other areas of interest, even in dense non-penetrable vegetation. Joint inference of ground height, class height and class identity over the whole model results in more accurate estimation of each quantity. Vertical spatial constraints are imposed on voxels within a column via a hidden semi-Markov model. Horizontal spatial constraints are enforced on neighboring columns of voxels via two interacting Markov random fields and a latent variable. Because of the rules governing abstracts, this abstract should not be used to construe the claims.

Owner:CARNEGIE MELLON UNIV

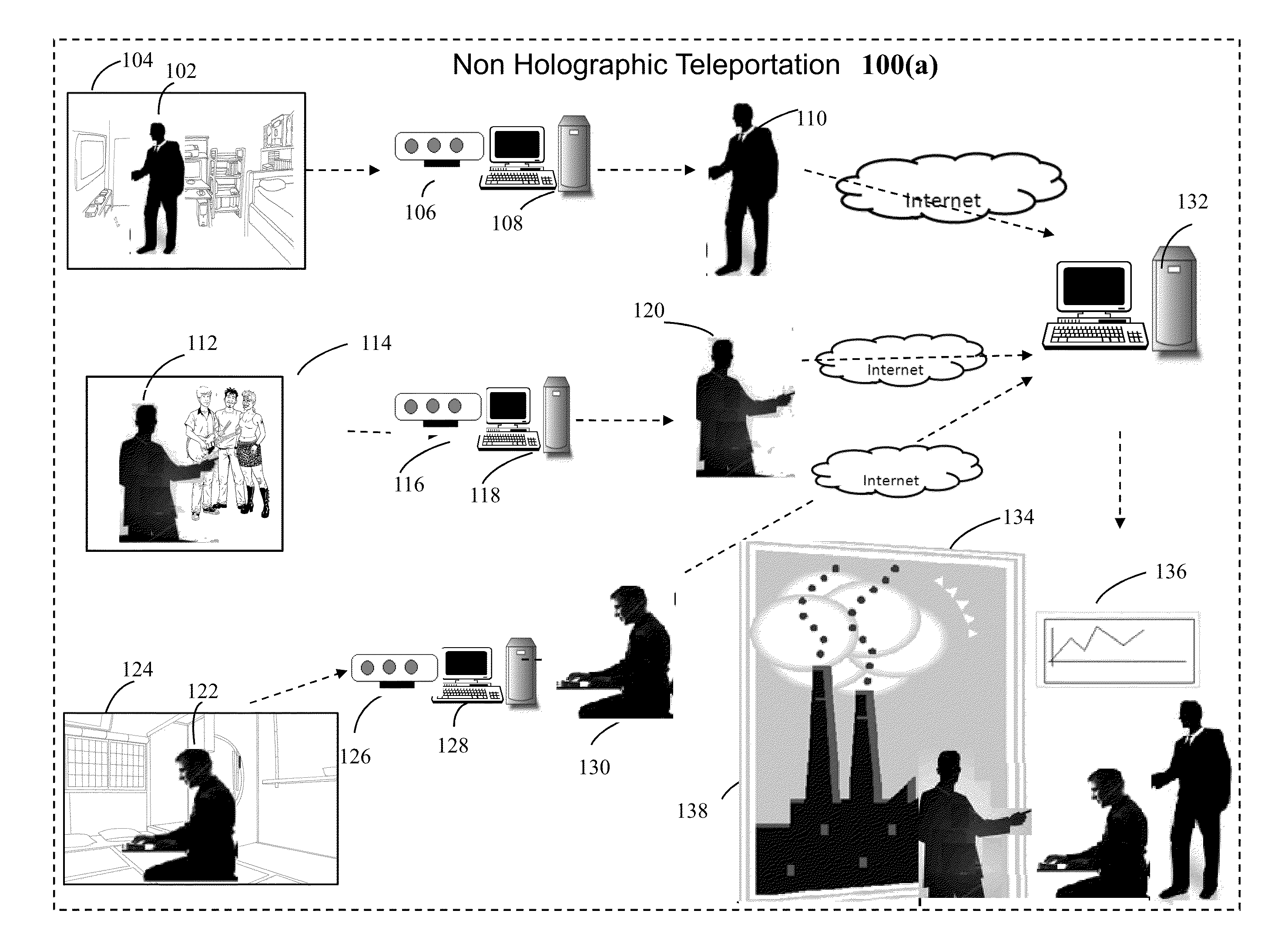

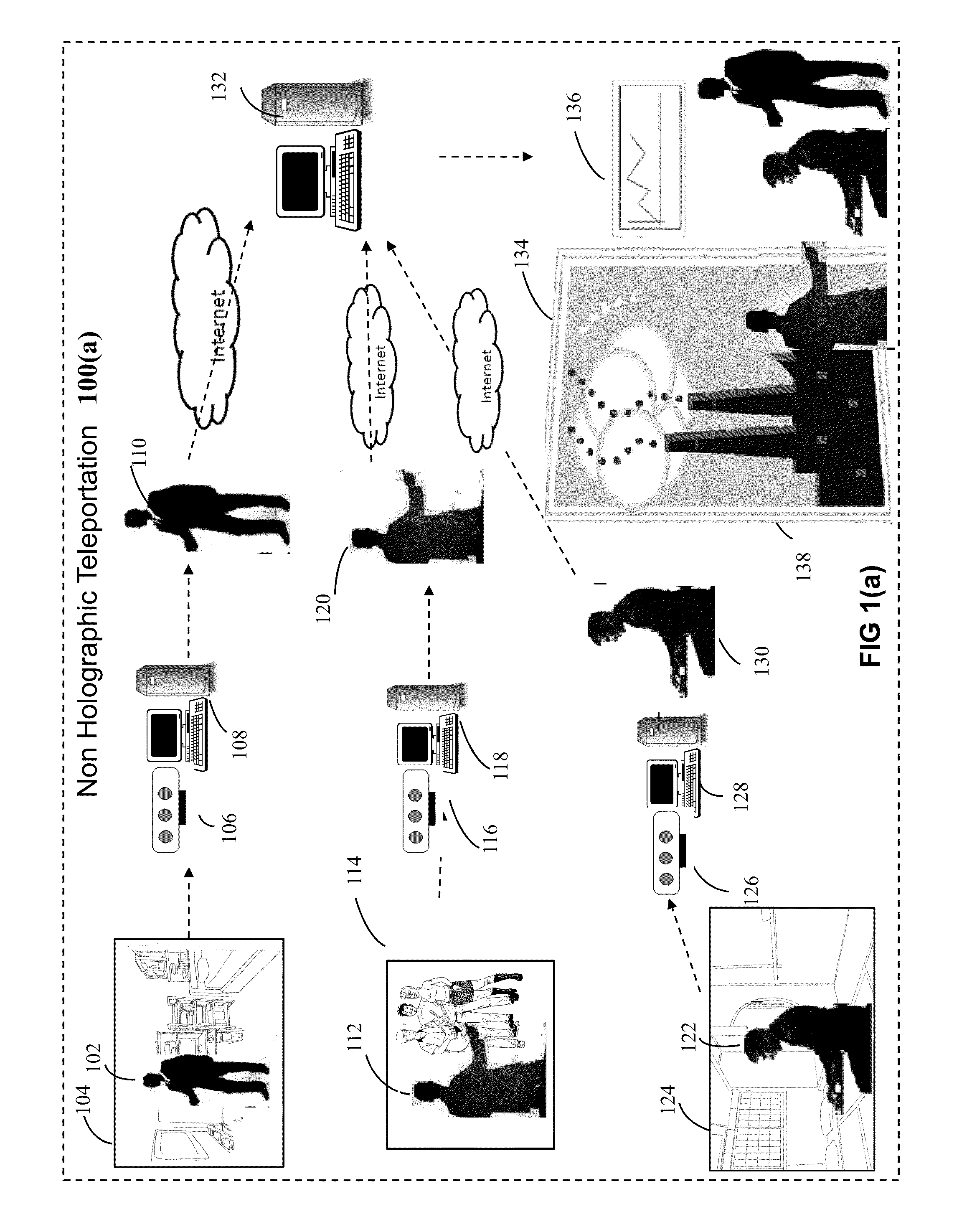

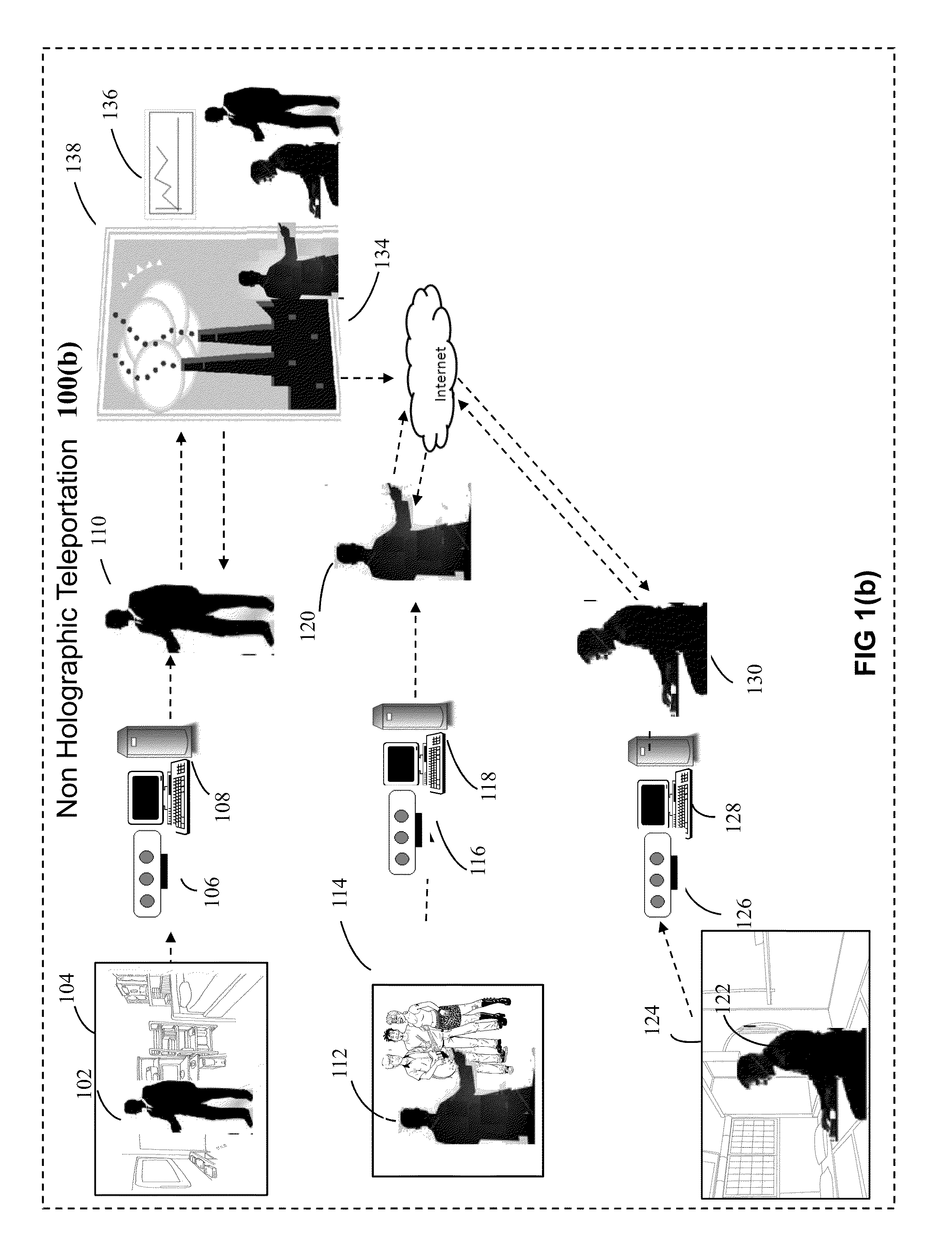

System and method for non-holographic teleportation

Inactive · US20150091891A1 · Easy to install; Effective and accessible for mass; Amusements; Television systems; Virtual training; RGB image

The present invention discloses a system and method for non-holographic virtual teleportation of one or more remote objects, in real time, to a designated three-dimensional space around a user. This is achieved with a plurality of participating teleportation terminals at geographically diverse locations that capture the RGB and depth data of their respective environments, extract RGB images of target objects from those environments, and transmit the alpha-channeled object images via the Internet for integration into a single composite scene, to which layers of computer graphics are added in the foreground and in the background. The invention thus creates a virtual 3D space around a user into which almost anything imaginable can be virtually teleported for user interaction. The invention has applications in intuitive computing, perceptual computing, entertainment, gaming, virtual meetings, conferencing, gesture-based interfaces, advertising, e-commerce, social networking, virtual training, education, and so on.

Owner:DUMEDIA
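
The composite scene described above can be illustrated per pixel with the standard Porter-Duff "over" operator, stacking layers from back to front (background graphics, then the alpha-channeled object images, then foreground graphics). The sketch assumes straight (non-premultiplied) alpha in the range 0 to 1 and an opaque background layer; the layer order and function names are illustrative.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]
RGBA = Tuple[float, float, float, float]

def over(src: RGBA, dst: RGB) -> RGB:
    """Porter-Duff 'over' of a straight-alpha pixel onto an opaque pixel."""
    r, g, b, a = src
    return (r * a + dst[0] * (1 - a),
            g * a + dst[1] * (1 - a),
            b * a + dst[2] * (1 - a))

def composite_pixel(background: RGB, layers: List[RGBA]) -> RGB:
    """Stack layers back to front, e.g. teleported objects, then foreground graphics."""
    out = background
    for layer in layers:
        out = over(layer, out)
    return out
```

A half-transparent red object pixel over a blue background gives (0.5, 0, 0.5), and a fully transparent foreground pixel on top leaves that result unchanged.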

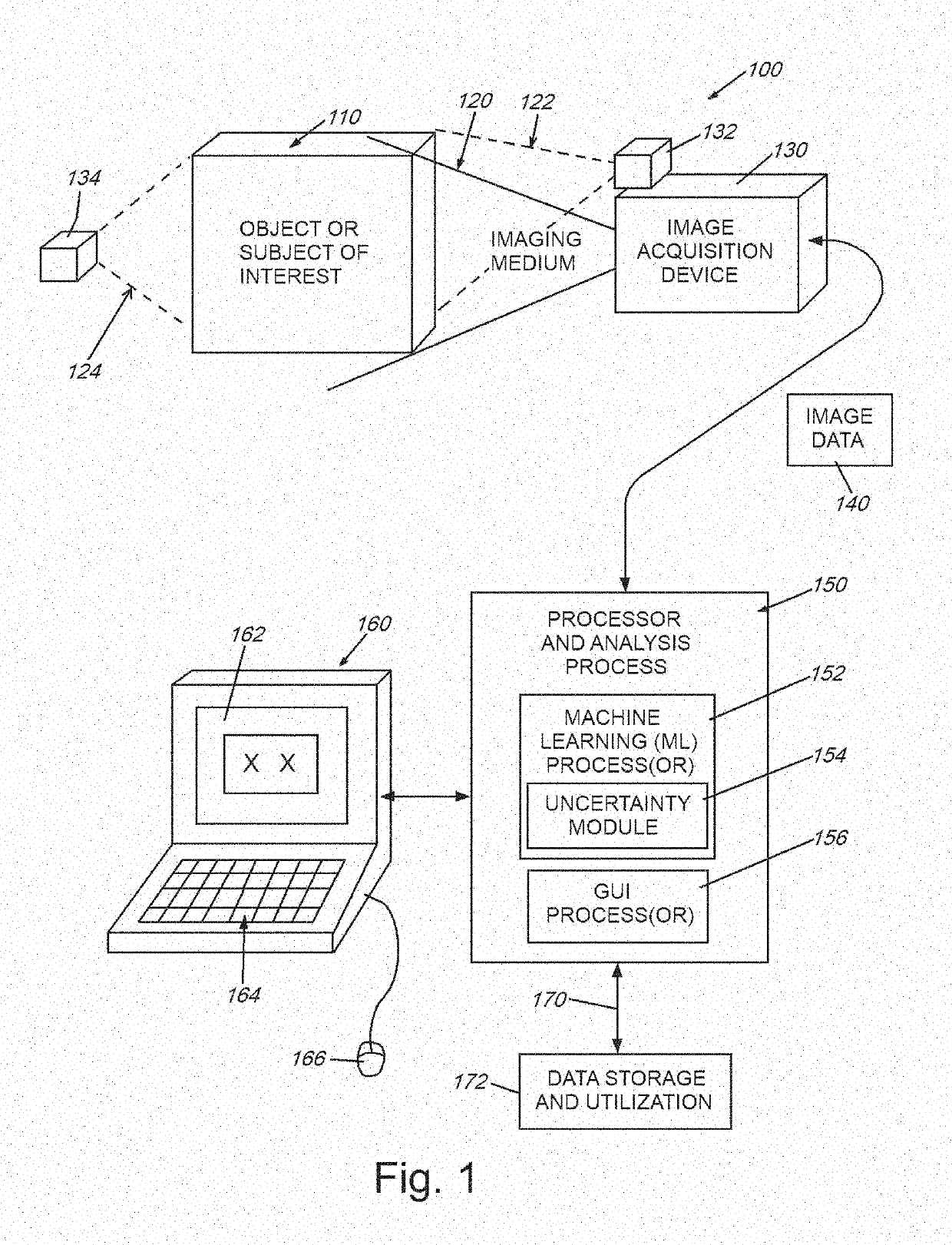

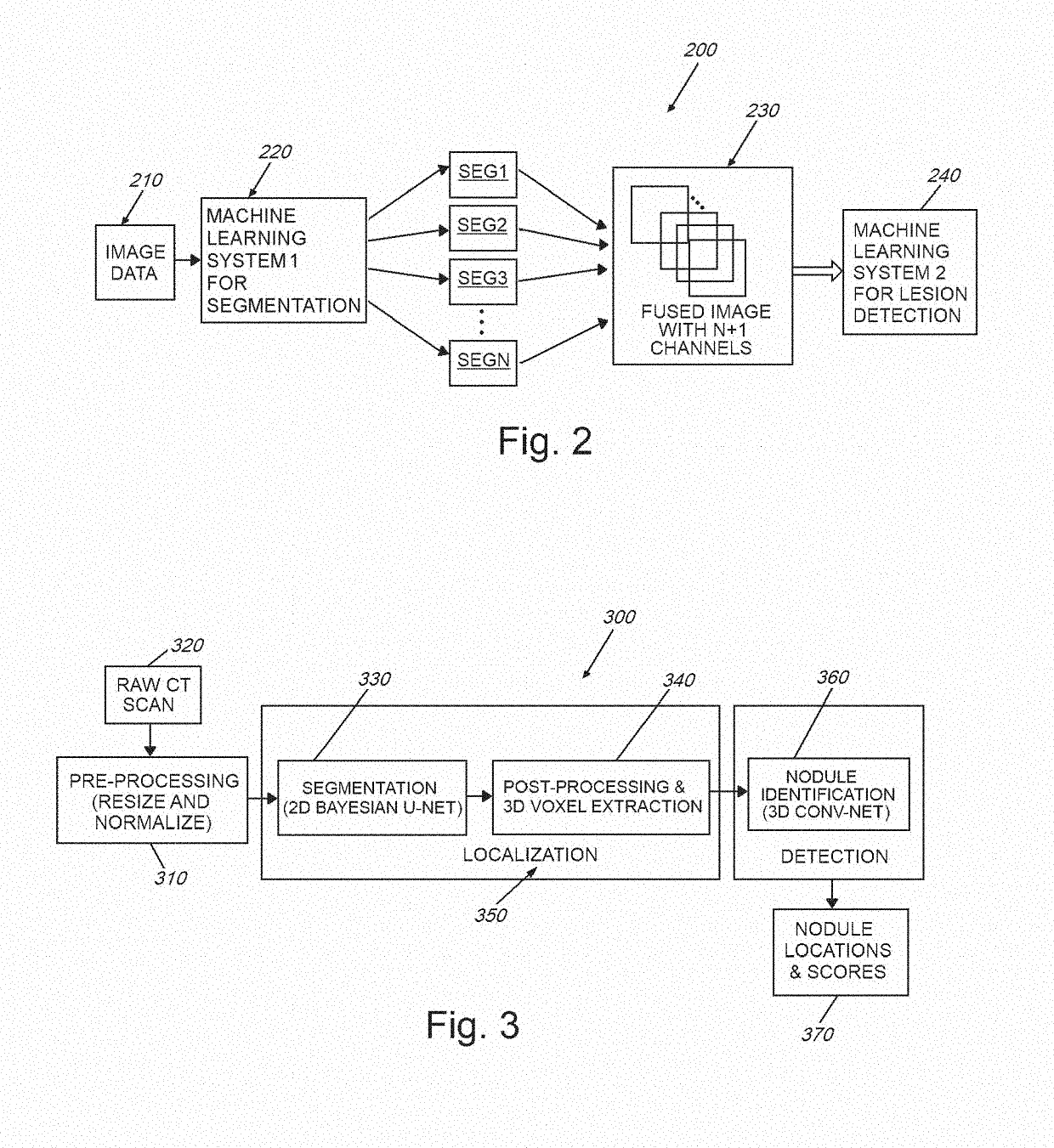

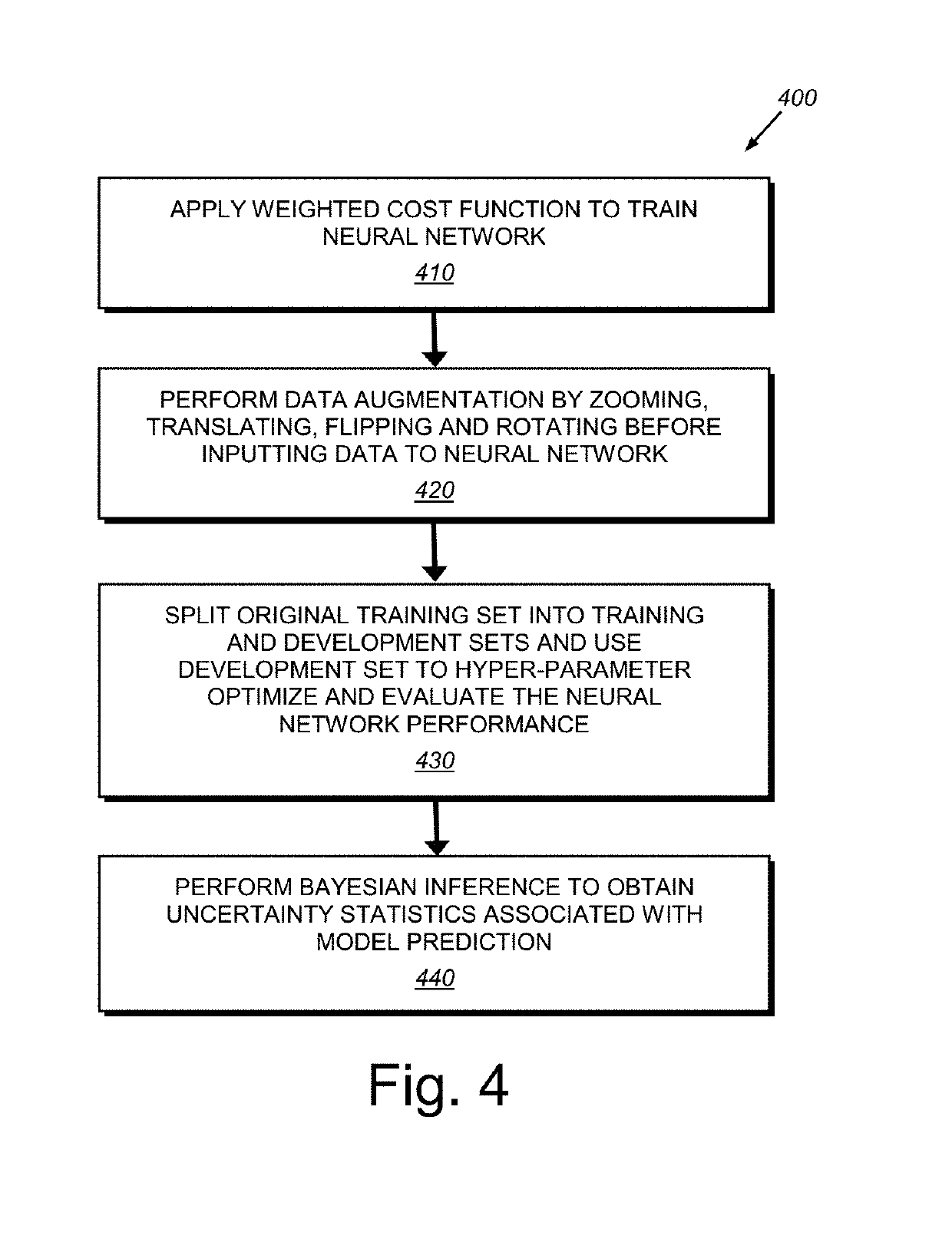

System and method for quantifying uncertainty in reasoning about 2D and 3D spatial features with a computer machine learning architecture

Inactive · US20190122073A1 · Efficient use of; Overcome disadvantages; Image enhancement; Image analysis; Data set; Propagation of uncertainty

This invention provides a system and method to propagate uncertainty information in a deep learning pipeline. It allows for the propagation of uncertainty information from one deep learning model to the next by fusing model uncertainty with the original imagery dataset. This approach results in a deep learning architecture where the output of the system contains not only the prediction, but also the model uncertainty information associated with that prediction. The embodiments herein improve upon existing deep learning-based models (CADe models) by providing the model with uncertainty / confidence information associated with (e.g. CADe) decisions. This uncertainty information can be employed in various ways, including (a) transmitting uncertainty from a first stage (or subsystem) of the machine learning system into a next (second) stage (or the next subsystem), and (b) providing uncertainty information to the end user in a manner that characterizes the uncertainty of the overall machine learning model.

Owner:CHARLES STARK DRAPER LABORATORY
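
The abstract does not say how model uncertainty is estimated. One common assumption is to run several stochastic forward passes (for example with Monte Carlo dropout) and summarize their spread. The sketch below computes the mean class probabilities and the predictive entropy of such samples, a per-prediction uncertainty value that a next stage could consume alongside the image data. The function name and input layout are hypothetical.

```python
import math
from typing import List, Tuple

def predictive_uncertainty(samples: List[List[float]]) -> Tuple[List[float], float]:
    """Mean class probabilities and predictive entropy (nats) over sampled predictions."""
    if not samples:
        raise ValueError("need at least one sampled prediction")
    n, k = len(samples), len(samples[0])
    mean = [sum(s[c] for s in samples) / n for c in range(k)]
    # Entropy of the averaged distribution: 0 when certain, log(k) when uniform.
    entropy = -sum(p * math.log(p) for p in mean if p > 0)
    return mean, entropy
```

Two samples `[0.9, 0.1]` and `[0.7, 0.3]` average to `[0.8, 0.2]` with an entropy of about 0.50 nats, while a uniform two-class prediction reaches the maximum of log 2, about 0.69.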

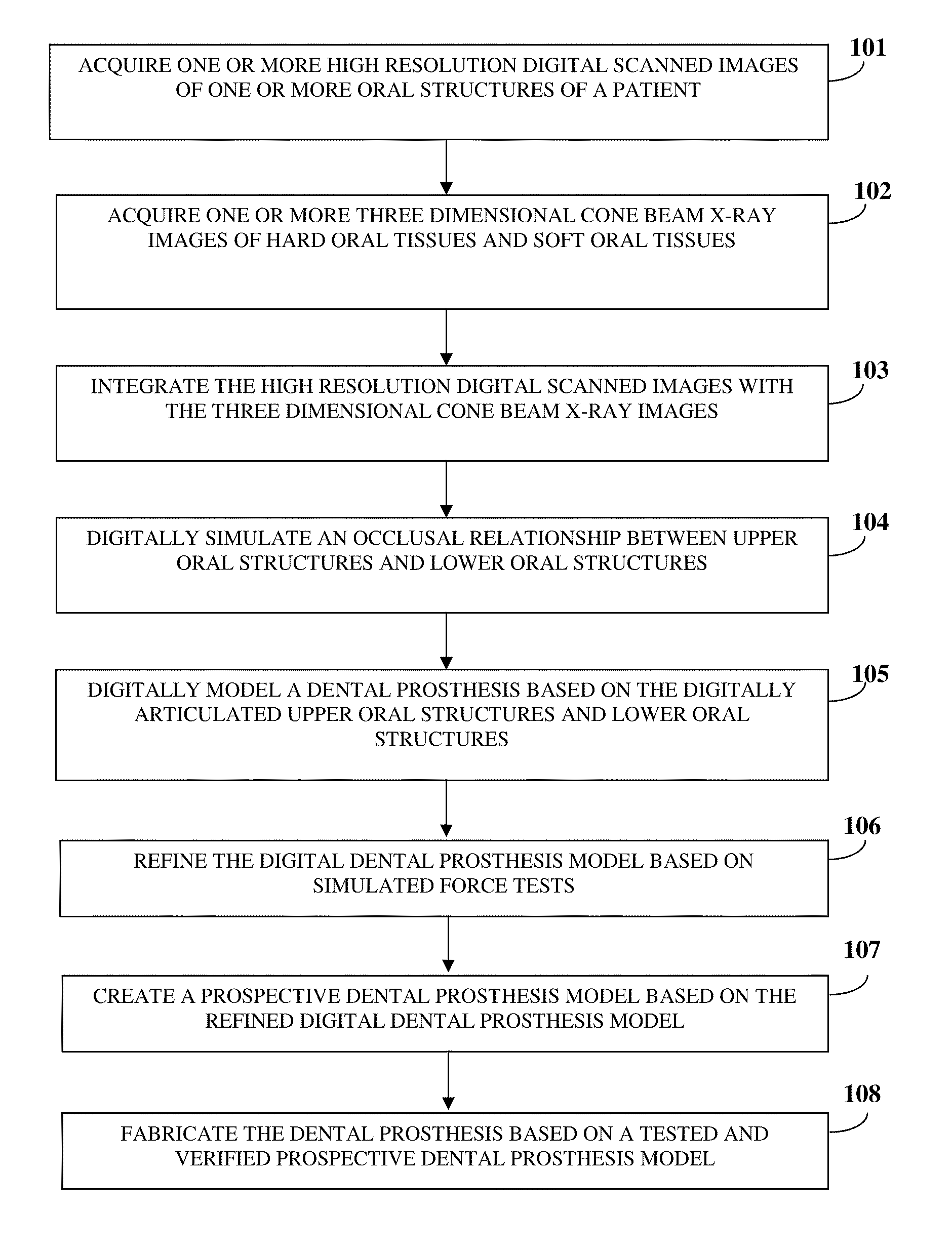

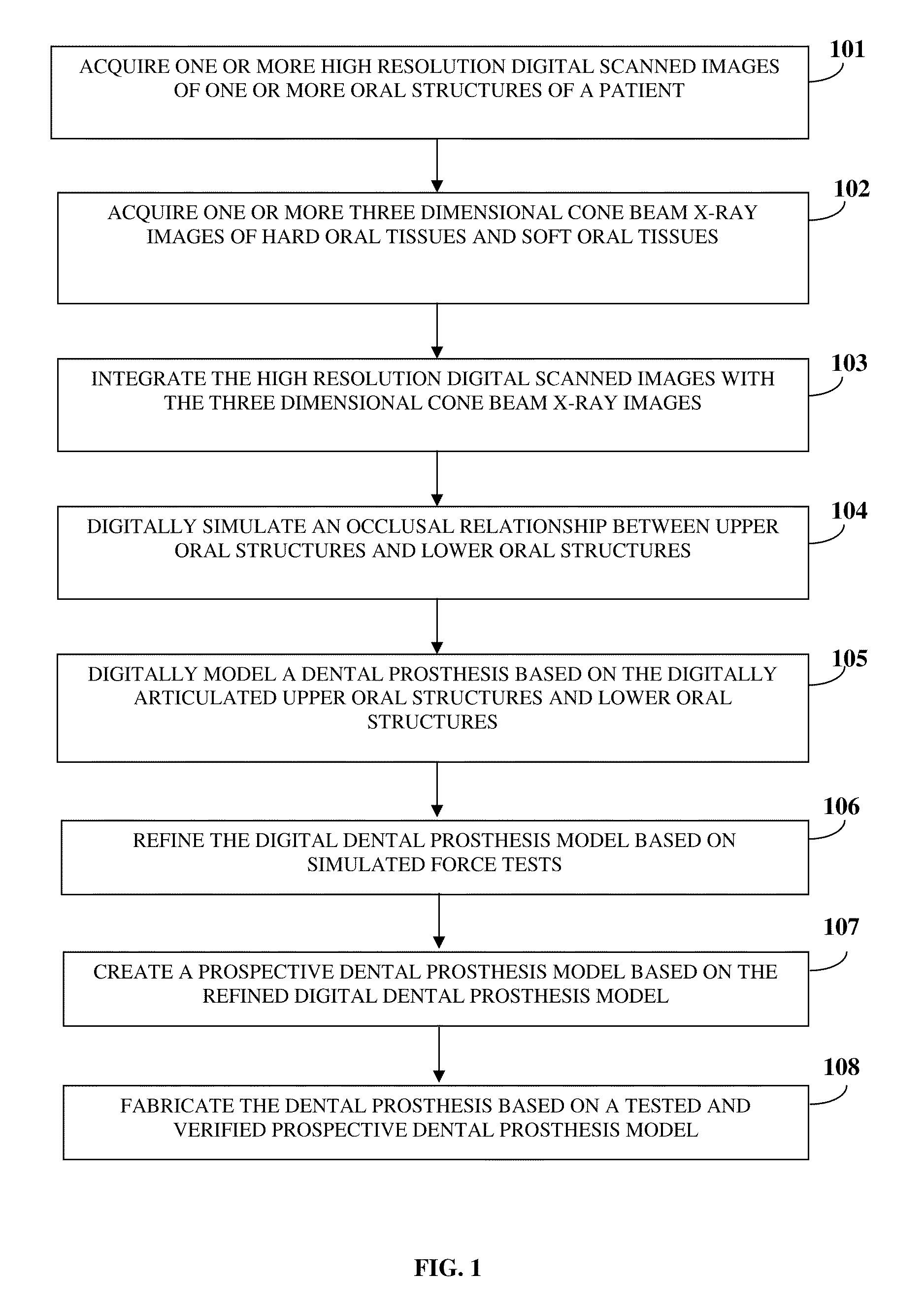

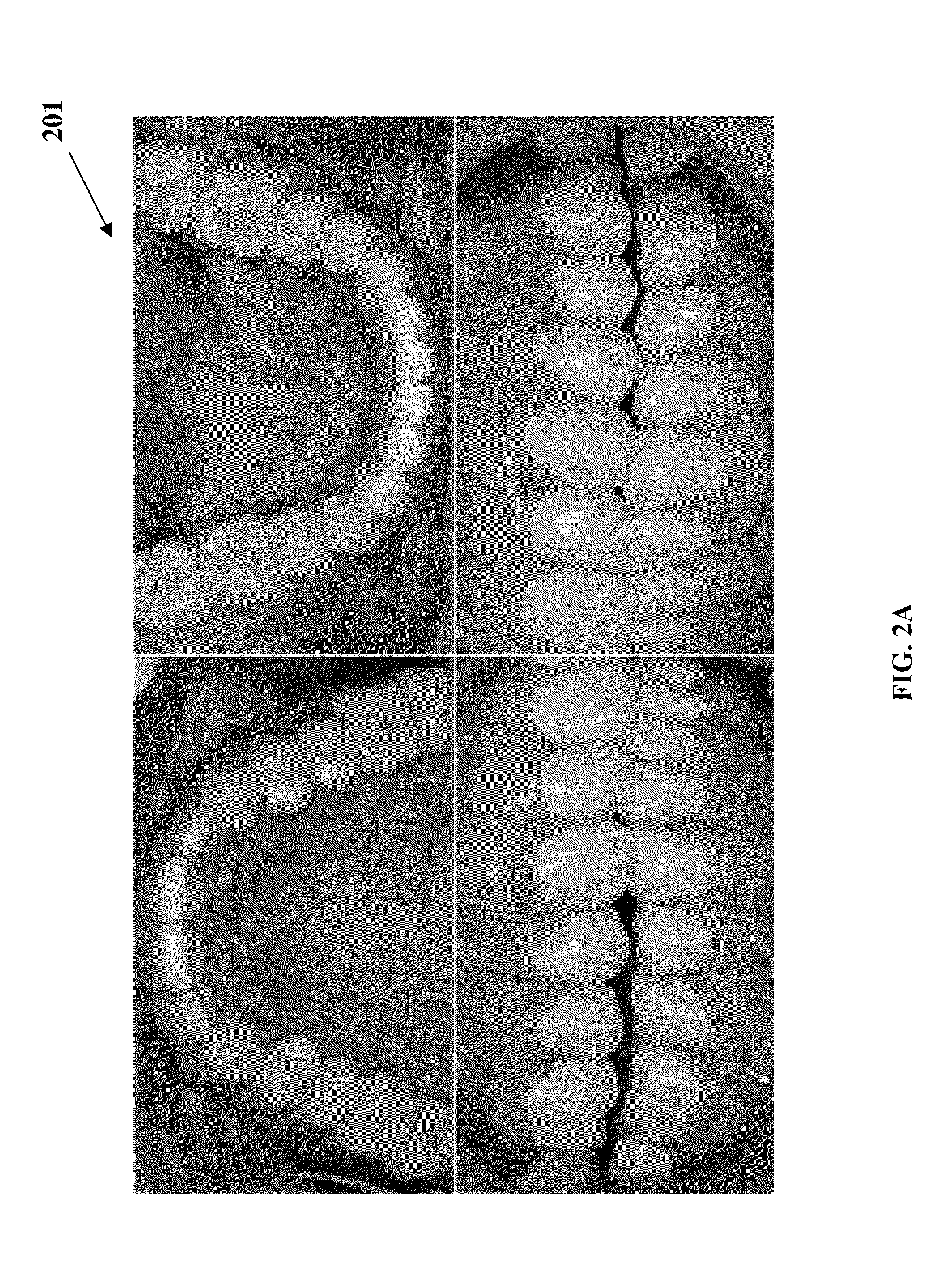

Computer-aided Fabrication Of A Removable Dental Prosthesis

Inactive · US20110276159A1 · Maximizing retention and function; Highly accurate model-less; Additive manufacturing apparatus; Dental articulators; X-ray; Computer aid

A method and system for fabricating a dental prosthesis are provided. High-resolution digital scanned images of a patient's oral structures are acquired, as are three-dimensional (3D) cone-beam X-ray images of hard and soft oral tissues. The scanned images are integrated with the 3D cone-beam X-ray images in a 3D space to obtain combined three-dimensional images of the oral structures. The occlusal relationship between the upper and lower oral structures is digitally simulated using the combined three-dimensional images. The dental prosthesis is digitally modeled to plan its intra-oral positioning and structure. The digital dental prosthesis model is refined based on simulated force tests that assess its interference and retention. A prospective dental prosthesis model is created from the refined digital model, and the dental prosthesis is fabricated from a verified prospective dental prosthesis model.

Owner:HANKOOKIN

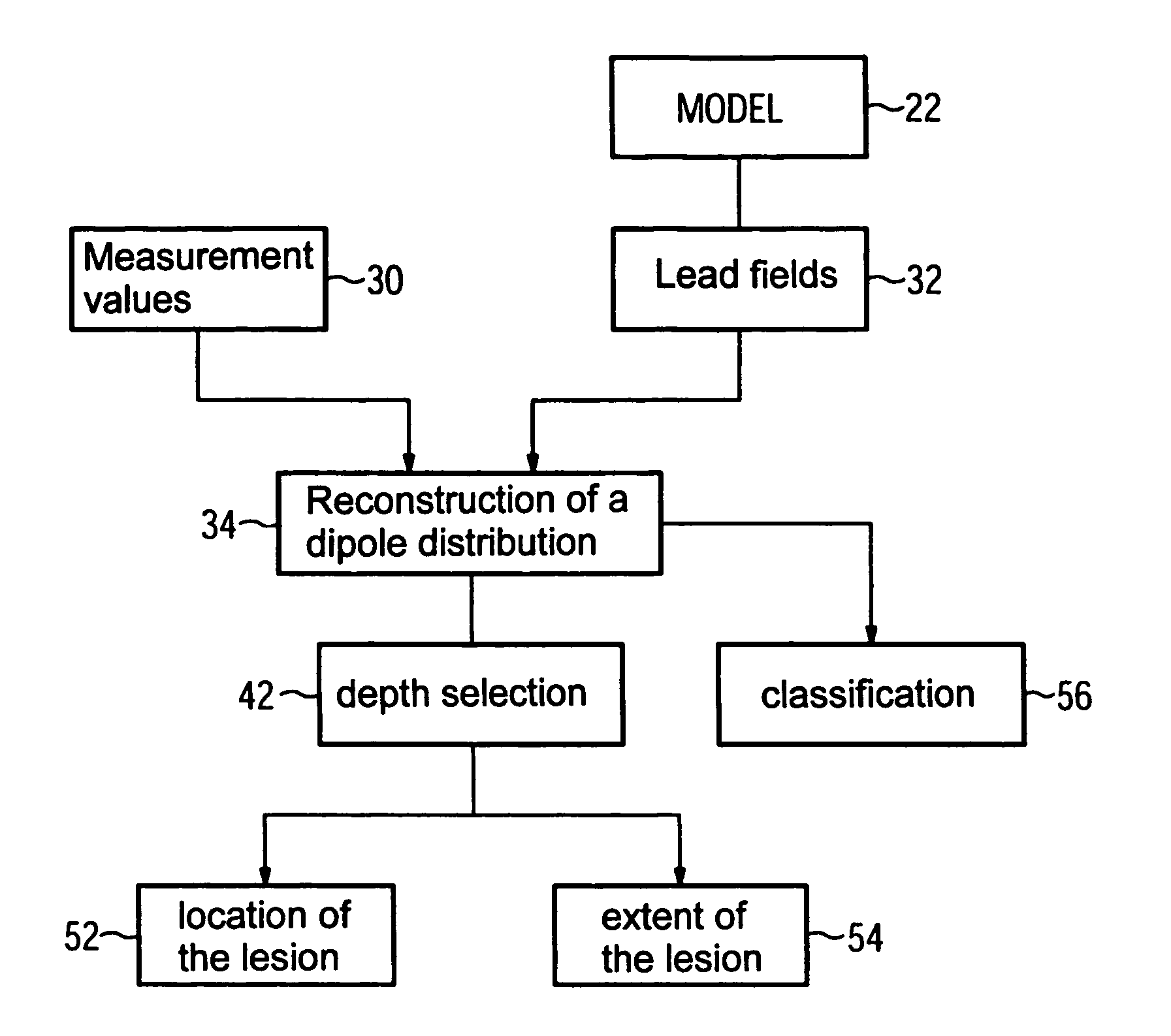

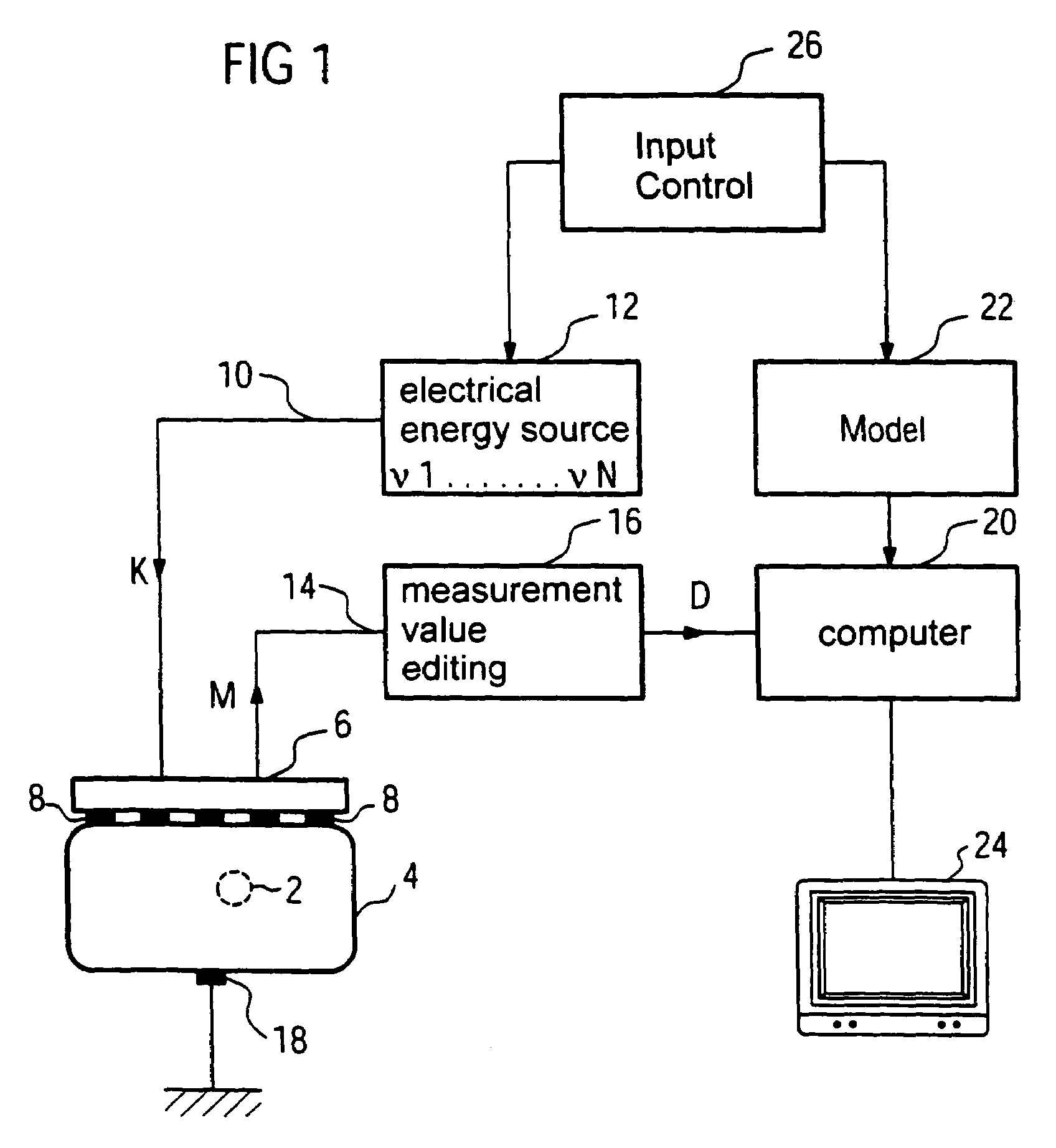

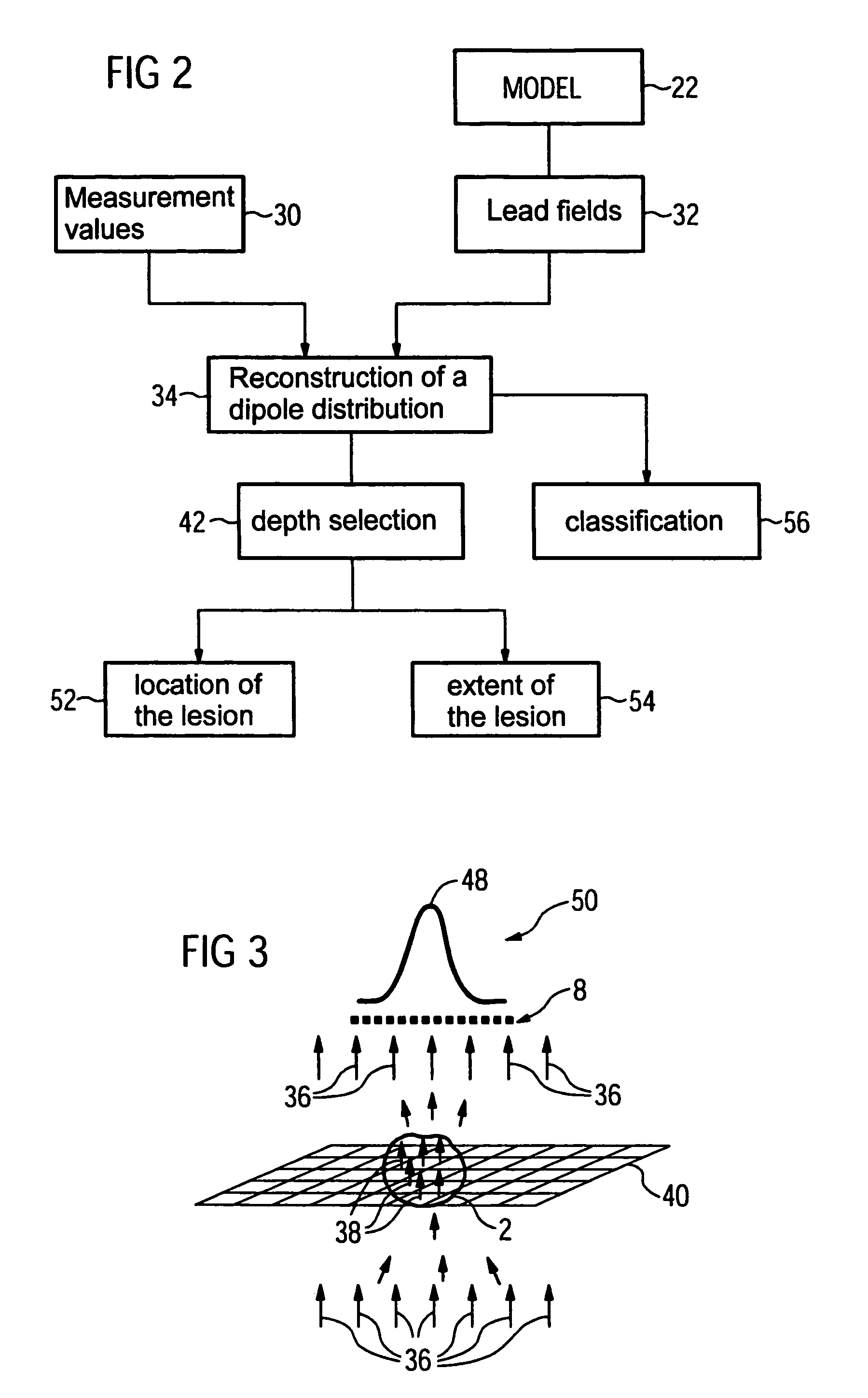

Apparatus for localizing a focal lesion in a biological tissue section

An apparatus for localizing a focal lesion in a biological tissue section applies electrical excitation signals to the tissue section and measures electrical response signals at a number of measurement locations on a surface of the tissue section that arise there due to the excitation signals. A computer reconstructs a distribution of electrical dipole moments from the response signals, this distribution of dipole moments overall best reproducing the response signals, and supplies the 3D spatial position of the distribution as an output.

Owner:SIEMENS HEALTHCARE GMBH

© 2025 PatSnap. All rights reserved.