DNN task offloading method and terminal in an edge-cloud hybrid computing environment

A task offloading technology for hybrid computing, applied in computing, energy-saving computing, neural learning methods, etc. It addresses problems such as long response time, delay, and network congestion, and achieves accurate cost estimation, ensured feasibility, and reduced cost.


Examples

Embodiment 1

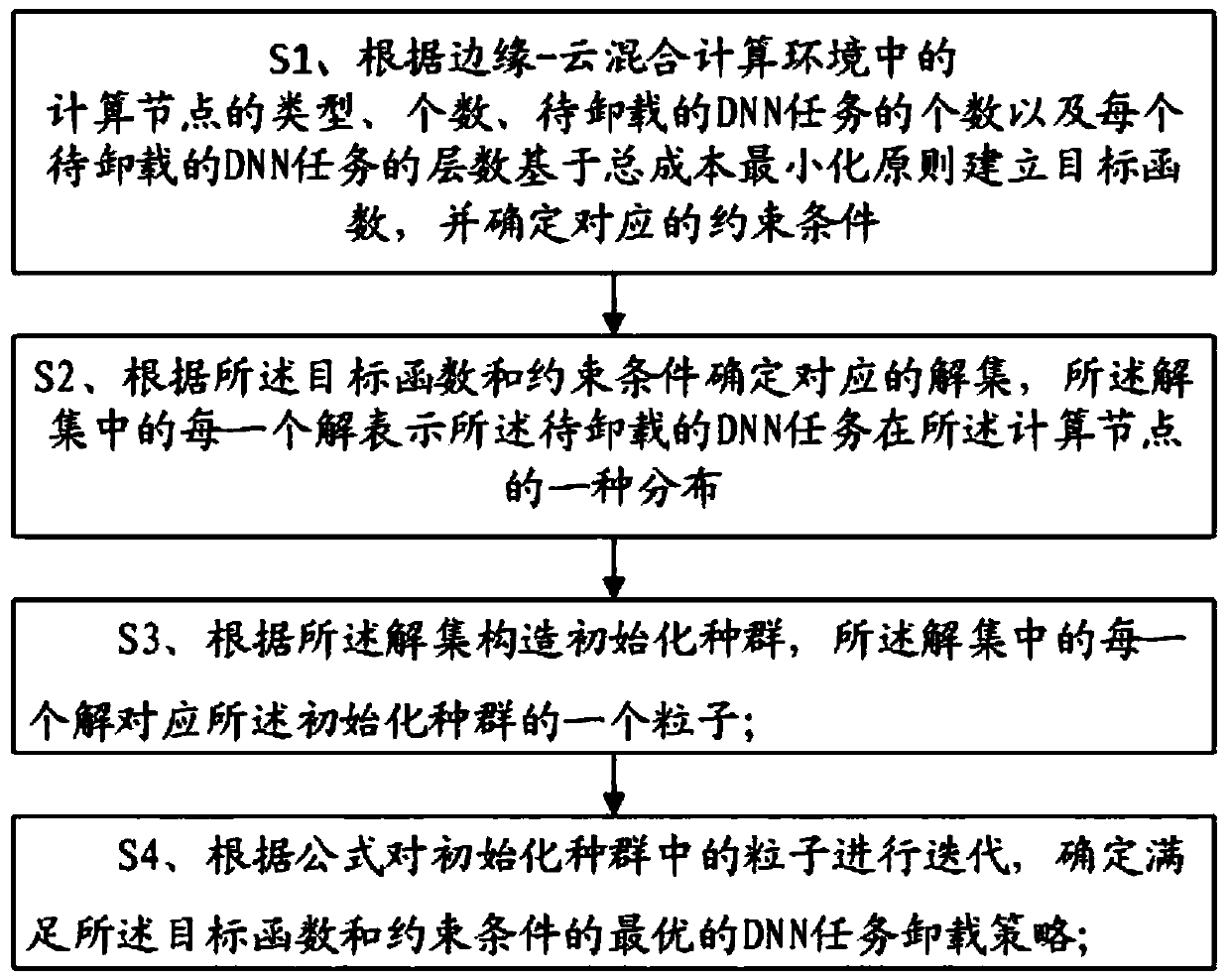

[0105] Referring to Figure 1, Embodiment 1 of the present invention is:

[0106] A DNN task offloading method in an edge-cloud hybrid computing environment, specifically comprising:

[0107] S1. According to the type and number of computing nodes in the edge-cloud hybrid computing environment, the number of DNN tasks to be offloaded, and the number of layers of each DNN task to be offloaded, an objective function is established based on the principle of total cost minimization, and the corresponding constraints are determined;

[0108] First, construct the DNN task offloading system model in the edge-cloud hybrid environment:

[0109] $T=\{t_1, t_2, \ldots, t_n\}$ represents the set of all tasks. $t_i=\{t_{i,1}, t_{i,2}, \ldots, t_{i,n}\}$ represents a specific DNN task, $g_i$ denotes the node that generates task $t_i$, $a_i$ denotes the generation time of task $t_i$, and $dl_i$ denotes the deadline of $t_i$. $t_{i,j}$ denotes the j-th layer of DNN task $t_i$. For each layer $t_{i,j}$ of DNN task $t_i$, da...
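The task notation in [0109] can be pictured as a small data structure. The following is a minimal sketch, not the patent's implementation: the class name `DNNTask`, its field names, and the example values are assumptions made for illustration, and the per-layer attributes are left opaque because the text describing them is truncated above.

```python
from dataclasses import dataclass, field
from typing import Any, List


@dataclass
class DNNTask:
    """One DNN task t_i from the task set T (names are hypothetical)."""
    source_node: int       # g_i: node that generates the task
    arrival_time: float    # a_i: generation time of the task
    deadline: float        # dl_i: deadline of the task
    # t_{i,1}, t_{i,2}, ...: the layers of the task; their attributes are
    # not specified here, so each layer is kept as an opaque value.
    layers: List[Any] = field(default_factory=list)

    def layer(self, j: int) -> Any:
        """Return layer t_{i,j}, using the 1-based index j from the text."""
        return self.layers[j - 1]


# T = {t_1, t_2}: a toy task set with made-up values.
tasks = [
    DNNTask(source_node=0, arrival_time=0.0, deadline=5.0,
            layers=["conv1", "conv2", "fc"]),
    DNNTask(source_node=2, arrival_time=1.5, deadline=4.0,
            layers=["conv1", "fc"]),
]
```

Here `tasks[0].layer(2)` corresponds to $t_{1,2}$, the second layer of the first task.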

Embodiment 2

[0131] A DNN task offloading method in an edge-cloud hybrid computing environment, which differs from Embodiment 1 in that:

[0132] The calculation of pBest in step S4 is specifically:

[0133] Calculate the optimal solution $pBest_i^t$ of the i-th particle after t iterations;

[0134] According to the preset fitness function, calculate the fitness values of the i-th particle in the t-th iteration and the (t-1)-th iteration, obtaining the fitness value of $pBest_i^t$ and the fitness value of $pBest_i^{t-1}$ respectively;

[0135] Compare the fitness value of $pBest_i^t$ with the fitness value of $pBest_i^{t-1}$; if the current fitness value is less than the fitness value of $pBest_i^{t-1}$, then $pBest_i^t = pBest_i^{t-1}$;

[0136] Otherwise, keep $pBest_i^t$ unchanged;

[0137] Wherein, the preset fitness function is:

[0138] If both the particle of the t-th iteration and the particle of the (t-1)-th iteration can offload all the DNN tasks t...
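The comparison rule in [0133] to [0136] can be sketched as a small function. This is a minimal illustration under stated assumptions, not the patent's implementation: the function name `update_pbest`, the particle encoding (a list giving the node index chosen for each layer), and `toy_fitness` are all hypothetical. In particular, the patent's fitness function is truncated in this text, so `toy_fitness` is only a stand-in that lets the example run. The direction of the comparison follows the wording of [0135] as written.

```python
from typing import Callable, Sequence

# Hypothetical encoding: one computing-node index per DNN layer.
Particle = Sequence[int]


def update_pbest(candidate: Particle,
                 prev_pbest: Particle,
                 fitness: Callable[[Particle], float]) -> Particle:
    """Apply the rule of [0135]-[0136] to one particle.

    If the candidate's fitness is less than the fitness of the previous
    personal best, the previous personal best is carried over; otherwise
    the candidate is kept unchanged.
    """
    if fitness(candidate) < fitness(prev_pbest):
        return prev_pbest
    return candidate


def toy_fitness(particle: Particle) -> float:
    """Placeholder fitness (an assumption, NOT the patent's function)."""
    return -float(sum(particle))


prev = [2, 1, 0]                                     # toy_fitness = -3.0
kept = update_pbest([1, 0, 0], prev, toy_fitness)    # -1.0 is not < -3.0
reverted = update_pbest([2, 2, 2], prev, toy_fitness)  # -6.0 < -3.0
```

With these toy values, `kept` is the candidate `[1, 0, 0]` and `reverted` is the previous personal best `[2, 1, 0]`. Passing the fitness function in as a parameter keeps the update rule separate from whatever feasibility and cost comparison the full fitness definition specifies.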

Embodiment 3

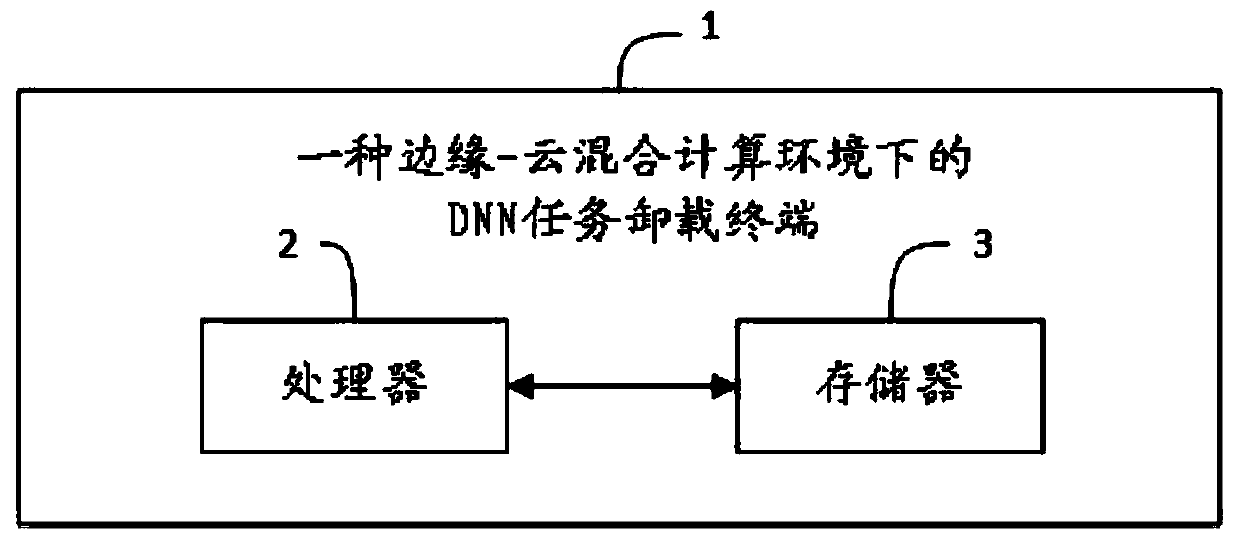

[0154] Referring to Figure 2, Embodiment 3 of the present invention is:

[0155] A DNN task offloading terminal 1 in an edge-cloud hybrid computing environment, including a memory 2, a processor 3, and a computer program stored in the memory 2 and executable on the processor 3, wherein the processor 3 implements the steps of Embodiment 1 or Embodiment 2 when executing the computer program.

[0156] In summary, the DNN task offloading method and terminal in an edge-cloud hybrid environment provided by the present invention establish a model according to the type and number of edge-cloud computing nodes, the number of DNN tasks to be offloaded, and the number of layers. The model establishes a mapping between the DNN tasks to be offloaded and the computing nodes, and takes the minimum total cost as the objective function, which is easy to quantify and compare and meets practical expectations, and ensures the lowest cost when offloading DN...
