Highly flexible, configurable data post-processor for deep neural networks

A technology combining deep neural networks and post-processors, applied in the field of data post-processors. It addresses the problems of low hardware reuse, excessive consumption of hardware resources, and unsatisfactory cost, with the effects of meeting design needs, allowing flexible use, and reducing hardware cost.


Embodiment Construction

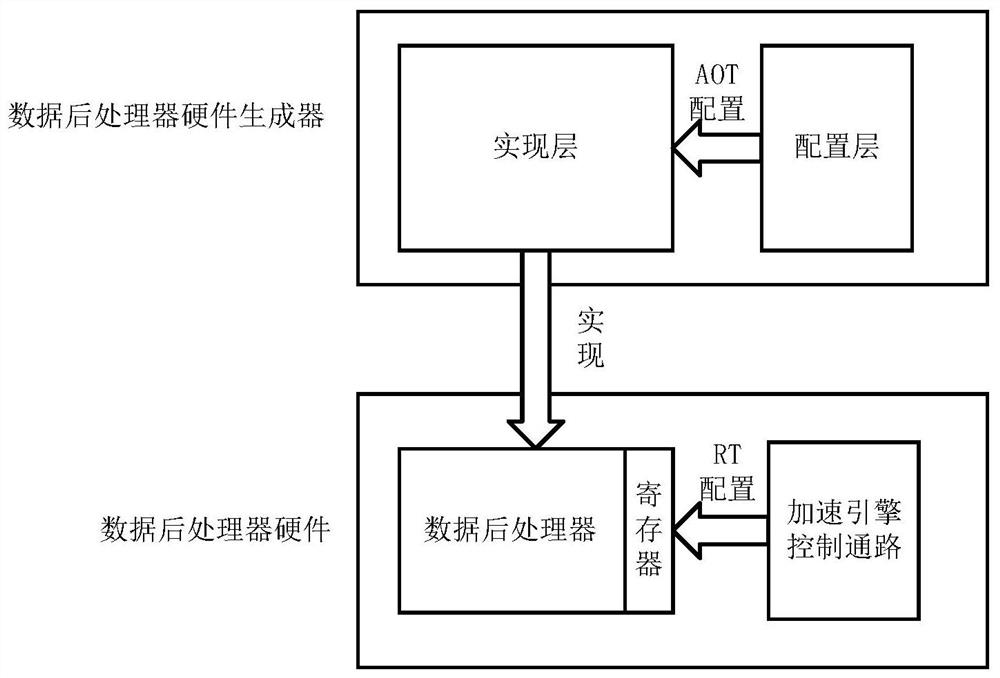

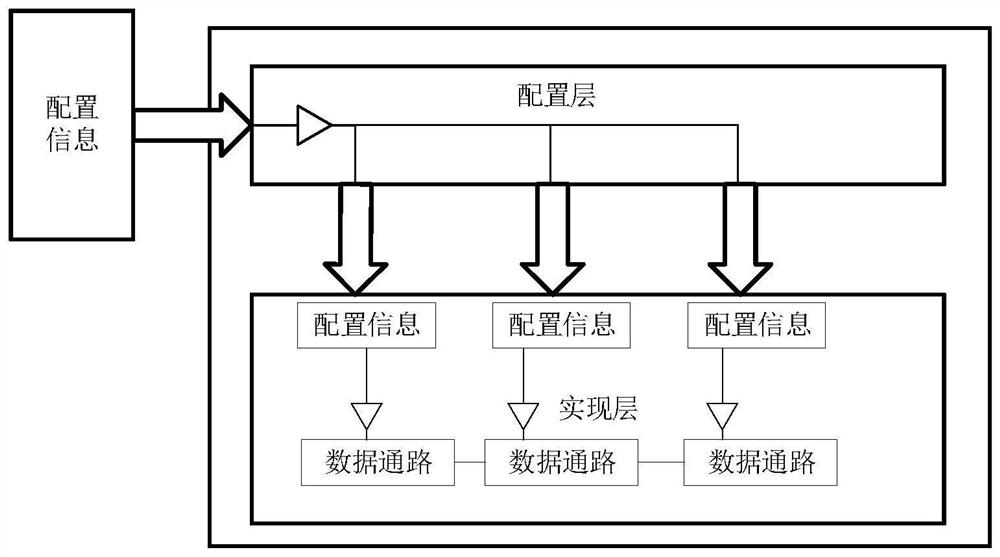

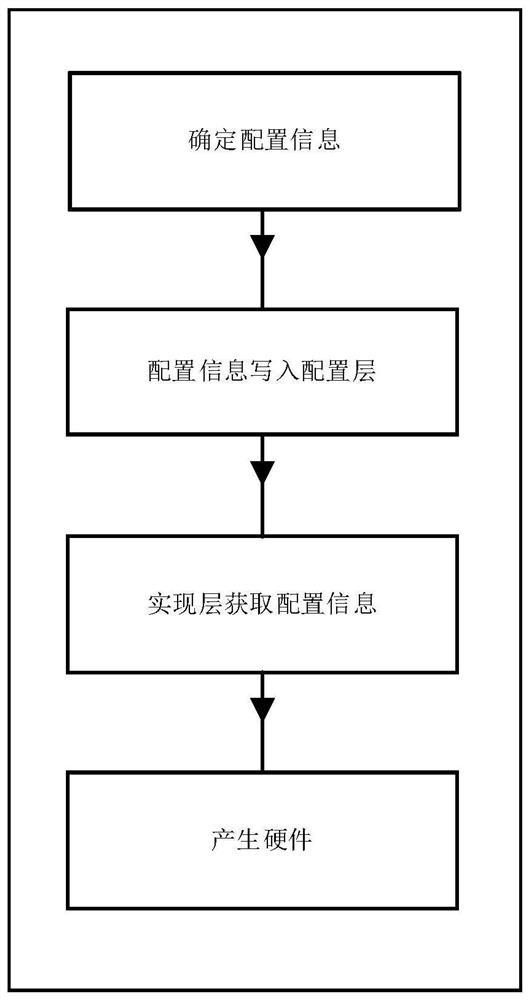

[0028] A highly configurable data post-processor for deep neural networks. Its configuration is divided into pre-run (ahead-of-time, AOT) configuration and runtime (RT) configuration. The AOT configuration applies during the hardware implementation stage of the data post-processor and is used to obtain the data post-processor the user requires. As shown in Figure 1, to realize the AOT configuration, the present invention designs a dedicated hardware generator for the hardware implementation stage of the data post-processor. The hardware generator is divided into a configuration layer and an implementation layer. The configuration layer holds the configuration information for the hardware implementation of the data post-processor, including the data bit width of the data path in the data post-processor, the number of registers at key data processing nodes, and so on. The configuration layer also provides an operable interface that the user can fill in as needed. F...
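The AOT/RT split described in paragraph [0028] can be illustrated with a short Python sketch. This is a minimal sketch under stated assumptions, not the patented hardware generator: the names `AotConfig`, `RtConfig`, `emit_verilog_params`, and `check_runtime`, the Verilog `localparam` output format, and the runtime fields `active_registers` and `clip_max` are all hypothetical. It shows a configuration-layer record holding the two AOT parameters the text names (data-path bit width and register count at a key node), a stand-in implementation layer that turns those values into fixed hardware parameters, and a runtime check that RT settings stay within what the generated hardware supports.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AotConfig:
    """Hypothetical configuration-layer record: fixed before the hardware is built."""
    data_width: int      # bit width of the data path (assumed name)
    node_registers: int  # registers at a key data-processing node (assumed name)

    def validate(self) -> None:
        # Assumed sanity checks; the source does not specify legal ranges.
        if self.data_width < 1:
            raise ValueError("data_width must be at least 1 bit")
        if self.node_registers < 1:
            raise ValueError("node_registers must be at least 1")


def emit_verilog_params(cfg: AotConfig) -> str:
    """Stand-in for the implementation layer: turn user-filled values into HDL parameters."""
    cfg.validate()
    return (
        f"localparam DATA_WIDTH = {cfg.data_width};\n"
        f"localparam NODE_REGS = {cfg.node_registers};"
    )


@dataclass
class RtConfig:
    """Hypothetical runtime settings, changeable after the hardware exists."""
    active_registers: int  # how many of the generated registers to use
    clip_max: int          # example runtime threshold; must fit the signed data path


def check_runtime(aot: AotConfig, rt: RtConfig) -> None:
    """Reject RT settings that exceed what the AOT-generated hardware supports."""
    if not 1 <= rt.active_registers <= aot.node_registers:
        raise ValueError("active_registers exceeds the generated register count")
    # Largest value representable in a signed data_width-bit word.
    max_signed = (1 << (aot.data_width - 1)) - 1
    if not 0 <= rt.clip_max <= max_signed:
        raise ValueError("clip_max does not fit in the data path")


if __name__ == "__main__":
    aot = AotConfig(data_width=16, node_registers=4)
    print(emit_verilog_params(aot))
    # localparam DATA_WIDTH = 16;
    # localparam NODE_REGS = 4;
    check_runtime(aot, RtConfig(active_registers=2, clip_max=32767))  # accepted
```

Freezing `AotConfig` mirrors the idea that AOT choices cannot change once the hardware has been generated, while `RtConfig` stays mutable and is only checked against the limits the AOT stage fixed.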
