Remote sensing image fish pond extraction method based on a row-column self-attention fully convolutional neural network

The invention relates to convolutional neural networks and remote sensing imagery, specifically to fish pond extraction from remote sensing images using a row-column self-attention fully convolutional neural network. It addresses problems such as incomplete extraction extent, the inability to obtain the geometric shape of fish ponds automatically, and blurred fish pond edges.


Embodiment Construction

[0032] To help those of ordinary skill in the art understand and implement the present invention, it is described in further detail below with reference to the accompanying drawings and embodiments. The embodiments described here serve only to illustrate and explain the present invention and are not intended to limit it.

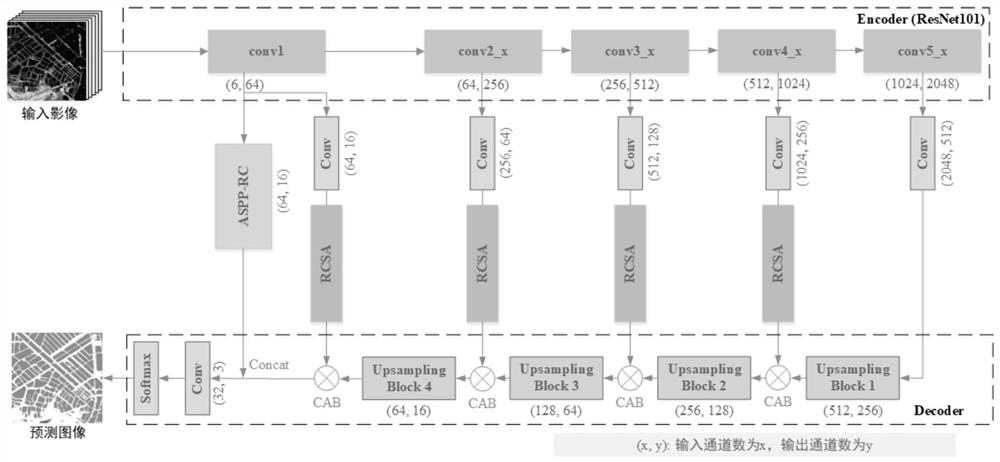

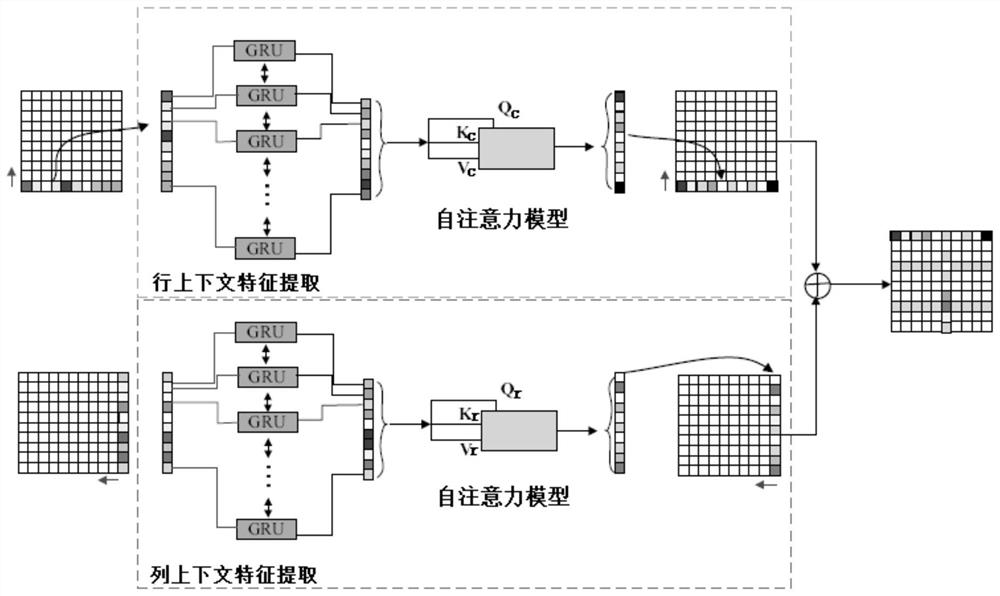

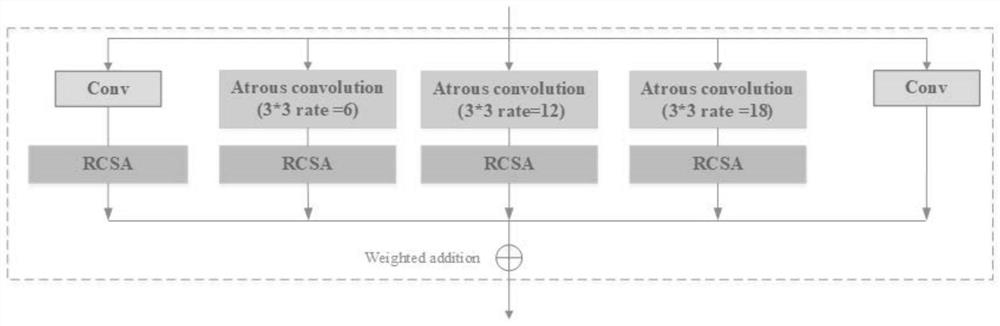

[0033] The structure of the row-column self-attention fully convolutional neural network provided by the present invention is shown in Figure 1, where each rectangular box represents a neural network layer. conv1, conv2_x, conv3_x, conv4_x, and conv5_x are the five groups of convolutional layers of ResNet101; RCSA denotes the row-column bidirectional GRU self-attention model; ASPP-RC denotes the atrous spatial pyramid pooling (ASPP) model combined with the row-column bidirectional GRU self-attention model; Upsampl...
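To make the idea of a row-column bidirectional GRU pass over a feature map more concrete, the following is a minimal NumPy sketch, not the patented RCSA module. The excerpt does not detail how the attention weights are computed, so a simple sigmoid gate stands in for that step as an assumption. All names (`init_gru`, `gru_scan`, `bi_gru`, `row_column_attention`), the weight initialisation, and the tensor sizes are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_gru(in_dim, hid_dim, rng):
    """Random weights for one GRU direction (hypothetical initialisation)."""
    return {
        "W": rng.normal(0.0, 0.1, (3, in_dim, hid_dim)),   # input weights: update, reset, candidate
        "U": rng.normal(0.0, 0.1, (3, hid_dim, hid_dim)),  # recurrent weights
        "b": np.zeros((3, hid_dim)),
    }

def gru_scan(seq, p):
    """Run a GRU over seq of shape (batch, steps, in_dim) -> (batch, steps, hid_dim)."""
    batch, steps, _ = seq.shape
    hid_dim = p["U"].shape[1]
    h = np.zeros((batch, hid_dim))
    out = np.empty((batch, steps, hid_dim))
    for t in range(steps):
        x = seq[:, t]
        z = sigmoid(x @ p["W"][0] + h @ p["U"][0] + p["b"][0])        # update gate
        r = sigmoid(x @ p["W"][1] + h @ p["U"][1] + p["b"][1])        # reset gate
        n = np.tanh(x @ p["W"][2] + (r * h) @ p["U"][2] + p["b"][2])  # candidate state
        h = (1.0 - z) * n + z * h
        out[:, t] = h
    return out

def bi_gru(seq, fwd, bwd):
    """Concatenate a forward scan and a reversed (backward) scan along the feature axis."""
    f = gru_scan(seq, fwd)
    b = gru_scan(seq[:, ::-1], bwd)[:, ::-1]
    return np.concatenate([f, b], axis=-1)

def row_column_attention(feat, params):
    """feat: (H, W, C) feature map. Returns a reweighted map of the same shape."""
    # Row pass: each of the H rows is a sequence of W positions.
    row_ctx = bi_gru(feat, *params["row"])
    # Column pass: each of the W columns is a sequence of H positions.
    col_ctx = bi_gru(feat.transpose(1, 0, 2), *params["col"]).transpose(1, 0, 2)
    ctx = np.concatenate([row_ctx, col_ctx], axis=-1)   # (H, W, 4 * hid_dim)
    # Assumption: a sigmoid gate replaces the attention step not shown in the excerpt.
    gate = sigmoid(ctx @ params["proj"])                # (H, W, C)
    return feat * gate

rng = np.random.default_rng(0)
C, HID = 3, 2
params = {
    "row": (init_gru(C, HID, rng), init_gru(C, HID, rng)),
    "col": (init_gru(C, HID, rng), init_gru(C, HID, rng)),
    "proj": rng.normal(0.0, 0.1, (4 * HID, C)),
}
feat = rng.normal(size=(4, 5, C))   # toy stand-in for a backbone feature map
out = row_column_attention(feat, params)
print(out.shape)  # (4, 5, 3)
```

Scanning rows and columns separately lets every position receive context from its entire row and entire column in both directions, which is one plausible reading of "row-column bidirectional GRU". In a real network this would sit on top of ResNet101 features and be trained end to end in a deep learning framework.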
