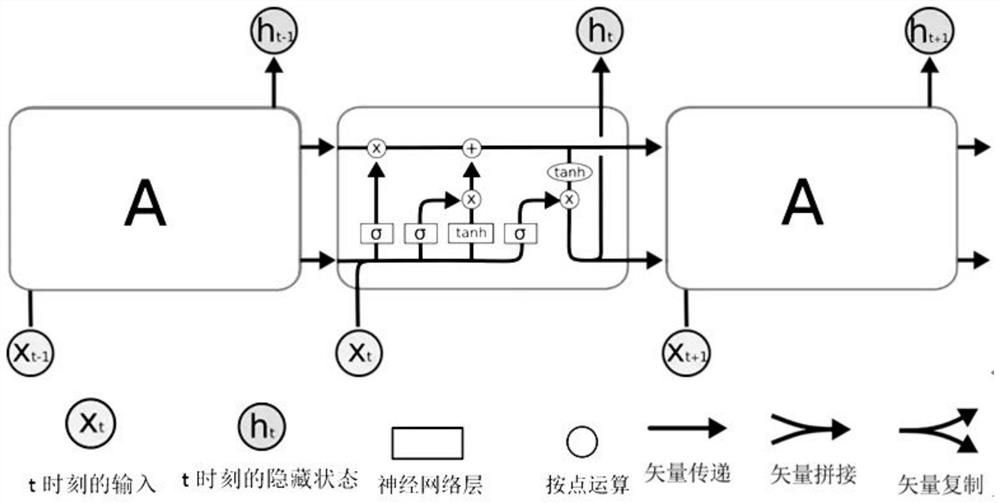

Video semantic segmentation method based on ConvLSTM convolutional neural network

A video semantic segmentation method based on a ConvLSTM convolutional neural network, applied in fields such as neural learning methods, biological neural network models, and neural architectures. It addresses the problem of ignoring the correlation between adjacent video frames, with the effects of improving generalization ability, expanding the receptive field, and improving accuracy.

Examples

Embodiment 1

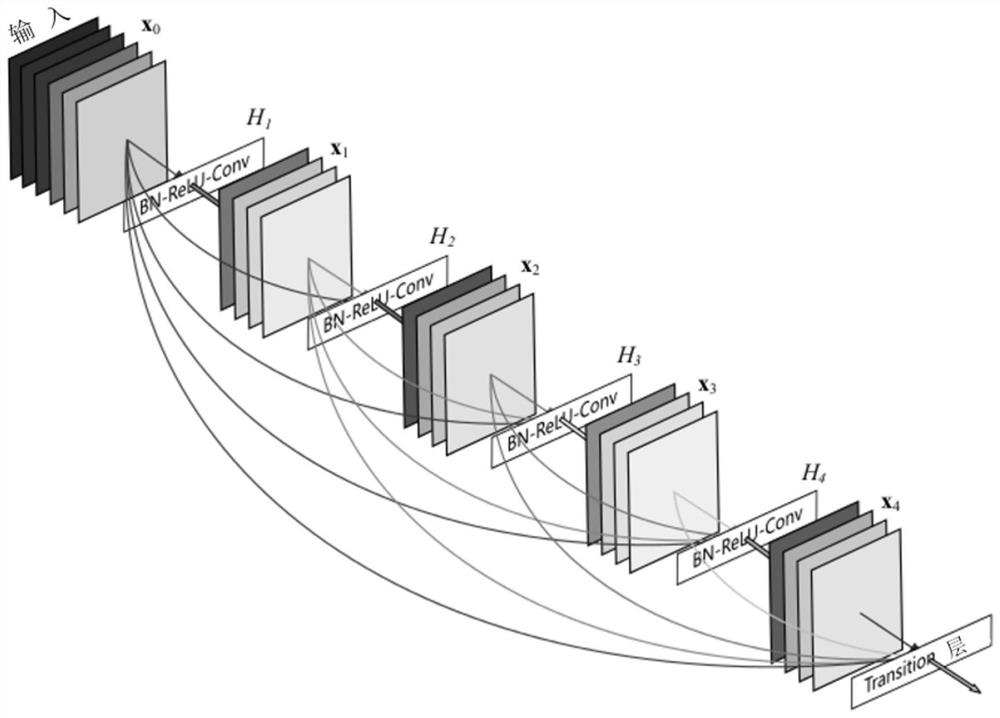

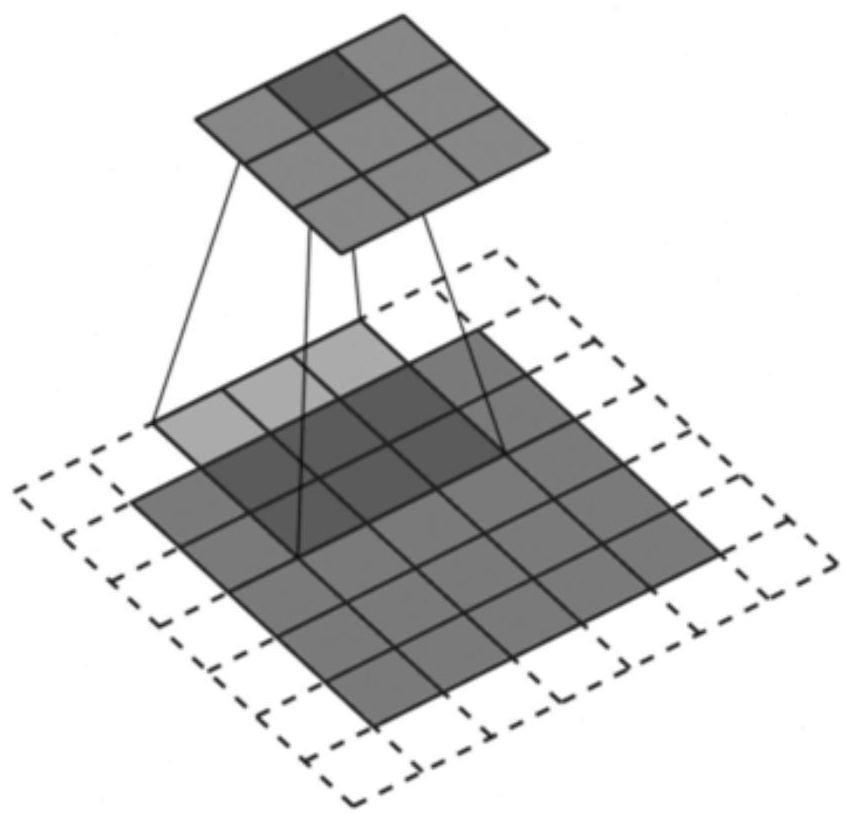

[0064] A video semantic segmentation method based on ConvLSTM convolutional neural network, comprising the following steps:

[0065] A. Build and train the video semantic segmentation network

[0066] (1) Obtain the dataset

[0067] Neural networks need a large amount of data to learn, and most networks are trained with supervised learning; that is, each input has corresponding labeled data during training. Here the input data in the training set is a video sequence, and its labeled data is the result image after semantic segmentation. Because a video contains many frames, only a few frames in a video sequence have corresponding annotated images. The dataset used is the Cityscapes dataset, in which each video sequence has 30 frames and only the 20th frame has annotation information. The Cityscapes dataset contains a variety of video sequences recorded in street scenes from 50 different c...

Embodiment 2

[0086] This embodiment follows the video semantic segmentation method based on ConvLSTM convolutional neural network described in Embodiment 1, with the following differences:

[0087] Before performing step (3), data augmentation is performed on the training set of the dataset: random horizontal flipping, random brightness adjustment, and random cropping are applied to the training data to expand it. This helps avoid over-fitting of the network and improves its generalization ability.
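The three augmentations named above can be illustrated with a minimal NumPy sketch. It is not the patent's implementation. The function name `augment`, the array layout (a clip of shape `(T, H, W, C)` with values in `[0, 1]` plus one label map of shape `(H, W)`), the crop size, and the brightness range are all assumptions made for illustration. The sketch also assumes that the flip and crop are applied identically to the frames and to the annotation so the pixels stay aligned, which the text does not state explicitly.

```python
import numpy as np


def augment(frames, label, crop_hw, rng, max_brightness_delta=0.2):
    """Apply one random augmentation to a clip and its annotation.

    frames:  float array (T, H, W, C), values in [0, 1] (assumed layout)
    label:   int array (H, W) of class ids for the annotated frame
    crop_hw: (crop_h, crop_w), a hypothetical crop size
    rng:     numpy.random.Generator
    """
    _, h, w, _ = frames.shape
    crop_h, crop_w = crop_hw

    # Random horizontal flip: mirror the width axis of frames and label together.
    if rng.random() < 0.5:
        frames = frames[:, :, ::-1, :]
        label = label[:, ::-1]

    # Random brightness: one offset for the whole clip; the label is untouched.
    delta = rng.uniform(-max_brightness_delta, max_brightness_delta)
    frames = np.clip(frames + delta, 0.0, 1.0)

    # Random crop: the same window is cut from every frame and from the label.
    top = rng.integers(0, h - crop_h + 1)
    left = rng.integers(0, w - crop_w + 1)
    frames = frames[:, top:top + crop_h, left:left + crop_w, :]
    label = label[top:top + crop_h, left:left + crop_w]

    return np.ascontiguousarray(frames), np.ascontiguousarray(label)
```

Calling `augment(frames, label, (crop_h, crop_w), np.random.default_rng())` once per training sample yields a differently transformed clip each time. Using a single brightness offset and a single crop window per clip keeps adjacent frames consistent with each other, which seems appropriate for a method that relies on inter-frame correlation, though that choice is also an assumption here.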

[0088] In step (3), a learning rate decay strategy is used to train the video semantic segmentation network. As the number of iterations increases, the learning rate gradually decreases, which keeps the model from fluctuating too much in the later stage of training and so brings it closer to the optimal solution. The initial learning rate l₀ is set to 0.0003, and the learning rate l is attenuated by formula (I) durin...
