Method and device for training a captioning model, computer equipment, and storage medium

A captioning-model technology, applied in the field of training captioning models. It addresses problems such as low training quality, high training difficulty, and heavy data consumption, and it simplifies the training process, improves training quality, and reduces memory and data consumption.


Embodiment Construction

[0017] Great progress has recently been made in image and video captioning, much of it driven by advances in machine translation. For example, the encoder-decoder framework and the attention mechanism were first introduced in machine translation and then extended to captioning. Both image captioning and video captioning methods follow this pipeline and apply an attention mechanism during caption generation. Compared with image captioning, video captioning describes dynamic scenes rather than static ones.
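The paragraph names the attention mechanism without specifying a variant. As a hedged illustration, the sketch below assumes simple dot-product soft attention for a single decoding step: the decoder's hidden state is scored against one feature vector per image region or video frame, the scores are normalized with a softmax, and the weighted sum of features becomes the context vector used to generate the next word. The function names `attend`, `decoder_state`, and `encoder_features` are hypothetical, not taken from the patent.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax: subtract the max score before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def attend(decoder_state: Vector, encoder_features: List[Vector]) -> Tuple[Vector, Vector]:
    """Dot-product soft attention for one decoding step (an assumed variant).

    decoder_state: current hidden state of the caption decoder (dimension d).
    encoder_features: one feature vector per region or frame, each of dimension d.
    Returns (attention weights, context vector).
    """
    scores = [dot(decoder_state, f) for f in encoder_features]
    weights = softmax(scores)
    dim = len(encoder_features[0])
    # Context vector: attention-weighted sum of the encoder features.
    context = [sum(w * f[i] for w, f in zip(weights, encoder_features)) for i in range(dim)]
    return weights, context


if __name__ == "__main__":
    frames = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    weights, context = attend([2.0, 0.0], frames)
    print([round(w, 3) for w in weights])
    print([round(c, 3) for c in context])
```

Frames whose features align with the decoder state (the first and third here) receive most of the weight, so the context vector is dominated by them. Learned variants, such as additive attention with trainable projections, replace the plain dot product with a parameterized scoring function.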

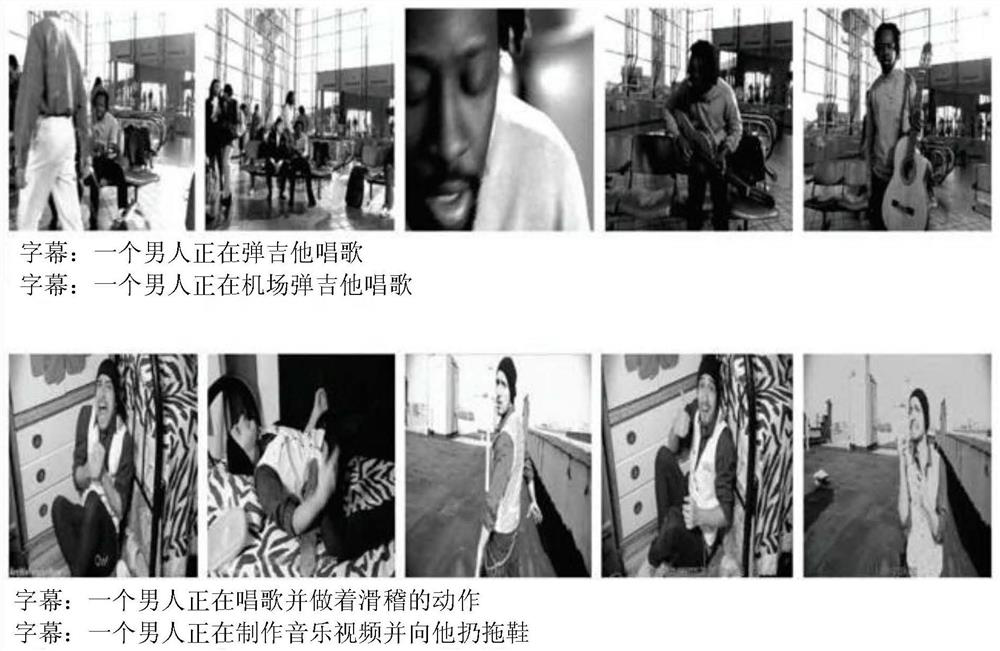

[0018] As can be seen from Figure 1, video captioning is much more difficult because of larger appearance variations. Some related techniques propose boundary-aware long short-term memory (LSTM) units to automatically detect temporal video segments. Some related techniques integrate natural language knowledge into their networks by training linguistic LSTM models on large external text datasets. Some related techniques extend the Gated Recur...
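Boundary-aware LSTM units are only named here, not specified. As a hedged illustration, the sketch below implements a standard LSTM step and wraps it with a hypothetical boundary detector (weights `w_s`, `u_s`, `b_s`). When the detector's score exceeds 0.5, the detector resets the hidden and cell states so that each detected segment is encoded from a fresh state. The detector, the 0.5 threshold, the reset rule, and all function names are assumptions for illustration, not the exact formulation used by the cited techniques or by this patent.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def matvec(m: Matrix, v: Vector) -> Vector:
    return [dot(row, v) for row in m]


@dataclass
class LSTMParams:
    # Keys "i", "f", "o", "g": input, forget, output gates and candidate cell.
    W: Dict[str, Matrix]  # hidden x input, applied to the frame feature x_t
    U: Dict[str, Matrix]  # hidden x hidden, applied to h_{t-1}
    b: Dict[str, Vector]  # hidden-sized bias


def zero_params(input_dim: int, hidden: int) -> LSTMParams:
    """All-zero parameters, convenient for deterministic checks."""
    return LSTMParams(
        W={k: [[0.0] * input_dim for _ in range(hidden)] for k in "ifog"},
        U={k: [[0.0] * hidden for _ in range(hidden)] for k in "ifog"},
        b={k: [0.0] * hidden for k in "ifog"},
    )


def lstm_step(p: LSTMParams, x: Vector, h_prev: Vector, c_prev: Vector) -> Tuple[Vector, Vector]:
    """One standard LSTM step; returns the new hidden state h_t and cell state c_t."""
    pre = {
        k: [wx + uh + bk for wx, uh, bk in zip(matvec(p.W[k], x), matvec(p.U[k], h_prev), p.b[k])]
        for k in "ifog"
    }
    i = [sigmoid(z) for z in pre["i"]]
    f = [sigmoid(z) for z in pre["f"]]
    o = [sigmoid(z) for z in pre["o"]]
    g = [math.tanh(z) for z in pre["g"]]
    c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c_prev, i, g)]
    h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
    return h, c


def encode_with_boundaries(
    p: LSTMParams, w_s: Vector, u_s: Vector, b_s: float, frames: List[Vector]
) -> Tuple[Vector, List[bool]]:
    """Run the LSTM over frames, resetting its state whenever a boundary is detected.

    Hypothetical detector: sigmoid(w_s . x_t + u_s . h_{t-1} + b_s) > 0.5.
    """
    hidden = len(p.b["i"])
    h, c = [0.0] * hidden, [0.0] * hidden
    boundaries = []
    for x in frames:
        is_boundary = sigmoid(dot(w_s, x) + dot(u_s, h) + b_s) > 0.5
        boundaries.append(is_boundary)
        if is_boundary:
            # Start a new temporal segment: discard the memory of the previous one.
            h, c = [0.0] * hidden, [0.0] * hidden
        h, c = lstm_step(p, x, h, c)
    return h, boundaries


if __name__ == "__main__":
    params = zero_params(input_dim=2, hidden=2)
    params.b["g"] = [1.0, 1.0]
    frames = [[0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
    _, segs = encode_with_boundaries(params, [10.0, 0.0], [0.0, 0.0], -5.0, frames)
    print(segs)  # [False, True, False]
```

Resetting the state makes the encoder's summary of a segment independent of earlier, unrelated shots. In a trained model the detector weights would be learned jointly with the LSTM, which typically requires a differentiable relaxation of the hard threshold used here.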
