Real-time gesture recognition method and system based on machine vision

A gesture recognition and machine vision technology, applied to neural learning methods, character and pattern recognition, input/output for user-computer interaction, etc. It addresses problems such as the difficulty of gesture recognition, the difficulty of implementing gesture recognition algorithms, and complex hand shapes.

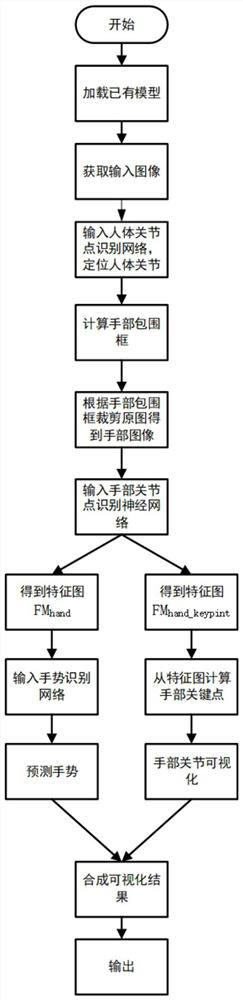

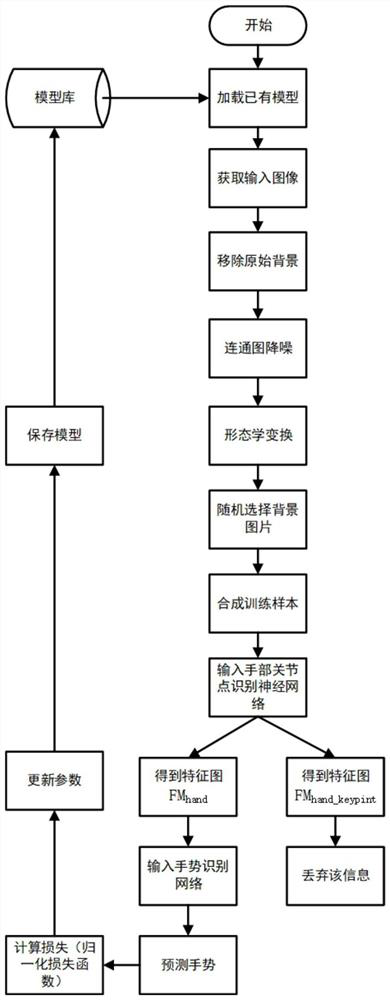

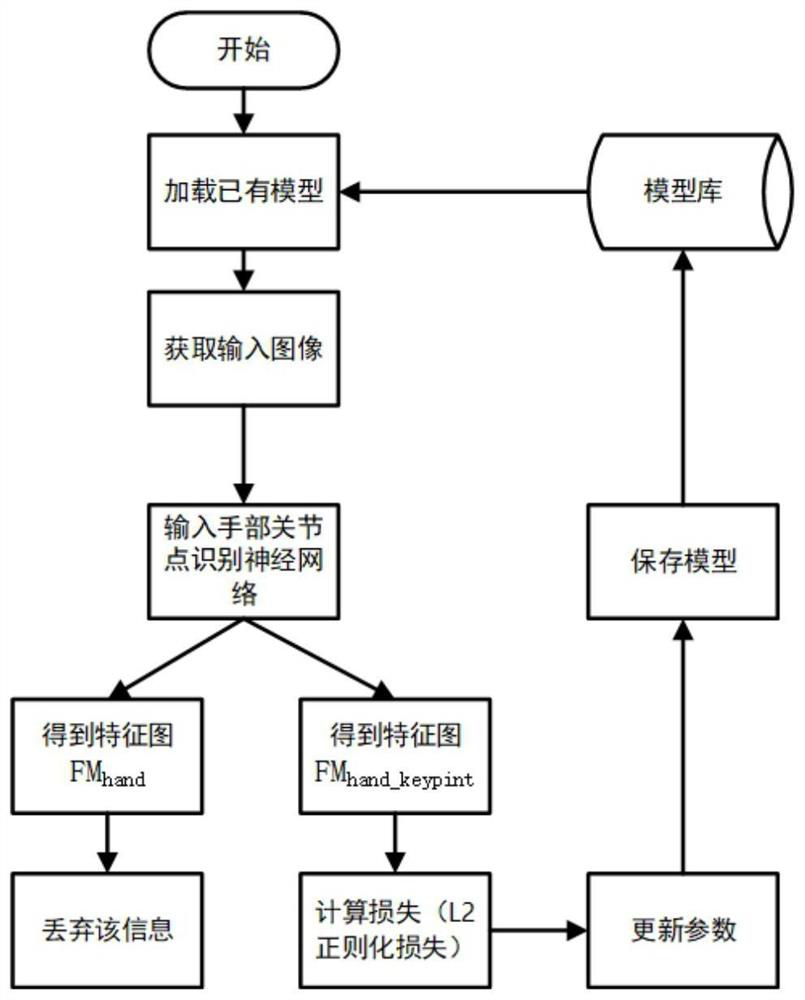

Embodiment 1

[0073] The difficult problem of hand localization is solved through human body joint point recognition. Once the hand position is obtained, the hand joint points are detected, and the hand joint point information is then used to overcome the gesture recognition difficulties caused by complex hand shapes and low hand resolution. Joint point extraction, however, is itself difficult for the following reasons:

[0074] (1) Local distinctiveness is weak; for example, it is hard to define the precise location of the "neck" or of "the last joint of the thumb";

[0075] (2) Some joints are occluded or invisible, for example hidden by clothing, by the body itself, or by the background, and some parts of the human body look alike;

[0076] (3) The surrounding environment, the lighting, and the size and resolution of the target relative to the image all affect the quality of key point extraction.

[0077] In order to overcome these problems, it ...
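
The two-stage idea in [0073], locating the hand from body joint points before detecting hand joint points, can be illustrated with a small sketch. It assumes the body joint detector returns elbow and wrist coordinates in pixels and estimates a square hand region by extending the forearm direction past the wrist. The function name `hand_box` and the ratios `reach` and `size_ratio` are hypothetical choices for illustration; the source does not state how the hand region is derived.

```python
import math

def hand_box(elbow, wrist, img_w, img_h, reach=0.5, size_ratio=1.0):
    """Estimate a square hand box (x0, y0, x1, y1) from two body joints.

    Assumption (not from the source): the hand centre lies on the
    elbow->wrist line, `reach` forearm lengths beyond the wrist, and the
    box side equals `size_ratio` forearm lengths.
    """
    ex, ey = elbow
    wx, wy = wrist
    dx, dy = wx - ex, wy - ey
    forearm = math.hypot(dx, dy)
    if forearm == 0:
        return None  # degenerate detection: elbow and wrist coincide

    # Hand centre: continue along the forearm direction past the wrist.
    cx, cy = wx + reach * dx, wy + reach * dy
    half = 0.5 * size_ratio * forearm

    # Clamp the box to the image bounds.
    x0 = max(0, int(round(cx - half)))
    y0 = max(0, int(round(cy - half)))
    x1 = min(img_w, int(round(cx + half)))
    y1 = min(img_h, int(round(cy + half)))
    if x1 <= x0 or y1 <= y0:
        return None  # box lies entirely outside the image
    return (x0, y0, x1, y1)
```

The returned box would be cropped from the frame and passed to the hand joint point detector. Scaling the box with the forearm length is one way to keep the crop usable when the hand occupies few pixels.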

Embodiment 2

[0134] Building on Embodiment 1, this embodiment proposes a real-time gesture recognition system based on machine vision, comprising a video capture module, a streaming media server, a GPU server, and a result display terminal. The video capture module captures a video stream that contains hands and uploads it frame by frame to the streaming media server. The streaming media server encodes and compresses the video stream and transmits it over the network to the GPU server. The GPU server decodes the video stream and processes each frame with the recognition method of Embodiment 1 to obtain the result tuple and visualization information. The result display terminal obtains and displays the visualized joint point information and the result tuple.
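
The data flow in [0134] can be sketched as a chain of generators, one per component. This is a minimal illustration under stated assumptions, not the system's implementation: all function and class names are hypothetical, `zlib` stands in for a real video codec, and the contents of the result tuple are a placeholder because the source does not list its fields here.

```python
import zlib
from dataclasses import dataclass
from typing import Any, Tuple

@dataclass
class FrameResult:
    frame_id: int
    result_tuple: Tuple   # placeholder: fields are not specified in the source
    visualization: Any    # placeholder for joint point overlay data

def capture(frames):
    """Video capture module: emit frames one by one with an index."""
    for i, frame in enumerate(frames):
        yield i, frame

def streaming_server(indexed_frames):
    """Streaming media server: compress each frame before transmission.
    zlib is a stand-in; a real system would use a video codec."""
    for i, frame in indexed_frames:
        yield i, zlib.compress(frame)

def gpu_server(packets, recognize):
    """GPU server: decode each packet and run the per-frame recognizer."""
    for i, packet in packets:
        frame = zlib.decompress(packet)
        result_tuple, visualization = recognize(frame)
        yield FrameResult(i, result_tuple, visualization)

def display_terminal(results):
    """Result display terminal: collect what would be shown to the user."""
    return [(r.frame_id, r.result_tuple, r.visualization) for r in results]

def run_pipeline(frames, recognize):
    """Wire the four components together in the order given in [0134]."""
    return display_terminal(
        gpu_server(streaming_server(capture(frames)), recognize)
    )
```

As a usage example, `run_pipeline([b"frame0", b"frame1"], recognize)` accepts any callable `recognize` that maps raw frame bytes to a `(result_tuple, visualization)` pair, so the recognition method of Embodiment 1 would plug in at that point.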

[0135] To resolve the mismatch between the video capture and upload rate (60 Hz) and the per-frame processing rate of the GPU server (22 Hz to 28 Hz), the video capture module fix...
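
The size of the rate mismatch in [0135] can be quantified with a short calculation. The sketch below assumes every captured frame is queued and none is dropped, which is the situation the paragraph sets out to avoid. It does not reproduce the remedy itself, since the source text is cut off before describing it. The function names are hypothetical.

```python
def backlog_growth(capture_hz: float, process_hz: float) -> float:
    """Frames per second by which an unbounded queue grows.
    Returns 0.0 when the consumer keeps up with the producer."""
    return max(0.0, capture_hz - process_hz)

def added_latency_s(capture_hz: float, process_hz: float, elapsed_s: float) -> float:
    """Seconds needed to drain the backlog accumulated after `elapsed_s`
    seconds, assuming no frames are dropped."""
    backlog_frames = backlog_growth(capture_hz, process_hz) * elapsed_s
    return backlog_frames / process_hz

if __name__ == "__main__":
    for process_hz in (22.0, 28.0):
        growth = backlog_growth(60.0, process_hz)
        delay = added_latency_s(60.0, process_hz, elapsed_s=10.0)
        print(f"{process_hz:.0f} Hz: +{growth:.0f} frames/s, "
              f"{delay:.1f} s behind after 10 s")
```

With capture at 60 Hz and processing at 22 Hz to 28 Hz, an unbounded queue grows by 32 to 38 frames every second, so the displayed result falls steadily further behind the live video unless the mismatch is handled.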

