3D target bounding box estimation system based on GIoU

Relates to 3D bounding box technology, applied in computing, computer components, image data processing, etc. It addresses the low accuracy of 3D target bounding box estimation, improving calibration accuracy and achieving high estimation accuracy.


Examples

Embodiment 1

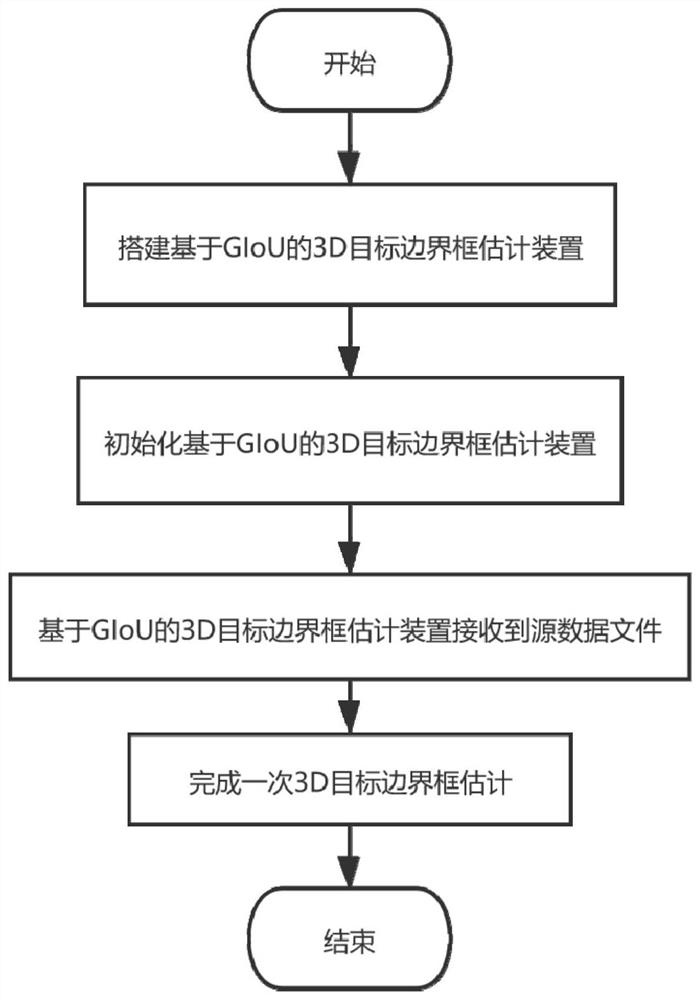

[0048] The technical scheme of the present invention comprises the following steps:

[0049] With reference to Fig. 3, the overall process of the present invention is as follows:

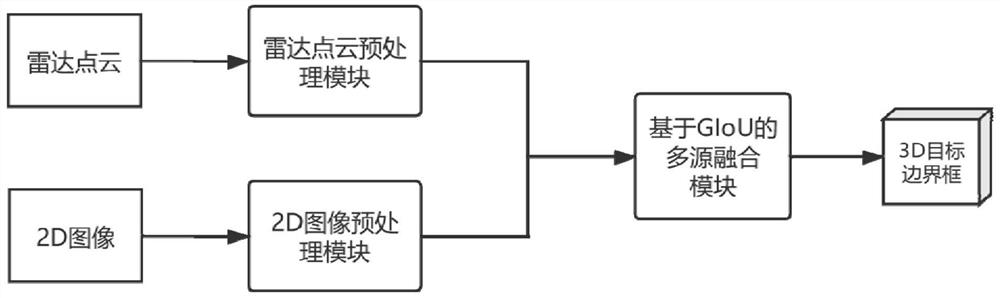

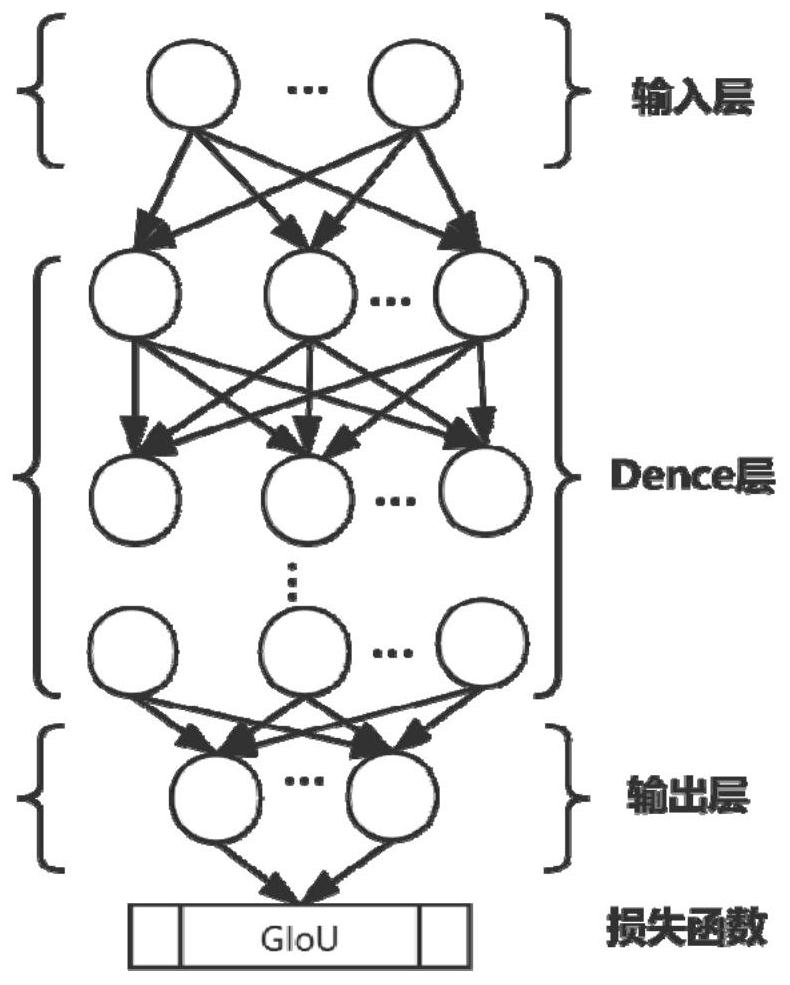

[0050] The first step is to build a GIoU-based 3D target bounding box estimation device. It consists of a radar point cloud preprocessing module, a 2D image preprocessing module, and a GIoU-based multi-source fusion module. The radar point cloud preprocessing module is a PointNet neural network model, the 2D image preprocessing module is a ResNet50 neural network model, and the GIoU-based multi-source fusion module is a Dense (fully connected) neural network model.
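GIoU (Generalized Intersection over Union) extends IoU with a penalty based on the smallest box C that encloses both boxes A and B: GIoU = IoU - |C \ (A ∪ B)| / |C|. The excerpt does not say how boxes are parameterized or exactly where GIoU enters the fusion module, so the sketch below assumes axis-aligned 3D boxes given as min/max corners and the common loss form 1 - GIoU. It illustrates the metric only and is not the patented method; the function names are hypothetical.

```python
from typing import Tuple

# Assumed box format: (x_min, y_min, z_min, x_max, y_max, z_max), axis-aligned.
Box3D = Tuple[float, float, float, float, float, float]


def volume(b: Box3D) -> float:
    """Volume of an axis-aligned box; an empty (inverted) box has zero volume."""
    dx = max(0.0, b[3] - b[0])
    dy = max(0.0, b[4] - b[1])
    dz = max(0.0, b[5] - b[2])
    return dx * dy * dz


def giou_3d(a: Box3D, b: Box3D) -> float:
    """GIoU = IoU - |C minus (A union B)| / |C|, with C the smallest enclosing box."""
    # Intersection box; volume() returns 0 when the boxes do not overlap.
    inter = (max(a[0], b[0]), max(a[1], b[1]), max(a[2], b[2]),
             min(a[3], b[3]), min(a[4], b[4]), min(a[5], b[5]))
    inter_vol = volume(inter)
    union_vol = volume(a) + volume(b) - inter_vol
    if union_vol <= 0.0:
        raise ValueError("at least one box must have positive volume")
    # Smallest axis-aligned box enclosing both inputs.
    hull = (min(a[0], b[0]), min(a[1], b[1]), min(a[2], b[2]),
            max(a[3], b[3]), max(a[4], b[4]), max(a[5], b[5]))
    hull_vol = volume(hull)
    iou = inter_vol / union_vol
    return iou - (hull_vol - union_vol) / hull_vol


def giou_loss(pred: Box3D, target: Box3D) -> float:
    """Common GIoU loss form, 1 - GIoU, which lies in [0, 2]."""
    return 1.0 - giou_3d(pred, target)
```

For two unit cubes separated by a one-unit gap along x, IoU is 0 but GIoU is -1/3, so the loss still reflects how far apart the boxes are; plain IoU would give a regressor no signal in that case.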

[0051] The radar point cloud preprocessing module can convert point cloud data into a fixed-dimensional digital feature representation.
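PointNet obtains a fixed-size descriptor from an unordered, variable-size point set by applying the same small network to every point and then taking a channel-wise max over all points. The toy encoder below shows only that mechanism, assuming random weights, made-up layer sizes (`hidden`, `feature_dim`), and no input or feature transform networks; the original PointNet uses far wider layers, and its global feature is 1024-dimensional. `TinyPointNetEncoder`, `make_layer`, and `dense_relu` are hypothetical names.

```python
import random
from typing import List, Sequence


def make_layer(n_in: int, n_out: int, rng: random.Random) -> List[List[float]]:
    """Random weights of shape (n_out, n_in + 1); the last entry of each row is the bias."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(n_in + 1)] for _ in range(n_out)]


def dense_relu(x: Sequence[float], weights: List[List[float]]) -> List[float]:
    """One fully connected layer followed by ReLU."""
    return [
        max(0.0, sum(w * xi for w, xi in zip(row[:-1], x)) + row[-1])
        for row in weights
    ]


class TinyPointNetEncoder:
    """Shared per-point MLP followed by a channel-wise max over all points."""

    def __init__(self, feature_dim: int = 16, hidden: int = 8, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.layers = [make_layer(3, hidden, rng), make_layer(hidden, feature_dim, rng)]
        self.feature_dim = feature_dim

    def encode(self, points: List[Sequence[float]]) -> List[float]:
        if not points:
            raise ValueError("point cloud must contain at least one point")
        per_point = []
        for p in points:
            h = list(p)  # (x, y, z)
            for weights in self.layers:  # identical weights for every point
                h = dense_relu(h, weights)
            per_point.append(h)
        # Max over points, per channel: order-independent and fixed-length.
        return [max(channel) for channel in zip(*per_point)]
```

Because max is a symmetric function, reordering or duplicating points leaves the feature unchanged, and the output length is `feature_dim` regardless of how many points the cloud contains.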

[0052] The 2D image preprocessing module converts 2D image data into a fixed-dimensional digital feature representation.
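A standard ResNet50 ends its convolutional stages with global average pooling, which collapses a C×H×W feature map into a C-dimensional vector (C = 2048 for ResNet50), so the image feature has a fixed size whatever the spatial size of the map. The sketch below implements only that pooling step in plain Python, assuming a nested-list feature map; the convolutional backbone is omitted and the function name is illustrative.

```python
from typing import List

# Feature map indexed as fmap[channel][row][col], i.e. C x H x W.
FeatureMap = List[List[List[float]]]


def global_average_pool(fmap: FeatureMap) -> List[float]:
    """Average every channel over its H x W grid, producing a length-C vector."""
    pooled = []
    for channel in fmap:
        total = sum(sum(row) for row in channel)
        count = sum(len(row) for row in channel)
        if count == 0:
            raise ValueError("each channel needs at least one spatial cell")
        pooled.append(total / count)
    return pooled
```

Two feature maps with the same channel count but different spatial sizes pool to vectors of the same length, which is what allows the module to emit a fixed-dimensional feature.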

[0053] The GIoU-based multi-source fusion module can fuse the digital features of point cloud data an...
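The description of the fusion module is cut off in the available text. As a rough, hypothetical sketch of how two fixed-size feature vectors could be fused by a Dense model, the head below concatenates the point-cloud and image features and applies one fully connected layer that regresses a 3D box. The output parameterization (center plus log-sizes, converted to axis-aligned min/max corners), the feature sizes, and the `FusionHead` name are assumptions, not details taken from the patent.

```python
import math
import random
from typing import List, Sequence, Tuple


def linear(x: Sequence[float], weights: List[List[float]], bias: List[float]) -> List[float]:
    """Dense layer without activation: y = W x + b."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


class FusionHead:
    """Concatenate point-cloud and image features, then regress one 3D box.

    Hypothetical output: (cx, cy, cz, log length, log width, log height).
    """

    def __init__(self, pc_dim: int, img_dim: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.pc_dim, self.img_dim = pc_dim, img_dim
        n_in = pc_dim + img_dim
        self.weights = [[rng.gauss(0.0, 0.1) for _ in range(n_in)] for _ in range(6)]
        self.bias = [0.0] * 6

    def __call__(self, pc_feat: Sequence[float], img_feat: Sequence[float]) -> Tuple[float, ...]:
        if len(pc_feat) != self.pc_dim or len(img_feat) != self.img_dim:
            raise ValueError("feature sizes do not match this head")
        fused = list(pc_feat) + list(img_feat)  # concatenate the two modalities
        cx, cy, cz, log_l, log_w, log_h = linear(fused, self.weights, self.bias)
        # exp() keeps predicted sizes strictly positive.
        length, width, height = math.exp(log_l), math.exp(log_w), math.exp(log_h)
        # Axis-aligned corners: (x_min, y_min, z_min, x_max, y_max, z_max).
        return (cx - length / 2, cy - width / 2, cz - height / 2,
                cx + length / 2, cy + width / 2, cz + height / 2)
```

During training, the predicted corners would typically be scored against ground-truth boxes with a GIoU-based loss such as 1 - GIoU; whether the patent applies GIoU in exactly this way is not stated in the available text.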
