Relation extraction method based on combination of attention mechanism and graph long-short-term memory neural network

A long short-term memory and relation extraction technology, applied in neural learning methods, biological neural network models, neural architectures, etc., that can solve problems such as difficulty in effectively processing time-series data, information loss, and error accumulation.

Embodiment Construction

[0069] The technical solutions of the present invention will be further described below in conjunction with the accompanying drawings and embodiments.

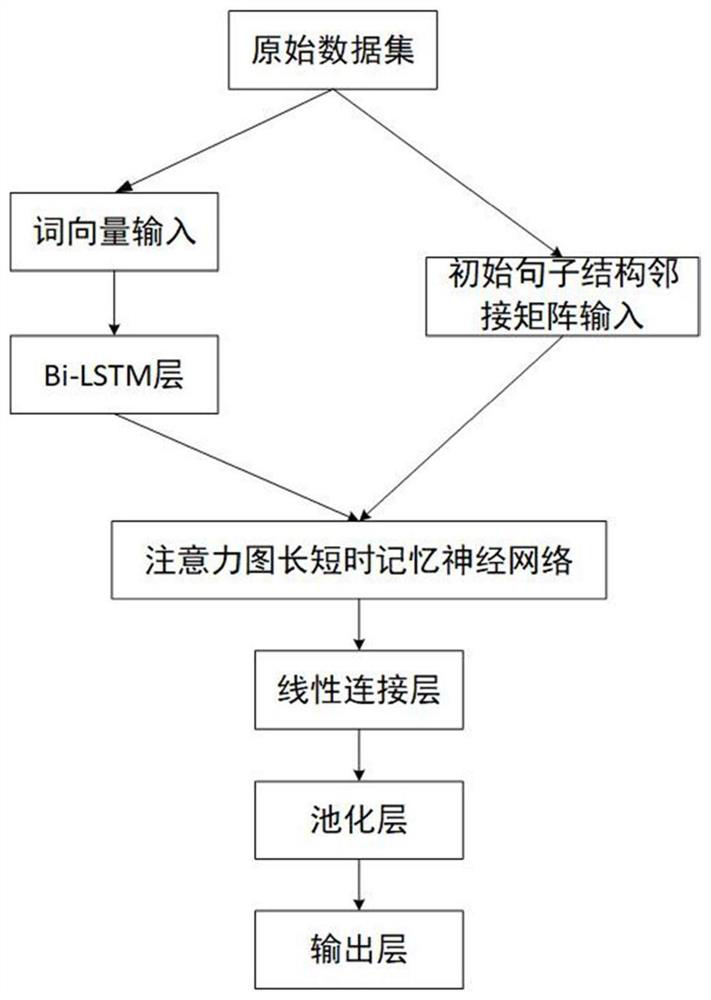

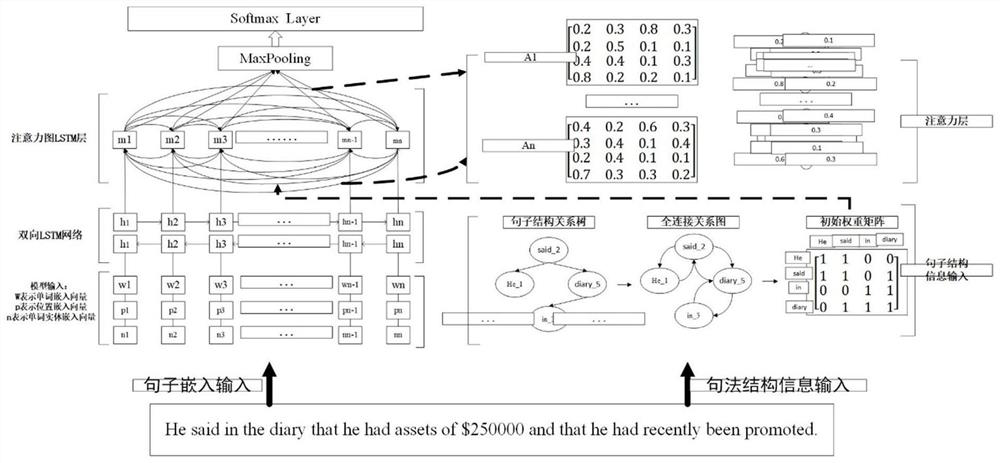

[0070] The relation extraction method of the present invention, which combines the attention mechanism with a graph long short-term memory neural network, is shown in Figure 1. The specific steps of relation extraction are as follows:

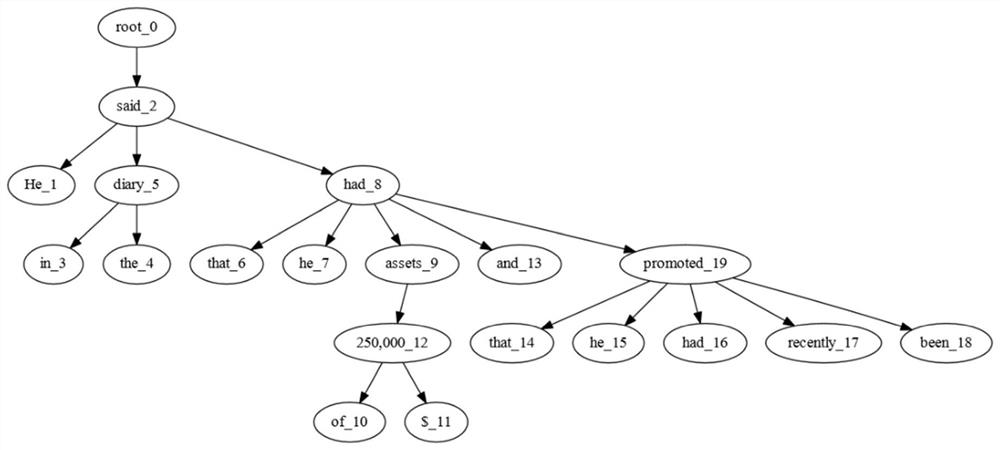

[0071] Step 1. Obtain a relation extraction dataset and preprocess its text data to generate two inputs: a word vector matrix, used to extract features of the sentence's sequential context, and an adjacency matrix, used to extract features of the sentence's structure.
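The two outputs of Step 1 can be illustrated with a minimal sketch. It rests on assumptions that the text above does not confirm: the adjacency matrix is taken to come from a dependency parse given as a list of head indices, self-loops are added, and the word vectors are random placeholders standing in for whatever pretrained embeddings the method actually uses. The function names `build_word_matrix` and `build_adjacency` are hypothetical.

```python
import random


def build_word_matrix(tokens, vocab, dim=4, seed=0):
    """Return one row vector per token (placeholder random embeddings).

    Assumption: a real pipeline would load pretrained vectors instead.
    Tokens outside the vocabulary share a zero <unk> vector.
    """
    rng = random.Random(seed)
    table = {w: [rng.uniform(-1.0, 1.0) for _ in range(dim)] for w in vocab}
    table.setdefault("<unk>", [0.0] * dim)
    return [table.get(t.lower(), table["<unk>"]) for t in tokens]


def build_adjacency(heads, self_loops=True):
    """Build a symmetric adjacency matrix from dependency heads.

    Assumption: heads[i] is the 1-based index of token i's head,
    with 0 marking the root of the sentence.
    """
    n = len(heads)
    adj = [[0] * n for _ in range(n)]
    for child, head in enumerate(heads):
        if head > 0:
            adj[child][head - 1] = 1  # edge child -> head
            adj[head - 1][child] = 1  # mirrored edge (undirected graph)
    if self_loops:
        for i in range(n):
            adj[i][i] = 1
    return adj


# Toy sentence: "founded" is the root; both other tokens attach to it.
tokens = ["Alice", "founded", "Acme"]
heads = [2, 0, 2]
vocab = ["alice", "founded", "acme"]

X = build_word_matrix(tokens, vocab)  # 3 rows, one per token
A = build_adjacency(heads)            # 3 x 3 structure matrix
```

In this sketch `X` feeds the sequence-oriented part of a model and `A` the graph-oriented part; whether the edges should be directed or typed by dependency label is a design choice the excerpt does not settle.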

[0072] This embodiment uses the TACRED dataset and the Semeval-2010-task8 dataset. The TACRED dataset contains 68,124 training examples, 22,631 validation examples, and 15,509 test examples, covering 41 relation types plus one special relation type (no relation). The Semeval-201...
