Three-dimensional target detection method and system based on deep neural network

A 3D object detection method and system based on a deep neural network, in the field of 3D object detection. The technology addresses the problem of increasing cost and achieves a small amount of computation, good adaptability, good versatility, and real-time performance.


Examples

Embodiment 1

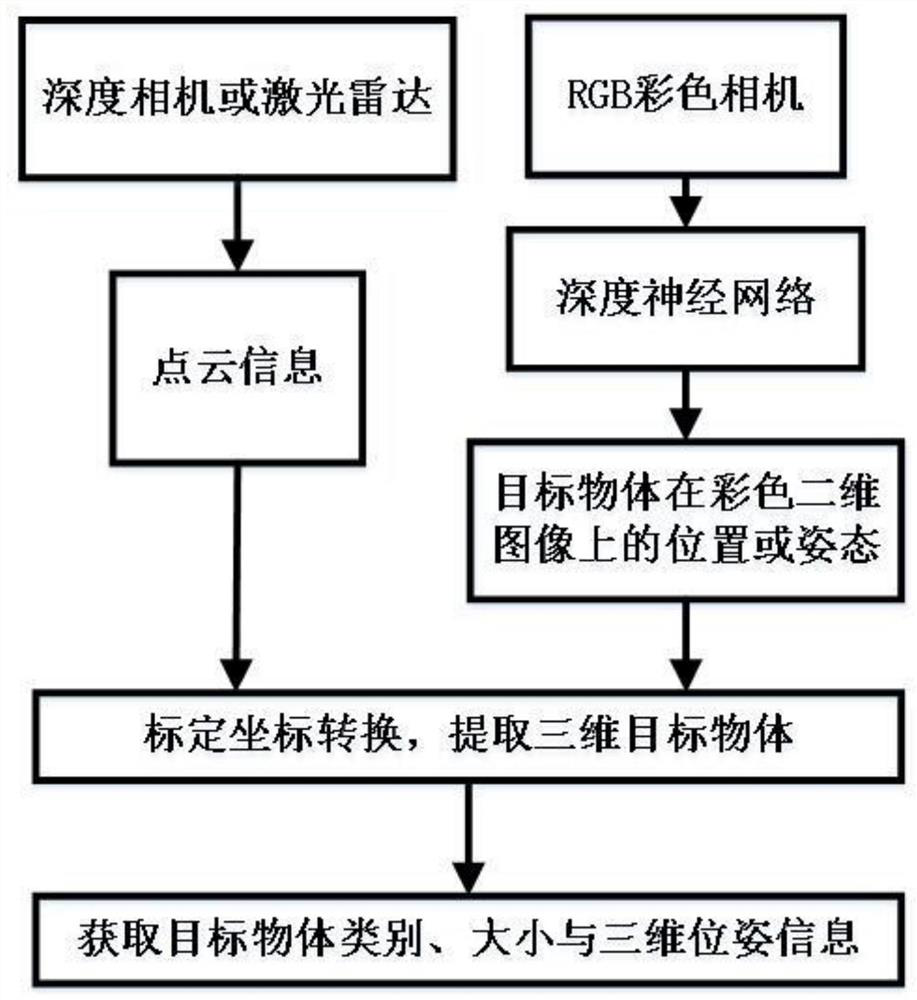

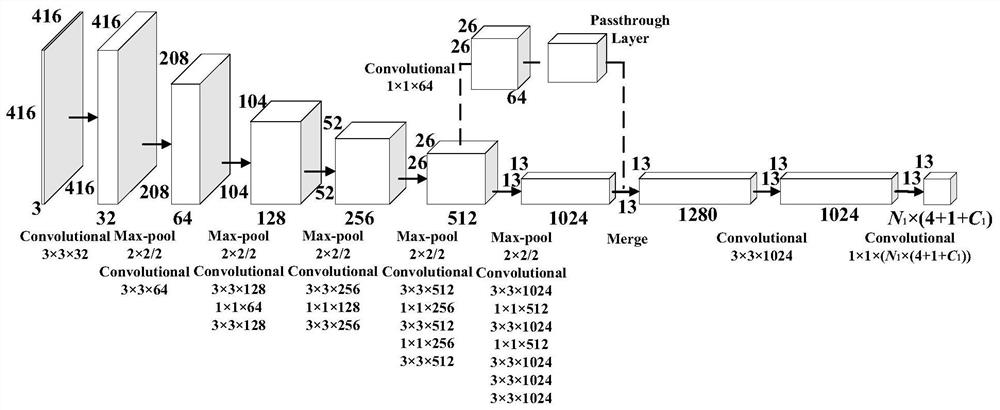

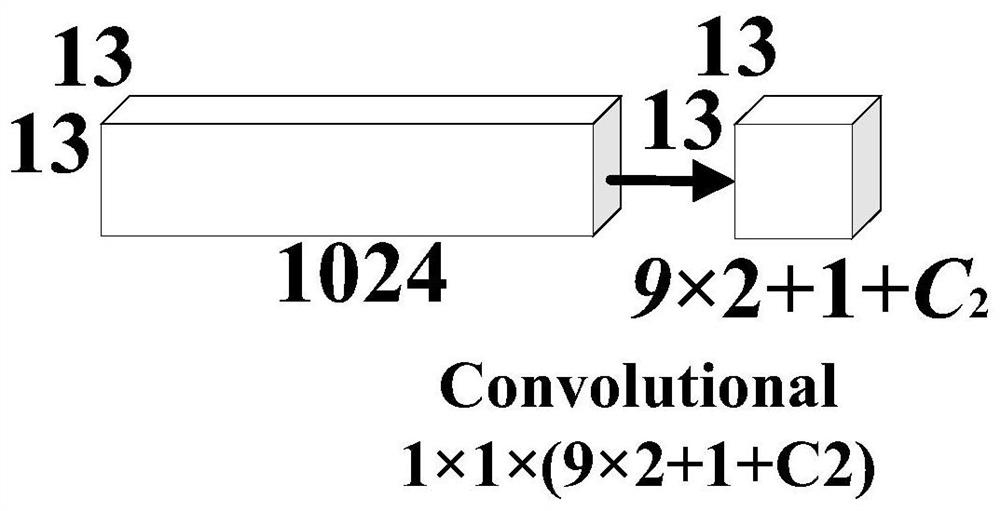

[0025] As shown in Figure 1, a 3D object detection method based on a deep neural network includes the following steps: a. obtain the color image and point cloud information of the environment where the target object is located; b. use the deep neural networks YOLO6D and YOLOv2 to jointly detect the color image and frame the target object, obtaining the 2D bounding box and the 3D bounding box of the target object on the color image, respectively; c. map the point cloud information to the image coordinate system of the color image to obtain the coordinates of the point cloud information in the color image; d. according to the 2D bounding box and the 3D bounding box of the target object on the color image, combined with the coordinates of the point cloud information in the color image, obtain the depth information of the 2D bounding box and of the 3D bounding box, respectively; e. according to the 2D bounding box and the 3D bounding box, the depth information of the boundi...
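The text does not say which camera model step c relies on. Assuming a calibrated pinhole camera with intrinsic matrix $K$ and point-cloud-to-camera extrinsics $R$, $\mathbf{t}$ (lens distortion ignored), a point $\mathbf{X}$ maps to pixel $(u, v)$ with camera-frame depth $z_c$ as shown below. One plausible reading of step d, also an assumption, takes the depth $d_B$ of a box $B$ as a robust statistic, such as the median of the depths of the points that project inside it.

$$
z_c \begin{bmatrix} u \\ v \\ 1 \end{bmatrix} = K\,(R\,\mathbf{X} + \mathbf{t}), \qquad
K = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}
$$

$$
d_B = \operatorname{median}\left\{\, z_{c,i} \;:\; (u_i, v_i) \in B \,\right\}
$$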

Embodiment 2

[0045] Based on the deep-neural-network 3D object detection method described in Embodiment 1, this embodiment provides a 3D object detection system based on a deep neural network, comprising:

[0046] The first module is used to obtain color images and point cloud information of the environment where the target object is located;

[0047] The second module is used to jointly detect the color image using the deep neural networks YOLO6D and YOLOv2, frame the target object, and obtain the 2D bounding box and the 3D bounding box of the target object on the color image, respectively;
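YOLO6D predicts the image projections of a 3D bounding box's eight corners (plus its centroid), while YOLOv2 predicts an axis-aligned 2D box. The text does not say how the two outputs are combined, so the following is only a minimal sketch under an assumed strategy: each YOLOv2 box is greedily paired with the YOLO6D detection whose projected corners have the most overlapping enclosing rectangle (IoU). The function names and the `min_iou` threshold are hypothetical.

```python
import numpy as np

def corners_to_rect(corners_2d):
    """Smallest axis-aligned rectangle (x_min, y_min, x_max, y_max) enclosing projected 3D-box corners."""
    pts = np.asarray(corners_2d, dtype=float)
    return (float(pts[:, 0].min()), float(pts[:, 1].min()),
            float(pts[:, 0].max()), float(pts[:, 1].max()))

def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) rectangles."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def match_detections(boxes_2d, corner_sets, min_iou=0.5):
    """Hypothetical association step: greedily pair each YOLOv2 box with the unused
    YOLO6D corner set whose enclosing rectangle overlaps it most.
    Returns a list of (box_index, corner_set_index, iou)."""
    pairs, used = [], set()
    for i, box in enumerate(boxes_2d):
        best_j, best_s = None, -1.0
        for j, corners in enumerate(corner_sets):
            if j in used:
                continue
            s = iou(box, corners_to_rect(corners))
            if s >= min_iou and s > best_s:
                best_j, best_s = j, s
        if best_j is not None:
            used.add(best_j)
            pairs.append((i, best_j, best_s))
    return pairs

# Toy example: front and back faces of the 3D box project onto the same square.
corners = [(1, 1), (9, 1), (1, 9), (9, 9)] * 2
print(match_detections([(0, 0, 10, 10)], [corners]))  # [(0, 0, 0.64)]
```

A greedy match keeps the sketch short; with many overlapping objects, an optimal assignment (for example, Hungarian matching on IoU) would be the more robust choice.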

[0048] The third module is used to map the point cloud information to the image coordinate system of the color image, and obtain the coordinate information of the point cloud information in the color image;
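The mapping performed by the third module can be sketched with NumPy, assuming a standard pinhole camera with known intrinsics `K` and point-cloud-to-camera extrinsics `R`, `t`, and ignoring lens distortion. These calibration inputs and the function name `project_to_image` are assumptions; the text does not specify them. Points behind the camera or outside the image are discarded.

```python
import numpy as np

def project_to_image(points_xyz, K, R, t, width, height):
    """Map N x 3 point-cloud points into the color image's pixel coordinates.

    Returns (uv, depth): pixel coordinates (M x 2) and camera-frame depth (M,)
    for the points that lie in front of the camera and inside the image.
    """
    pts = np.asarray(points_xyz, dtype=float).reshape(-1, 3)
    R = np.asarray(R, dtype=float)
    t = np.asarray(t, dtype=float).reshape(1, 3)
    K = np.asarray(K, dtype=float)

    cam = pts @ R.T + t                 # sensor frame -> camera frame (extrinsics)
    cam = cam[cam[:, 2] > 1e-6]         # discard points behind the image plane

    proj = cam @ K.T                    # apply intrinsics: each row is z * (u, v, 1)
    depth = cam[:, 2]
    uv = proj[:, :2] / depth[:, None]   # perspective division

    inside = ((uv[:, 0] >= 0) & (uv[:, 0] < width) &
              (uv[:, 1] >= 0) & (uv[:, 1] < height))
    return uv[inside], depth[inside]

# Toy calibration: focal length 100 px, principal point (50, 50), identity extrinsics.
K = [[100, 0, 50], [0, 100, 50], [0, 0, 1]]
uv, d = project_to_image([[0, 0, 10], [1, 0, 10], [0, 0, -5]], K, np.eye(3), np.zeros(3), 100, 100)
print(uv, d)  # the point at z = -5 is dropped
```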

[0049] The fourth module is used to obtain the depth information of the 2D bounding box and the 3D bounding box respectively according to the 2D bounding ...
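The fourth module's description is truncated, so the exact rule for deriving a box's depth is not available. As a hedged sketch, assume the depth of a 2D box is the median camera-frame depth of the projected points that fall inside it. For the 3D box, the sketch simplifies by using the enclosing rectangle of its projected corners as the image region. The function names and the `min_points` parameter are hypothetical.

```python
import numpy as np

def depth_in_rect(uv, depth, rect, min_points=3):
    """Median depth of projected points inside rect = (x_min, y_min, x_max, y_max).
    Returns None when fewer than min_points points land in the rectangle."""
    uv = np.asarray(uv, dtype=float).reshape(-1, 2)
    depth = np.asarray(depth, dtype=float)
    x0, y0, x1, y1 = rect
    mask = ((uv[:, 0] >= x0) & (uv[:, 0] <= x1) &
            (uv[:, 1] >= y0) & (uv[:, 1] <= y1))
    if np.count_nonzero(mask) < min_points:
        return None
    # Median rather than mean: background points leaking into the box shift it less.
    return float(np.median(depth[mask]))

def depth_for_3d_box(uv, depth, corners_2d, min_points=3):
    """Depth for a 3D box from its projected corners, using the corners'
    enclosing rectangle as the image region (a simplifying assumption)."""
    c = np.asarray(corners_2d, dtype=float)
    rect = (c[:, 0].min(), c[:, 1].min(), c[:, 0].max(), c[:, 1].max())
    return depth_in_rect(uv, depth, rect, min_points)

uv = [[5, 5], [6, 6], [7, 7], [50, 50]]
depth = [10.0, 11.0, 30.0, 2.0]
print(depth_in_rect(uv, depth, (0, 0, 10, 10)))  # 11.0
```

A tighter variant would test points against the convex hull of the projected corners instead of their bounding rectangle.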
