Video sequence encoding and decoding method for video pedestrian re-identification

A video sequence encoding and decoding technique for person re-identification, applied in the field of computer vision image retrieval. It addresses the problems of high computational overhead, unsuitability for batch processing, and large storage overhead, and it achieves reduced performance loss and high imaging quality.

Embodiment Construction

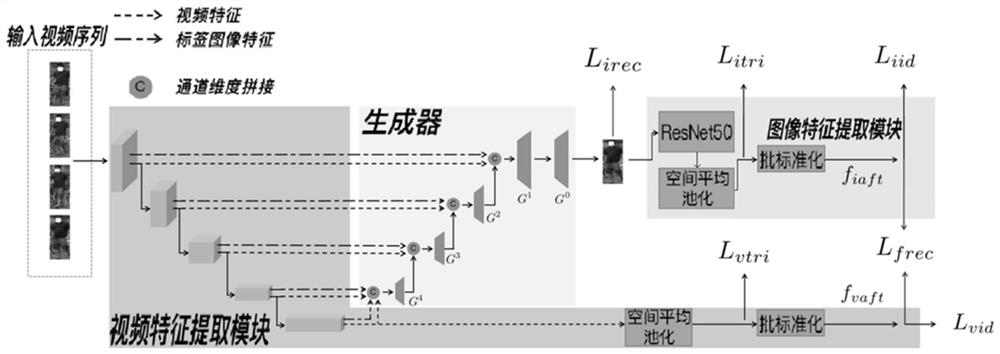

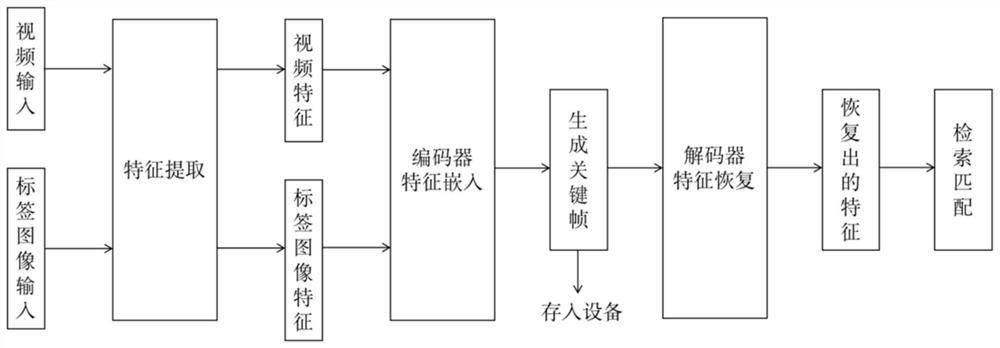

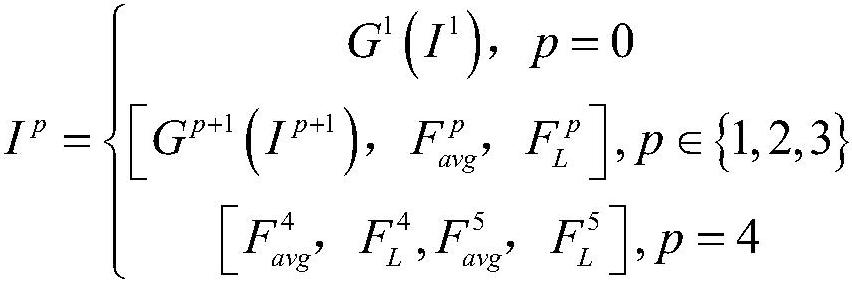

[0047] The present invention is a video sequence encoding and decoding method for video pedestrian re-identification. In the training stage, the tag image feature is fused with the video feature and input to the generator. The tag image then serves as the reconstruction label, and an image reconstruction loss constrains the key frames produced by the generator. The generated key frames are then sent to the image feature extraction module for video feature recovery, and a feature reconstruction loss constrains the recovered video features against the original video features. In the application phase, the HSV-Top-K method is used to select K frames, from which the key frames are generated, and the generated key frames are stored on the device to reduce storage overhead. When retrieval is required, the image feature extraction module restores the video features from the generated key frames, and the recovered features retain the performance of the video features a...
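The two training constraints described in this paragraph can be illustrated with a minimal sketch. It assumes that both the image reconstruction loss and the feature reconstruction loss are mean squared errors and that they are combined as a weighted sum; the text above does not state the norm or the weighting, so `mse`, `training_loss`, and `feature_weight` are hypothetical names chosen for illustration, and images and features are represented as flat lists of floats.

```python
from typing import Sequence

Vector = Sequence[float]


def mse(a: Vector, b: Vector) -> float:
    """Mean squared error between two equal-length, non-empty vectors."""
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")
    if len(a) == 0:
        raise ValueError("inputs must be non-empty")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def training_loss(
    generated_key_frame: Vector,
    tag_image: Vector,
    recovered_feature: Vector,
    video_feature: Vector,
    feature_weight: float = 1.0,  # assumed weighting, not given in the text
) -> float:
    """Combine the two reconstruction constraints into one scalar.

    Assumption: both terms are MSE and are summed with a single weight.
    """
    # Image reconstruction loss: generated key frame vs. tag image.
    image_loss = mse(generated_key_frame, tag_image)
    # Feature reconstruction loss: recovered vs. original video feature.
    feature_loss = mse(recovered_feature, video_feature)
    return image_loss + feature_weight * feature_loss
```

In this sketch the loss is zero only when the generated key frame matches the tag image and the recovered feature matches the original video feature, which mirrors the two goals stated above: image quality of the stored key frames and retention of the video feature's retrieval performance.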
