Feature point elimination method based on correlation

A correlation-based feature point technique, applied in the field of vision guidance. It addresses feature point mismatches, which distort the deviation solution and can cause robot grasping/assembly failures.


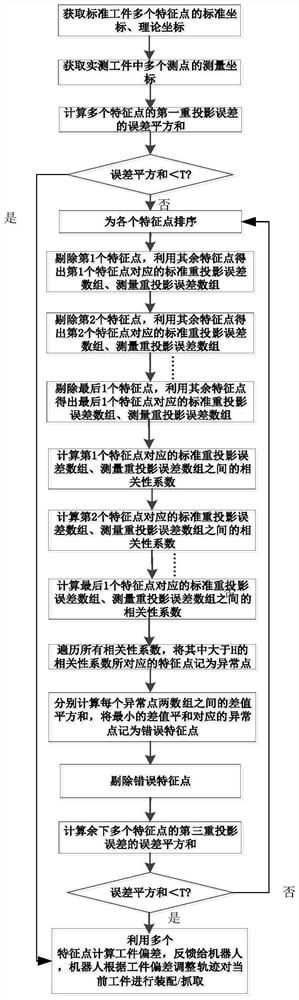


Embodiment Construction

[0048] In this embodiment, the technical solution of the present invention is described in detail, taking car door grasping guidance as an example.

[0049] A correlation-based feature point elimination method is used in visually guided assembly/grasping processes. This embodiment takes grasping guidance for a body-in-white car door component as an example.

[0050] Set the detection position in advance and place the standard car door workpiece at that position. Once robot teaching has confirmed that the robot can grasp the car door correctly, the vision sensor acquires the coordinates of each feature point of the standard car door workpiece; these are recorded as the standard coordinates (image distortion correction has already been applied). The feature points are N measuring points selected in advance on the standard workpiece, with N > 4. The coordinates of each feature point on the workpiece's digital model are recorded as the theoretical coordinates.
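The reference data collected in this setup step can be pictured as one record per feature point holding both coordinate sets. The following is only a minimal sketch under stated assumptions, not the patent's implementation: the names `FeaturePoint` and `build_reference` are hypothetical, and the coordinates are assumed to be 3D, which the text does not specify. It enforces only the N > 4 requirement stated above.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Assumption: coordinates are 3D (x, y, z); the source does not state the dimension.
Point3D = Tuple[float, float, float]


@dataclass(frozen=True)
class FeaturePoint:
    """One pre-selected measuring point on the standard workpiece (hypothetical type)."""
    name: str
    standard: Point3D      # measured by the vision sensor after distortion correction
    theoretical: Point3D   # taken from the workpiece's digital model


def build_reference(points: List[FeaturePoint]) -> List[FeaturePoint]:
    """Validate and return the reference set recorded during robot teaching.

    The only rule checked is the one given in the text: N > 4 feature points.
    """
    if len(points) <= 4:
        raise ValueError(f"need more than 4 feature points, got {len(points)}")
    return list(points)
```

Keeping the standard and theoretical coordinates together in one record means later steps can look up both values for a point by name, whatever form those steps take.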

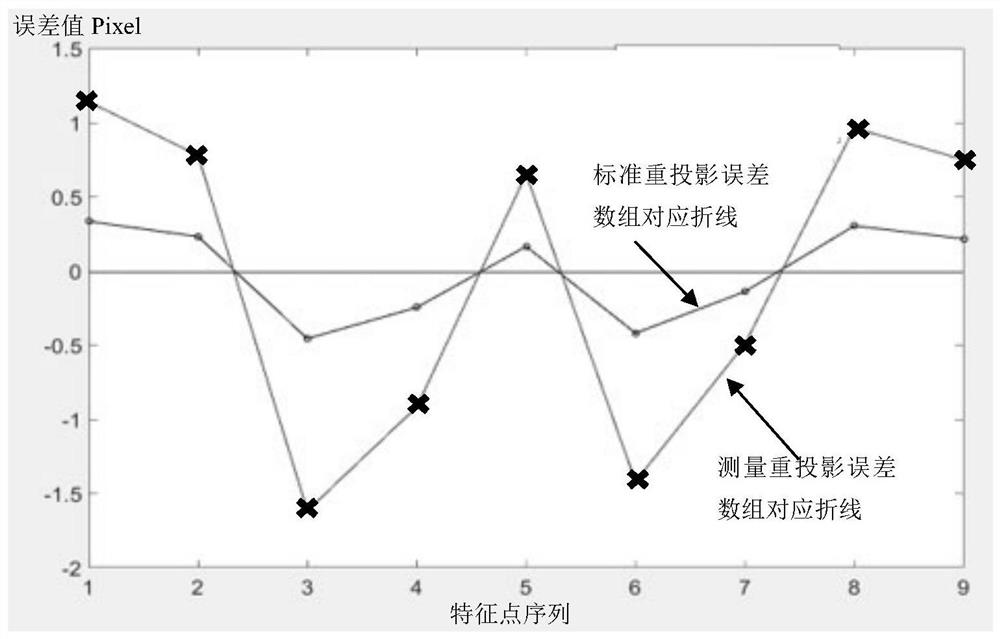

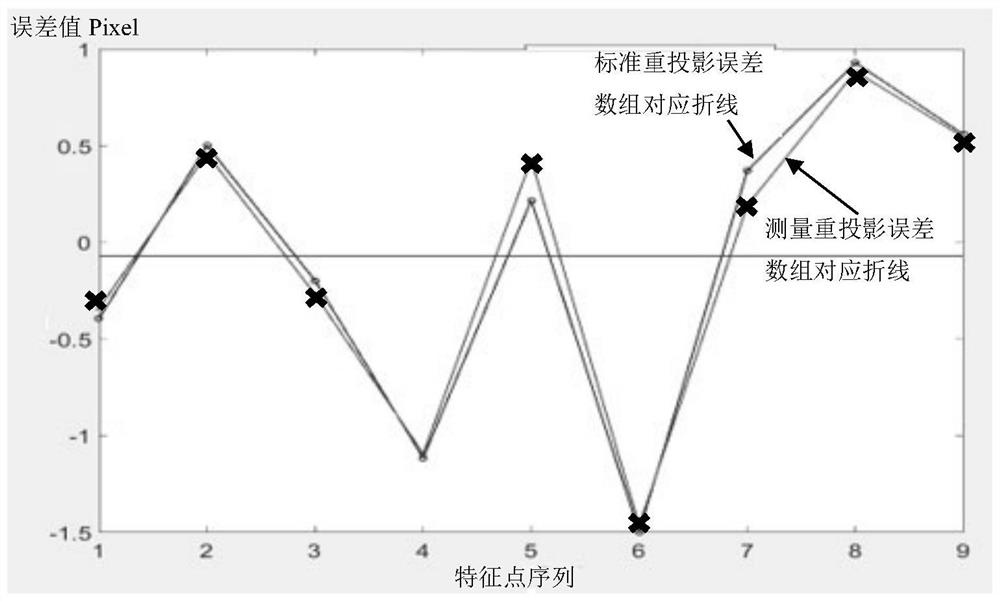

[0051] In...
