Chinese short text classification method based on graph attention network

A Chinese short text classification method based on a graph attention network, belonging to the field of network text classification technology. The method addresses the insufficient information carried by short texts and the difficulty of obtaining features with high classification value, and improves classification accuracy.


Embodiment

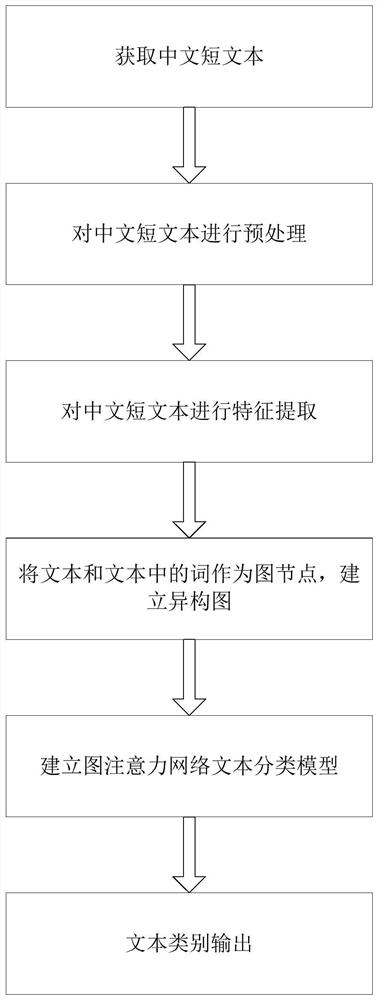

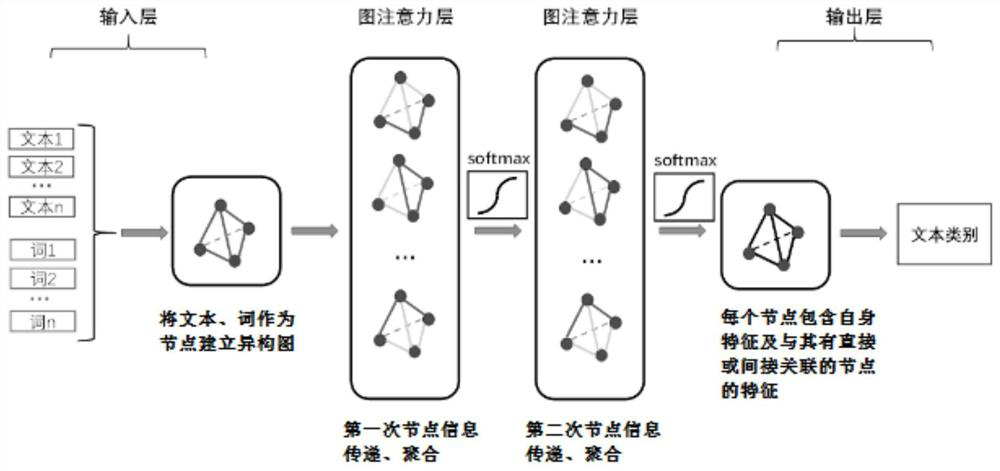

[0054] As shown in Figure 1, the main steps of the Chinese short text classification method based on a graph attention network in the present invention are: text data preprocessing; text feature extraction; building a heterogeneous graph with texts and words as nodes; and feeding the graph into the graph attention network classification model, which performs the classification and outputs the text category.
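The graph-building step above can be illustrated with a minimal sketch. It assumes that each text has already been segmented into words and that a text node is linked to every word node it contains. The excerpt does not state how edges or edge weights are defined, so the unweighted text-word edges and the function name `build_hetero_graph` are illustrative assumptions only.

```python
from typing import List, Set, Tuple


def build_hetero_graph(docs: List[List[str]]) -> Tuple[List[str], Set[Tuple[int, int]]]:
    """Build a text-word heterogeneous graph (illustrative assumption).

    Nodes 0..len(docs)-1 are text nodes; the remaining nodes are word nodes.
    An edge (text_id, word_id) is added when the word occurs in the text.
    """
    vocab = sorted({word for doc in docs for word in doc})
    # Word node ids start right after the text node ids.
    word_id = {word: len(docs) + i for i, word in enumerate(vocab)}

    nodes = [f"doc:{i}" for i in range(len(docs))] + [f"word:{w}" for w in vocab]
    edges: Set[Tuple[int, int]] = set()
    for doc_index, doc in enumerate(docs):
        for word in doc:
            edges.add((doc_index, word_id[word]))
    return nodes, edges


if __name__ == "__main__":
    segmented = [["手机", "好用"], ["手机", "便宜"]]
    nodes, edges = build_hetero_graph(segmented)
    print(nodes)
    print(sorted(edges))
```

In this sketch the two texts become connected through the shared word node for "手机", which is the kind of indirect link a graph attention model can exploit when individual short texts carry little information.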

[0055] The steps are described in detail below:

[0056] Step 1: text data preprocessing

[0057] Text data preprocessing mainly consists of noise information removal, word segmentation, and stop-word processing.
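The three stages can be chained as in the following sketch. The stage functions are pluggable; `char_segment` is a placeholder that splits text into single characters, standing in for a real Chinese word segmenter, and the stop-word set is a hypothetical example. None of these names come from the source.

```python
from typing import Callable, FrozenSet, List


def identity(text: str) -> str:
    """Placeholder noise-removal stage that returns the text unchanged."""
    return text


def char_segment(text: str) -> List[str]:
    """Placeholder segmenter: one token per non-space character.

    A real system would use a dictionary-based Chinese word segmenter here.
    """
    return [ch for ch in text if not ch.isspace()]


def preprocess(
    text: str,
    clean: Callable[[str], str] = identity,
    segment: Callable[[str], List[str]] = char_segment,
    stop_words: FrozenSet[str] = frozenset(),
) -> List[str]:
    """Run noise removal, segmentation, and stop-word filtering in order."""
    tokens = segment(clean(text))
    return [token for token in tokens if token not in stop_words]


if __name__ == "__main__":
    # Hypothetical stop-word list for illustration.
    stops = frozenset({"的", "了"})
    print(preprocess("我买的手机到了", stop_words=stops))
```

Keeping each stage as a separate callable makes it straightforward to swap the placeholder segmenter for a proper one without changing the rest of the pipeline.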

[0058] S1.1 Noise information removal

[0059] The short Chinese texts to be classified are obtained from social platforms and e-commerce platforms, so the text data is likely to contain noise information that is irrelevant to classification, such as user nicknames, URLs, and garbled characters. Regular expressions are used to preprocess the t...
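As a rough illustration of regular-expression noise removal, the sketch below strips URLs, `@`-style user mentions, and characters outside a small allowed set. The actual expressions used by the method are not given in this excerpt, so every pattern and the function name `remove_noise` are assumptions.

```python
import re

# Hypothetical patterns; the source does not specify the actual expressions.
URL_RE = re.compile(r"https?://[A-Za-z0-9./?=&_%#:~+-]+|www\.[A-Za-z0-9./?=&_%#:~+-]+")
# "@nickname" up to the next non-word character. Note that \w also matches
# Chinese characters, so a mention must be followed by a space or punctuation.
MENTION_RE = re.compile(r"@[\w\-]+")
# Anything that is not a CJK ideograph, ASCII letter or digit, common
# punctuation, or whitespace is treated as garbled.
GARBLED_RE = re.compile(r"[^\u4e00-\u9fffA-Za-z0-9，。！？、；：,.!?\s]")
WHITESPACE_RE = re.compile(r"\s+")


def remove_noise(text: str) -> str:
    """Remove URLs, user mentions, and garbled characters, then tidy spaces."""
    text = URL_RE.sub(" ", text)
    text = MENTION_RE.sub(" ", text)
    text = GARBLED_RE.sub(" ", text)
    return WHITESPACE_RE.sub(" ", text).strip()


if __name__ == "__main__":
    raw = "@小明 这个手机很好用 http://t.cn/abc123 \ufffd\ufffd"
    print(remove_noise(raw))  # prints: 这个手机很好用
```

URLs are removed first so that their punctuation is not left behind as stray fragments by the later character filter.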
