Dynamic environment information detection method based on semantic segmentation network and multi-view geometry

A technique in the fields of semantic segmentation and dynamic environments, applied in image enhancement, image analysis, image data processing, and related areas. It addresses the low detection accuracy and robustness of existing methods, improving accuracy and robustness while maintaining high precision and reducing the system's storage and time overhead.

Embodiment

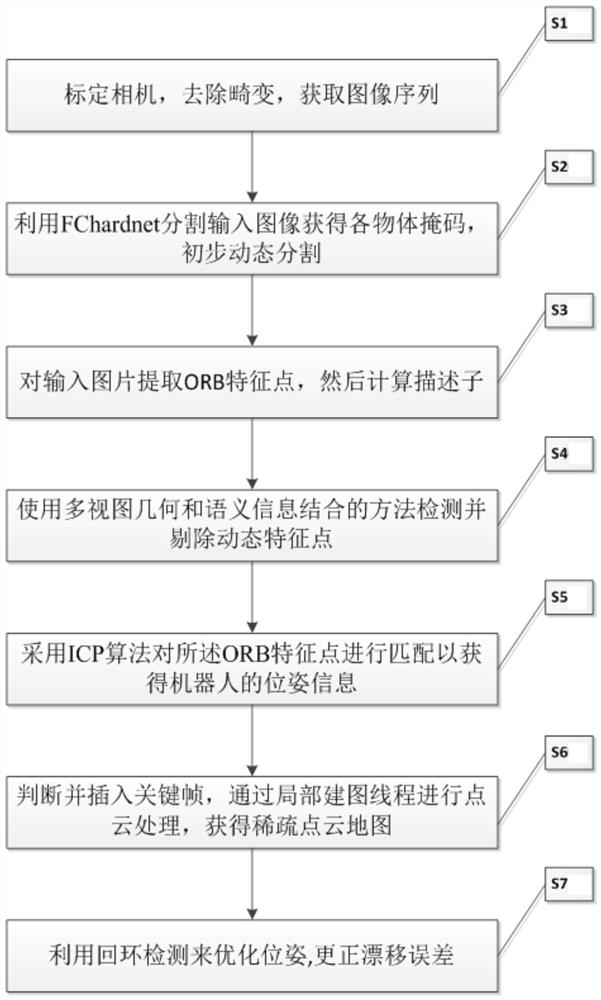

[0052] As shown in Figures 1-3, an embodiment of the dynamic environment information detection method based on a semantic segmentation network and multi-view geometry includes the following steps:

[0053] Step 1: Acquire and input the environment image, and calibrate the camera to remove image distortion. The specific steps are:

[0054] S1.1: First obtain the intrinsic parameters of the camera, $f_x, f_y, c_x, c_y$, and normalize the three-dimensional coordinates $(X, Y, Z)$ to homogeneous coordinates $(x, y)$ on the normalized image plane;
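A minimal Python sketch of S1.1, assuming a pinhole camera model in which normalization means dividing by depth ($x = X/Z$, $y = Y/Z$) and the intrinsics are arranged in the usual 3×3 matrix $K$. The function names `intrinsic_matrix` and `normalize_point` and the example intrinsic values are hypothetical, not taken from the patent.

```python
from typing import List, Tuple


def intrinsic_matrix(fx: float, fy: float, cx: float, cy: float) -> List[List[float]]:
    """Assemble the 3x3 pinhole intrinsic matrix K from f_x, f_y, c_x, c_y."""
    return [
        [fx, 0.0, cx],
        [0.0, fy, cy],
        [0.0, 0.0, 1.0],
    ]


def normalize_point(X: float, Y: float, Z: float) -> Tuple[float, float]:
    """Project a camera-frame 3D point onto the normalized image plane Z = 1."""
    if Z <= 0:
        # Points at or behind the optical center cannot be normalized this way.
        raise ValueError("point must lie in front of the camera (Z > 0)")
    return X / Z, Y / Z


if __name__ == "__main__":
    K = intrinsic_matrix(525.0, 525.0, 319.5, 239.5)  # hypothetical example values
    print(K)
    print(normalize_point(0.4, -0.2, 2.0))  # (0.2, -0.1)
```

Dividing by $Z$ discards depth, which is why the later steps work entirely on the 2D pair $(x, y)$.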

[0055] S1.2: Remove the influence of distortion on the image, where $[k_1, k_2, k_3, p_1, p_2]$ are the lens distortion coefficients and $r$ is the distance from the point to the origin of the coordinate system:

[0056]
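The distortion formula for S1.2 is not reproduced in this text. The sketch below assumes the standard radial-tangential (Brown-Conrady) model that the listed coefficients correspond to, with $r^2 = x^2 + y^2$: $x_d = x(1 + k_1 r^2 + k_2 r^4 + k_3 r^6) + 2p_1 xy + p_2(r^2 + 2x^2)$ and $y_d = y(1 + k_1 r^2 + k_2 r^4 + k_3 r^6) + p_1(r^2 + 2y^2) + 2p_2 xy$. Because this model maps undistorted points to distorted ones, removing distortion from a measured point needs an inverse; the hypothetical `undistort` helper uses simple fixed-point iteration, a common choice but not necessarily the patent's.

```python
from typing import Tuple


def distort(x: float, y: float, k1: float, k2: float, k3: float,
            p1: float, p2: float) -> Tuple[float, float]:
    """Apply radial (k1, k2, k3) and tangential (p1, p2) distortion to a
    normalized point (x, y). Assumed Brown-Conrady form, not from the patent."""
    r2 = x * x + y * y  # r^2: squared distance to the origin
    radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    x_d = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    y_d = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return x_d, y_d


def undistort(x_d: float, y_d: float, k1: float, k2: float, k3: float,
              p1: float, p2: float, iterations: int = 20) -> Tuple[float, float]:
    """Invert distort() by fixed-point iteration (hypothetical helper)."""
    x, y = x_d, y_d  # initial guess: the distorted point itself
    for _ in range(iterations):
        r2 = x * x + y * y
        radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
        # Tangential offsets evaluated at the current estimate.
        dx = 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
        dy = p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
        # Solve x_d = x * radial + dx for x, holding radial and dx fixed.
        x = (x_d - dx) / radial
        y = (y_d - dy) / radial
    return x, y


if __name__ == "__main__":
    coeffs = (-0.28, 0.07, 0.0, 0.001, -0.0005)  # hypothetical k1, k2, k3, p1, p2
    xd, yd = distort(0.3, -0.2, *coeffs)
    print(undistort(xd, yd, *coeffs))  # recovers approximately (0.3, -0.2)
```

The iteration converges quickly for moderate distortion near the image center; strongly distorted wide-angle lenses may need more iterations or a Newton-style solver. Note that OpenCV orders its distortion vector as $(k_1, k_2, p_1, p_2, k_3)$, which differs from the order listed in S1.2.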

[0057] S1.3: Transform the coordinates from the camera coordinate system to the pixel coordinate system:

[0058]
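The S1.3 equation is likewise not reproduced here. Under the pinhole model implied by the intrinsics $f_x, f_y, c_x, c_y$ in S1.1, the usual mapping is $u = f_x x + c_x$ and $v = f_y y + c_y$. The sketch assumes that form (no skew term) and adds its inverse, which is needed whenever a pixel must be traced back to the normalized plane. The names `to_pixel` and `from_pixel` are hypothetical.

```python
from typing import Tuple


def to_pixel(x: float, y: float, fx: float, fy: float,
             cx: float, cy: float) -> Tuple[float, float]:
    """Map normalized camera coordinates (x, y) to pixel coordinates (u, v)."""
    return fx * x + cx, fy * y + cy


def from_pixel(u: float, v: float, fx: float, fy: float,
               cx: float, cy: float) -> Tuple[float, float]:
    """Inverse mapping: pixel (u, v) back to normalized coordinates (x, y)."""
    return (u - cx) / fx, (v - cy) / fy


if __name__ == "__main__":
    fx, fy, cx, cy = 525.0, 525.0, 319.5, 239.5  # hypothetical intrinsics
    u, v = to_pixel(0.2, -0.1, fx, fy, cx, cy)
    print(u, v)
    print(from_pixel(u, v, fx, fy, cx, cy))  # back to approximately (0.2, -0.1)
```

An undistorted image is commonly built by running this chain in reverse for every output pixel: back-project with `from_pixel`, apply the distortion model, re-project, and sample the raw image at that location.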

[0059] Step 2: Segment the input image...