A dynamic caching approach for addressing memory bandwidth efficiency in general-purpose AI processors

A storage bandwidth and dynamic cache technology applied in memory systems, electrical digital data processing, and instruments. It addresses problems such as the inability to meet the needs of new general-purpose AI processors, reducing memory overhead, increasing bandwidth, and improving efficiency.

Embodiment Construction

[0054] The present invention is further described below with reference to the accompanying drawings.

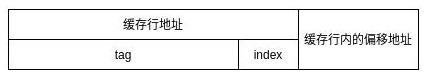

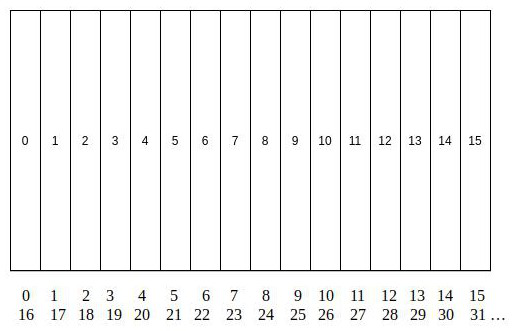

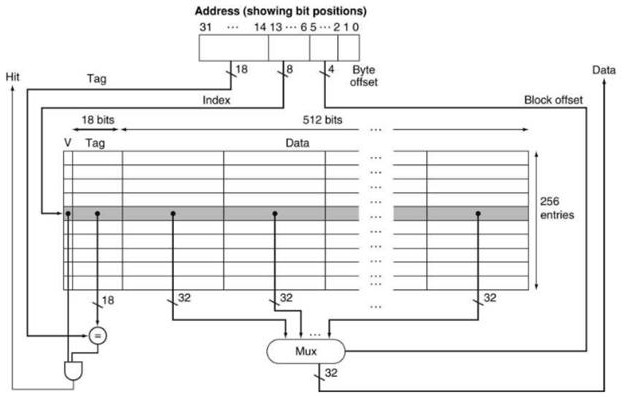

[0055] As shown in Figures 1 to 7, the dynamic caching method for improving the storage bandwidth efficiency of a general-purpose AI processor adds a flag bit C to the cache line to form a data segment cache. A data segment is stored contiguously across the data fields of several conventional cache lines. When reading cached data, the following steps are performed:

[0056] S1. Based on the read instruction issued by the CPU, determine whether the access is a conventional data read or a data segment read. A conventional data read follows the conventional read steps; a data segment read proceeds to S2;

[0057] S2. Determine the position of the data segment in the cache according to the index field in the start address of the data segment;

[0058] S3. Compare the tag of the start address of the data segment with the tag ...
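
The read path in S1 to S3 can be pictured with a small Python model. This is a minimal sketch under stated assumptions, not the patented implementation: the cache geometry (direct-mapped, 256 lines of 64 bytes), the `is_segment_read` flag, the line-aligned `fill_segment` helper, and the requirement that C be set on a segment hit are all hypothetical. Because the excerpt breaks off in S3 after the tag comparison, the sketch stops there and only reports whether the segment's first line hits.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical geometry (not given in the patent excerpt):
# a direct-mapped cache with 256 lines of 64 bytes each.
LINE_BYTES = 64
NUM_LINES = 256
OFFSET_BITS = LINE_BYTES.bit_length() - 1  # 6 offset bits
INDEX_BITS = NUM_LINES.bit_length() - 1    # 8 index bits


@dataclass
class CacheLine:
    valid: bool = False
    tag: int = 0
    c: bool = False  # flag bit C: set when the line holds part of a data segment
    data: bytearray = field(default_factory=lambda: bytearray(LINE_BYTES))


def split_address(addr: int) -> tuple[int, int, int]:
    """Split an address into its (tag, index, offset) fields."""
    offset = addr & (LINE_BYTES - 1)
    index = (addr >> OFFSET_BITS) & (NUM_LINES - 1)
    tag = addr >> (OFFSET_BITS + INDEX_BITS)
    return tag, index, offset


def fill_segment(cache: list[CacheLine], start_addr: int, payload: bytes) -> None:
    """Hypothetical helper: store a segment contiguously across consecutive
    lines, marking each one with C. Assumes a line-aligned start address."""
    assert start_addr % LINE_BYTES == 0, "sketch assumes line-aligned segments"
    for i in range(0, len(payload), LINE_BYTES):
        tag, index, _ = split_address(start_addr + i)
        chunk = payload[i:i + LINE_BYTES].ljust(LINE_BYTES, b"\0")
        cache[index] = CacheLine(valid=True, tag=tag, c=True, data=bytearray(chunk))


def conventional_read(cache: list[CacheLine], addr: int) -> Optional[int]:
    """Ordinary direct-mapped lookup: one byte on a hit, None on a miss."""
    tag, index, offset = split_address(addr)
    line = cache[index]
    return line.data[offset] if line.valid and line.tag == tag else None


def segment_lookup(cache: list[CacheLine], start_addr: int) -> Optional[int]:
    """S2 and S3: locate the segment via the index field of its start address,
    then compare tags. Returns the first line's index on a hit, else None."""
    tag, index, _ = split_address(start_addr)  # S2: index field -> position
    line = cache[index]
    # S3: tag comparison; also requiring C to be set is an assumption of this sketch.
    if line.valid and line.c and line.tag == tag:
        return index
    return None


def s1_dispatch(cache: list[CacheLine], addr: int, is_segment_read: bool):
    """S1: route a CPU read to the conventional path or to S2/S3.
    `is_segment_read` stands in for however the instruction encodes this."""
    if is_segment_read:
        return ("segment", segment_lookup(cache, addr))
    return ("conventional", conventional_read(cache, addr))


if __name__ == "__main__":
    cache = [CacheLine() for _ in range(NUM_LINES)]
    fill_segment(cache, 0x12340, bytes(range(100)))  # spans lines 141 and 142
    print(s1_dispatch(cache, 0x12340, True))   # ('segment', 141)
    print(s1_dispatch(cache, 0x12345, False))  # ('conventional', 5)
```

In this model only the first line of a segment is looked up by index and tag; the remaining lines are found by position, which is what lets one lookup stand in for several conventional ones. How the hardware then streams the following lines is not covered by the excerpt.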

© 2025 PatSnap. All rights reserved.