Infrared ship video tracking method based on convolutional neural network

The invention applies convolutional neural network technology to infrared ship video tracking. It addresses the scarcity of infrared target tracking algorithms and the lack of infrared target tracking datasets.

Embodiment Construction

[0018] An embodiment of the present invention provides an infrared ship video tracking method based on a convolutional neural network. The invention is explained below with reference to the accompanying drawings:

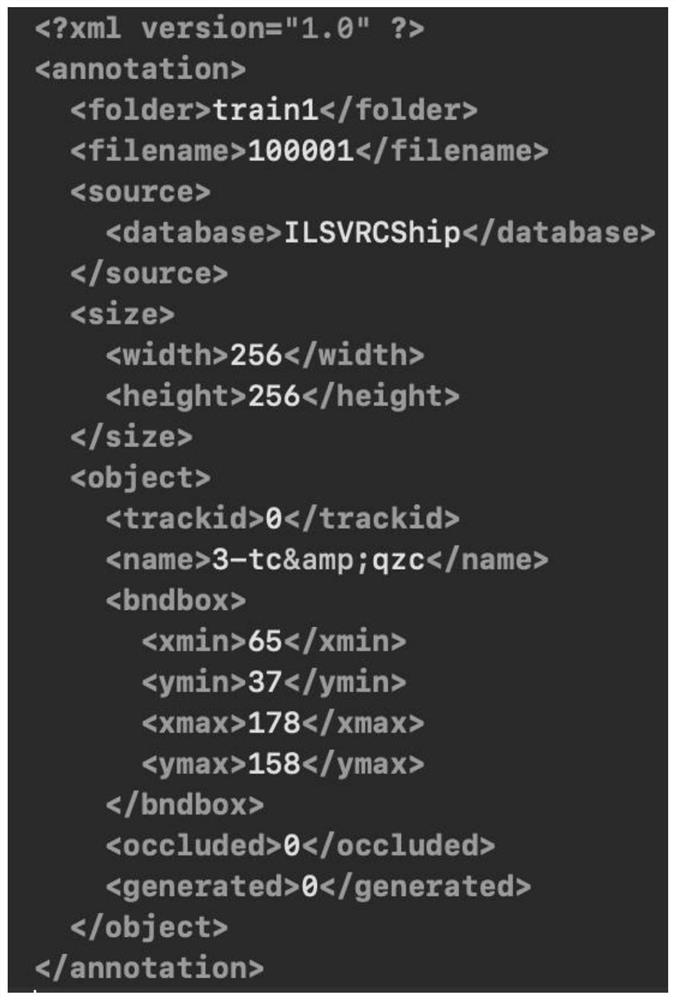

[0019] The data are prepared as follows. A program extracts every frame of the infrared video (Fig. 1(a)); each frame has 3 channels, pixel values in [0, 255], and a size of 256×256. The ship target in each frame is then annotated with a bounding box using the LabelMe software, with the box fitting the ship as tightly as possible (Fig. 1(b)). Each annotation is saved as an XML file (Fig. 1(c)) with the same name as the corresponding frame image, and it must contain the coordinates of the upper-left and lower-right corners of the target box.
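As an illustration of how such label files might be consumed, the following minimal sketch reads one annotation and returns the upper-left and lower-right corners of the target box. The source does not give the XML schema, so the Pascal VOC-style layout (`object/bndbox` with `xmin`, `ymin`, `xmax`, `ymax`), the sample file names, and the helper names `parse_box` and `label_matches_frame` are all assumptions made for this example.

```python
import xml.etree.ElementTree as ET
from pathlib import Path

FRAME_SIZE = 256  # frame width and height stated in the text


def parse_box(xml_text):
    """Return (xmin, ymin, xmax, ymax) for the first labeled object.

    Assumes a Pascal VOC-style layout (<object><bndbox>...); the actual
    schema of the label files is not given in the source.
    """
    root = ET.fromstring(xml_text)
    bndbox = root.find("./object/bndbox")
    if bndbox is None:
        raise ValueError("no bounding box found in annotation")
    xmin, ymin, xmax, ymax = (
        int(float(bndbox.findtext(tag)))
        for tag in ("xmin", "ymin", "xmax", "ymax")
    )
    # The upper-left corner must precede the lower-right corner,
    # and both must lie inside the 256x256 frame.
    if not (0 <= xmin < xmax <= FRAME_SIZE and 0 <= ymin < ymax <= FRAME_SIZE):
        raise ValueError(f"box {(xmin, ymin, xmax, ymax)} lies outside the frame")
    return xmin, ymin, xmax, ymax


def label_matches_frame(frame_path, label_path):
    """Check that the label file shares its base name with the frame image."""
    return Path(frame_path).stem == Path(label_path).stem


# Hypothetical annotation for a single frame (values are illustrative only).
SAMPLE_XML = """<annotation>
  <filename>frame_0001.png</filename>
  <object>
    <name>ship</name>
    <bndbox><xmin>96</xmin><ymin>120</ymin><xmax>180</xmax><ymax>150</ymax></bndbox>
  </object>
</annotation>"""

box = parse_box(SAMPLE_XML)
```

Checking the base names with `label_matches_frame` before training is a cheap way to catch frames that were extracted but never labeled, or labels saved under the wrong name.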

[0020] The embodiment of the present invention proceeds as follows:

[0021] Step 1: Based on the feature extraction network of SiamRPN, add a multi-layer fusion network. The featu...
