A Message Passing Method Based on Shared Memory

A shared-memory-based message passing technology, applied in the field of message passing over shared memory. It addresses problems such as increased message delay, and it reduces message delay, speeds up message transmission, and improves communication efficiency.

Examples

Embodiment 1

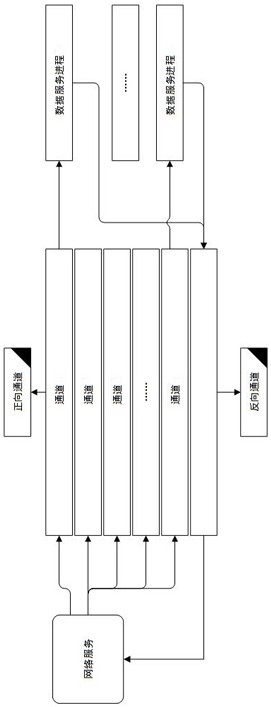

[0030] As shown in Figure 1, a message delivery method based on shared memory uses the shared memory as the data storage carrier to provide fast message delivery services to both communicating parties;

[0031] The server's business process invokes the messaging service by calling its API;
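The following minimal Python sketch makes the shared-memory carrier and the API call concrete. It is not the patented implementation. It uses the standard library's `multiprocessing.shared_memory` module. The `MessageService` class, its `send`/`receive`/`close` methods, and the length-prefixed layout are hypothetical illustrations.

```python
from multiprocessing import shared_memory


class MessageService:
    """Hypothetical API a server business process could call.

    The shared-memory segment is the only data carrier: both parties
    exchange bytes through it rather than through sockets or pipes.
    """

    def __init__(self, name=None, size=4096):
        if name is None:
            # Create a new segment; this handle owns it and unlinks it on close.
            self.shm = shared_memory.SharedMemory(create=True, size=size)
            self.owner = True
        else:
            # Attach to an existing segment created by the other party.
            self.shm = shared_memory.SharedMemory(name=name)
            self.owner = False

    @property
    def name(self):
        return self.shm.name

    def send(self, payload: bytes) -> None:
        # Assumed layout: 4-byte little-endian length, then the payload bytes.
        n = len(payload)
        if 4 + n > self.shm.size:
            raise ValueError("payload too large for the segment")
        self.shm.buf[4:4 + n] = payload
        self.shm.buf[0:4] = n.to_bytes(4, "little")

    def receive(self) -> bytes:
        n = int.from_bytes(self.shm.buf[0:4], "little")
        return bytes(self.shm.buf[4:4 + n])

    def close(self) -> None:
        self.shm.close()
        if self.owner:
            self.shm.unlink()
```

In practice the peer would run in a separate process and attach by segment name. Both handles can live in one process, which keeps the example short.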

[0032] The shared memory is encapsulated into message channels, and each message channel carries a mark indicating whether it currently holds data;
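A marked channel can be sketched over a plain byte buffer. In a deployment the buffer would be the shared-memory segment itself (for example `SharedMemory.buf`). The layout below (one mark byte, a 4-byte length, then the payload) and the names `Channel` and `make_channels` are assumptions for illustration.

```python
import struct

MARK_EMPTY, MARK_FULL = 0, 1
HEADER_SIZE = 5  # assumed: 1-byte mark + 4-byte little-endian payload length


class Channel:
    """One message channel carved out of a shared buffer (hypothetical layout)."""

    def __init__(self, buf: memoryview, offset: int, capacity: int):
        self.buf = buf
        self.offset = offset
        self.capacity = capacity

    def has_data(self) -> bool:
        return self.buf[self.offset] == MARK_FULL

    def write(self, payload: bytes) -> bool:
        if self.has_data() or len(payload) > self.capacity:
            return False  # channel still occupied, or message too large
        start = self.offset + HEADER_SIZE
        self.buf[start:start + len(payload)] = payload
        struct.pack_into("<I", self.buf, self.offset + 1, len(payload))
        # Set the mark only after the data is in place. This sketch does not
        # handle cross-process memory ordering; a real version needs barriers.
        self.buf[self.offset] = MARK_FULL
        return True

    def read(self):
        if not self.has_data():
            return None
        (n,) = struct.unpack_from("<I", self.buf, self.offset + 1)
        start = self.offset + HEADER_SIZE
        data = bytes(self.buf[start:start + n])
        self.buf[self.offset] = MARK_EMPTY  # release the channel for the next writer
        return data


def make_channels(buf: memoryview, count: int, capacity: int):
    """Split one shared region into `count` fixed-size channels."""
    stride = HEADER_SIZE + capacity
    return [Channel(buf, i * stride, capacity) for i in range(count)]
```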

[0033] Message reads and writes use a batch read/write function, so that multiple pieces of data are read or written in a single operation;
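One way to move several messages in a single operation is to frame them into one contiguous block and copy that block with a single slice assignment. The sketch below assumes a count-plus-length framing. The helpers `pack_batch`, `unpack_batch`, `batch_write`, and `batch_read` are hypothetical, not the source's batch function.

```python
import struct


def pack_batch(messages) -> bytes:
    """Frame messages as: 4-byte count, then (4-byte length, bytes) per message."""
    parts = [struct.pack("<I", len(messages))]
    for m in messages:
        parts.append(struct.pack("<I", len(m)))
        parts.append(m)
    return b"".join(parts)


def unpack_batch(block) -> list:
    """Parse a block produced by pack_batch back into a list of messages."""
    (count,) = struct.unpack_from("<I", block, 0)
    pos = 4
    out = []
    for _ in range(count):
        (n,) = struct.unpack_from("<I", block, pos)
        pos += 4
        out.append(bytes(block[pos:pos + n]))
        pos += n
    return out


def batch_write(buf: bytearray, offset: int, messages) -> int:
    """Copy a whole batch into the shared buffer in one slice assignment."""
    block = pack_batch(messages)
    if offset + len(block) > len(buf):
        raise ValueError("batch does not fit in the shared buffer")
    buf[offset:offset + len(block)] = block
    return len(block)


def batch_read(buf: bytearray, offset: int) -> list:
    """Read every message of a batch starting at `offset` in one pass."""
    return unpack_batch(memoryview(buf)[offset:])
```

Batching amortizes the per-operation overhead of each access (for example, checking a mark or taking a lock) over many messages instead of paying it once per message.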

[0034] When a message is read, the mark of each channel is checked to determine whether the channel currently holds data: if it does, the data is read; if not, the channel is skipped and the mark of the next channel is checked;
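The read loop above can be sketched as a single polling pass over all channels. Here each channel is modeled as an in-memory object whose `has_data` flag plays the role of the mark. The `Channel` dataclass and `poll_once` function are hypothetical stand-ins for the shared-memory structures.

```python
from dataclasses import dataclass, field


@dataclass
class Channel:
    """Hypothetical stand-in for one shared-memory channel."""
    has_data: bool = False  # the channel's mark
    messages: list = field(default_factory=list)


def poll_once(channels):
    """One pass over all channels: read marked channels, skip empty ones."""
    received = []
    for index, ch in enumerate(channels):
        if not ch.has_data:
            continue  # mark says empty: move on without touching the payload
        received.extend((index, m) for m in ch.messages)
        ch.messages.clear()
        ch.has_data = False  # clear the mark once the data is consumed
    return received
```

Checking only the mark means an empty channel costs a single flag read. The reader therefore spends its time on channels that actually have data.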

[0035] For scenarios where messages must not be lost, messages that cannot be processed in time and must not be discarded are sent to the distr...

Embodiment 2

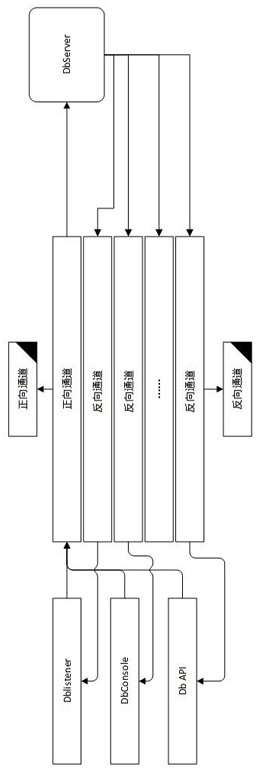

[0061] Embodiment 2 differs from Embodiment 1 in that, as shown in Figure 2, it applies the present invention to service communication in a NoSQL database. The specific workflow is as follows:

[0062] A client can be a listener for cross-network services, a console accessed from the same host, or a program API accessed from the same host. When each client initializes, it sets up its own read channel according to its client ID.
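The source does not say how a client ID maps to a read channel. The sketch below assumes a fixed layout in which a client's read channel starts at `client_id * channel_size`, so that client and server derive the same location independently. `ChannelLayout`, `Client`, and the zero-initialization step are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChannelLayout:
    """Hypothetical fixed layout: one read channel per client ID."""
    channel_size: int
    max_clients: int

    def read_channel_offset(self, client_id: int) -> int:
        # Client and server compute the same offset from the ID alone,
        # so no extra handshake is needed to agree on where replies go.
        if not 0 <= client_id < self.max_clients:
            raise ValueError(f"client id {client_id} out of range")
        return client_id * self.channel_size


class Client:
    def __init__(self, client_id: int, layout: ChannelLayout, region: bytearray):
        self.client_id = client_id
        self.offset = layout.read_channel_offset(client_id)
        self.size = layout.channel_size
        # Initialize (zero) this client's own read channel, which also clears its mark.
        region[self.offset:self.offset + self.size] = bytes(self.size)
```

For example, a listener with ID 0 and a console with ID 2 would each own a separate slice of the same shared region.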

[0063] The server receives a data request from a channel and, after processing it, writes the response back to the channel of the corresponding client.
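The request/response cycle above can be sketched as follows. Each request carries the sender's client ID, and the server uses that ID to choose the reply channel. The `Channel` class, the `serve_once` function, the `(client_id, message)` request format, and the `handler` callback (standing in for the actual NoSQL processing) are all assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass
class Channel:
    """Hypothetical stand-in for one shared-memory channel; has_data is its mark."""
    has_data: bool = False
    payload: Any = None

    def put(self, item) -> bool:
        if self.has_data:
            return False  # previous message not yet consumed
        self.payload, self.has_data = item, True
        return True

    def take(self):
        if not self.has_data:
            return None
        item = self.payload
        self.payload, self.has_data = None, False
        return item


def serve_once(request: Channel, replies: Dict[int, Channel],
               handler: Callable[[bytes], bytes]) -> bool:
    """Read one request, process it, and write the result to the sender's channel."""
    item = request.take()
    if item is None:
        return False  # nothing to do on this pass
    client_id, message = item
    return replies[client_id].put(handler(message))
```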

[0064] In Figure 2, Dblistener is the database listener, DbConsole is the database console, Db API is the database application programming interface, and DbServer is the database server.

[0065] The shared-memory-based message transmission method of the present invention solves the technical problem of high-speed data transmission between p...
