Video abstract generation method fusing local target features and global features

A technology combining local target features and global features, applied in neural learning methods, computer components, biological neural network models, etc. It addresses problems such as the limited visual expressiveness of representational features, the neglect of local target features, and the neglect of interactions between targets, and yields detail-rich, expressive representations with improved performance.


Detailed Description of Embodiments

[0018] The implementation of the present invention will be described in detail below in conjunction with the drawings and examples.

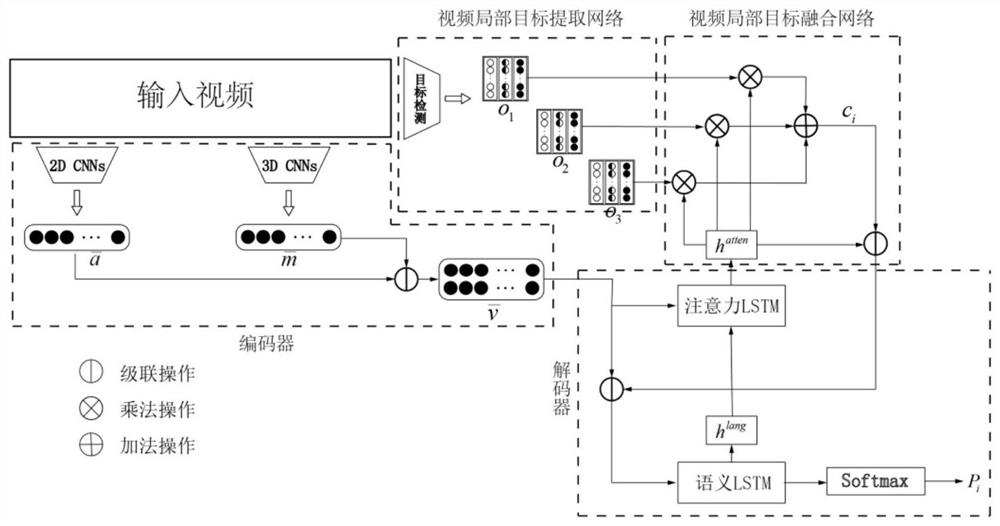

[0019] As shown in Figure 1, the present invention is a method for generating a video abstract that fuses local target features and global features, comprising:

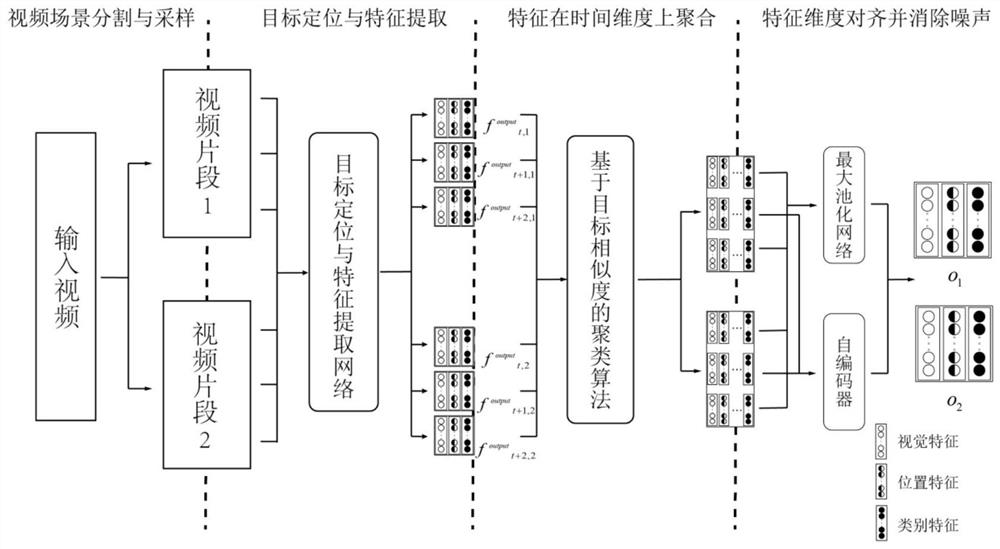

[0020] Step 1: Extract the local target features of the video.

[0021] The local target features comprise the visual features, the trajectory features, and the category label features of each target. Referring to Figure 2, the extraction of local target features specifically comprises:

[0022] Step 1.1: Segment the original video data by scene and sample frames from each segment to obtain a set of images.

[0023] Because a video usually contains multiple scenes, and objects in different scenes have no temporal relationship with one another, the presence of multiple complex scenes is a major obstacle to applying image-based object detection models to video. The ...
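The text above does not say how scene boundaries are detected or how many frames are sampled per scene, so the following is only a minimal sketch of Step 1.1 under stated assumptions. It swaps in a common shot-boundary heuristic (L1 distance between normalized per-channel color histograms of consecutive frames) and then samples frames evenly within each detected scene. The function names `frame_histogram`, `split_scenes`, and `sample_frames`, and the parameters `threshold`, `bins`, and `per_scene`, are hypothetical and not taken from the patent.

```python
import numpy as np


def frame_histogram(frame: np.ndarray, bins: int = 16) -> np.ndarray:
    """Normalized per-channel color histogram of an H x W x C uint8 frame (sums to 1)."""
    hists = [
        np.histogram(frame[..., c], bins=bins, range=(0, 256))[0]
        for c in range(frame.shape[-1])
    ]
    h = np.concatenate(hists).astype(np.float64)
    return h / h.sum()


def split_scenes(frames, threshold: float = 0.5, bins: int = 16):
    """Split a frame sequence into scenes (assumed heuristic, not the patent's method).

    A new scene starts wherever the L1 distance between consecutive normalized
    histograms (a value in [0, 2]) exceeds `threshold`.
    Returns a list of (start, end) index pairs with `end` exclusive.
    """
    if not frames:
        return []
    boundaries = [0]
    prev = frame_histogram(frames[0], bins)
    for i in range(1, len(frames)):
        cur = frame_histogram(frames[i], bins)
        if np.abs(cur - prev).sum() > threshold:
            boundaries.append(i)
        prev = cur
    boundaries.append(len(frames))
    return list(zip(boundaries[:-1], boundaries[1:]))


def sample_frames(scenes, per_scene: int = 3):
    """Evenly sample up to `per_scene` frame indices from each scene."""
    picks = []
    for start, end in scenes:
        n = min(per_scene, end - start)
        idx = np.linspace(start, end - 1, num=n).round().astype(int)
        picks.append(sorted(set(idx.tolist())))
    return picks
```

For example, a synthetic clip of four black frames followed by four white frames is split into two scenes, `[(0, 4), (4, 8)]`, and each scene then contributes three evenly spaced frames to the image set passed to the object detector. Sampling per scene, rather than over the whole video, keeps every scene represented, which matches the motivation above that objects in different scenes should not be treated as temporally related.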
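Paragraph [0021] names three components of a local target feature (visual, trajectory, and category label features) but, in this excerpt, not how they are encoded or combined. The sketch below shows one plausible data layout, assuming that each target has an appearance vector from a detector, one bounding box per sampled frame, and an integer class index. It also assumes that the three parts are simply concatenated. The class `TargetTrack`, the functions `trajectory_feature` and `local_target_feature`, and the 6-dimensional trajectory encoding (first center, last center, mean per-frame displacement) are all hypothetical illustrations.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class TargetTrack:
    """Hypothetical per-target record; field layout is an assumption."""
    visual: np.ndarray  # appearance feature of the target, shape (D,)
    boxes: np.ndarray   # (T, 4) boxes as (x1, y1, x2, y2) in pixels, one per sampled frame
    label: int          # category index predicted by the detector


def trajectory_feature(boxes: np.ndarray, width: float, height: float) -> np.ndarray:
    """Fixed-size trajectory encoding (assumed): first center, last center, mean step."""
    cx = (boxes[:, 0] + boxes[:, 2]) / 2.0 / width   # normalized x centers
    cy = (boxes[:, 1] + boxes[:, 3]) / 2.0 / height  # normalized y centers
    centers = np.stack([cx, cy], axis=1)             # shape (T, 2)
    if len(centers) > 1:
        mean_step = np.diff(centers, axis=0).mean(axis=0)
    else:
        mean_step = np.zeros(2)
    return np.concatenate([centers[0], centers[-1], mean_step])


def local_target_feature(track: TargetTrack, width: float, height: float,
                         num_classes: int) -> np.ndarray:
    """Concatenate visual, trajectory, and one-hot label features (assumed fusion)."""
    one_hot = np.zeros(num_classes)
    one_hot[track.label] = 1.0
    return np.concatenate([
        track.visual.astype(np.float64),
        trajectory_feature(track.boxes, width, height),
        one_hot,
    ])
```

With a 4-dimensional visual vector and 3 categories, each target maps to a 13-dimensional vector. A fixed-size trajectory encoding is used here only so that targets tracked over different numbers of frames yield vectors of equal length. A learned encoder over the full box sequence would be an equally valid choice.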

