Multi-agent cluster obstacle avoidance method based on reinforcement learning

A reinforcement-learning and multi-agent technique applied in two-dimensional position/course control, control of non-electric variables, instruments, and related fields. It addresses the problem that existing obstacle avoidance algorithms cannot let agents avoid obstacles quickly, and achieves high obstacle avoidance efficiency.

Detailed Description of the Embodiments

[0108] The technical solution of the present invention will be further described in detail below in conjunction with the accompanying drawings, but the protection scope of the present invention is not limited to the following description.

[0109] To meet the obstacle avoidance requirements of an agent cluster executing tasks in an obstacle environment, the invention combines the Flocking cooperative control algorithm with the Q-learning algorithm and proposes an autonomous, cooperative obstacle avoidance method for multiple agents in complex obstacle environments. During learning, an agent does not need to learn from its neighbors' historical experience, which helps speed up training of the multi-agent cluster. Specifically:
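The point that each agent learns without drawing on its neighbors' historical experience can be illustrated with independent tabular Q-learning, in which every agent keeps its own Q-table and updates it only from its own transitions using the standard one-step rule Q(s,a) ← Q(s,a) + α[r + γ·max Q(s',·) − Q(s,a)]. This is a minimal sketch under stated assumptions: the class name `IndependentQLearner`, the integer state/action indexing, the epsilon-greedy policy, and all hyperparameter values are hypothetical, and the patent's actual state design, reward, and coupling to the Flocking controller are not shown in this excerpt.

```python
import random
from typing import List


class IndependentQLearner:
    """Tabular Q-learning agent that learns only from its own transitions.

    Hypothetical sketch: state/action indexing and hyperparameters are
    illustrative assumptions, not values taken from the patent.
    """

    def __init__(self, n_states: int, n_actions: int, alpha: float = 0.1,
                 gamma: float = 0.9, epsilon: float = 0.1, seed: int = 0) -> None:
        self.q: List[List[float]] = [[0.0] * n_actions for _ in range(n_states)]
        self.n_actions = n_actions
        self.alpha = alpha      # learning rate
        self.gamma = gamma      # discount factor
        self.epsilon = epsilon  # exploration probability
        self.rng = random.Random(seed)

    def act(self, state: int) -> int:
        # Epsilon-greedy: explore with probability epsilon, else take the greedy action.
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(self.n_actions)
        row = self.q[state]
        return max(range(self.n_actions), key=lambda a: row[a])

    def update(self, s: int, a: int, r: float, s_next: int, done: bool) -> None:
        # One-step Q-learning target built from this agent's own experience only;
        # no neighbour Q-tables or replay data are consulted.
        target = r if done else r + self.gamma * max(self.q[s_next])
        self.q[s][a] += self.alpha * (target - self.q[s][a])


# Usage: each member of a (hypothetical) five-agent cluster gets its own table.
cluster = [IndependentQLearner(n_states=16, n_actions=4, seed=i) for i in range(5)]
```

Because no agent reads another agent's table, the per-step learning cost does not grow with the number of neighbors; any cooperation in this sketch would have to come from the Flocking control layer rather than from shared experience.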

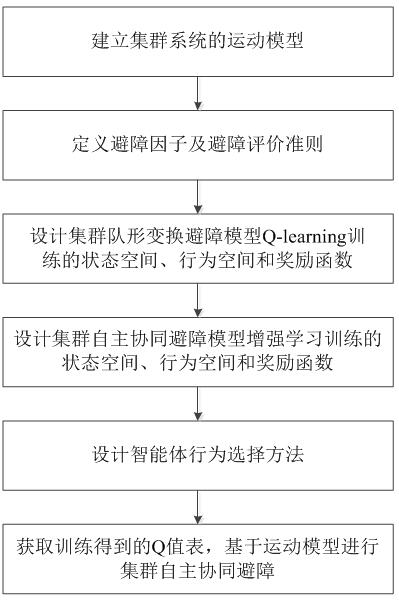

[0110] As shown in Figure 1, a reinforcement-learning-based obstacle avoidance method for a multi-agent cluster includes the following steps:

[0111] S1. Establish the motion model of the swarm system:

[0112]...
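The motion model itself is elided in this excerpt. Flocking controllers are commonly built on a second-order (double-integrator) model, ṗ_i = v_i and v̇_i = u_i, with the control input u_i formed from separation, cohesion, and alignment terms over neighbors within a sensing radius. The sketch below assumes exactly that model and a Reynolds-style input; the names `Agent`, `flocking_input`, and `step`, the gains, the sensing radius `r`, the desired spacing `d`, and the time step `dt` are all hypothetical and are not taken from the patent.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec = Tuple[float, float]


@dataclass
class Agent:
    p: Vec  # position (x, y)
    v: Vec  # velocity (vx, vy)


def flocking_input(i: int, agents: List[Agent], r: float = 2.0, d: float = 1.0,
                   k_sep: float = 1.0, k_coh: float = 0.5,
                   k_ali: float = 0.5) -> Vec:
    """Reynolds-style control input u_i for agent i (hypothetical gains).

    Only neighbours within sensing radius r contribute; d is the desired spacing.
    """
    px, py = agents[i].p
    vx, vy = agents[i].v
    sep_x = sep_y = coh_x = coh_y = ali_x = ali_y = 0.0
    n = 0
    for j, other in enumerate(agents):
        dx, dy = other.p[0] - px, other.p[1] - py
        dist = math.hypot(dx, dy)
        if j == i or dist == 0.0 or dist > r:
            continue  # skip self, coincident agents, and non-neighbours
        n += 1
        if dist < d:
            # Separation: push away in proportion to how far inside d the neighbour is.
            sep_x -= (d - dist) * dx / dist
            sep_y -= (d - dist) * dy / dist
        coh_x += dx  # cohesion: accumulate offsets toward neighbours
        coh_y += dy
        ali_x += other.v[0] - vx  # alignment: accumulate velocity mismatch
        ali_y += other.v[1] - vy
    if n == 0:
        return (0.0, 0.0)
    return (k_sep * sep_x + (k_coh * coh_x + k_ali * ali_x) / n,
            k_sep * sep_y + (k_coh * coh_y + k_ali * ali_y) / n)


def step(agents: List[Agent], dt: float = 0.1) -> List[Agent]:
    """Advance the double-integrator model p' = v, v' = u by one time step."""
    # Compute all inputs from the current state first (synchronous update).
    inputs = [flocking_input(i, agents) for i in range(len(agents))]
    new_agents = []
    for agent, (ux, uy) in zip(agents, inputs):
        vx, vy = agent.v[0] + dt * ux, agent.v[1] + dt * uy
        # Semi-implicit Euler: position uses the freshly updated velocity.
        new_agents.append(Agent(p=(agent.p[0] + dt * vx, agent.p[1] + dt * vy),
                                v=(vx, vy)))
    return new_agents
```

For two agents placed 0.5 apart with `d = 1.0`, the separation term dominates and the inputs push them apart. Obstacle avoidance would enter as a further contribution to u_i (in this method, presumably via the Q-learning layer), but how the two are combined is not given in this excerpt.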
