155 results about "Augmented learning" patented technology

Augmented learning is an on-demand learning technique in which the environment adapts to the learner. Because remediation is provided on demand, learners can gain a greater understanding of a topic, and the process stimulates discovery and learning.

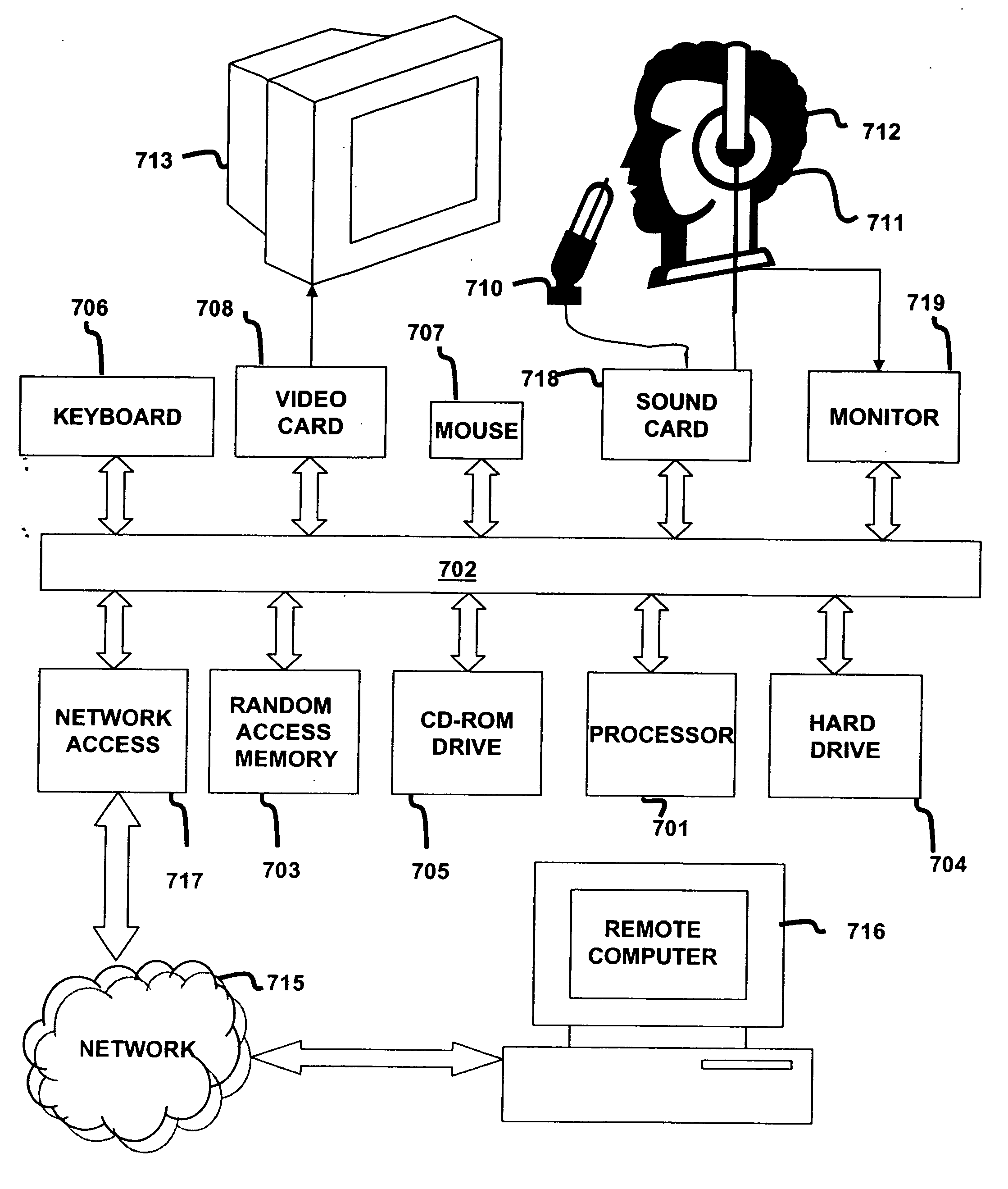

Audio-visual learning system

Inactive | US20130177891A1 | Easy to learn; Electrical appliances; Augmented learning; Foreign language speaking

Owner:HAMMERSCHMIDT JOACHIM

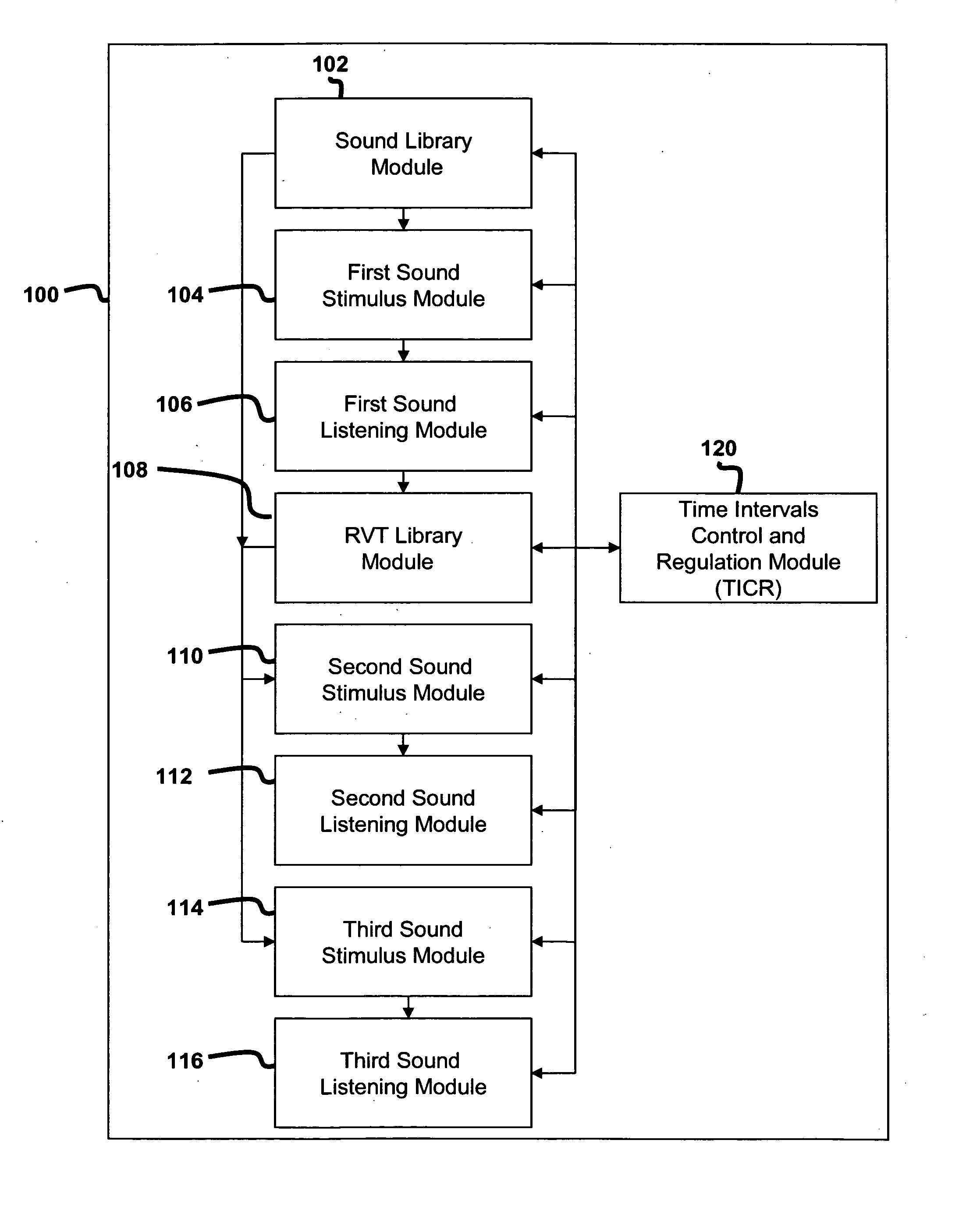

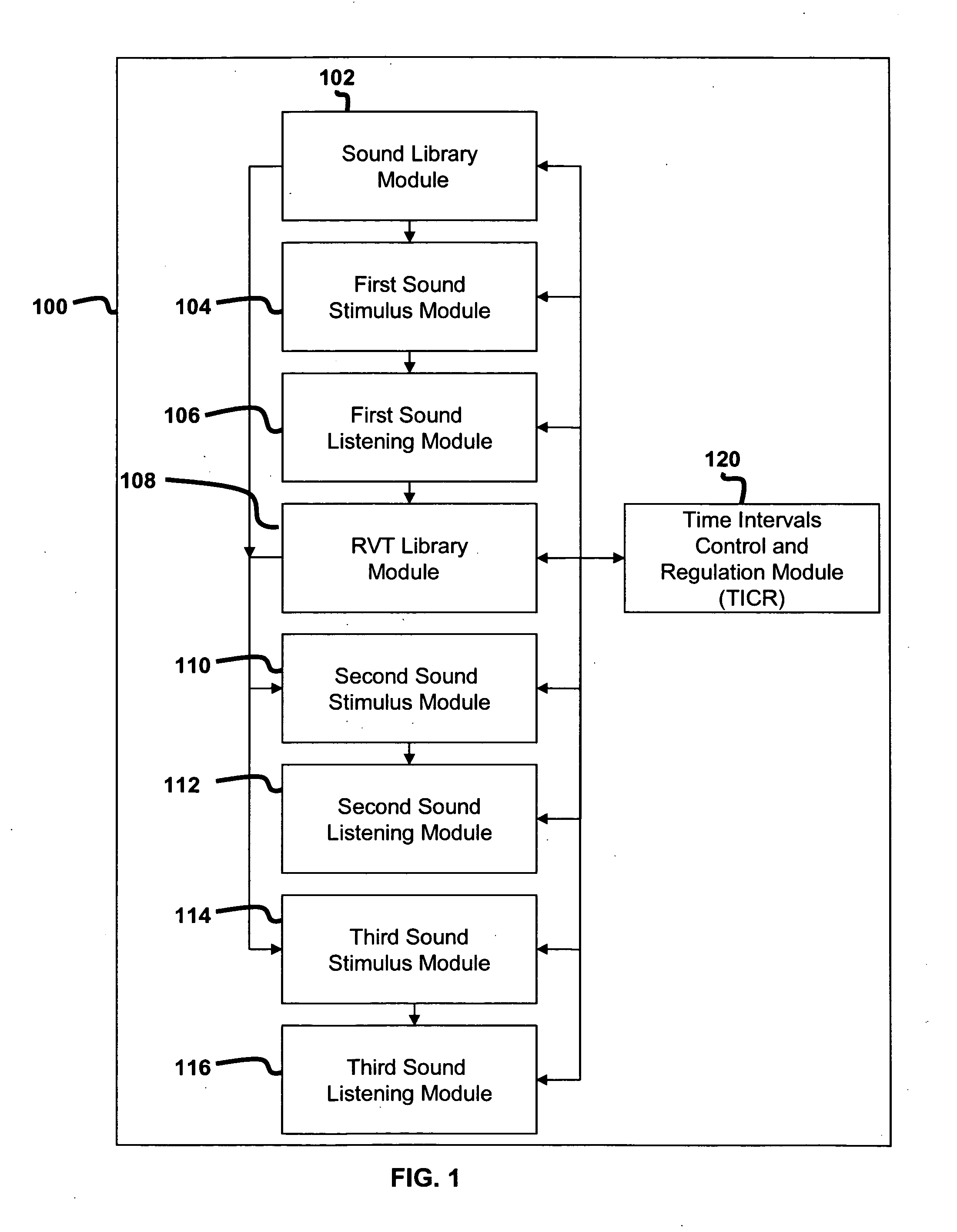

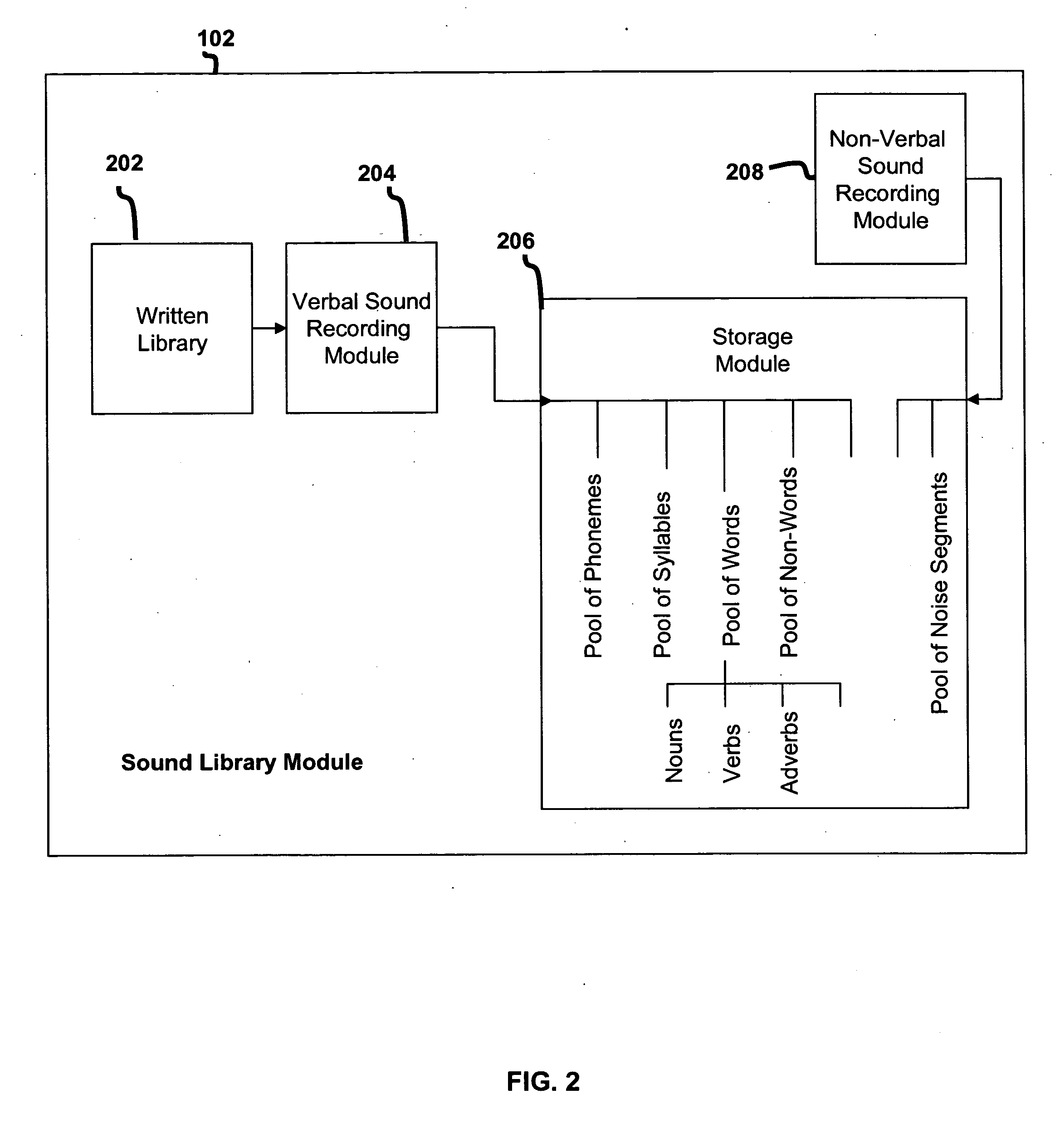

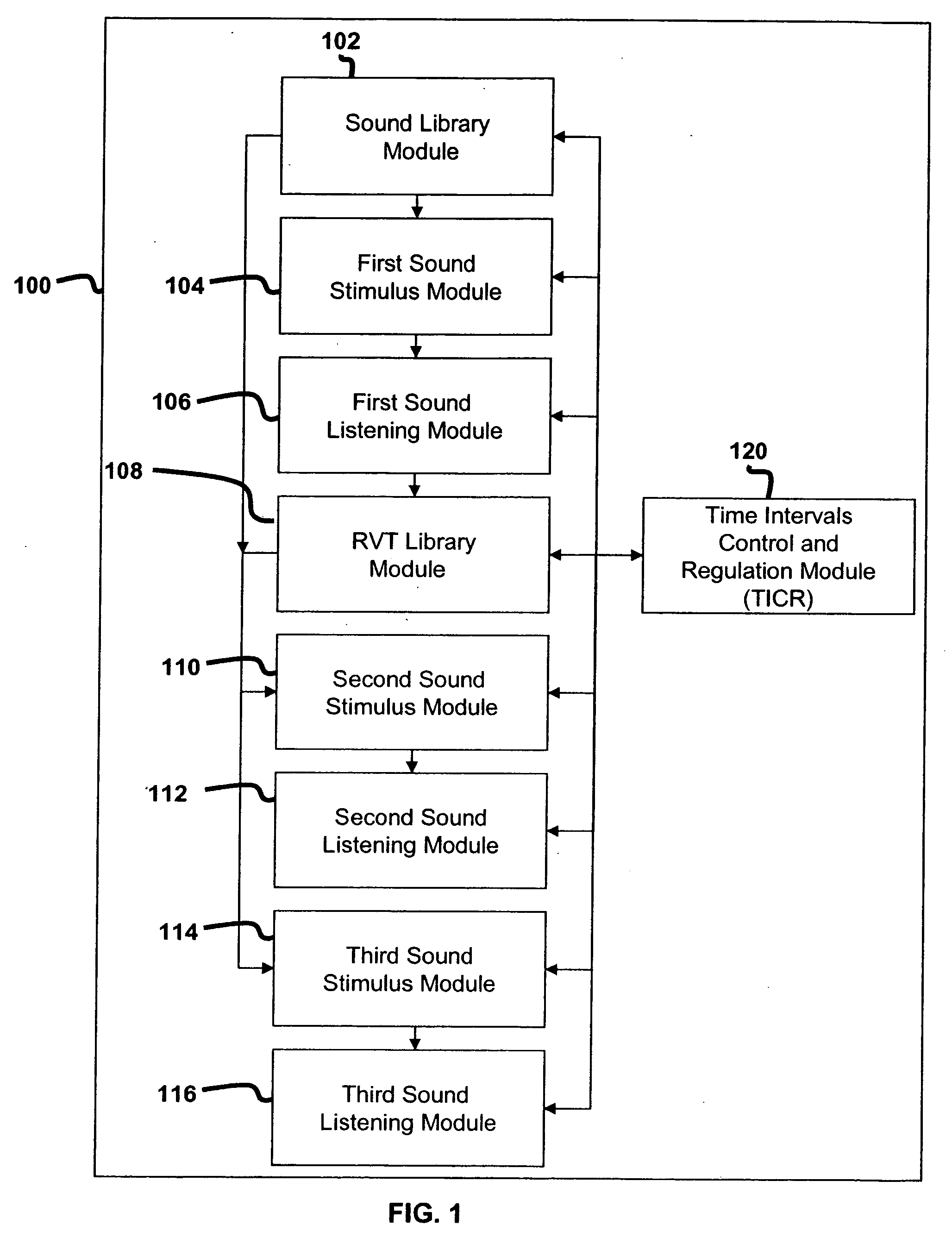

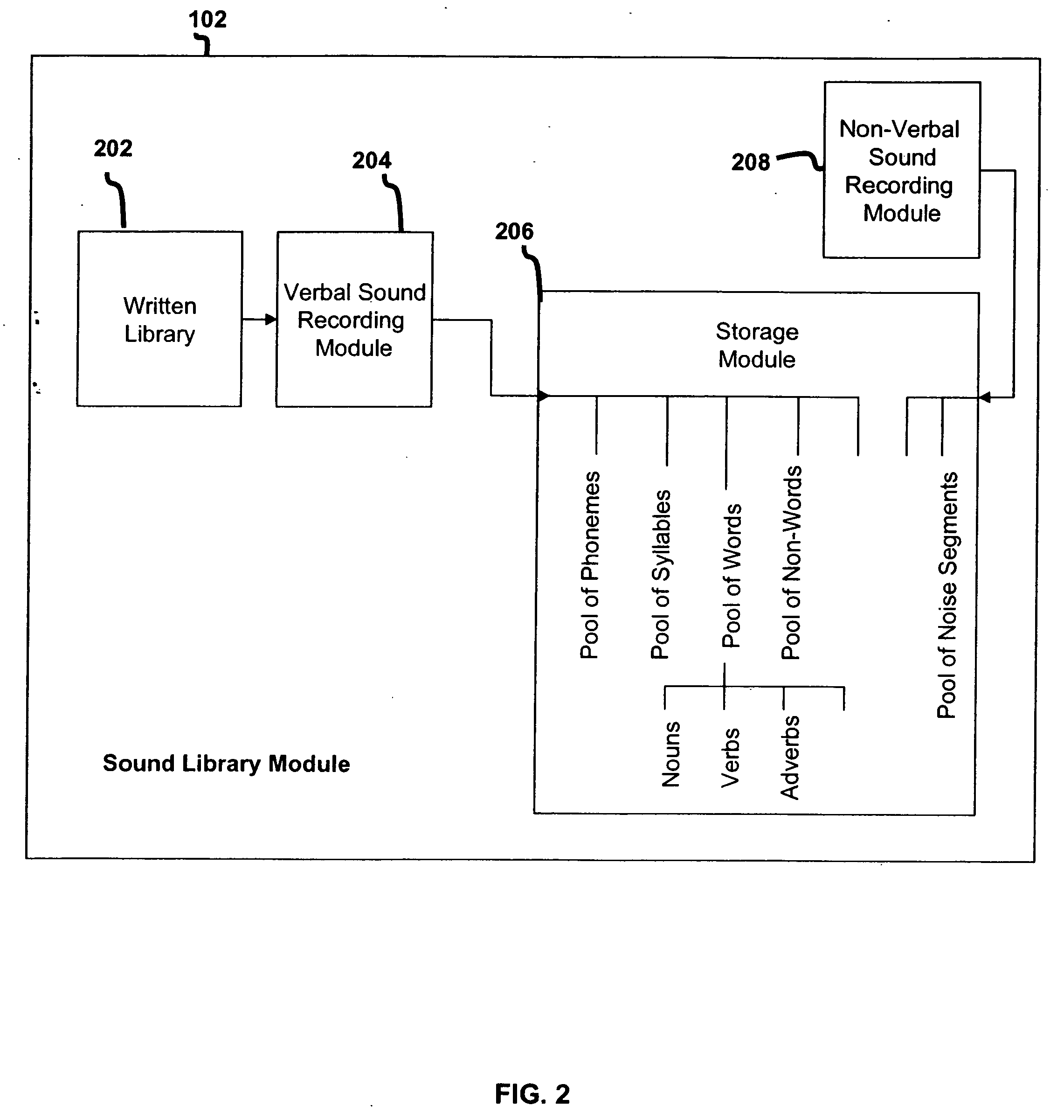

System for treating disabilities such as dyslexia by enhancing holistic speech perception

Inactive | US20050142522A1 | Increased complexity; Easy to demonstrate; Reading; Electrical appliances; Deep dyslexia; Pattern perception

The present invention relates to systems and methods for enhancing the holistic and temporal speech perception processes of a learning-impaired subject. A subject listens to a sound stimulus that induces the perception of verbal transformations. The subject records the verbal transformations, which are then used to create further sound stimuli in the form of semantic-like phrases and an imaginary story. Exposure to the sound stimuli enhances the subject's holistic speech perception, with cross-modal benefits to speech production, reading and writing. The present invention has application to a wide range of impairments, including Specific Language Impairment, language learning disabilities, dyslexia, autism, dementia and Alzheimer's disease.

Owner:EPOCH INNOVATIONS

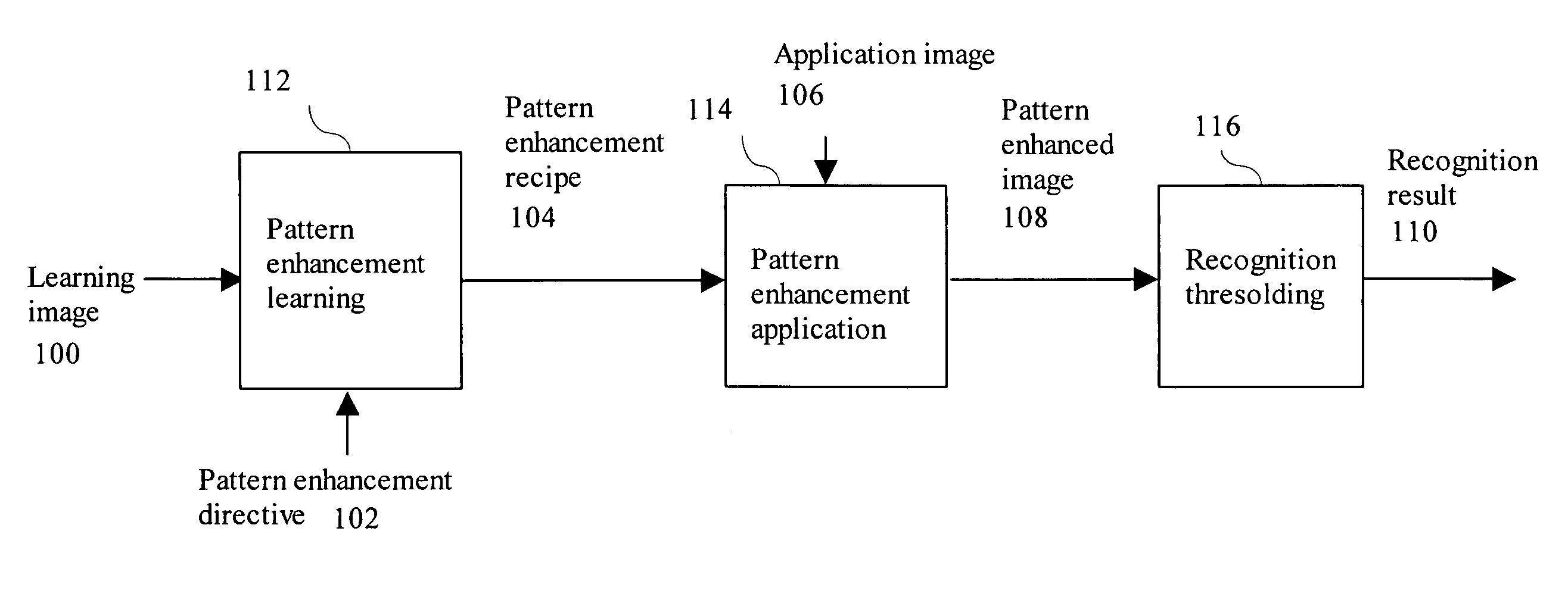

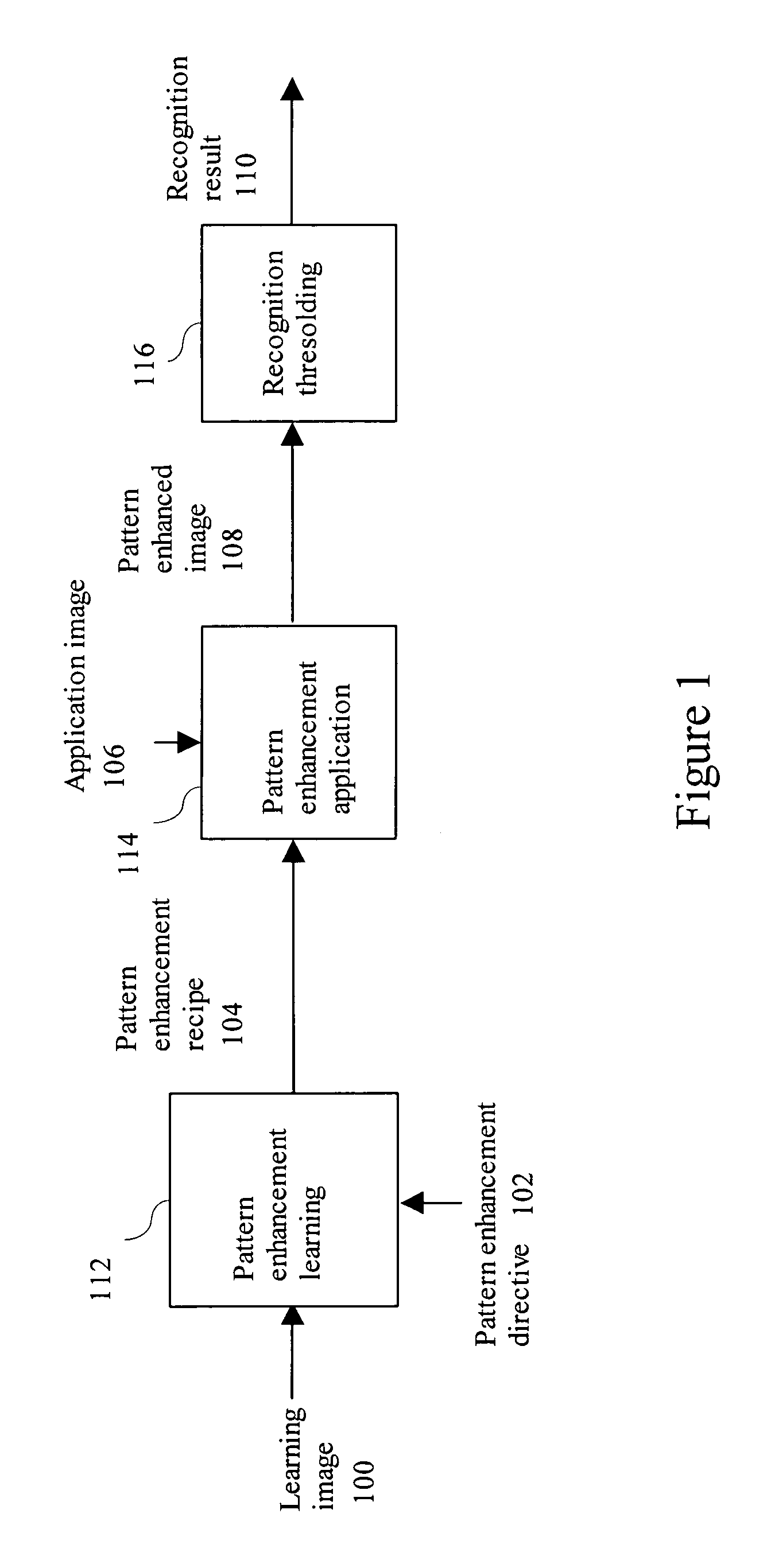

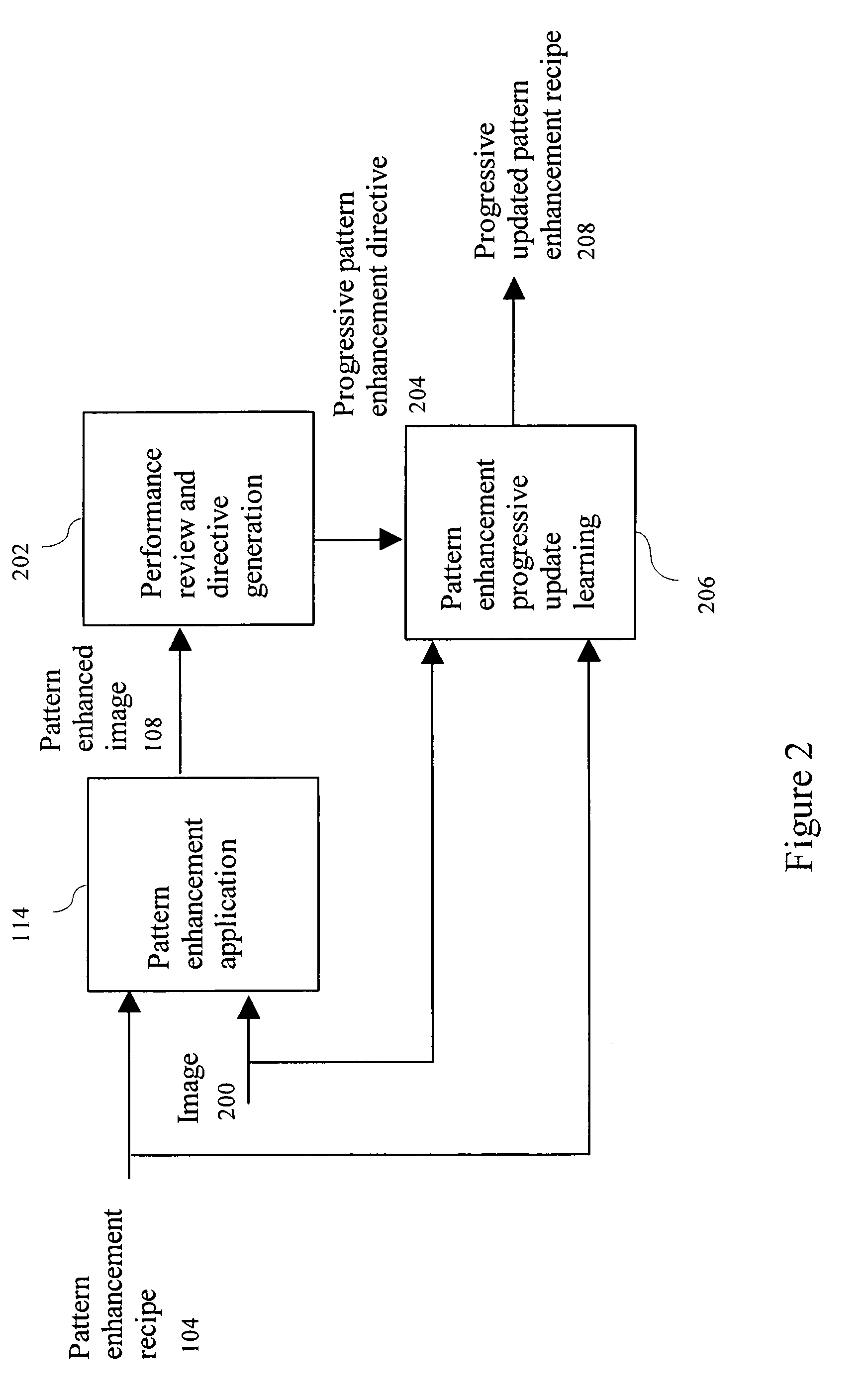

Method of directed pattern enhancement for flexible recognition

Owner:LEICA MICROSYSTEMS CMS GMBH

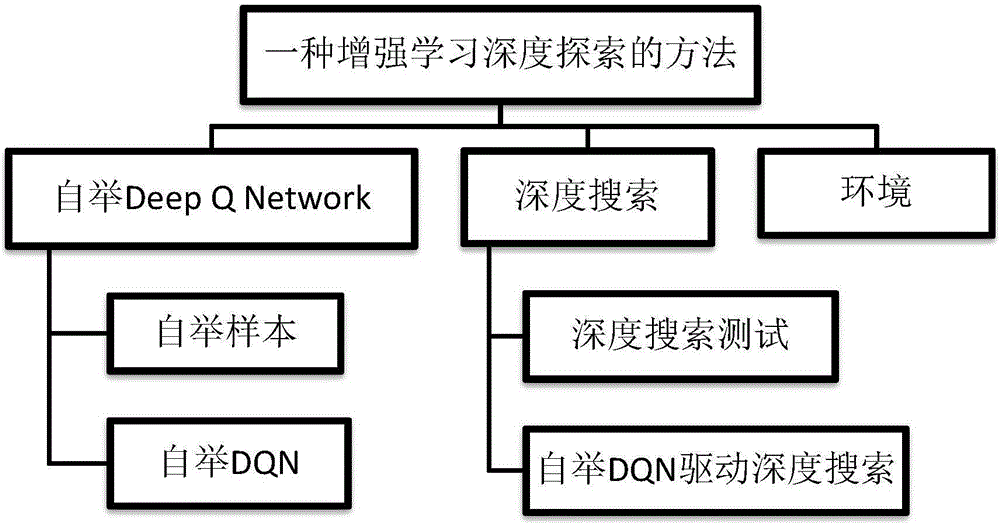

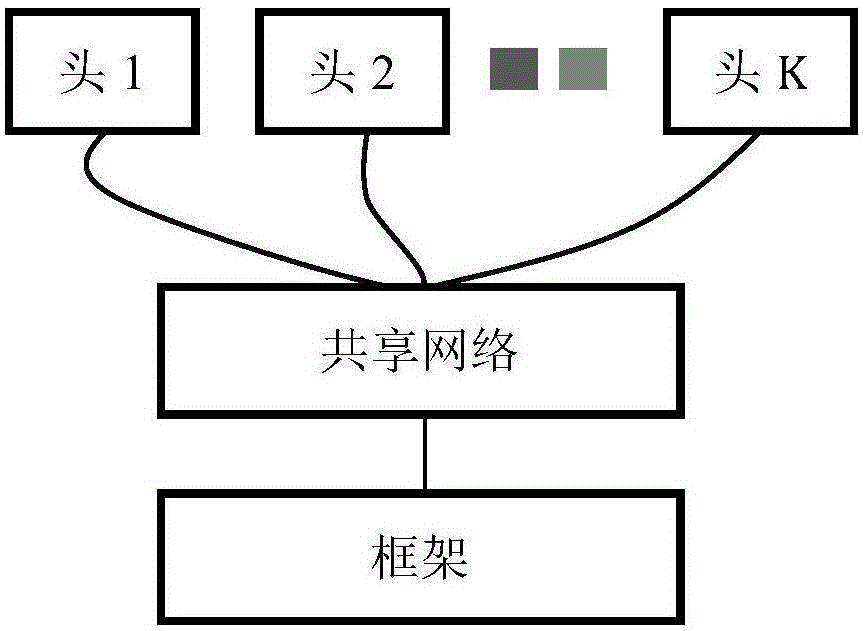

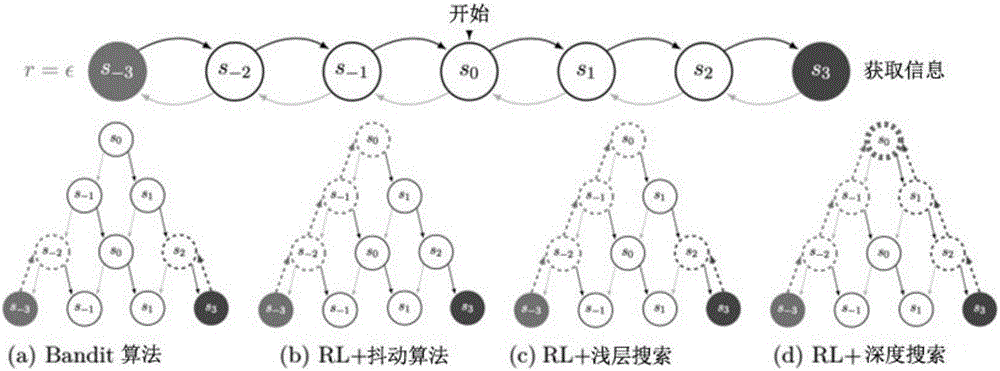

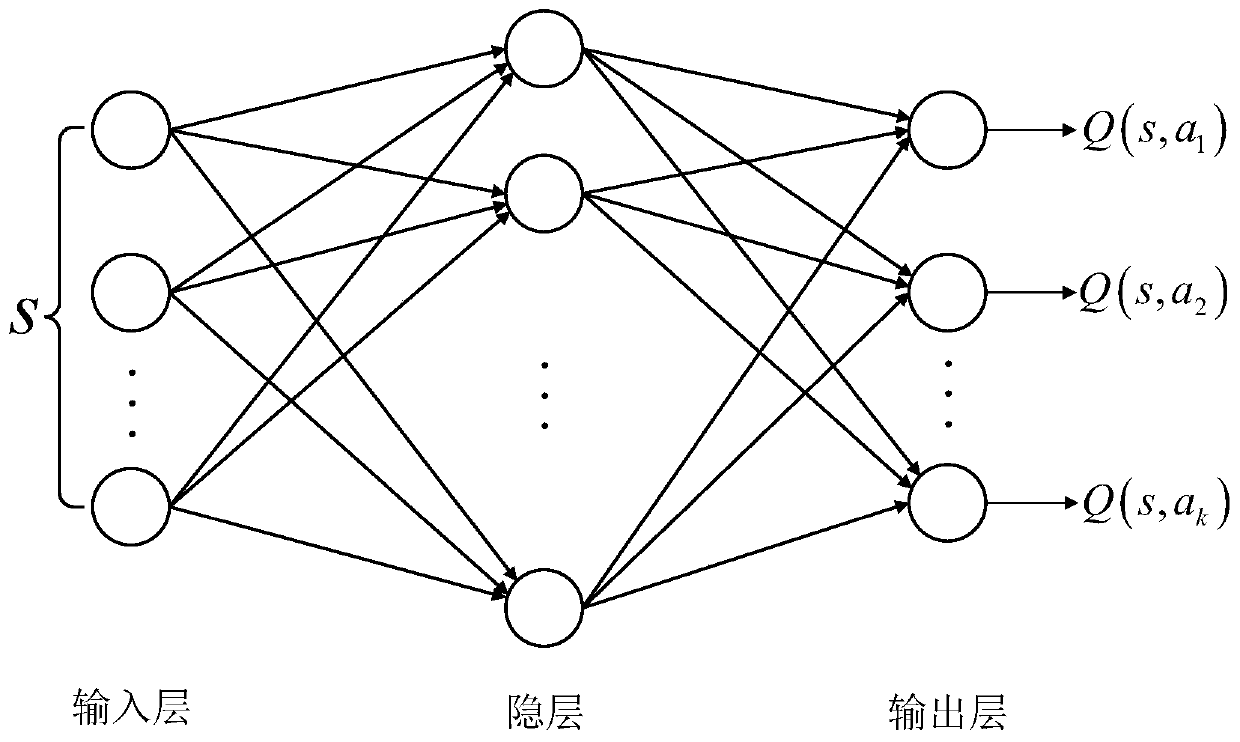

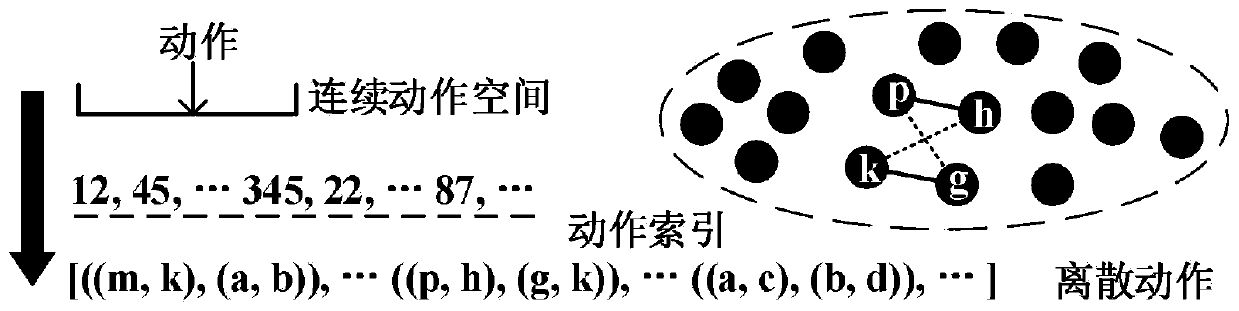

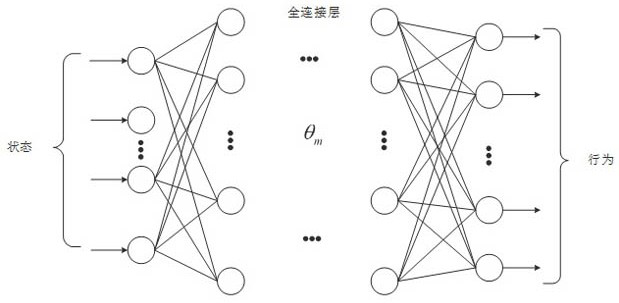

Reinforcement learning deep searching method based on bootstrap DAQN (deep Q network)

Inactive | CN106779072A | Improve final score; Efficient search; Neural learning methods; Reinforcement learning algorithm; Parallel processing

The invention provides a reinforcement learning deep searching method based on bootstrap DAQN (deep Q network). The method mainly comprises bootstrap DQN, deep searching and environmental background. The bootstrap DQN includes a bootstrap sample and a bootstrap DQN unit; the deep searching includes a deep search test and bootstrap-DQN-driven deep searching; the environmental background includes generating an online bootstrap DQN and the bootstrap DQN drive. Bootstrap DQN is a practical reinforcement learning algorithm that combines deep learning with deep searching. Bootstrapping is shown to produce effective uncertainty estimates for a deep neural network and can be extended to large-scale parallel systems; information is propagated across multiple time steps, and sample diversity is guaranteed. As an effective reinforcement learning algorithm, bootstrap DQN processes large amounts of data in parallel in complex environments with low computational cost and high learning efficiency, giving the method excellent performance.

Owner:SHENZHEN WEITESHI TECH
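The bootstrap DQN idea described above (several Q-estimates, each trained on a bootstrap-masked share of the experience, with one estimate sampled per episode to drive exploration) can be illustrated without a neural network. The sketch below is a tabular simplification, not the patented method: it assumes a hypothetical six-state chain environment, replaces each network head with a Q-table, and uses Bernoulli masks to decide which heads learn from each transition. The function name and every parameter are illustrative.

```python
import numpy as np

def bootstrapped_q_learning(n_states=6, n_heads=5, episodes=200, gamma=0.9,
                            alpha=0.5, mask_prob=0.5, seed=0):
    """Tabular sketch of bootstrapped Q-learning on a hypothetical chain MDP.

    States 0..n_states-1; action 0 moves left, action 1 moves right.
    Reaching the right end gives reward 1 and ends the episode.
    """
    rng = np.random.default_rng(seed)
    q = np.zeros((n_heads, n_states, 2))  # one Q-table per bootstrap head
    for _ in range(episodes):
        head = rng.integers(n_heads)       # sample one head for the whole episode
        s = 0
        for _ in range(4 * n_states):      # step limit per episode
            # act greedily w.r.t. the sampled head, breaking ties at random
            best = np.flatnonzero(q[head, s] == q[head, s].max())
            a = rng.choice(best)
            s_next = max(s - 1, 0) if a == 0 else s + 1
            done = s_next == n_states - 1
            r = 1.0 if done else 0.0
            # bootstrap mask: each head learns from this transition with prob mask_prob
            mask = rng.random(n_heads) < mask_prob
            for k in np.flatnonzero(mask):
                target = r if done else r + gamma * q[k, s_next].max()
                q[k, s, a] += alpha * (target - q[k, s, a])
            if done:
                break
            s = s_next
    return q

q = bootstrapped_q_learning()
```

Because each episode follows a single sampled head, behaviour stays consistent within an episode, which is the mechanism the bootstrap approach relies on for temporally extended exploration.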

Learning System for Digitalisation of An Educational Institution

Inactive | US20110250580A1 | Revolutionizes content and community resource; Electrical appliances; World class; Computer science

A system that catalyses world-class quality in education in any institutionalized setting, however small, formal or informal, by integrating all processes, transactions, resources and controls (such as child security and emergency response) for an educational organization: gated educational communities such as schools; dispersed private learning communities formed by kinship, professional organizations, neighborhoods or any other association; or virtual self-learning communities. The system includes tools and devices that enhance the process, content and context of learning. Intelligence-embedded self-help tools, together with appropriate macro- and micro-inputs to community members and to the individual learners within them, enable comprehensive, unaided instruction and learning at the community level. All academic subjects in a curricular framework up to the higher secondary level, across multiple nations, are covered.

Owner:IYC WORD SOFT INFRASTRUCTURE PVT

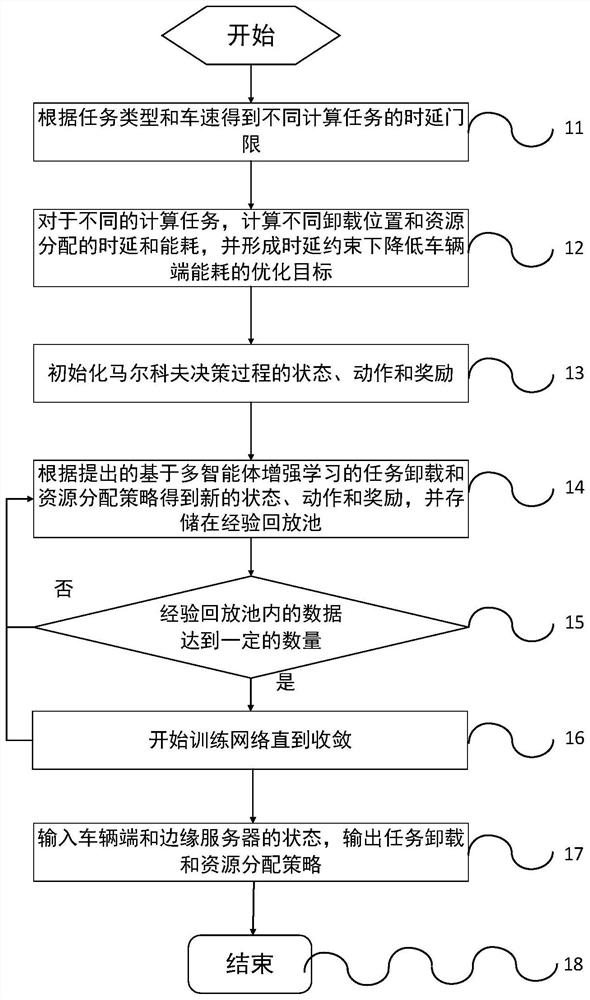

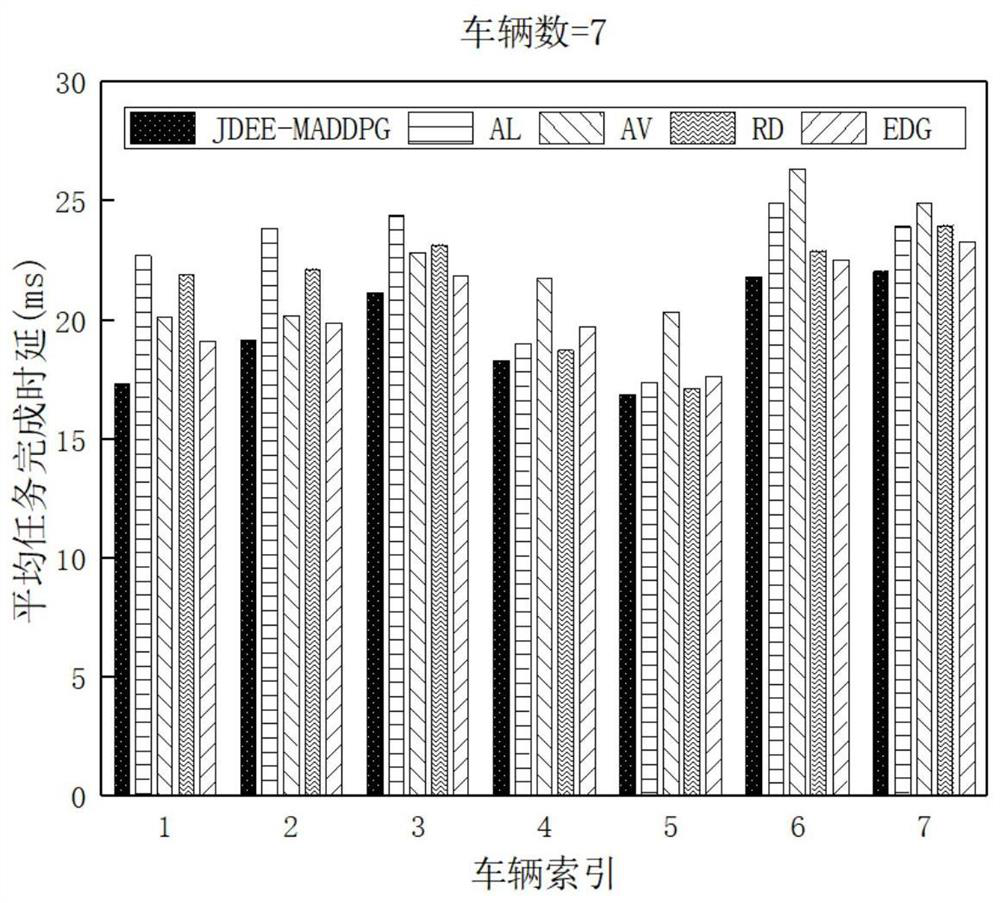

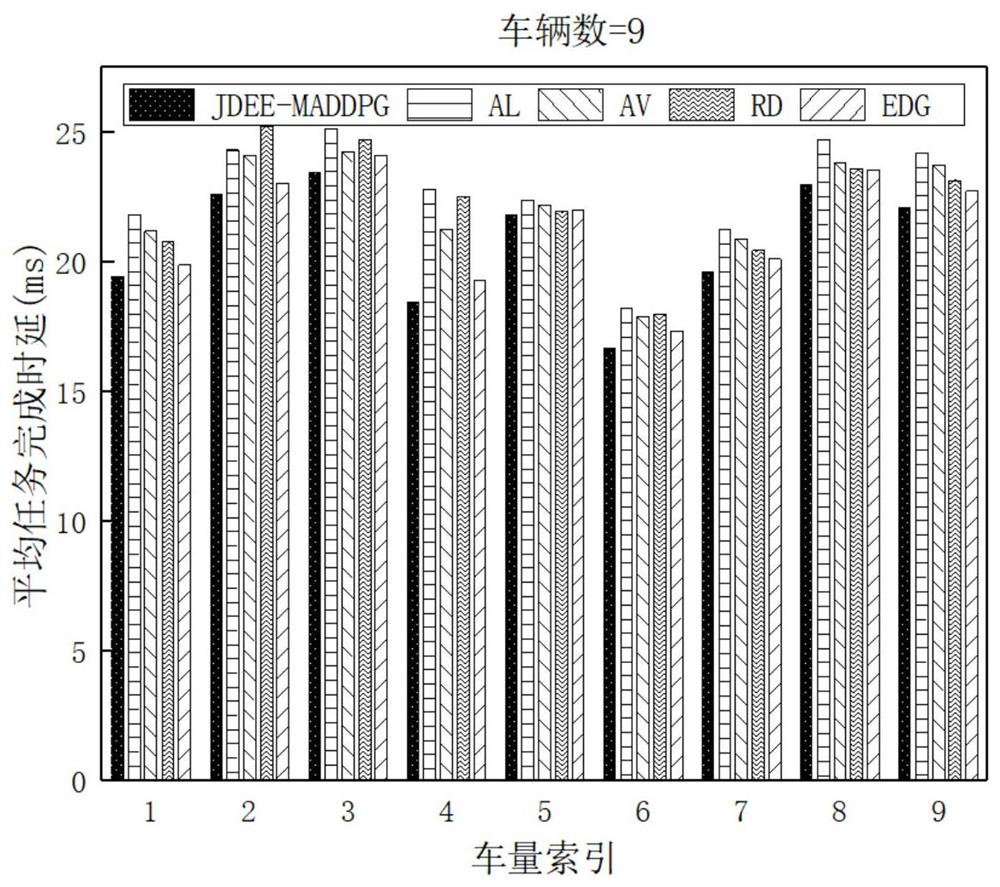

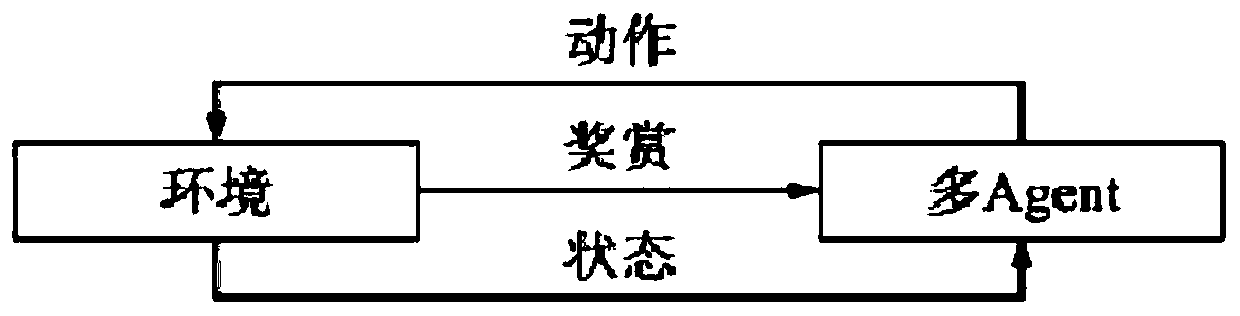

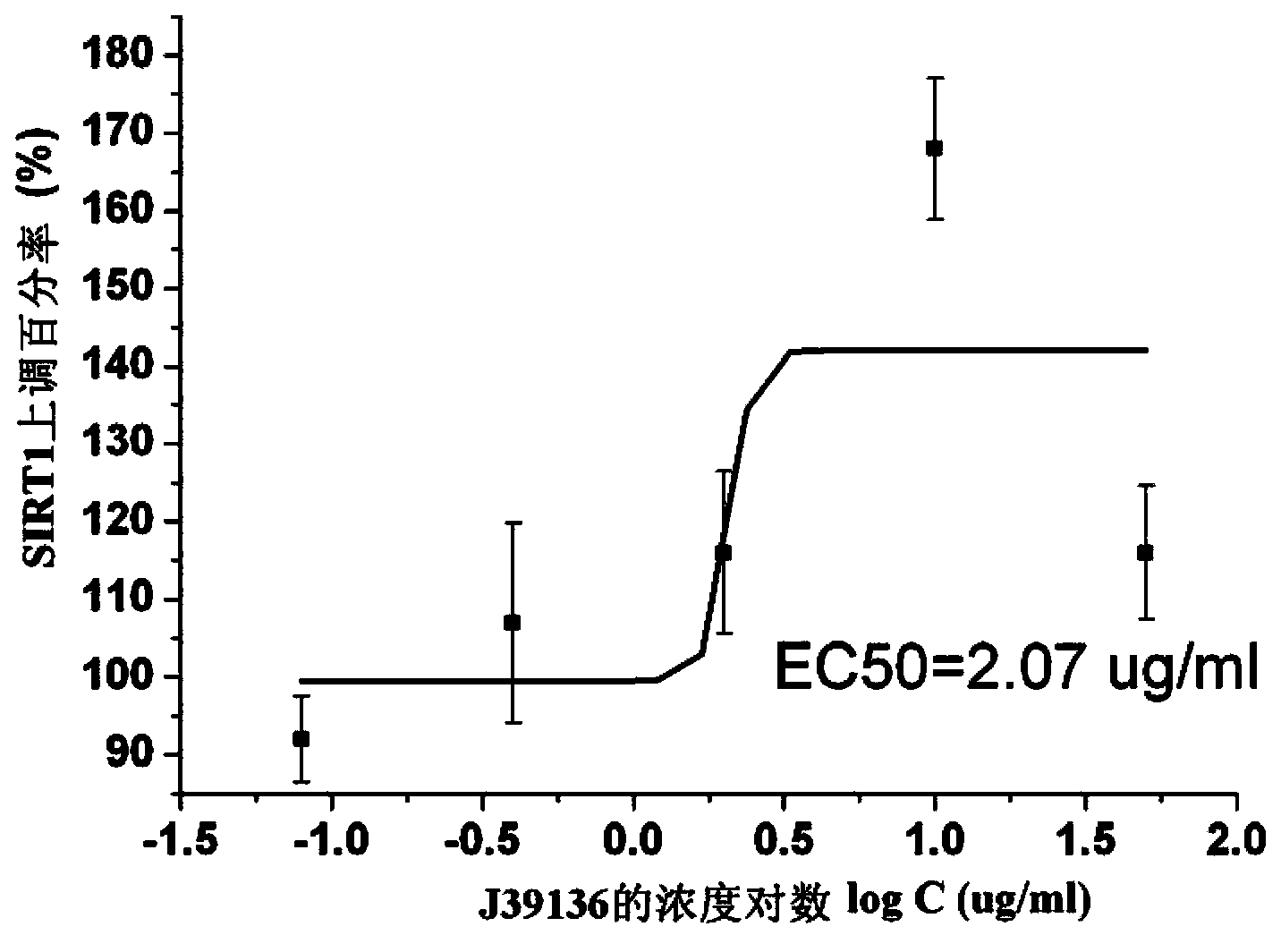

Multi-agent-based calculation task unloading and resources allocation method for vehicle speed perception

Active | CN111918245A | Reduce energy consumption; Improve performance; Particular environment based services; In-vehicle communication; Edge server; Resource assignment

The invention discloses a multi-agent-based computation task offloading and resource allocation method for vehicle speed perception. The method comprises the following steps: collecting vehicle-side computation tasks and dividing them by type into key tasks, high-priority tasks and low-priority tasks; calculating the time delay and energy consumption of each task when it is offloaded to a VEC server, executed locally, or kept waiting under different allocations of computing and wireless resources, and then forming an objective function that minimizes vehicle-side task-processing energy consumption subject to each task's delay threshold; converting the objective function into a Markov decision process; training a multi-agent reinforcement learning network; and inputting the states of the vehicle to be allocated and of the edge server into the trained network to obtain a task offloading and resource allocation result. The invention can effectively improve the overall performance of vehicles in a VEC (vehicular edge computing) server scenario.

Owner:XI AN JIAOTONG UNIV
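The delay and energy comparison in the second step can be sketched as below. The sketch assumes common textbook cost models that the abstract does not state: local energy κ·f²·C for C CPU cycles at frequency f, offloading delay as upload time plus server compute time, and transmission-only energy for the vehicle when it offloads. It picks, per task, the lowest-energy option that meets the task's delay threshold and otherwise waits. This is a greedy baseline for illustration, not the multi-agent reinforcement learning policy the patent trains; `Task`, `decide` and all parameters are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Task:
    cycles: float      # CPU cycles needed to run the task
    data_bits: float   # input size that must be uploaded if offloaded
    deadline_s: float  # per-task delay threshold

def local_cost(task, f_local_hz, kappa=1e-27):
    """Delay and energy when the vehicle executes the task itself."""
    delay = task.cycles / f_local_hz
    energy = kappa * f_local_hz ** 2 * task.cycles  # dynamic CPU energy model
    return delay, energy

def offload_cost(task, rate_bps, f_server_hz, p_tx_w):
    """Delay and vehicle-side energy when the task is offloaded to a VEC server."""
    upload_s = task.data_bits / rate_bps
    delay = upload_s + task.cycles / f_server_hz
    energy = p_tx_w * upload_s  # the vehicle only pays for transmission
    return delay, energy

def decide(task, f_local_hz, rate_bps, f_server_hz, p_tx_w):
    """Return 'local', 'offload' or 'wait' for a single task."""
    options = {
        "local": local_cost(task, f_local_hz),
        "offload": offload_cost(task, rate_bps, f_server_hz, p_tx_w),
    }
    # keep only options that satisfy the delay constraint
    feasible = {name: c for name, c in options.items() if c[0] <= task.deadline_s}
    if not feasible:
        return "wait"  # neither option meets the threshold under current resources
    return min(feasible, key=lambda name: feasible[name][1])  # minimum energy

task = Task(cycles=1e9, data_bits=1e6, deadline_s=0.5)
print(decide(task, f_local_hz=1e9, rate_bps=1e7, f_server_hz=1e10, p_tx_w=0.5))  # offload
```

Here local execution would take 1 s and miss the 0.5 s threshold, so offloading (0.2 s) is chosen; a learned policy would additionally account for how one vehicle's choice changes the resources left for others.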

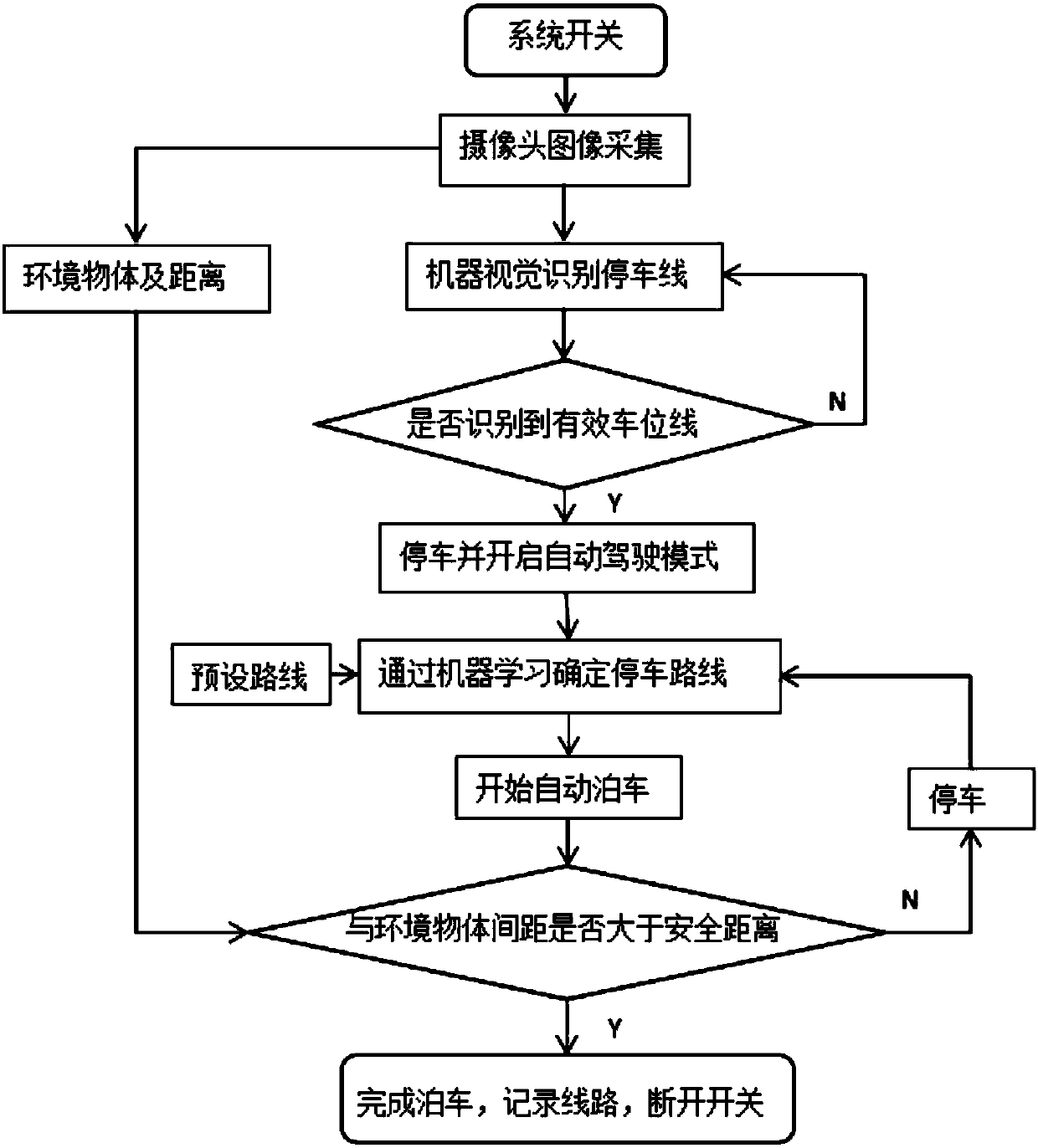

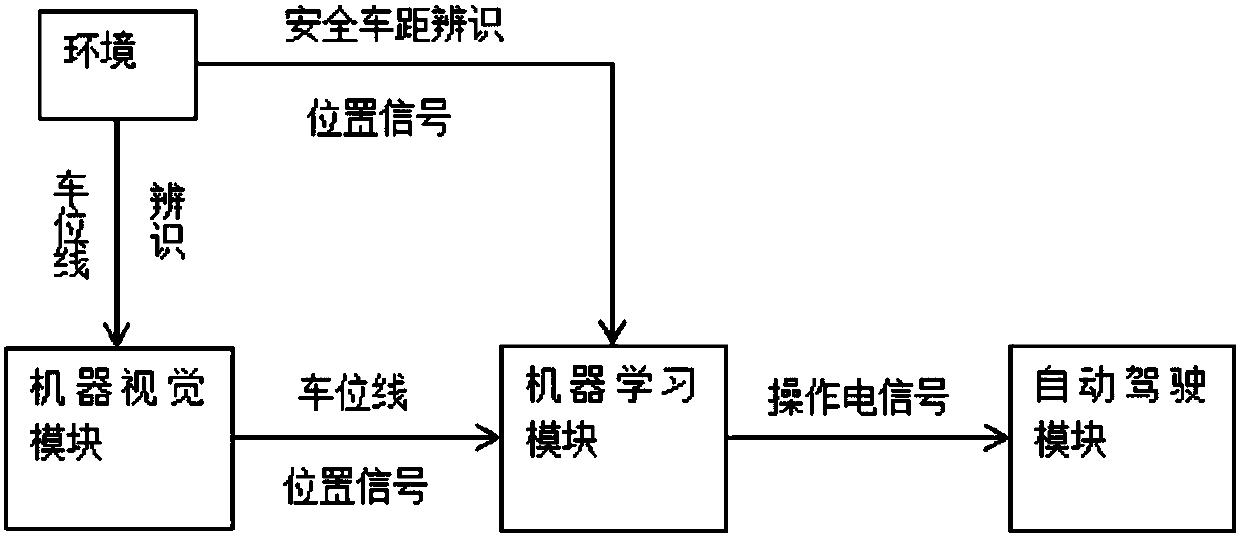

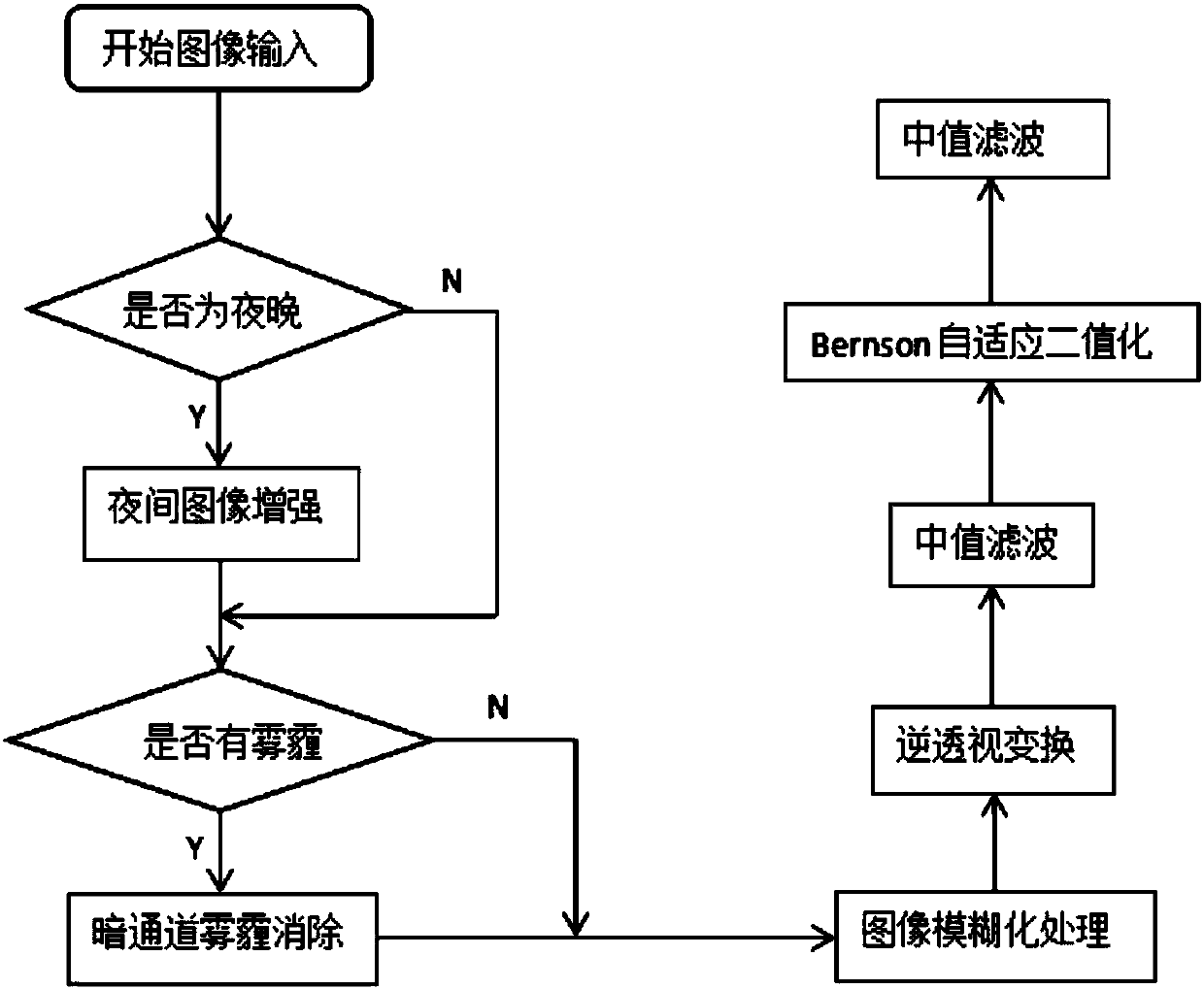

Machine vision and machine learning-based automatic parking method and system

The invention provides a machine vision and machine learning-based automatic parking method and system. Images are collected by cameras arranged all around the automobile body, from which a three-dimensional image of the surrounding environment and the distance between the body and surrounding objects are obtained. Parking lines are identified using machine vision; when a valid image is identified, an automatic driving mode is started. In the automatic driving mode, an optimal scheme is selected from preset parking lines according to the position of the automobile relative to the parking lot, and the automobile is parked by an electric control device that controls the steering wheel, accelerator and brake pedal. Based on a preset database and reinforcement learning, the parking process is continuously improved, so that less input data is required as more application conditions are encountered. The method places low demands on the parking position within the lot, supports both forward and backward maneuvers and has a wide application range; parking is faster and the parking process is reliable.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS

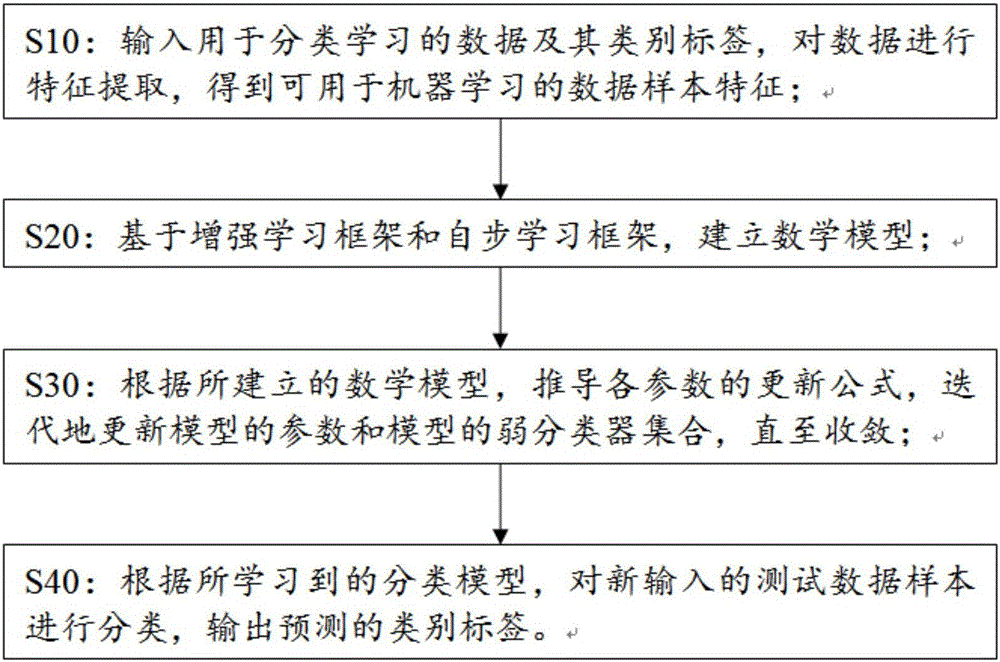

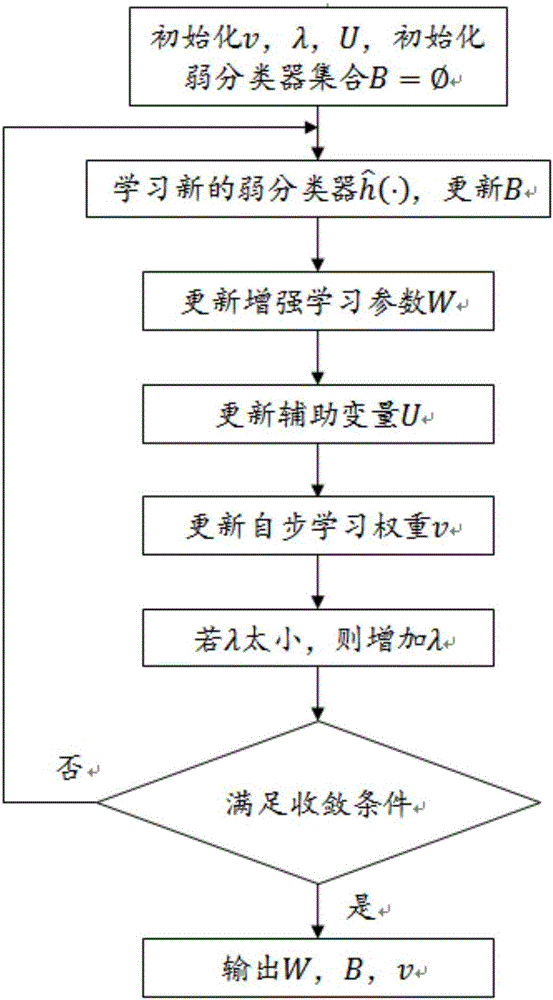

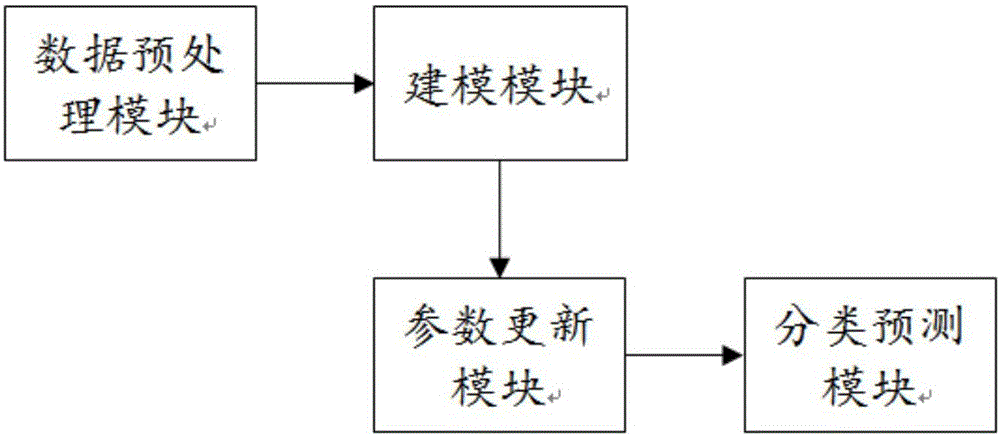

Self-paced reinforcement image classification method and system

Inactive | CN106446927A | Improve effectiveness; High standard; Character and pattern recognition; Feature extraction; Mathematical model

The invention discloses a self-paced reinforcement image classification method and system. The method comprises the following steps: S10, inputting the image data to be classified and their class labels, and performing feature extraction on the data; S20, establishing a mathematical model based on reinforcement learning and self-paced learning frameworks; S30, iteratively updating the model parameters and the model's set of weak classifiers until convergence; and S40, predicting the classes of newly input test images. The method makes full use of the intrinsic consistency and complementarity of reinforcement learning and self-paced learning, emphasizing during learning both the discriminative capability of the classification model and the reliability of the image samples that participate in learning, so that learning is both effective and robust. Compared with conventional image classification methods, the method and system achieve higher classification accuracy and greater robustness to label noise.

Owner:ZHEJIANG UNIV
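The self-paced part of steps S20 and S30 can be illustrated with the standard hard-weighting rule of self-paced learning: a sample takes part in training only while its loss is below an age parameter λ, and λ grows each round so that harder samples are admitted gradually. The sketch assumes a plain logistic-regression classifier in place of the patent's weak-classifier set and omits the reinforcement component entirely; function names and hyperparameters are hypothetical.

```python
import numpy as np

def spl_weights(losses, lam):
    """Closed-form minimiser of sum(v * loss) - lam * sum(v) over v in {0, 1}."""
    return (losses < lam).astype(float)

def logistic_losses(X, y, w):
    """Per-sample cross-entropy losses and predicted probabilities."""
    p = 1.0 / (1.0 + np.exp(-X @ w))
    eps = 1e-12  # avoid log(0)
    return -(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps)), p

def self_paced_logistic(X, y, lam=0.8, growth=1.3, rounds=10, lr=0.5, steps=200):
    """Alternate between selecting 'easy' samples and refitting on them."""
    w = np.zeros(X.shape[1])
    v = np.ones(len(y))
    for _ in range(rounds):
        losses, _ = logistic_losses(X, y, w)
        v = spl_weights(losses, lam)          # keep samples with loss < lam
        for _ in range(steps):                # gradient descent on selected samples
            _, p = logistic_losses(X, y, w)
            grad = X.T @ (v * (p - y)) / max(v.sum(), 1.0)
            w -= lr * grad
        lam *= growth                         # admit harder samples next round
    return w, v
```

Samples whose labels are noisy tend to keep a high loss and stay excluded longer, which is the intuition behind the robustness-to-label-noise claim.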

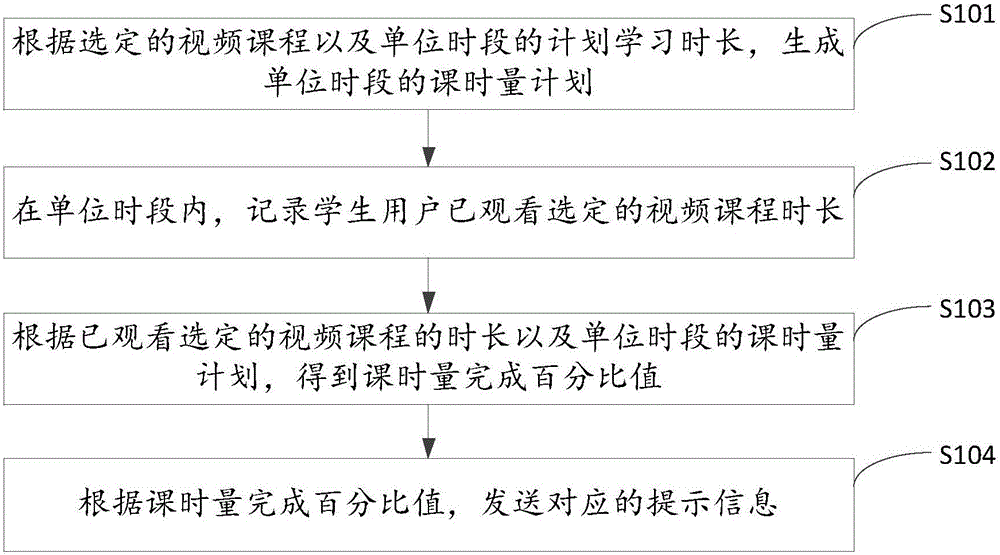

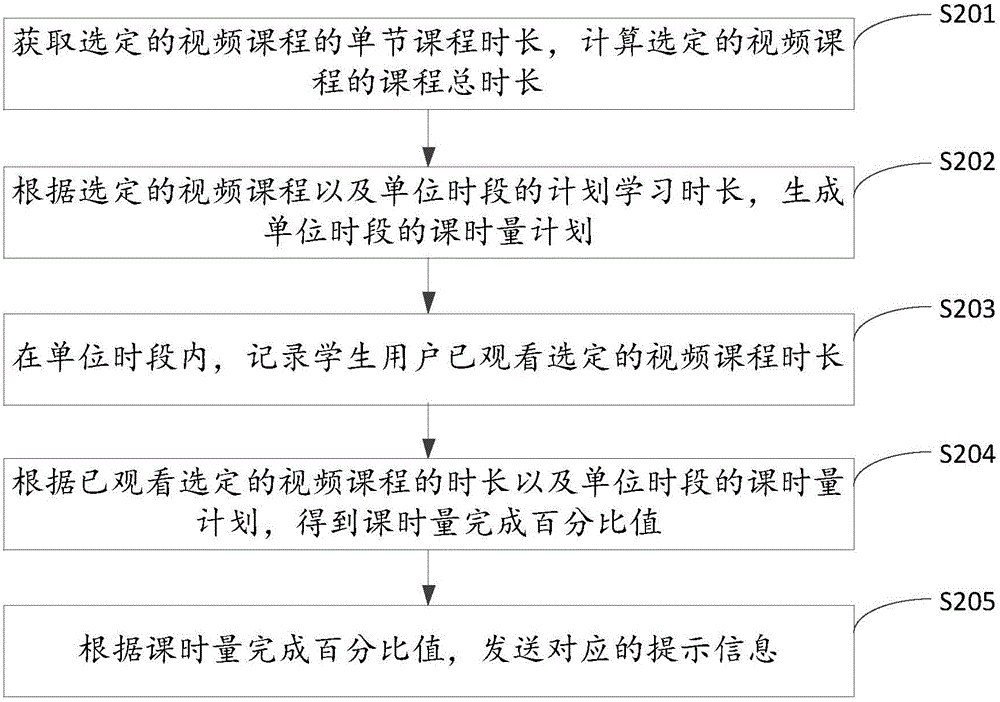

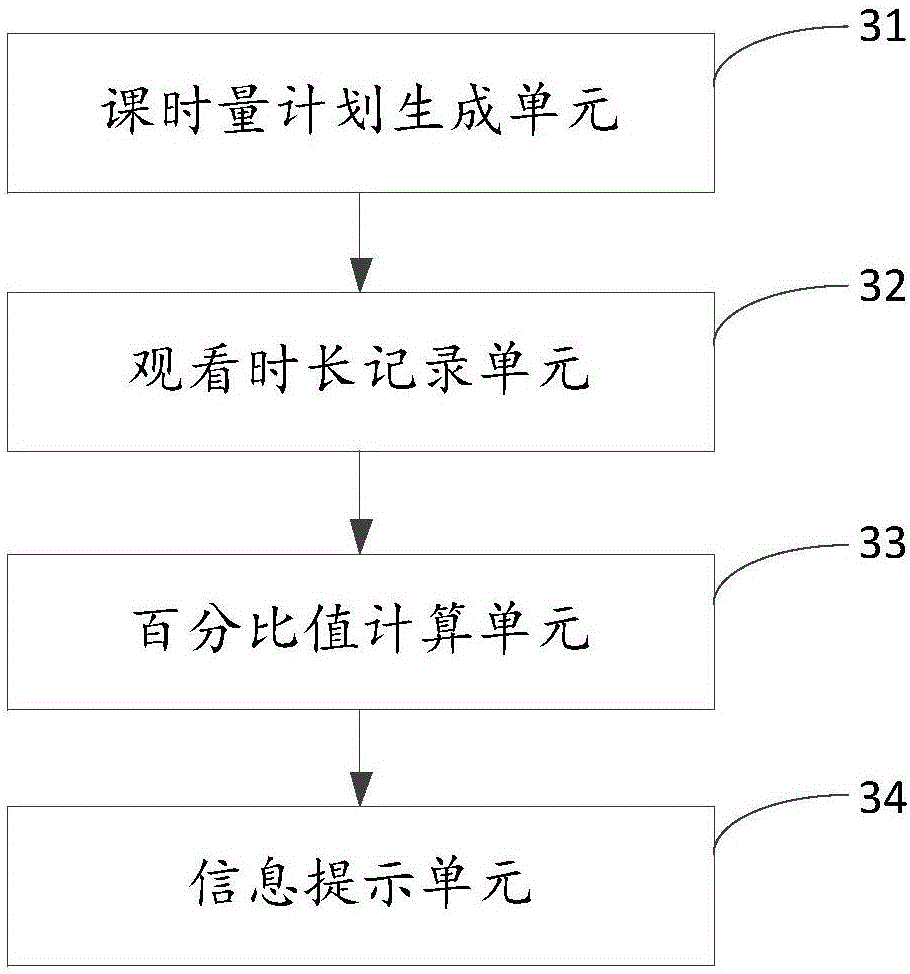

Video curriculum learning method and system

Inactive | CN105976287A | Solve processing problems; Data processing applications; Electrical appliances; Recording duration; Program planning

The invention belongs to the field of computer technology and provides a video curriculum learning method and system. The method comprises the following steps: generating a curriculum quantity plan for a unit period according to the selected video curriculum and the planned learning duration of that period; within the unit period, recording how long the students have watched the selected video curriculum; obtaining a curriculum quantity completion percentage from the watched duration and the plan for the unit period; and sending corresponding prompt information according to that percentage. Because a plan is generated for each unit period, the watched duration is continuously recorded, the completion percentage is calculated and prompts are sent, student users are urged and encouraged to complete the video curriculum according to plan and are supervised, which strengthens their learning motivation and helps them complete the curriculum.

Owner:GUANGDONG XIAOTIANCAI TECH CO LTD
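The planning, completion-percentage and prompting steps reduce to simple arithmetic. The sketch assumes a greedy plan that takes lessons in course order until the period's time budget is used, measures completion as watched minutes over planned minutes (capped at 100 %), and uses made-up prompt thresholds; the abstract specifies none of these, and all names are hypothetical.

```python
def period_plan(lesson_minutes, budget_minutes):
    """Take lessons in course order until the unit period's time budget is used."""
    plan, planned = [], 0.0
    for index, minutes in enumerate(lesson_minutes):
        if planned + minutes > budget_minutes:
            break
        plan.append(index)
        planned += minutes
    return plan, planned

def completion_percentage(watched_minutes, planned_minutes):
    """Share of the period's planned viewing time already watched, capped at 100."""
    if planned_minutes <= 0:
        raise ValueError("planned_minutes must be positive")
    return min(100.0, 100.0 * watched_minutes / planned_minutes)

def prompt_for(percent):
    # thresholds are illustrative, not taken from the patent
    if percent >= 100.0:
        return "Plan completed for this period. Well done!"
    if percent >= 50.0:
        return "Over halfway there; keep going."
    return "You are behind your plan for this period."

plan, planned = period_plan([20, 30, 40], budget_minutes=60)
percent = completion_percentage(watched_minutes=25, planned_minutes=planned)
print(plan, planned, percent, prompt_for(percent))
```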

System for treating disabilities such as dyslexia by enhancing holistic speech perception

Inactive | US20070105073A1 | Promotes language learning; Reading; Electrical appliances; Deep dyslexia; Pattern perception

Owner:EPOCH INNOVATIONS

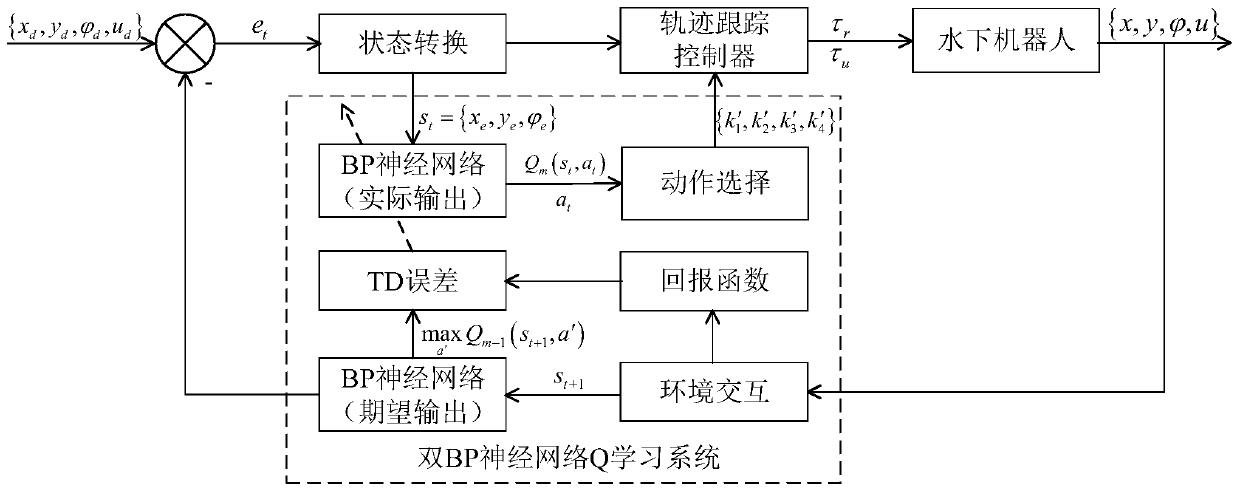

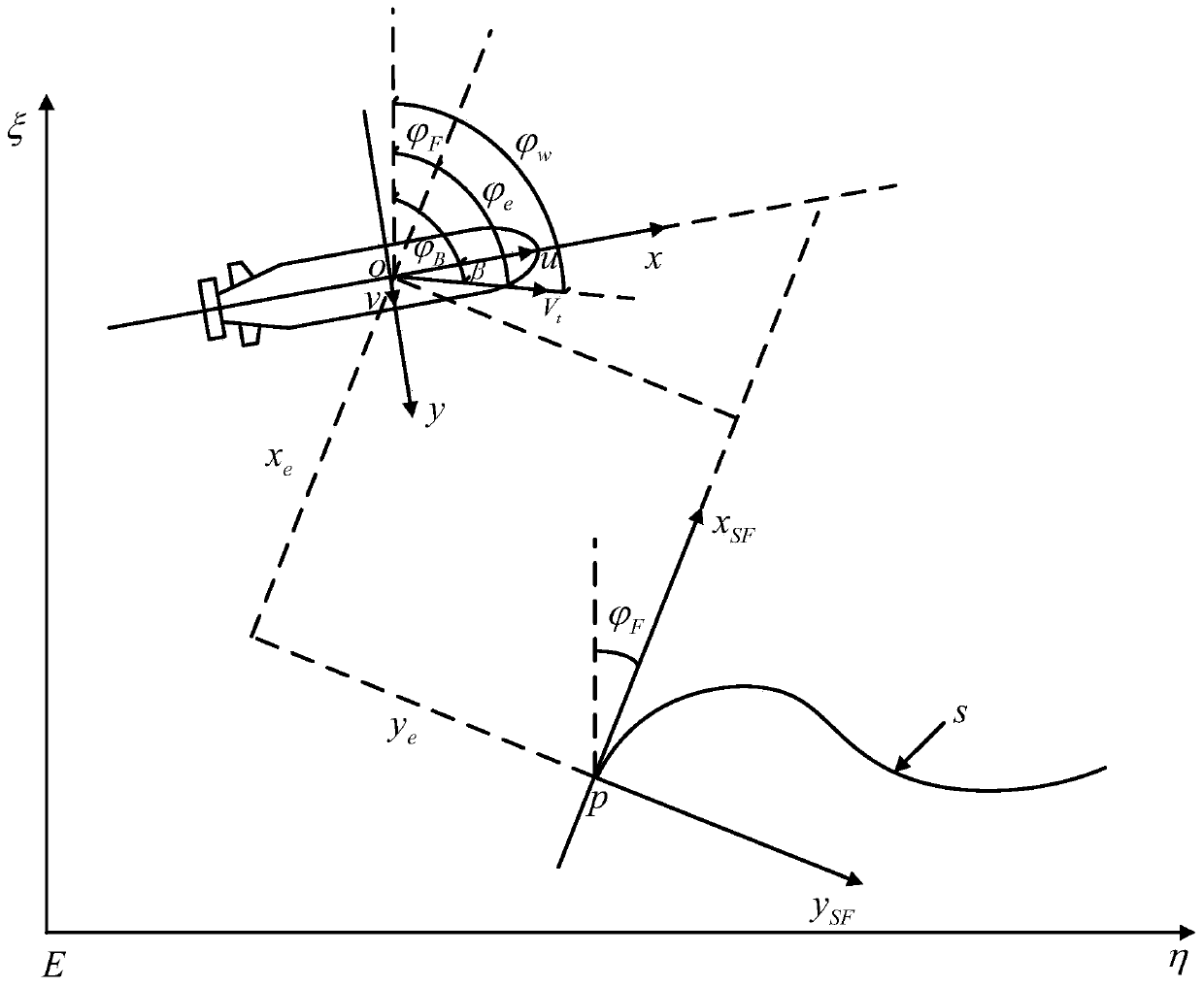

Underwater robot trajectory tracking method based on double-BP network reinforcement learning framework

Active | CN111240345A | Overcoming time-consuming and labor-intensive; Altitude or depth control; Fuzzy rule; Control system

The invention discloses an underwater robot trajectory tracking method based on a double-BP network reinforcement learning framework, in the technical field of underwater robot trajectory tracking. It addresses a problem in the prior art: online optimization of controller parameters requires fuzzy rules built on a large amount of expert prior knowledge, which makes the optimization time-consuming and labor-intensive. The invention uses reinforcement learning to interact continuously with the environment; after obtaining the reinforcement value given by the environment, it finds the optimal policy through loop iteration. Reinforcement learning is combined with a double BP network to adjust, online, the speed of the underwater robot and the relevant control-law parameters of the heading control system, so that the designed speed and heading control systems select the optimal control parameters for each environment. This removes the time and labor otherwise needed to optimize controller parameters online. The method can be applied to trajectory tracking of underwater robots.

Owner:HARBIN ENG UNIV

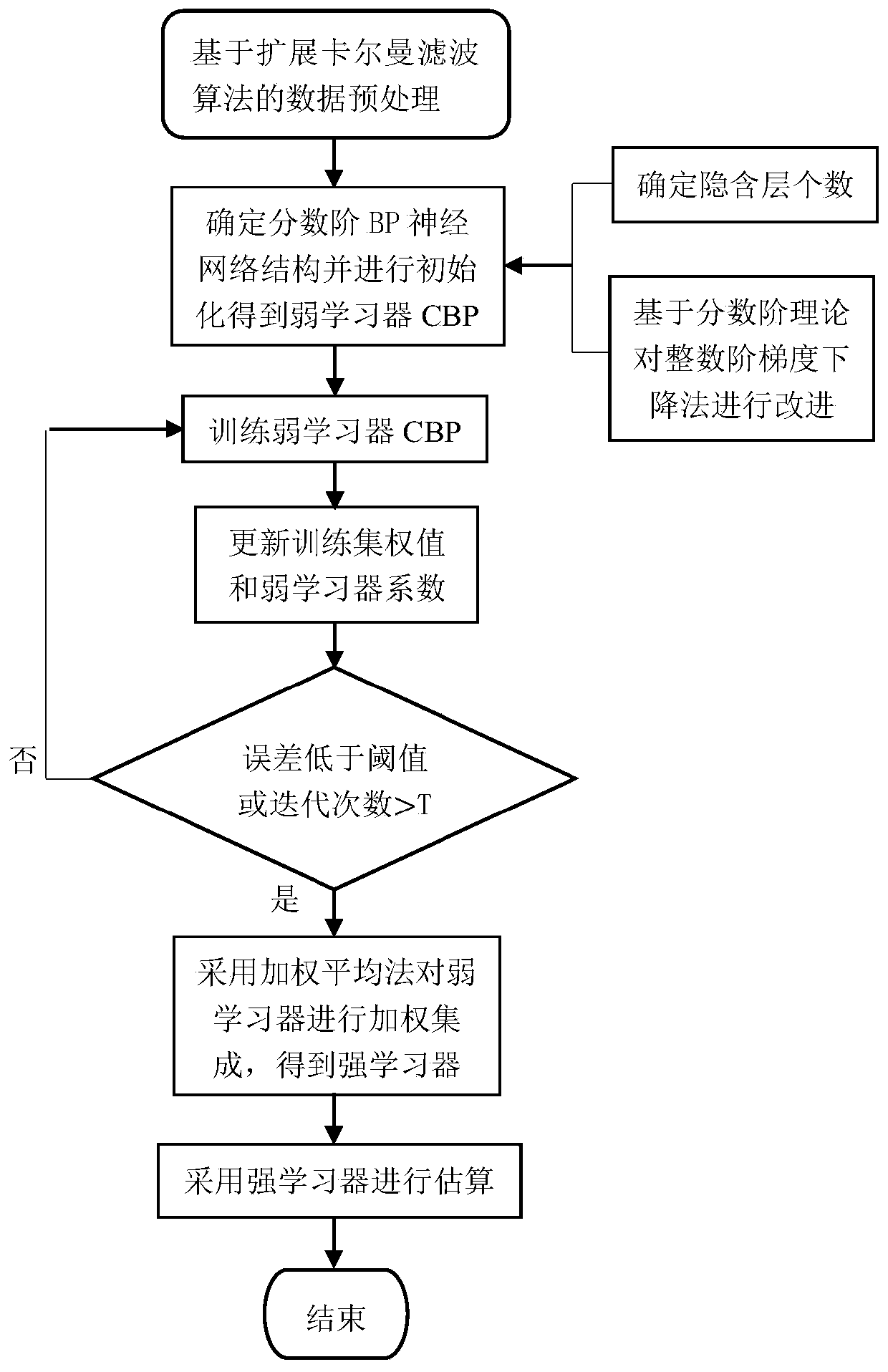

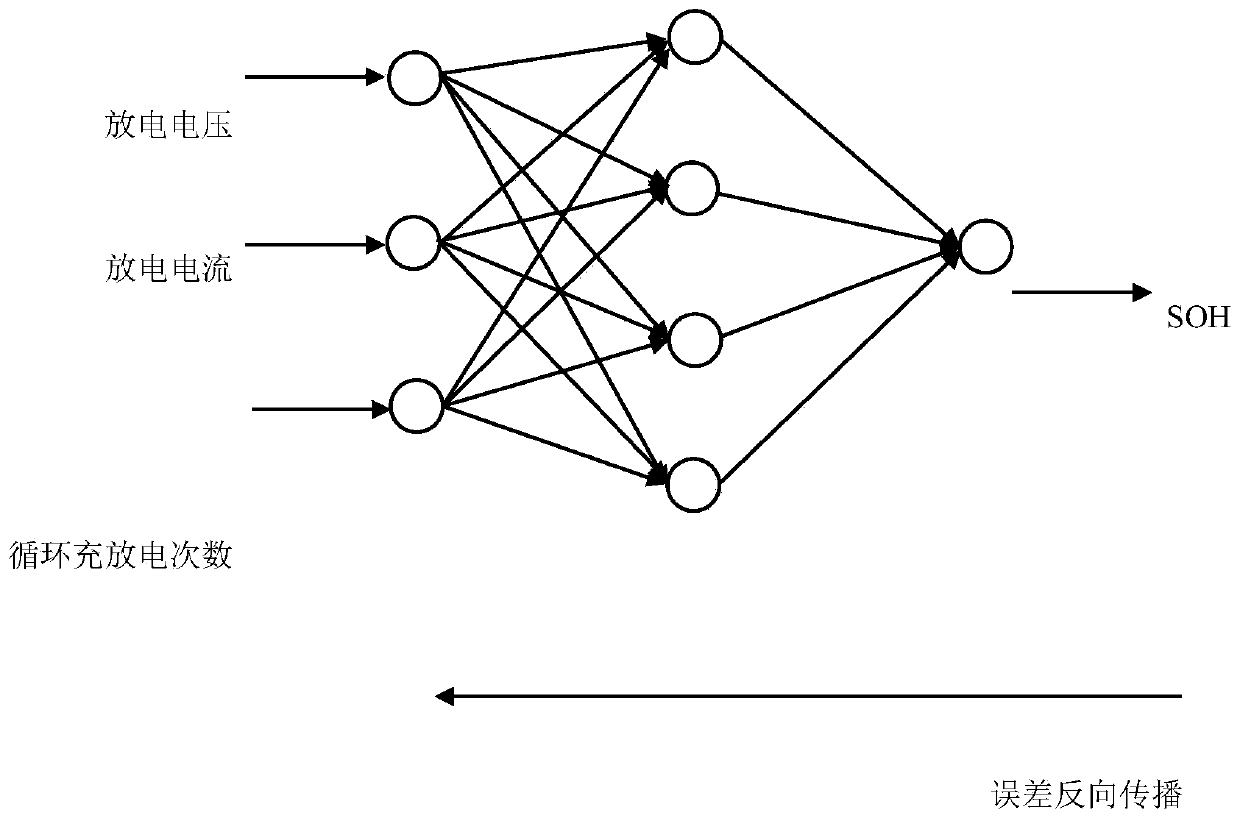

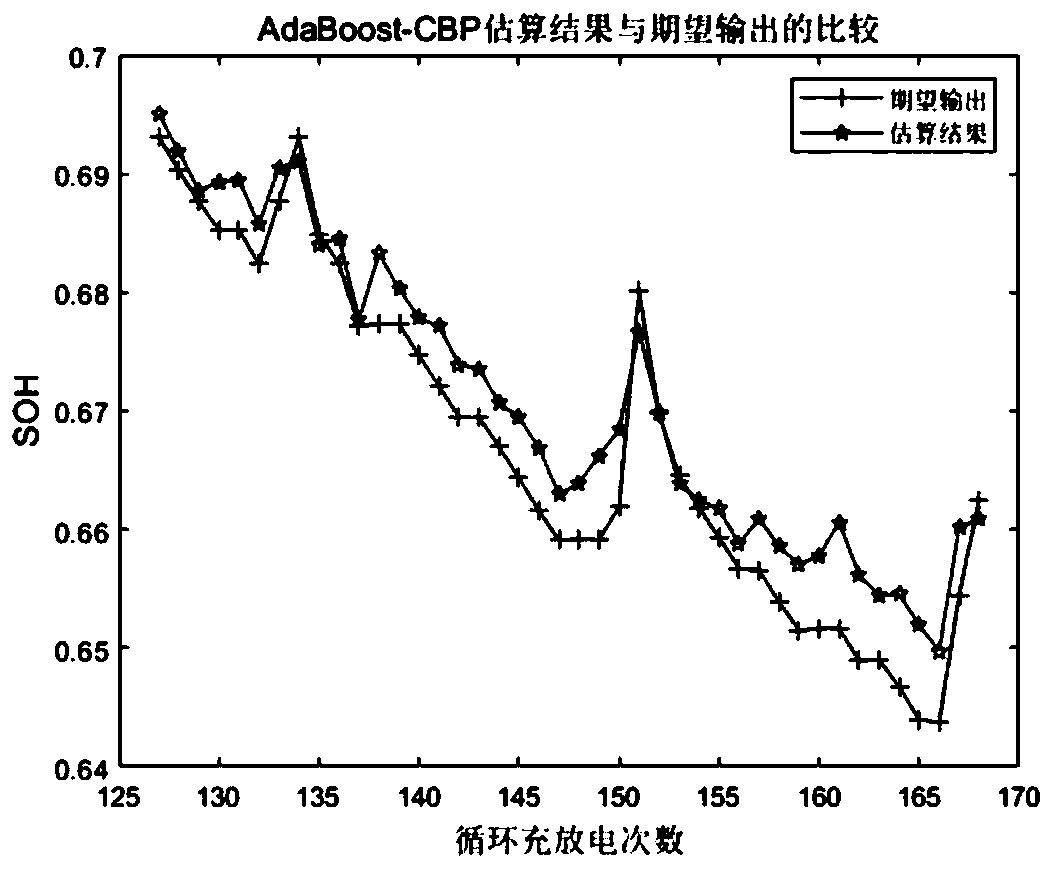

Electric vehicle lithium ion battery health state estimation method based on AdaBoost-CBP neural network

Active | CN110659722A | Increase diversity; Avoid local optima; Electrical testing; Character and pattern recognition; Algorithm; Electrical battery

The invention provides a method for estimating the state of health (SOH) of electric vehicle lithium-ion batteries based on an AdaBoost-CBP neural network. Because the discharge voltage, the discharge current and the number of charge-discharge cycles show clear trends during battery use, these three parameters are used as input data for SOH estimation, and the battery capacity is used as the output. Because the battery data are noisy and nonlinear, an extended Kalman filter is used for denoising. To address the tendency of the BP neural network to fall into local optima, fractional calculus is used to improve the gradient descent method. Finally, the fractional-order BP neural network is used as the weak learner: the adaptive boosting of the AdaBoost algorithm strengthens the learners' fitting capability, and the weak learners from each round are combined into a strong learner. This increases learner diversity, lets the learners complement one another's strengths on data from different operating conditions, and effectively improves estimation precision.

Owner:JIANGSU UNIV

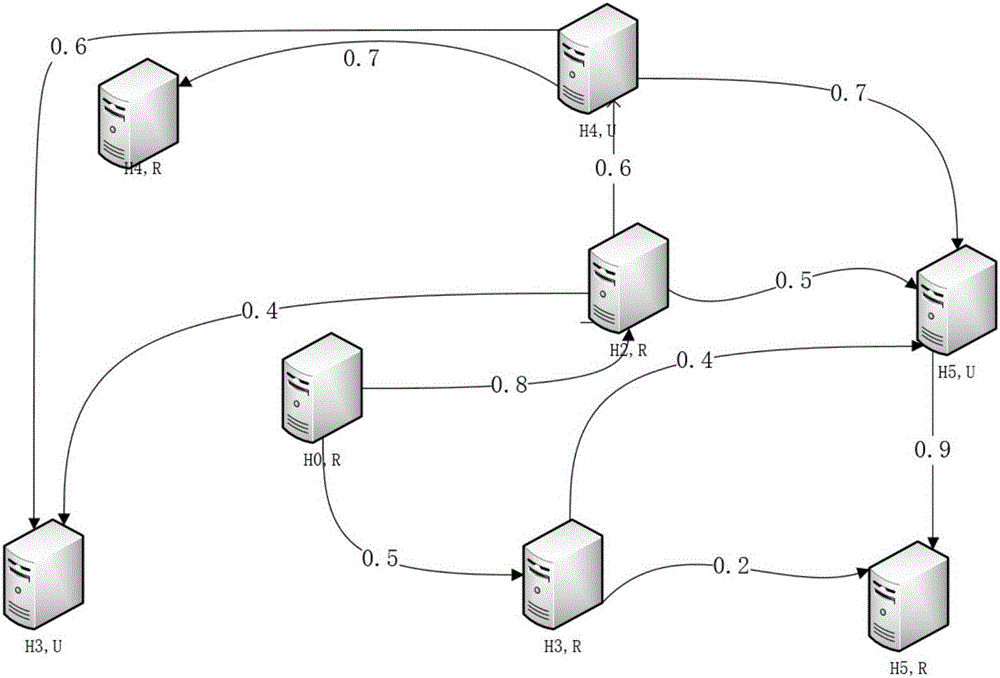

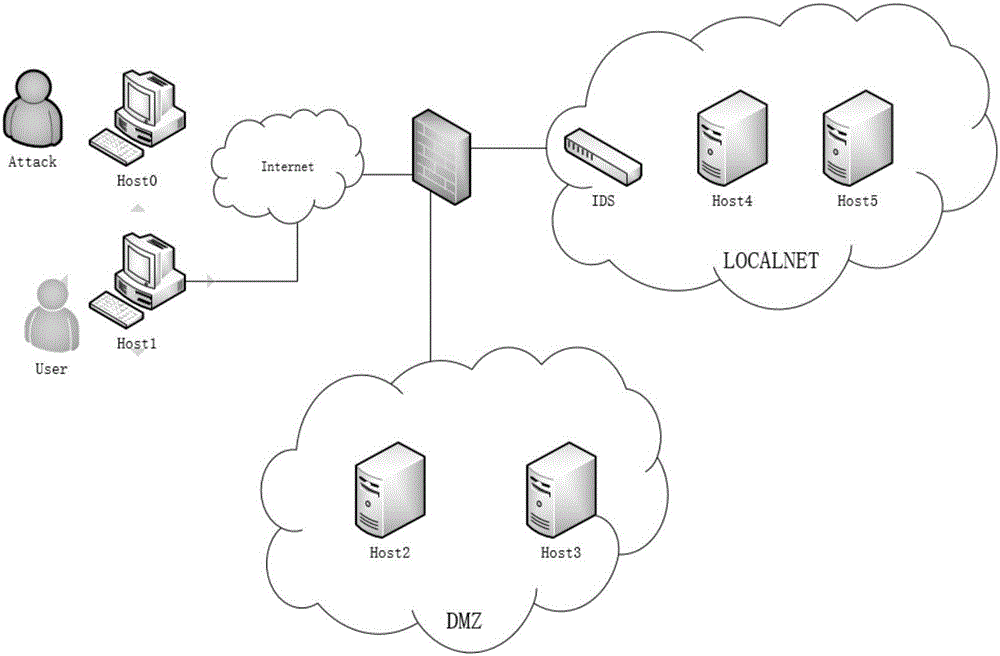

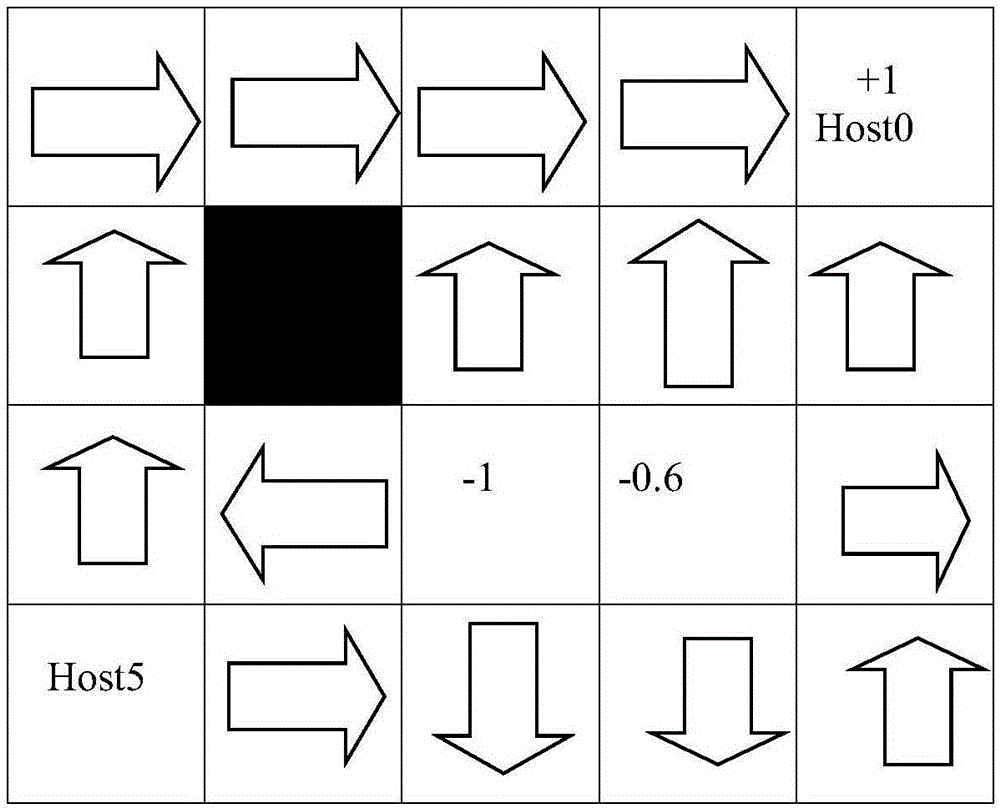

Dynamic protection path planning method based on reinforcement learning

Active | CN106657144A | Best protection path; Increased protection; Data switching networks; Network model; Online learning

The invention relates to a dynamic protection path planning method based on reinforcement learning, in the technical field of information security. The method comprises the following steps: 1, generating a distributed network attack graph; 2, finding the worst attack path; 3, generating a network model; and 4, obtaining the best protection path by reinforcement learning. Compared with existing technologies, the method has the following advantages: 1, no training data need to be collected to train a network model; 2, online learning continuously determines the best protection path for the different network states at different times; 3, transmitted data are strongly protected; and 4, the best protection path is generated quickly.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
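Step 4 does not say how the reinforcement learning is set up. One simple reading, assumed here, treats each network node as a state and each link as an action whose cost is a risk value derived from the attack graph, and learns Q-values whose greedy policy is the lowest-risk route. Because the toy graph is deterministic, the sketch uses a learning rate of 1 and sweeps every state-action pair, which makes the Q-learning backups converge exactly. The graph, risk values and function name are hypothetical.

```python
import math

def q_learning_protection_path(edges, source, target):
    """Return (path, total_risk) minimising summed link risk from source to target.

    edges: dict mapping node -> dict of neighbour -> risk cost; the risk
    weights stand in for the patent's attack-graph analysis (hypothetical).
    """
    nodes = set(edges) | {v for nbrs in edges.values() for v in nbrs}
    # Q[(u, v)] = total risk of moving u -> v and then acting greedily
    q = {(u, v): math.inf for u in edges for v in edges[u]}

    def best(u):
        if u == target:
            return 0.0
        return min((q[(u, v)] for v in edges.get(u, {})), default=math.inf)

    # learning rate 1 and no discounting in a deterministic graph: each sweep is a
    # full backup of every (state, action) pair, and |V| sweeps suffice
    for _ in range(len(nodes)):
        for u in edges:
            for v, cost in edges[u].items():
                q[(u, v)] = cost + best(v)

    # greedy rollout of the learned policy
    path, node = [source], source
    while node != target:
        nbrs = edges.get(node, {})
        if not nbrs or len(path) > len(nodes):
            return None, math.inf  # target unreachable
        node = min(nbrs, key=lambda v: q[(node, v)])
        path.append(node)
    return path, best(source)

edges = {"A": {"B": 1, "C": 4}, "B": {"C": 1, "D": 5}, "C": {"D": 1}, "D": {}}
print(q_learning_protection_path(edges, "A", "D"))
```

Re-running the sweeps whenever link risks change is one way to mimic the online updating that the abstract lists as an advantage.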

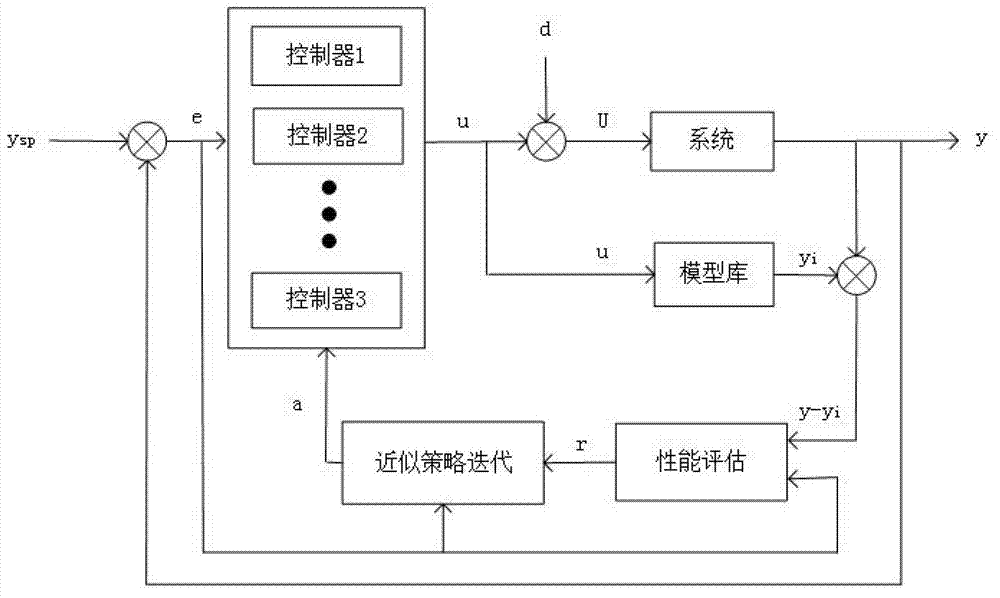

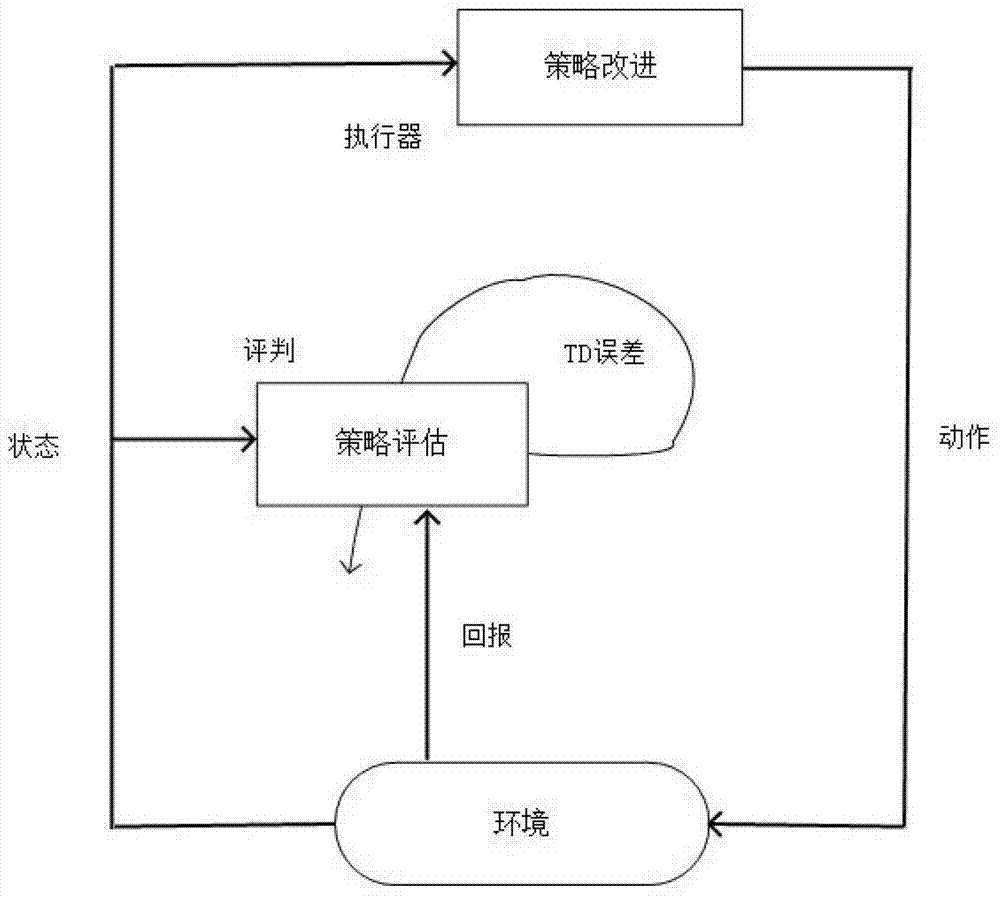

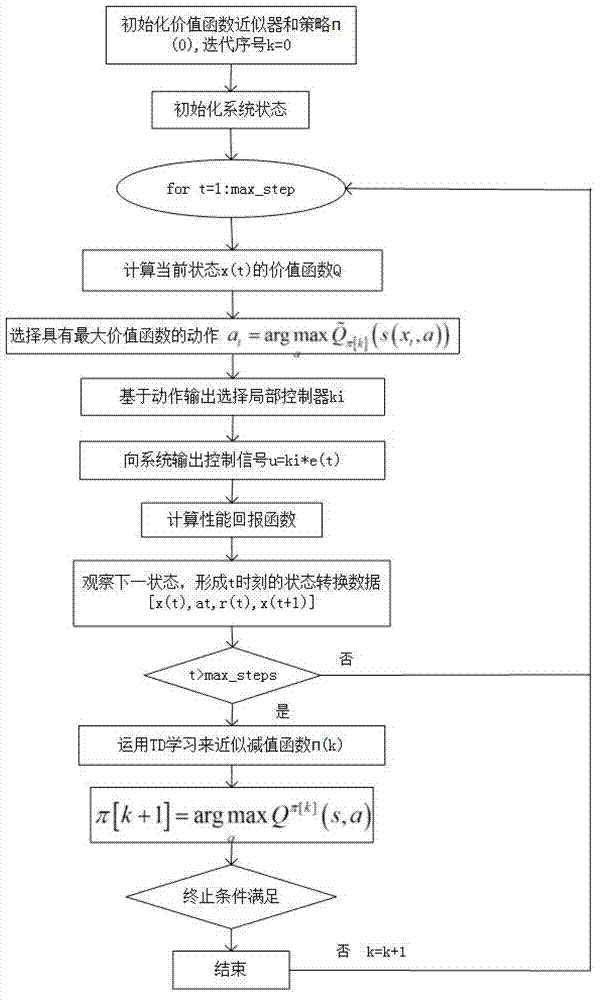

Multi-model control method based on self learning

Active | CN103399488A | The principle is simple; Strong reliability; Adaptive control; Control engineering; Model control

The invention discloses a multi-model control method based on self-learning. The method comprises the following steps: (1) building a model base consisting of a group of local models of a nonlinear model; (2) building a group of controllers, with a local controller designed for each local model in the model base; (3) evaluating performance: the output errors, i.e. the differences between the system output y and each model output y_i, are observed, and a performance feedback or value function is calculated from these signals and sent to an API (approximate policy iteration) module; and (4) executing an approximate policy iteration algorithm: the performance feedback signals are observed, and the error signals between the reference output and the system output are received and used as Markov decision process states, which are fed back as return signals for reinforcement learning. The method has a simple principle, a wide application range and high reliability, and it can guarantee the overall performance and convergence of the control.

Owner:NAT UNIV OF DEFENSE TECH
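Steps (1) to (3) resemble classic multiple-model adaptive control, in which each local model is scored by a switching index that combines its current output error with an exponentially forgotten history of past errors, and the controller paired with the best-scoring model is used. The sketch computes such an index; the weights α and β, the forgetting factor and the function name are assumptions, and the policy-iteration stage of step (4) is not shown.

```python
import numpy as np

def select_model(y, model_outputs, alpha=1.0, beta=1.0, forget=0.9):
    """Return (index of the best local model, switching indices J).

    y:             measured system outputs, shape (T,)
    model_outputs: outputs y_i of each local model, shape (n_models, T)
    J_i = alpha * e_i(T)^2 + beta * sum_{k=1..T} forget^(T-k) * e_i(k)^2
    """
    errors = y - model_outputs              # e_i(k) = y(k) - y_i(k), broadcast per model
    memory = np.zeros(model_outputs.shape[0])
    for t in range(errors.shape[1]):
        memory = forget * memory + errors[:, t] ** 2  # forgetting-factor sum
    j = alpha * errors[:, -1] ** 2 + beta * memory
    return int(np.argmin(j)), j

y = np.array([1.0, 1.0, 1.0])
outputs = np.array([[1.0, 1.0, 1.0], [0.0, 0.0, 0.0], [1.5, 1.5, 1.5]])
best, j = select_model(y, outputs)  # model 0 tracks the plant exactly
```

The forgetting factor trades off reacting quickly to a change in operating point against switching too often on transient errors.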

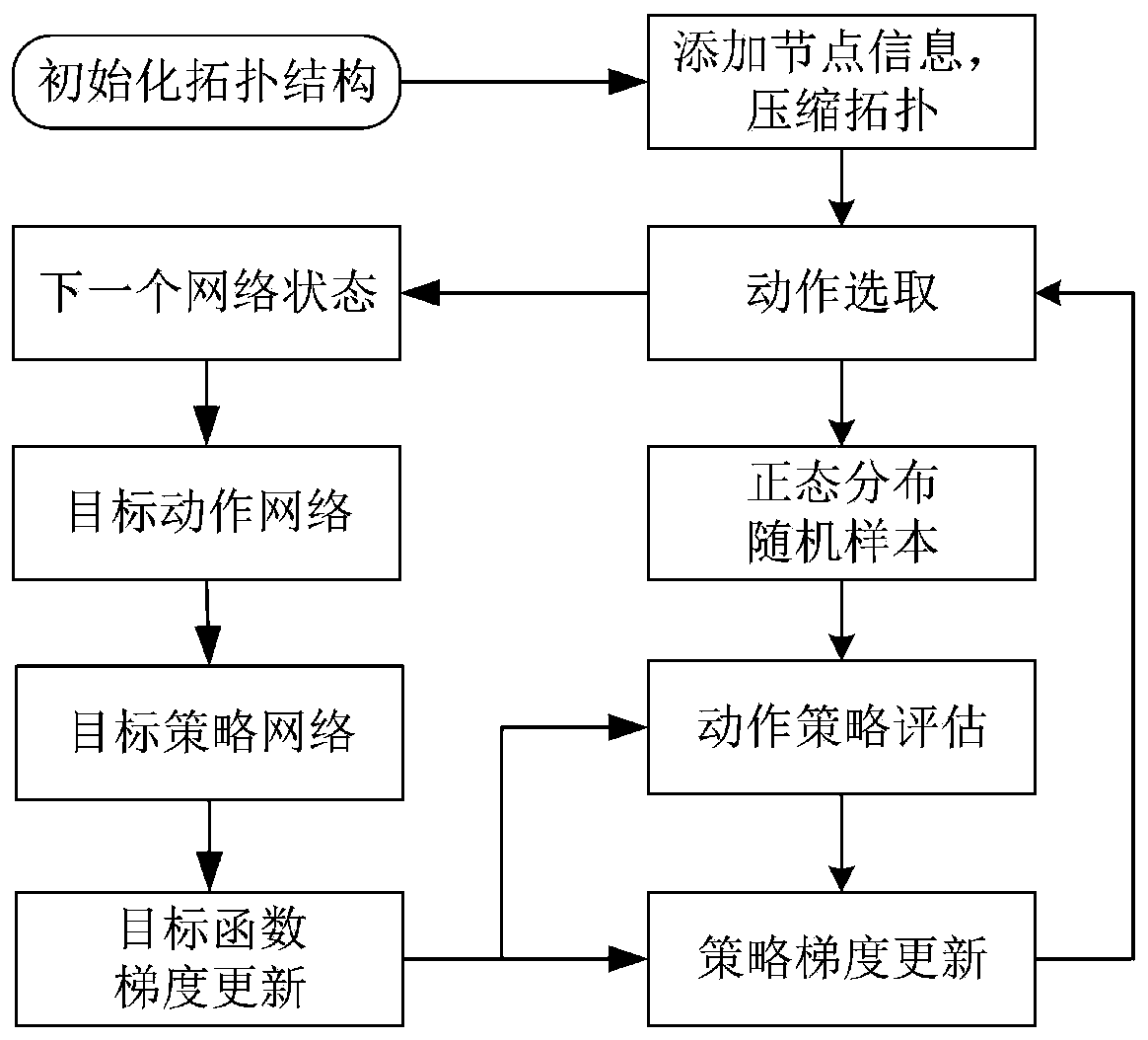

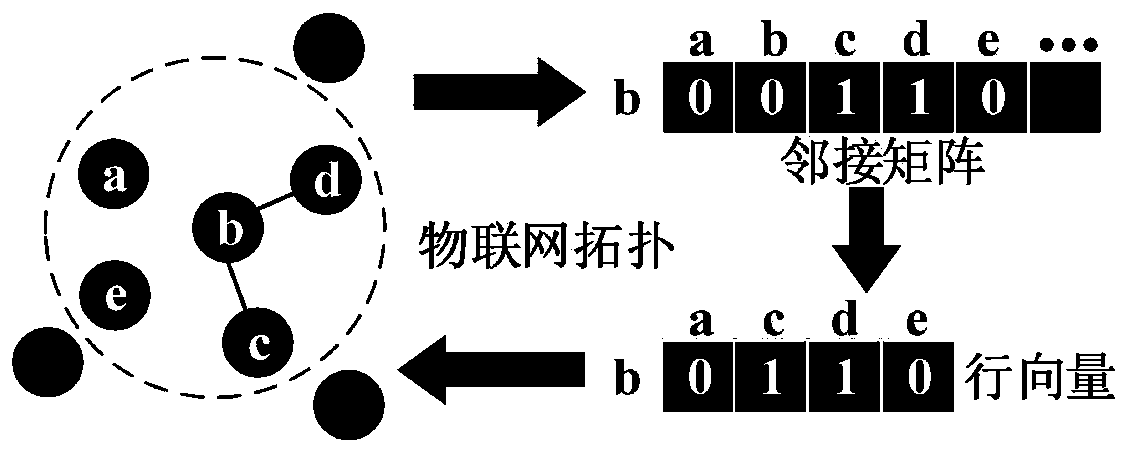

Method for optimizing robustness of topological structure of Internet of Things through autonomous learning

Pending | CN110807230A | Increased ability to resist attacks; Highly Reliable Data Transmission; Geometric CAD; Algorithm; The Internet

The invention discloses a method for optimizing the robustness of an Internet of Things topology through autonomous learning. The method comprises: 1, initializing the IoT topology; 2, compressing the topology; 3, initializing an autonomous learning model, i.e. constructing a deep deterministic policy model that combines the features of deep learning and reinforcement learning to train on the IoT topology; 4, training and testing the model; and 5, periodically repeating step 4 within one independent experiment, and repeating steps 1 to 4 across multiple independent experiments until the maximum number of iterations is reached. A maximum number of iterations is set, each experiment is repeated independently and the optimal result is selected; the experiments are repeated many times and the average value is taken as the experimental result. The method significantly improves the attack resistance of the initial topology, optimizes the robustness of the network topology through autonomous learning, and ensures highly reliable data transmission.

Owner:TIANJIN UNIV
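The abstract does not name its robustness measure. A common choice in the literature on robust network topologies is the R measure of Schneider et al. (2011): repeatedly remove the currently highest-degree node and average the fraction of nodes remaining in the largest connected component. The sketch computes R for an adjacency-list graph, so that a candidate topology produced by the learning model could be scored; using R here is an assumption, and the function names are hypothetical.

```python
from collections import deque

def largest_component(adj, alive):
    """Size of the largest connected component among the surviving nodes."""
    seen, best = set(), 0
    for start in alive:
        if start in seen:
            continue
        seen.add(start)
        queue, size = deque([start]), 0
        while queue:                       # breadth-first search
            u = queue.popleft()
            size += 1
            for v in adj[u]:
                if v in alive and v not in seen:
                    seen.add(v)
                    queue.append(v)
        best = max(best, size)
    return best

def robustness_r(adj):
    """R = (1/N) * sum_{Q=1..N} s(Q) under an adaptive highest-degree attack."""
    n = len(adj)
    alive = set(adj)
    total = 0.0
    for _ in range(n):
        # attack the surviving node with the most surviving neighbours
        victim = max(alive, key=lambda u: sum(v in alive for v in adj[u]))
        alive.remove(victim)
        total += largest_component(adj, alive) / n   # s(Q)
    return total / n

star = {0: [1, 2, 3, 4], 1: [0], 2: [0], 3: [0], 4: [0]}
print(robustness_r(star))  # removing the hub immediately fragments the star
```

A higher R means the topology keeps a larger connected core under targeted attack, which is the property the method aims to improve.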

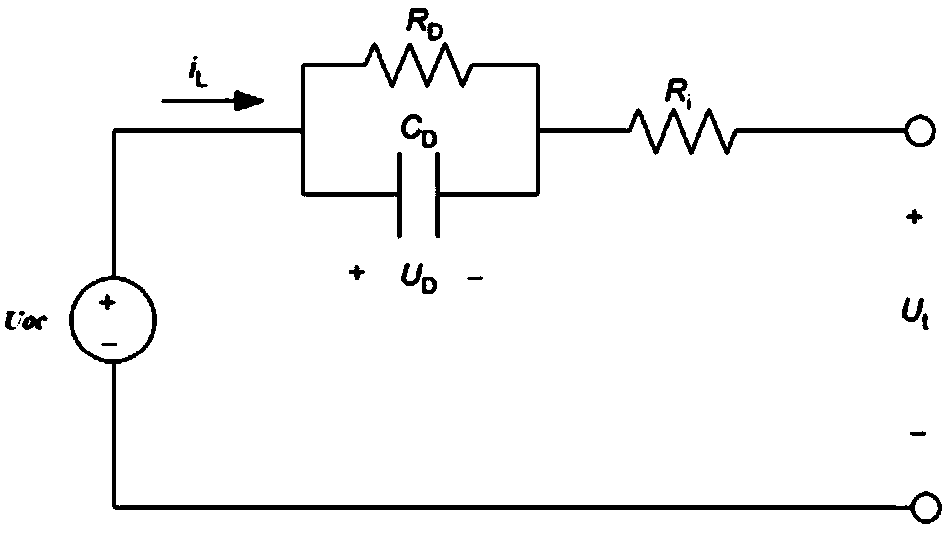

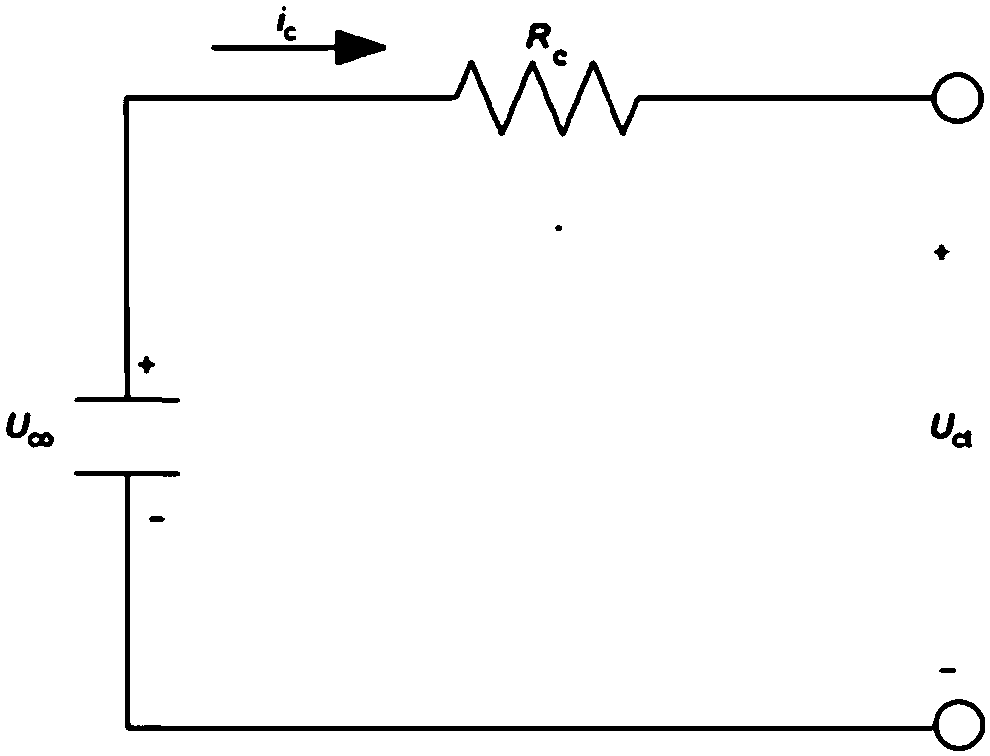

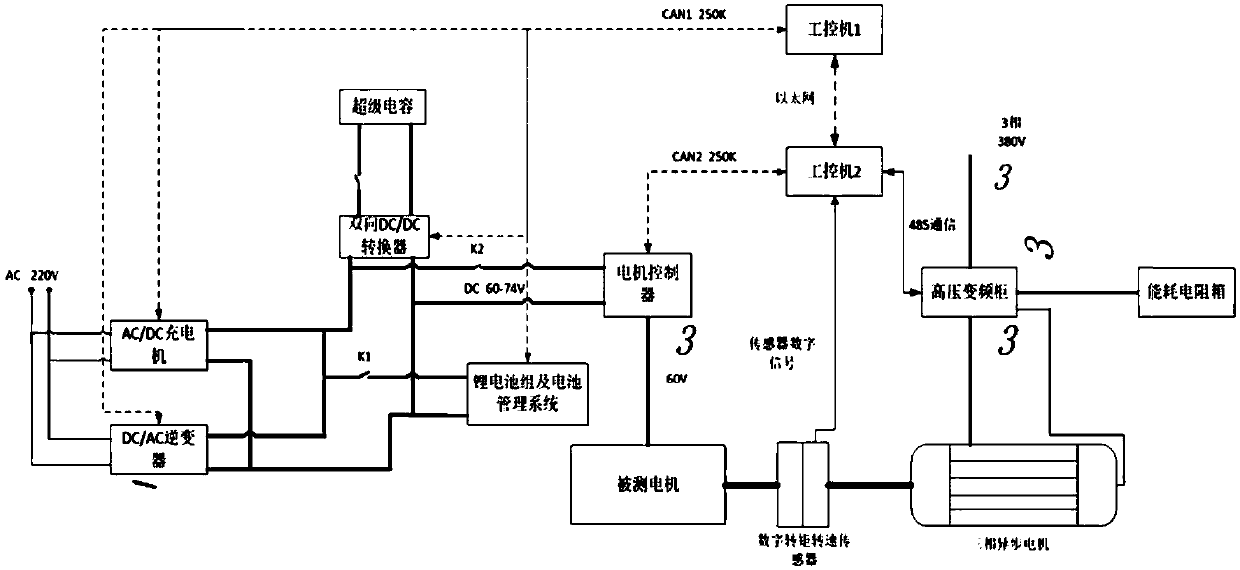

Electric automobile compound energy management method based on rule and Q-learning reinforcement learning

Active | CN109552079A | Guaranteed power; Extend your life; Propulsion by capacitors; Propulsion by batteries/cells; Reinforcement learning algorithm; Simulation

The invention discloses a compound energy management method for electric automobiles based on rules and Q-learning reinforcement learning. Energy management is performed according to the vehicle's power demand at every moment and the states of charge (SOC) of a lithium battery and a supercapacitor. In the Q-learning-based energy management strategy, the energy management controller observes the system state, takes actions, calculates the reward for each action and updates it in real time. Through simulated training with the Q-learning algorithm, these rewards are used to obtain an energy management strategy with minimum system power loss. Real-time power distribution is then performed with the learned strategy, while the rewards continue to be updated to adapt to the current driving conditions. While meeting the required power, the method maintains the lithium battery's charge, prolongs its service life, reduces system energy loss and improves the efficiency of the hybrid power system.

Owner:NINGBO INST OF TECH ZHEJIANG UNIV ZHEJIANG

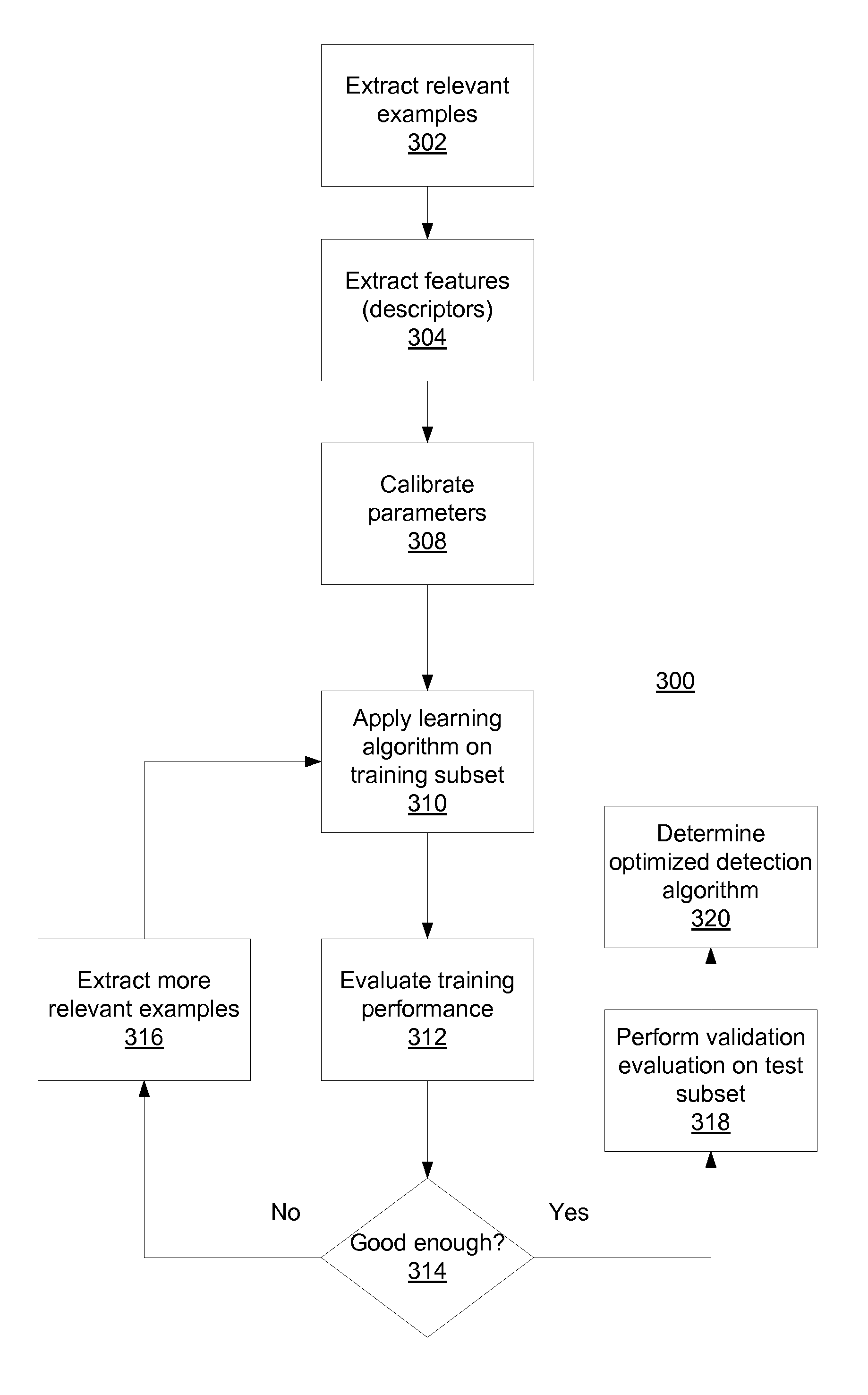

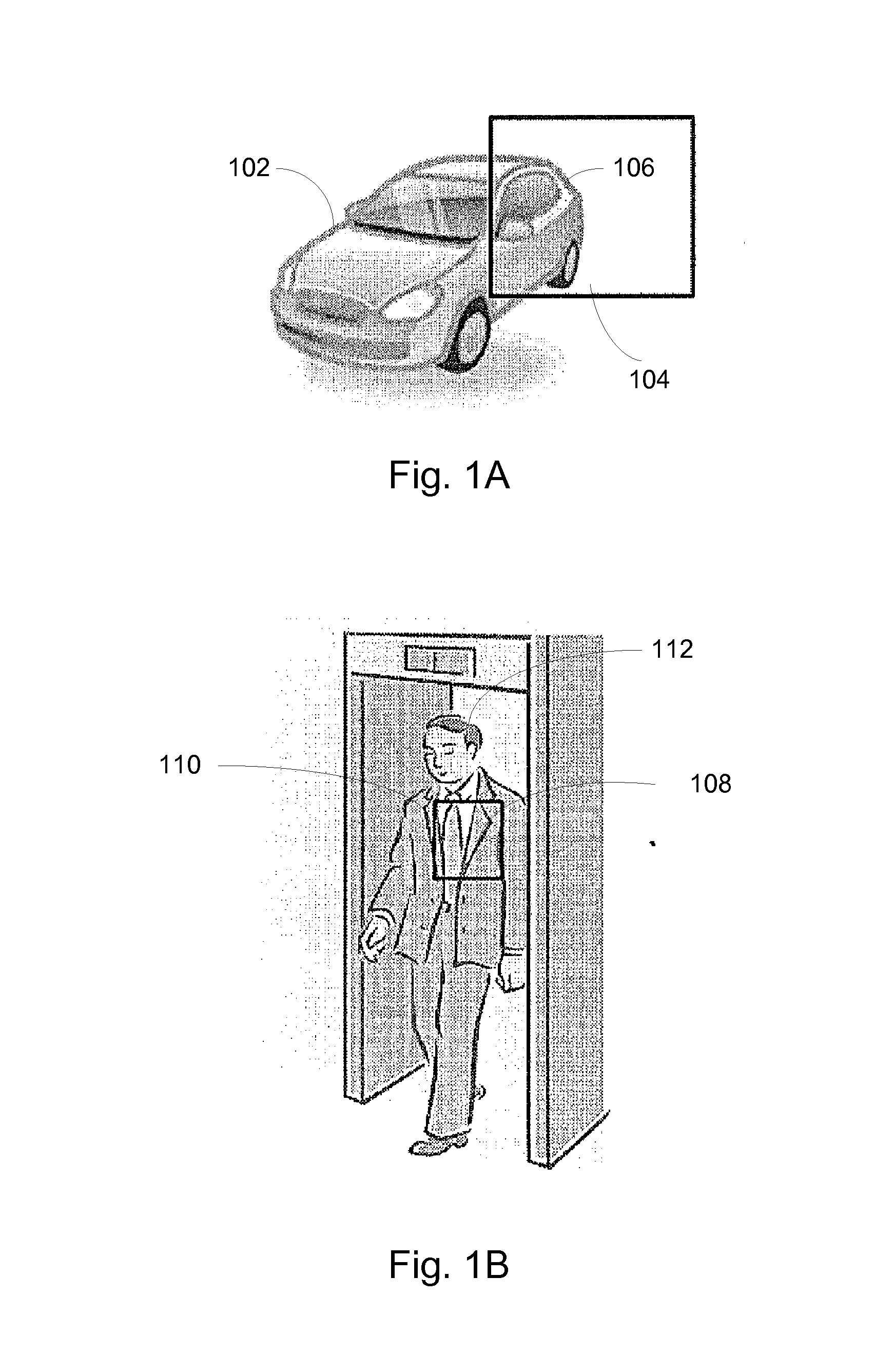

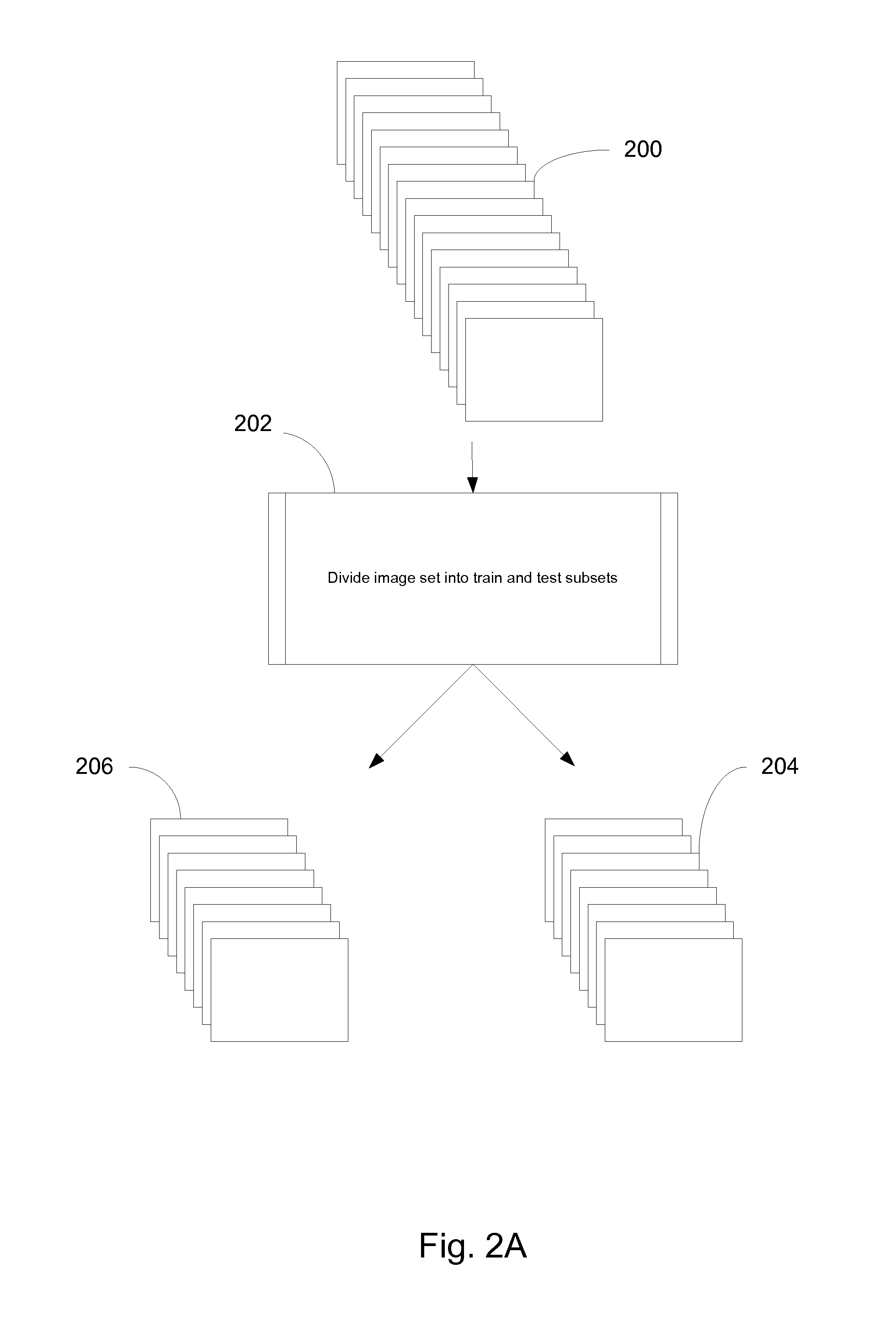

System and method for detection of a characteristic in samples of a sample set

Inactive | US20140040173A1 | Easy to detect; Digital computer details; Character and pattern recognition; Engineering; Data mining

A computer-implemented method for detecting a characteristic in a sample of a set of samples is described. The method may include receiving from a user an indication for each sample of said set of samples that the user determines to include the characteristic. The method may also include defining samples of said set of samples that were not indicated by the user to include the characteristic as not including the characteristic. The method may further include iteratively applying by a processing unit, a detection algorithm on a first subset of the set of samples, said detection algorithm using a set of detection criteria that includes one or a plurality of detection criteria, evaluating a detection performance of the detection algorithm and modifying the detection algorithm by making changes in the set of detection criteria to enhance detection performance of the learning algorithm. The method may still further include, upon reaching a desired level of detection performance for the modified detection algorithm, performing validation by testing the modified detection algorithm on a second subset of the set of samples.

Owner:VIDEO INFORM
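A minimal sketch of the loop described above, assuming the "set of detection criteria" is a single score threshold: samples the user flagged are positives, unflagged samples are treated as negatives, the threshold is raised step by step on the first subset until the F1 score reaches a target, and the tuned threshold is then validated on the second subset. The scores, the F1 metric, the target value and all names are hypothetical.

```python
def f1_score(pred, truth):
    """F1 of boolean predictions against boolean ground truth."""
    tp = sum(p and t for p, t in zip(pred, truth))
    fp = sum(p and not t for p, t in zip(pred, truth))
    fn = sum(t and not p for p, t in zip(pred, truth))
    return 0.0 if tp == 0 else 2 * tp / (2 * tp + fp + fn)

def detect(scores, threshold):
    """Apply the single detection criterion: score at or above the threshold."""
    return [s >= threshold for s in scores]

def tune_threshold(scores, flagged, target_f1=0.9, step=0.05):
    """Iteratively modify the criterion until detection performance is good enough."""
    threshold, best_t, best_f1 = 0.0, 0.0, -1.0
    while threshold <= 1.0:
        score = f1_score(detect(scores, threshold), flagged)
        if score > best_f1:
            best_t, best_f1 = threshold, score
        if score >= target_f1:
            break
        threshold = round(threshold + step, 10)  # avoid floating-point drift
    return best_t, best_f1

# first subset: tuning; second subset: validation
train_scores, train_flags = [0.9, 0.8, 0.2, 0.1], [True, True, False, False]
valid_scores, valid_flags = [0.7, 0.3, 0.6], [True, False, True]
t, train_f1 = tune_threshold(train_scores, train_flags)
valid_f1 = f1_score(detect(valid_scores, t), valid_flags)
```

Validating on a held-out subset guards against a criterion that only fits the samples it was tuned on.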

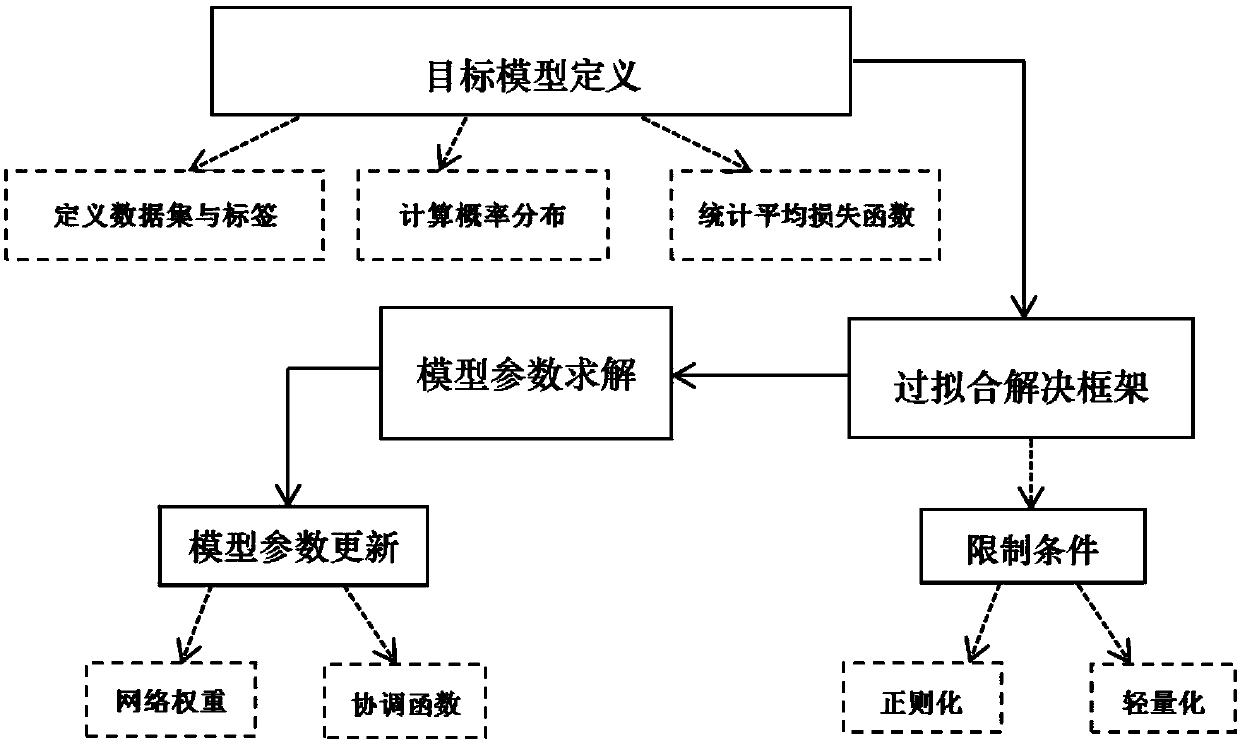

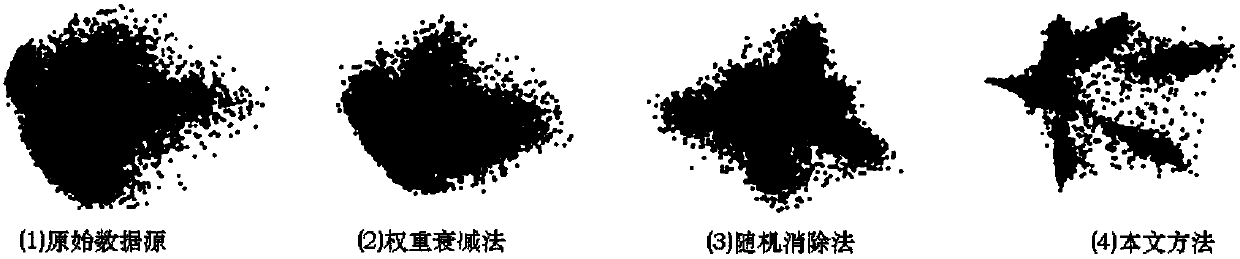

Over-fitting solution based on low-dimensional manifold regularized neural network

The invention discloses a solution to over-fitting based on a low-dimensional manifold regularized neural network. The method involves target model definition, an over-fitting solution framework, model parameter solving and model parameter updating. First, the target model is defined under constraints, the definition comprising a data set, its labels and an average loss function. Next, a framework for resolving over-fitting is proposed, in which the network parameters are solved under the constraints using regularization and weight decay, and learning capability and robustness are enhanced by introducing a bidirectional noise variable. Finally, the network weights and a coordinate function are updated from the obtained network parameter set using backpropagation and the point integral method, respectively, to obtain the optimal training solution. The method addresses cases where training a deep neural network yields no solution, only a locally optimal solution or an over-fitted solution, and it reduces the demand for computing resources, improving the efficiency of practical applications.

Owner:SHENZHEN WEITESHI TECH

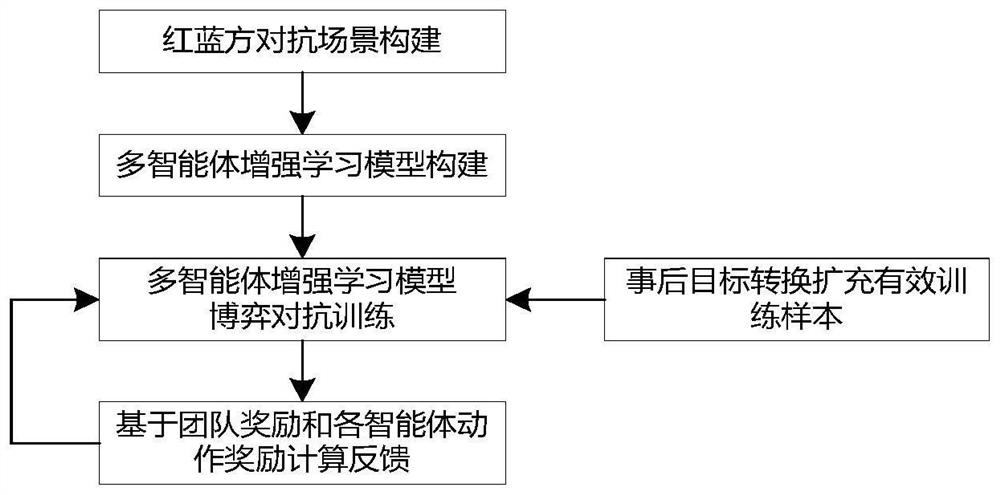

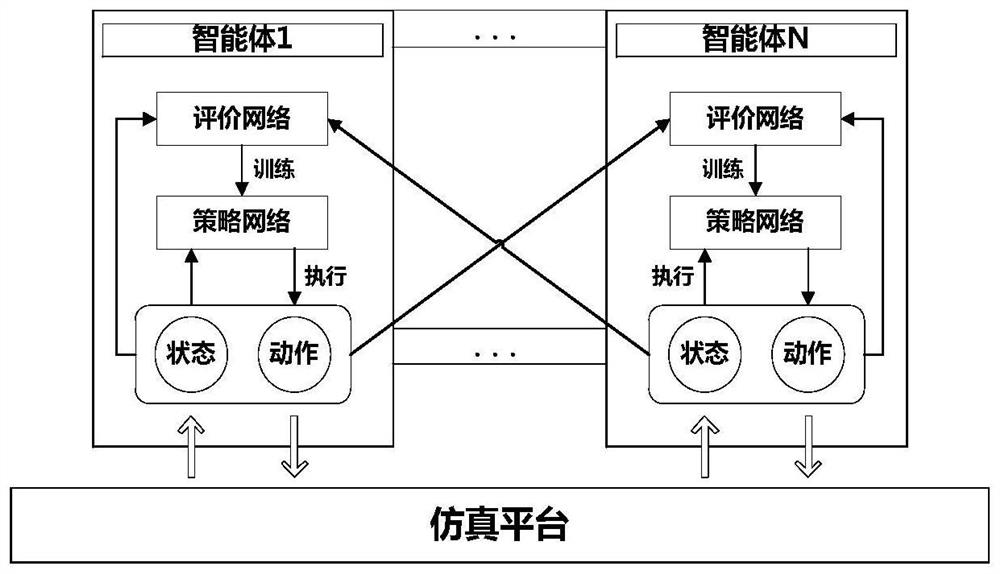

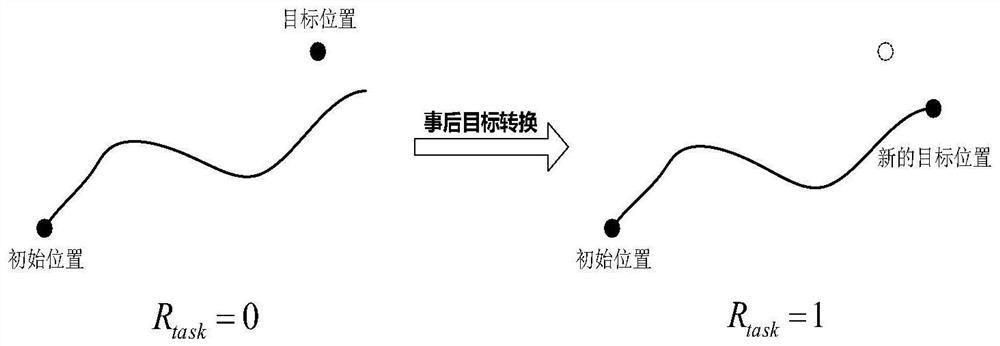

Multi-agent reinforcement learning method for collaborative decision-making of multiple combat units

Pending | CN114358141A | Fast optimization convergence; Increase the number of positive samples; Character and pattern recognition; Engineering; Data mining

A multi-agent reinforcement learning method for collaborative decision-making of multiple combat units comprises the following steps: establishing a multi-agent reinforcement learning model for a red-versus-blue game confrontation scenario, to model intelligent collaborative decision-making for multiple combat units; increasing the number of effective training samples with a post-event target conversion method, so that the multi-agent reinforcement learning model converges during optimization; constructing a reward function that uses the team's global task reward as a baseline and each combat unit's specific action reward as feedback; and generating multiple opponent strategies from different combat schemes and training the model through massive simulated game confrontations using this reward function. The method addresses two prior-art problems in red-versus-blue confrontations: poor decision collaboration among multiple combat units, and the difficulty of obtaining valuable training samples.

Owner:CHINA ACAD OF LAUNCH VEHICLE TECH
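The abstract does not define its post-event target conversion method. If it corresponds to hindsight goal relabelling, as in Hindsight Experience Replay, the idea is to copy a failed episode and pretend that the state it actually reached was the goal, which turns some zero-reward transitions into positive samples. The sketch shows that relabelling with the "final" strategy for a single-agent, goal-conditioned episode; the interpretation, the data layout and every name are assumptions.

```python
from dataclasses import dataclass, replace
from typing import List, Tuple

State = Tuple[int, int]

@dataclass(frozen=True)
class Transition:
    state: State
    action: int
    next_state: State
    goal: State
    reward: float

def goal_reward(achieved: State, goal: State) -> float:
    """Sparse reward: 1 only when the goal is reached."""
    return 1.0 if achieved == goal else 0.0

def hindsight_relabel(episode: List[Transition]) -> List[Transition]:
    """Relabel every transition with the state actually reached at episode end."""
    if not episode:
        return []
    new_goal = episode[-1].next_state
    return [replace(t, goal=new_goal, reward=goal_reward(t.next_state, new_goal))
            for t in episode]

# a failed episode: the goal (5, 5) was never reached, so every reward is 0
episode = [
    Transition((0, 0), 1, (0, 1), goal=(5, 5), reward=0.0),
    Transition((0, 1), 1, (0, 2), goal=(5, 5), reward=0.0),
]
buffer = episode + hindsight_relabel(episode)
positives = sum(t.reward > 0 for t in buffer)  # relabelling adds a positive sample
```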

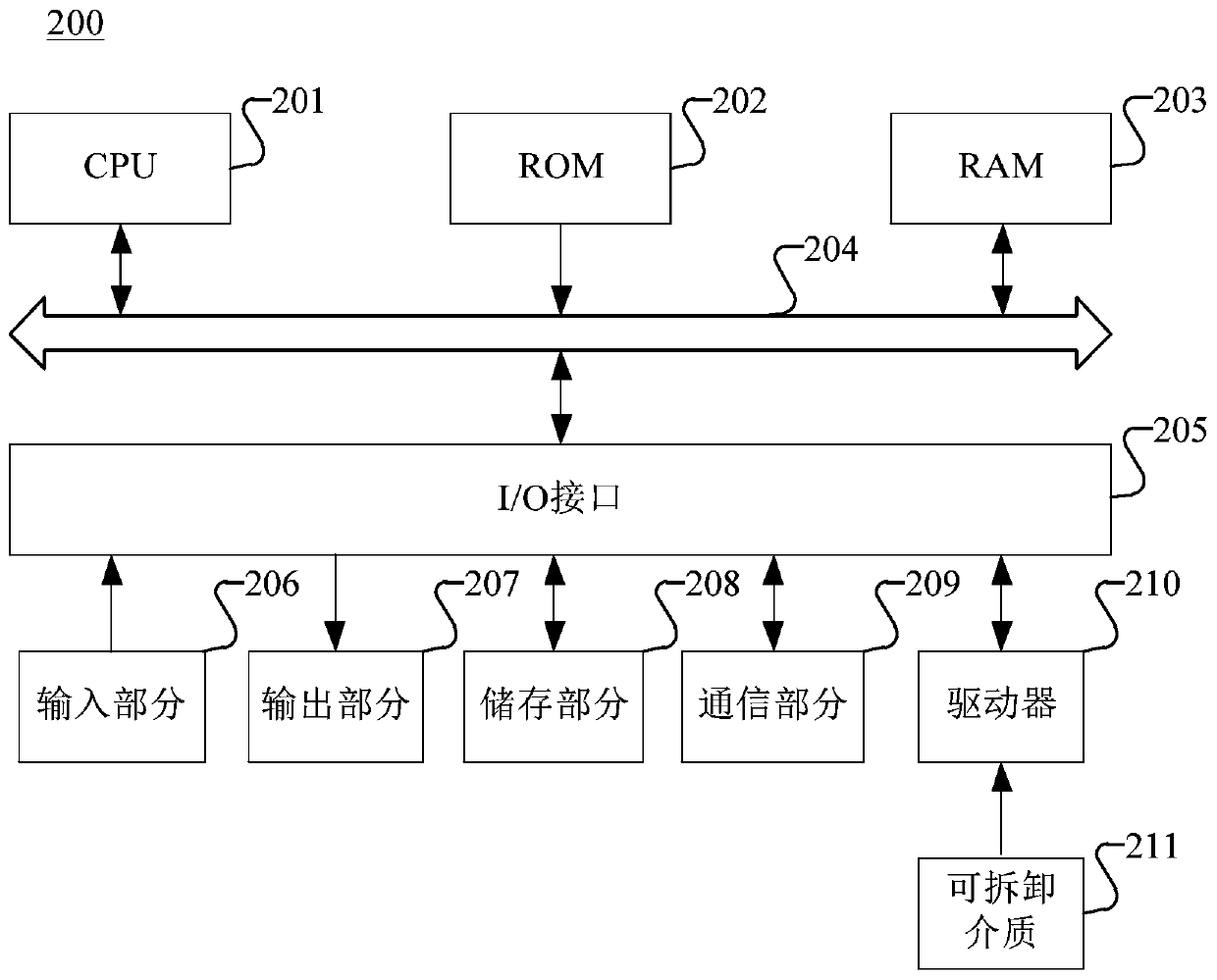

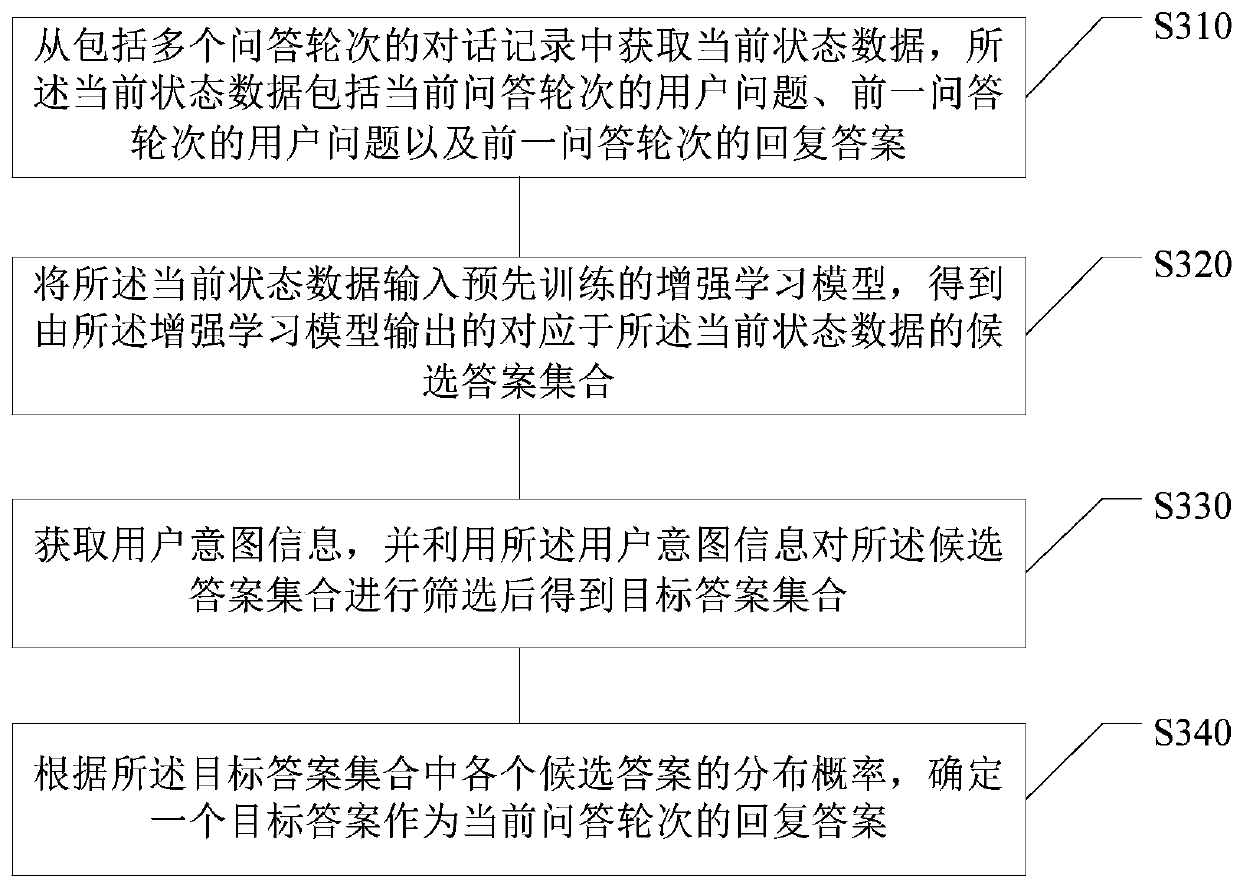

Answer matching method and device, electronic equipment and storage medium

Active | CN110837548A | Solve the problem of divergence; Improve accuracy; Digital data information retrieval; Artificial life; Engineering; Questions and answers

The invention relates to an answer matching method and device, electronic equipment and a storage medium, in the technical field of deep learning. The method comprises the following steps: acquiring current state data from a dialogue record comprising multiple question-and-answer rounds; inputting the current state data into a pre-trained reinforcement learning model to obtain the candidate answer set that the model outputs for that state; obtaining user intention information and filtering the candidate answer set with it to obtain a target answer set; and, according to the probability distribution over the candidate answers in the target answer set, determining one target answer as the reply for the current question-and-answer round. The invention further discloses an answer matching device, electronic equipment and a computer-readable storage medium. Because answers are generated by combining context with user intention, the accuracy and acceptance rate of answers can be greatly improved, and answer divergence in task-oriented multi-round dialogues is avoided.

Owner:TAIKANG LIFE INSURANCE CO LTD +1
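The last two steps (filter by user intention, then choose by probability) can be sketched as below. The candidate triples stand in for the reinforcement learning model's output; the intent labels and the fallback to the unfiltered set are assumptions, and picking the most probable answer is one reading of "according to the distribution probability" (sampling from the renormalised distribution would be another).

```python
from typing import List, Tuple

Candidate = Tuple[str, str, float]  # (answer text, intent label, model probability)

def select_answer(candidates: List[Candidate], user_intent: str) -> Tuple[str, float]:
    """Filter candidates by user intent, renormalise, and return the most probable."""
    if not candidates:
        raise ValueError("no candidate answers")
    target = [c for c in candidates if c[1] == user_intent]
    if not target:
        target = candidates  # hypothetical fallback when no candidate matches
    total = sum(p for _, _, p in target)
    if total <= 0:
        # degenerate model output: treat the remaining candidates as equally likely
        return target[0][0], 1.0 / len(target)
    renormalised = [(text, p / total) for text, _, p in target]
    return max(renormalised, key=lambda pair: pair[1])

cands = [("renewal answer 1", "renewal", 0.5),
         ("claim answer", "claim", 0.3),
         ("renewal answer 2", "renewal", 0.2)]
text, prob = select_answer(cands, "renewal")
```

Filtering before choosing keeps a high-probability but off-intent answer from being returned, which is the divergence problem the abstract targets.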

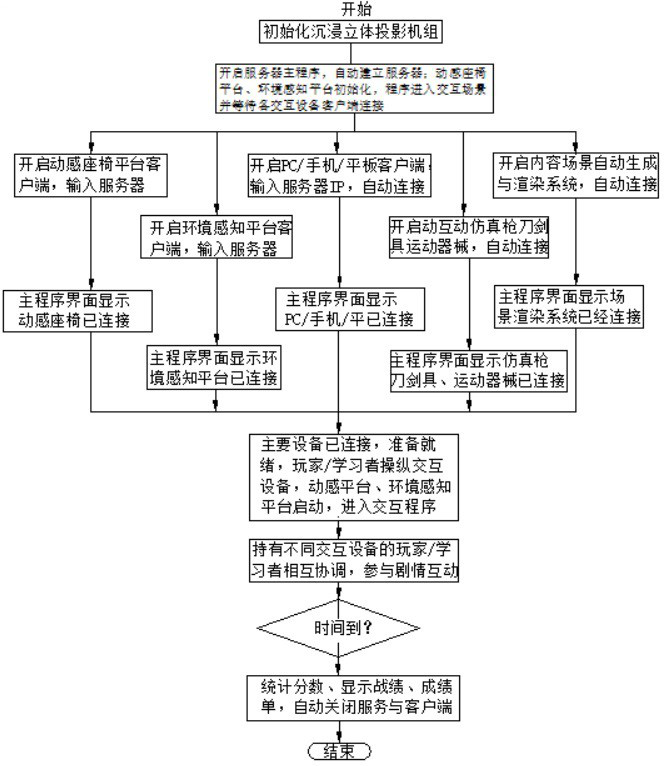

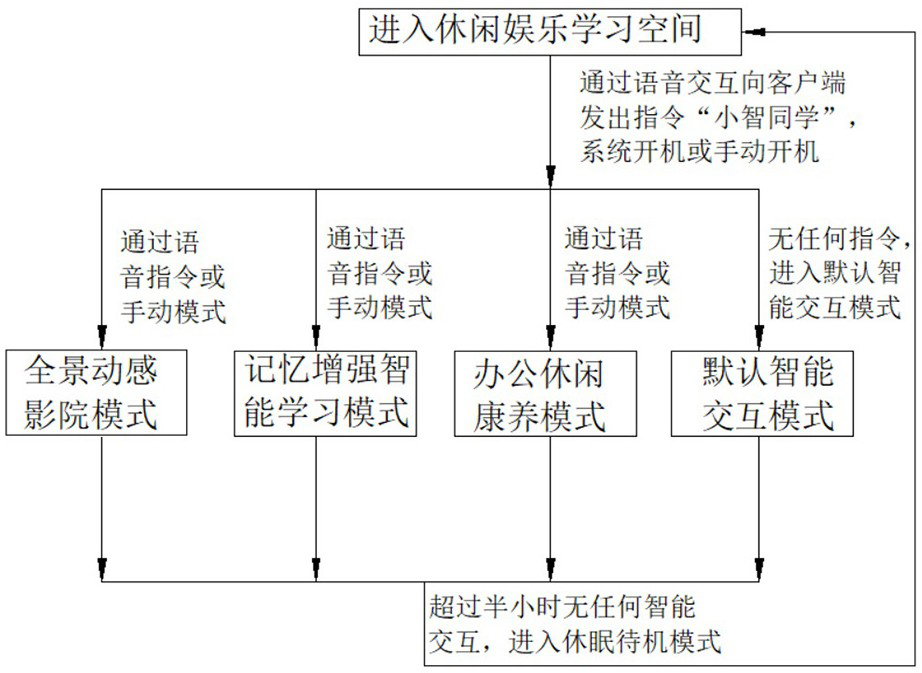

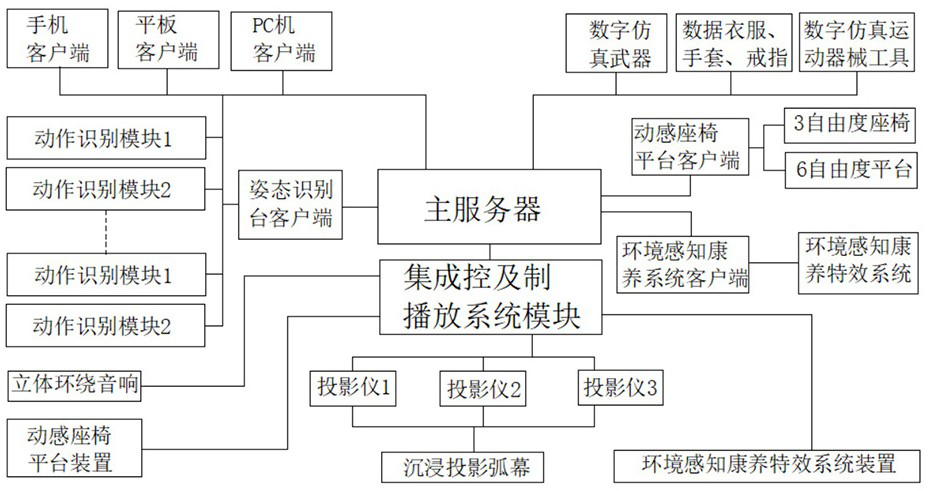

Entertainment and leisure learning device based on 5G Internet of Things virtual reality

Pending | CN111862711A | Improve utilization efficiency; Low cost; Cosmonautic condition simulations; Video games; Hardware structure; Engineering

The invention designs an entertainment and leisure learning device based on 5G Internet of Things virtual reality. Its hardware structure comprises a leisure, entertainment and learning space element into which are integrated a panoramic immersive stereo display system, an environment-perception health-care special-effects system, a dynamic platform seat device, a main server, an interaction module, a communication network module, a client, an integrated control and playback system, and a stereo surround sound system. Because these software and hardware devices are organically integrated, the device serves multiple functions: a panoramic virtual reality dynamic cinema device, a panoramic virtual reality immersive memory-enhancement learning device, a panoramic virtual reality immersive leisure health-maintenance device, a panoramic virtual reality medical health-maintenance and rehabilitation device, and the like. One device thus serves many uses, which improves the overall utilization efficiency of the software and hardware and reduces cost.

Owner:Guangzhou Guangjian Communication Technology Co., Ltd. (广州光建通信技术有限公司)

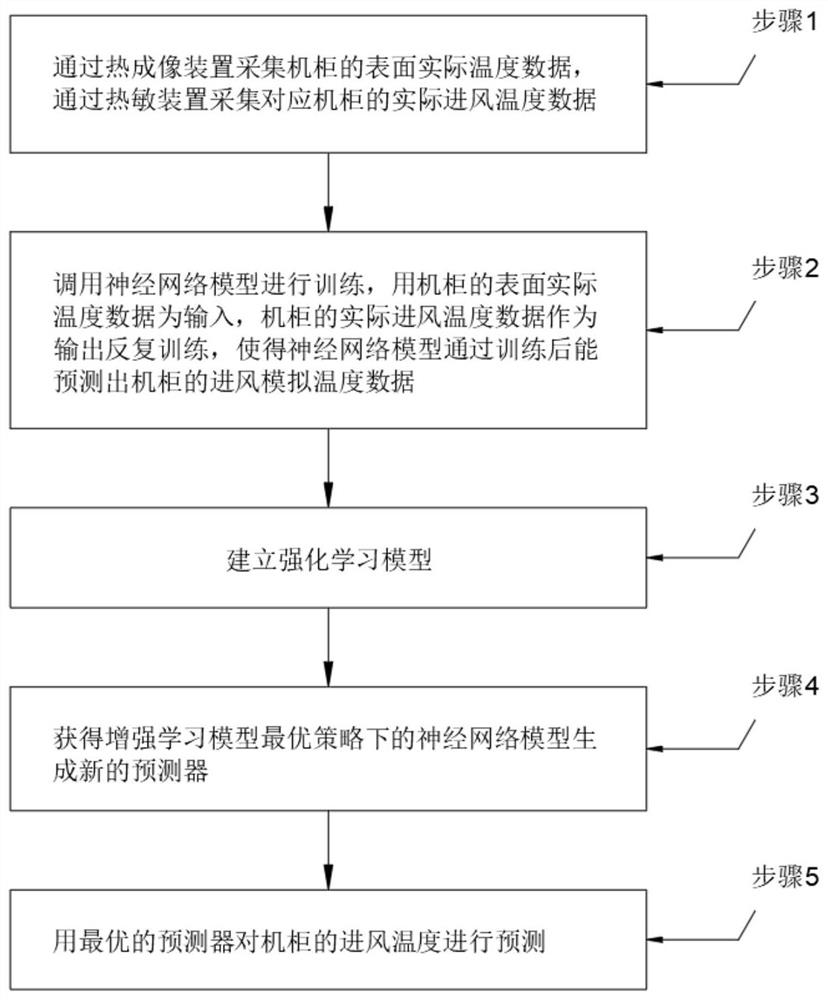

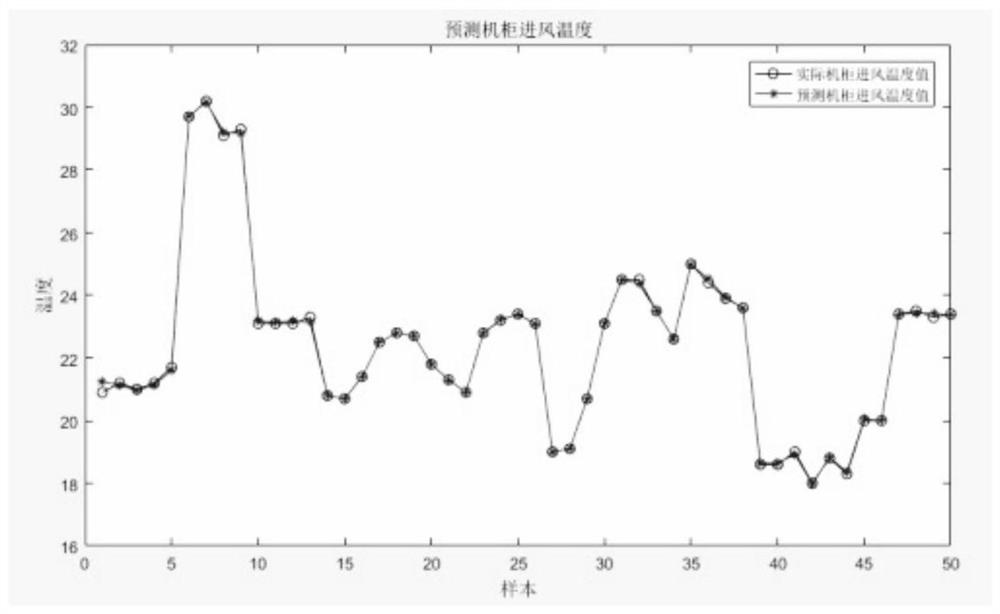

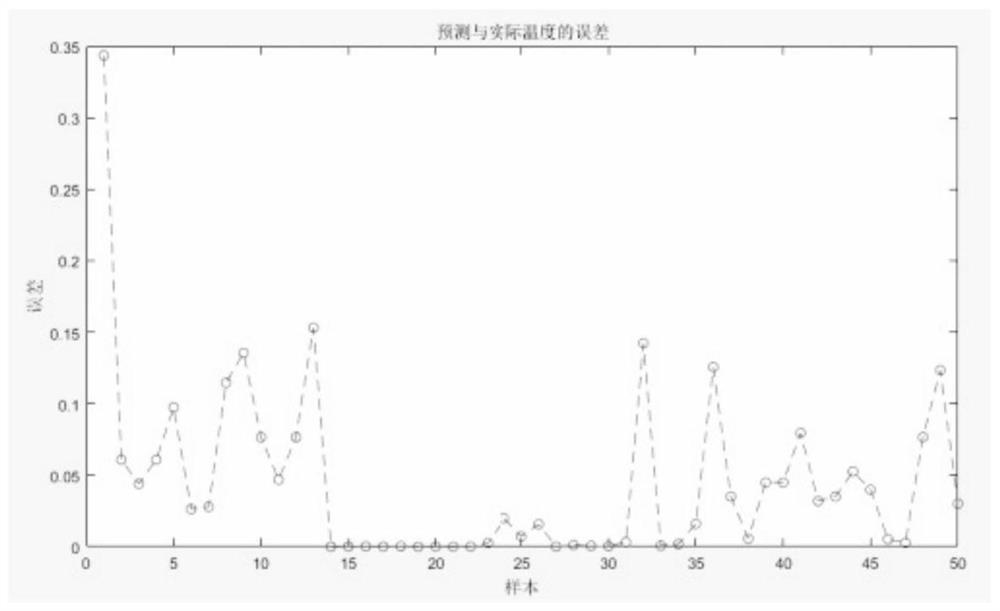

Method for predicting air inlet temperature of cabinet based on reinforcement learning model

Active | CN111795761A | Improve accuracy; Reduce in quantity; Radiation pyrometry; Thermometer applications; Simulation; Network model

The invention relates to the technical field of artificial intelligence, in particular to a method for predicting the air inlet temperature of a cabinet based on a reinforcement learning model. The method comprises the following steps: 1, collecting actual surface temperature data of the cabinet with a thermal imaging device, and collecting the corresponding actual air inlet temperature data with a thermosensitive device; 2, training a neural network model repeatedly, with the cabinet's actual surface temperature data as input and its actual air inlet temperature data as output, so that after training the model can predict simulated air inlet temperature data for the cabinet; 3, establishing a reinforcement learning model; 4, obtaining the neural network model under the optimal policy of the reinforcement learning model to generate a new predictor; and 5, predicting the cabinet's air inlet temperature with this optimal predictor. The invention improves the accuracy of the simulated air inlet temperature data, saves material, reduces labor cost and is convenient to use.

Owner:Phoenix (Shanghai) Environmental Control Technology Co., Ltd. (菲尼克斯(上海)环境控制技术有限公司)

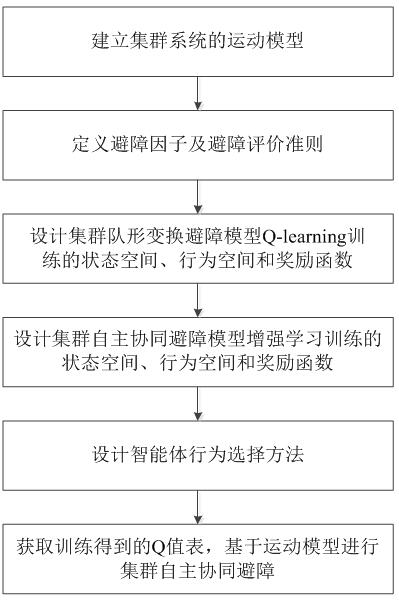

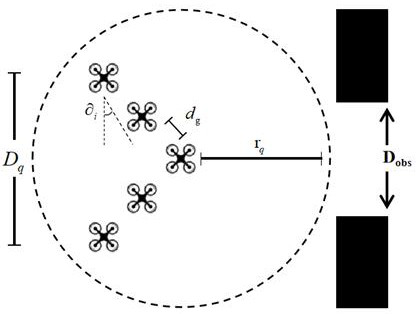

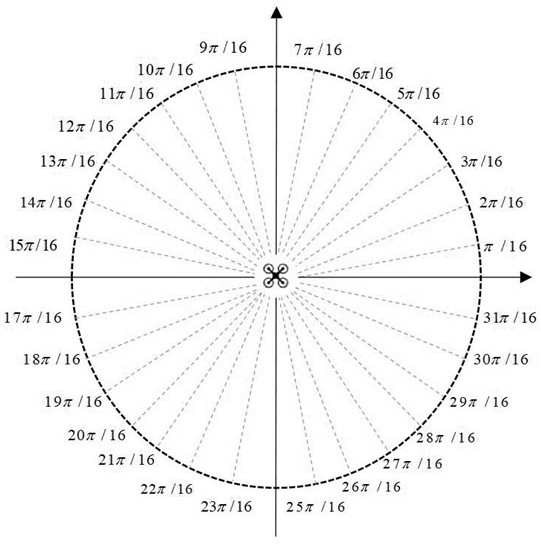

Multi-agent cluster obstacle avoidance method based on reinforcement learning

Active | CN113156954A | Optimal Cluster Individual Obstacle Avoidance Strategy; Improve obstacle avoidance efficiency; Position/course control in two dimensions; Algorithm; Simulation

The invention discloses a multi-agent cluster obstacle avoidance method based on reinforcement learning. The method comprises the following steps: S1, establishing a motion model of the cluster system; S2, defining an obstacle avoidance factor ξ and an obstacle avoidance evaluation criterion; S3, designing the state space, behavior space and reward function for Q-learning training of the cluster formation-transformation obstacle avoidance model, which is used when ξ falls below ξ_min; S4, designing the state space, behavior space and reward function for reinforcement learning training of the cluster autonomous collaborative obstacle avoidance model; S5, designing an agent behavior selection method; and S6, using the Q-value table obtained by training to perform cluster autonomous collaborative obstacle avoidance based on the motion model defined in S1. Parameters such as the obstacle avoidance factor and the evaluation criterion are used to select and judge between the cluster obstacle avoidance models, and the Q-learning algorithm is used to train the cluster autonomous collaborative obstacle avoidance model, yielding an optimal obstacle avoidance strategy for individual cluster members and high obstacle avoidance efficiency.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

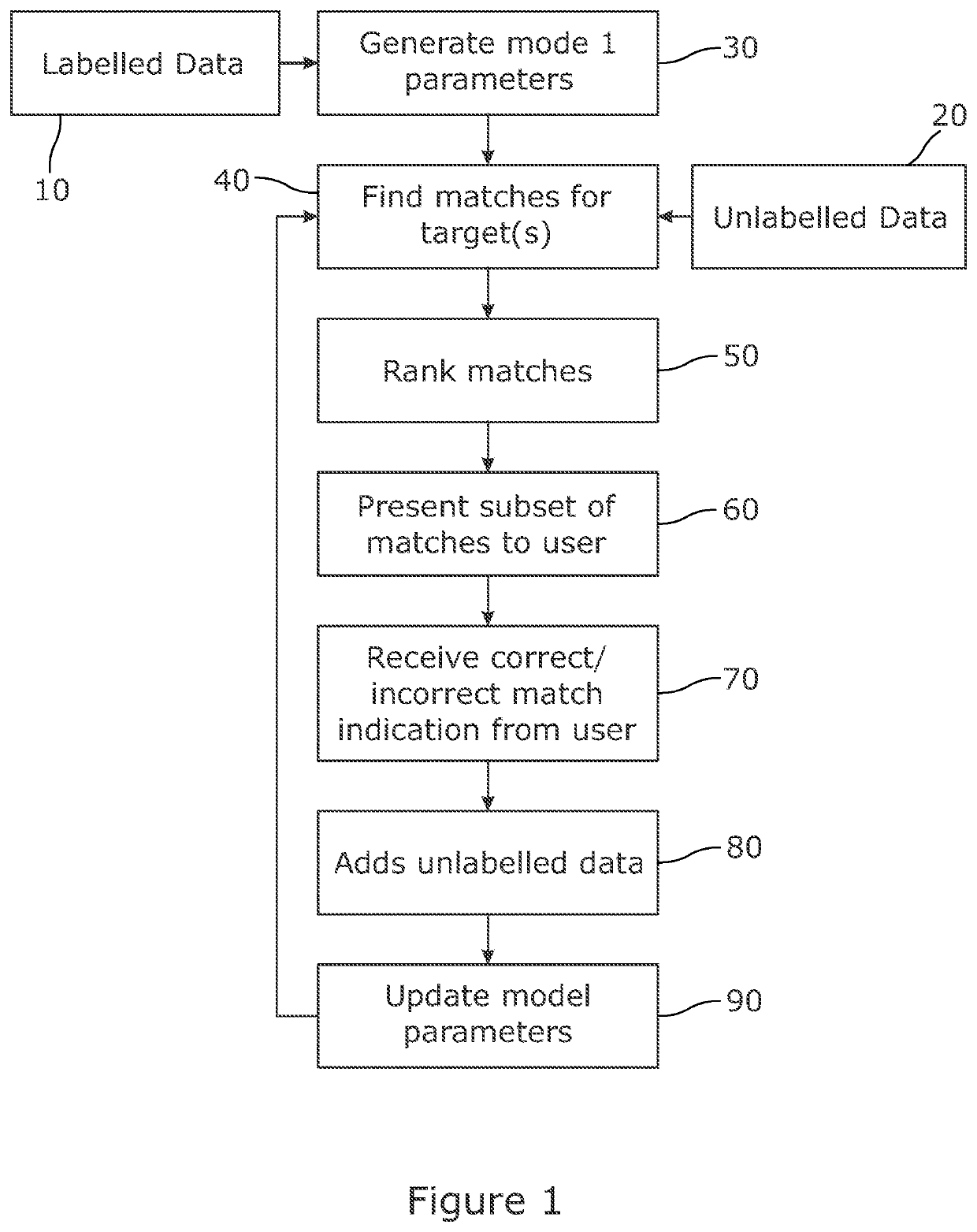

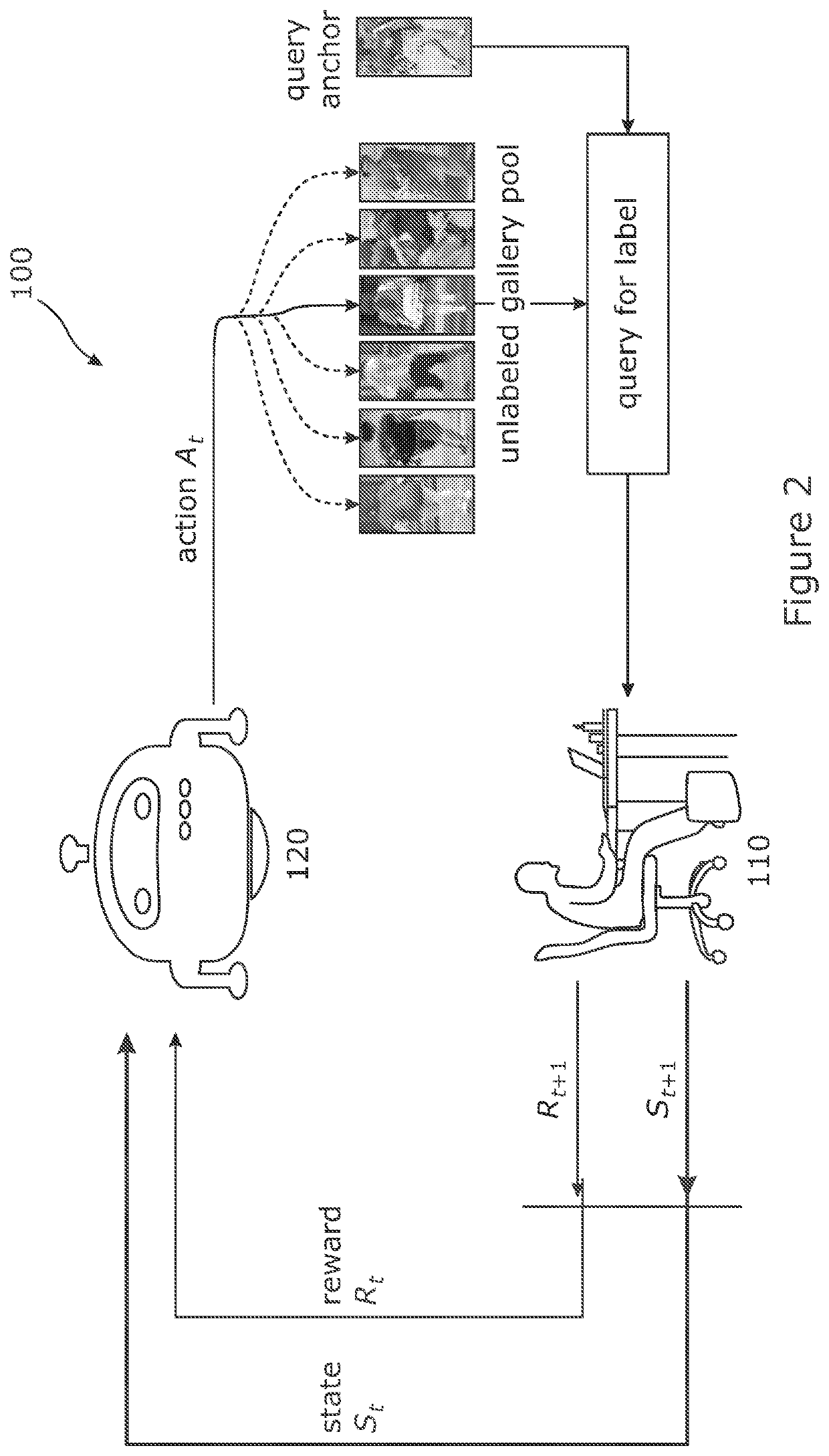

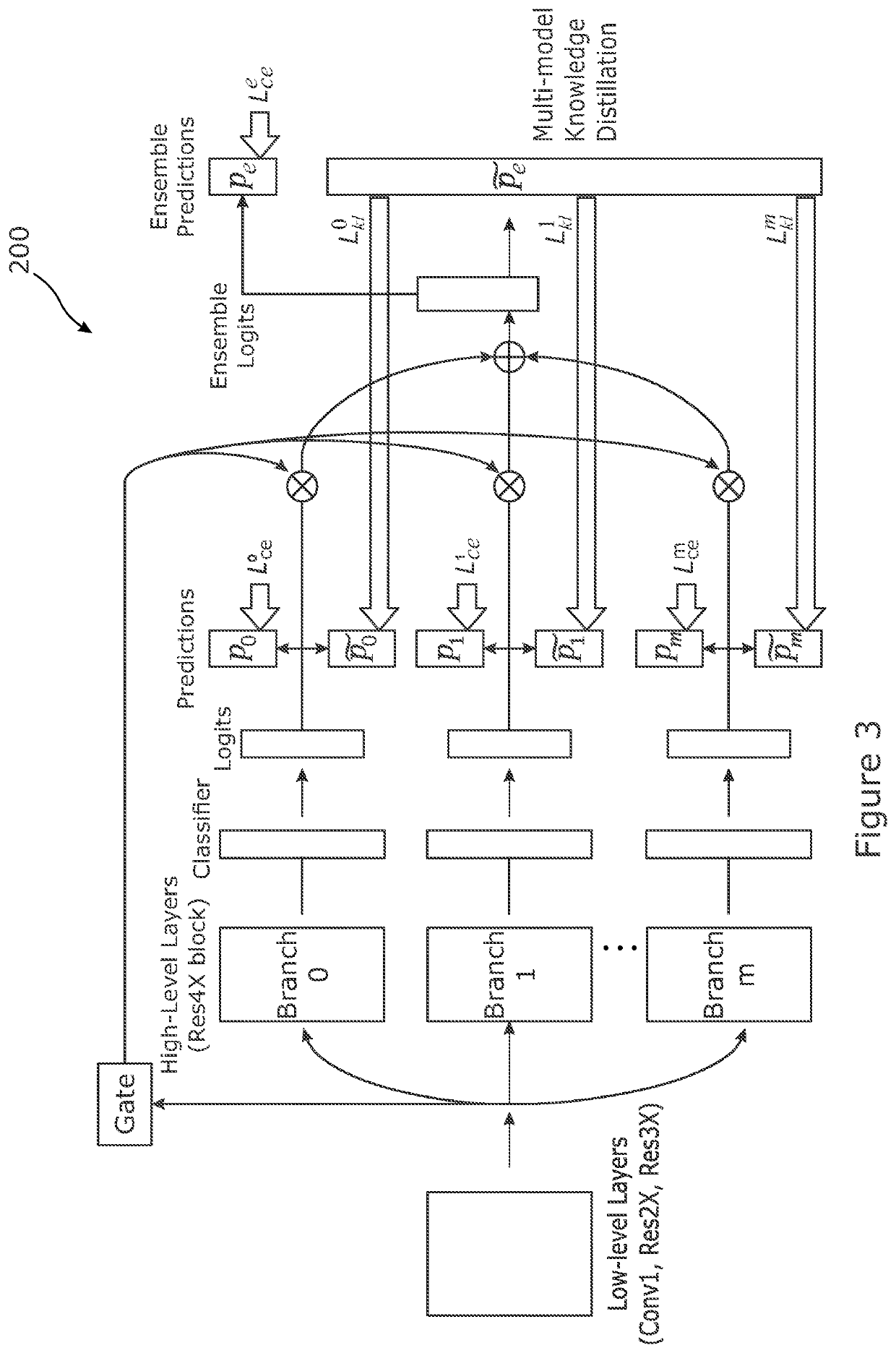

Optimised Machine Learning

Pending | US20220318621A1 | Eliminate need; Avoid the need; Mathematical models; Artificial life; Data set; Labeled data

Method for optimising a reinforcement learning model, comprising the steps of: receiving a labelled data set; receiving an unlabelled data set; generating model parameters to form an initial reinforcement learning model, using the labelled data set as a training data set; finding a plurality of matches for one or more targets within the unlabelled data set using the initial reinforcement learning model; ranking the plurality of matches; presenting a subset of the ranked matches and the corresponding one or more targets, wherein the subset includes the highest-ranked matches; receiving a signal indicating that one or more of the presented highest-ranked matches is an incorrect match; adding information describing the indicated incorrect match or matches and the corresponding target to the labelled data set to form a new training data set; and updating the model parameters of the initial reinforcement learning model using the new training data set to form an updated reinforcement learning model.

Owner:VERITONE

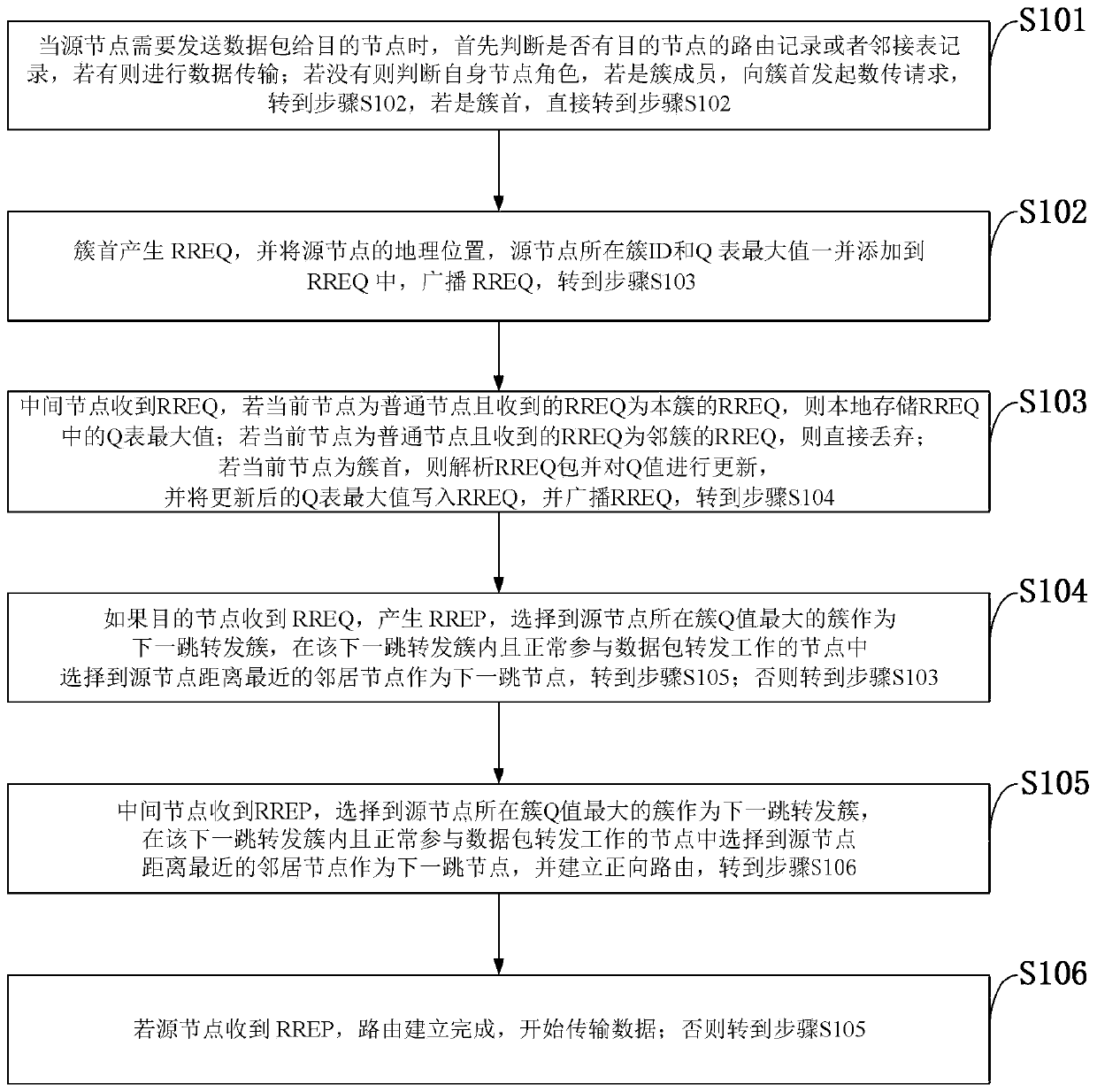

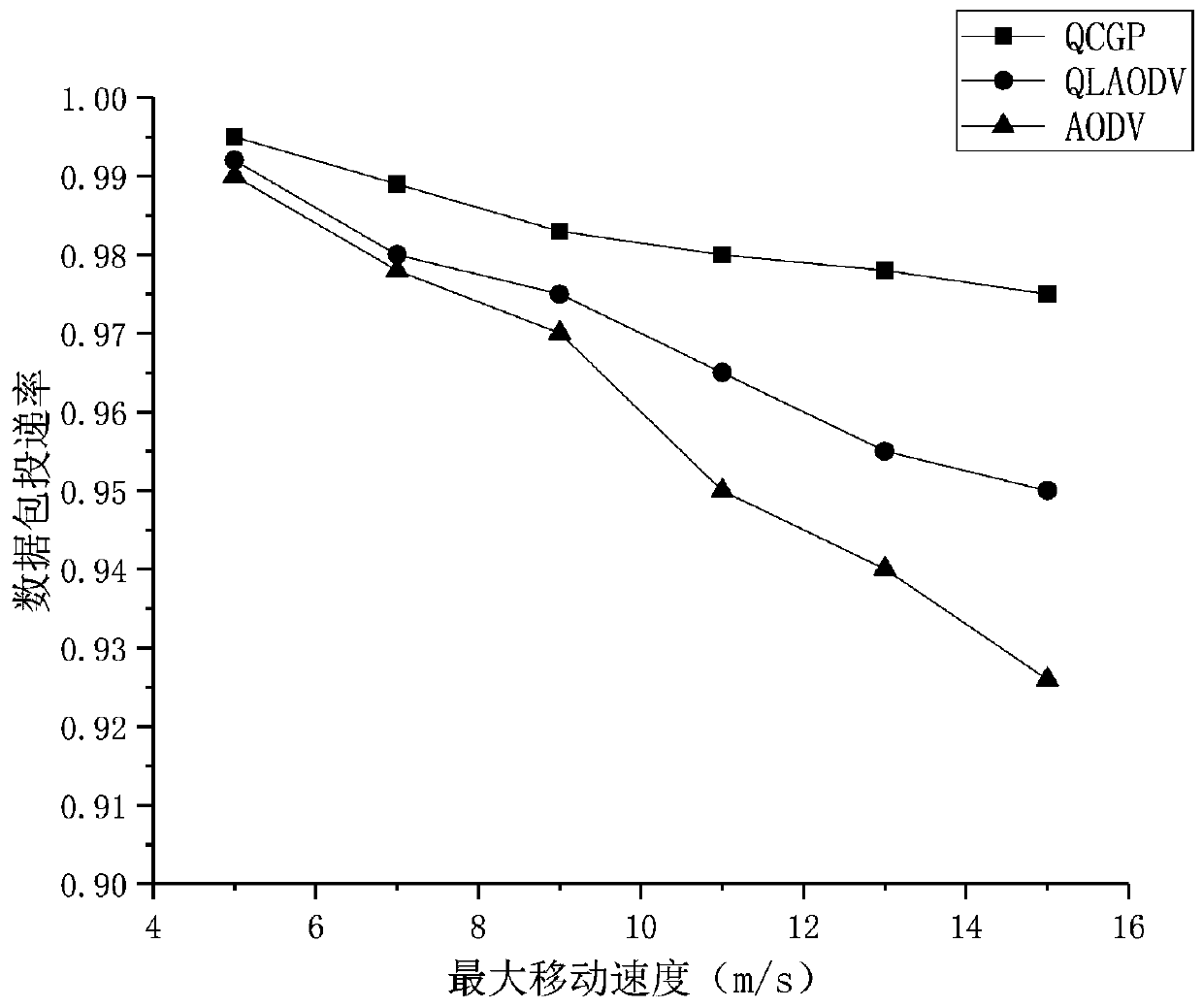

Hybrid routing method based on clustering and reinforcement learning and ocean communication system

Active · CN111510956A · Not easy to lose, Reduce overhead, Network traffic/resource management, Network topologies, Path, Ping, Data pack

The invention belongs to the technical field of ocean communication and discloses a hybrid routing method based on clustering and reinforcement learning, together with an ocean communication system. At the macro level, the method learns the Q value of the whole cluster online to determine the optimal next-hop grid; it then locally determines a specific node within that grid and selects it as the next-hop node. Route discovery combines the on-demand routing idea of the AODV algorithm with the greedy principle of GPSR. When a node needs to send a data packet to a destination node, it sends the packet directly if corresponding routing information exists; otherwise it checks whether the destination node is in its adjacency list and forwards the packet according to the corresponding node forwarding strategy until the destination is reached. The invention reduces broadcast flooding and routing overhead, effectively avoids routing voids, selects paths better suited to the current network state, and lowers the packet loss rate.

Owner:DALIAN HAOYANG TECH DEV CO LTD
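The forwarding decision described above can be sketched as follows, assuming each node knows its own grid, its neighbors' grids and positions, and a learned table of grid-to-grid Q values. This is a hypothetical illustration of the described logic, not the patented algorithm: the reward signal, learning rate `alpha`, discount `gamma` and all function names are assumptions.

```python
import math


def update_grid_q(q, grid, next_grid, reward, next_options, alpha=0.5, gamma=0.8):
    """Tabular Q-learning update for forwarding from `grid` to `next_grid`.

    `next_options` lists the grids reachable from `next_grid` (used for the max term).
    """
    best_next = max((q.get((next_grid, g), 0.0) for g in next_options), default=0.0)
    old = q.get((grid, next_grid), 0.0)
    q[(grid, next_grid)] = old + alpha * (reward + gamma * best_next - old)
    return q[(grid, next_grid)]


def choose_next_hop(dest, routing_table, neighbors, grid_of, my_grid, q, positions):
    """Pick the next hop for a packet bound for `dest`.

    routing_table: dest -> next hop (cached on-demand route, AODV-style)
    neighbors: node ids in the adjacency list
    grid_of: node id -> grid id; positions: node id -> (x, y)
    """
    if dest in routing_table:  # known route: send directly
        return routing_table[dest]
    if dest in neighbors:  # destination is one hop away
        return dest
    candidate_grids = sorted({grid_of[n] for n in neighbors})
    if not candidate_grids:
        return None  # no neighbors; the caller would fall back to route discovery
    # Macro step: the neighboring grid with the highest learned Q value.
    best_grid = max(candidate_grids, key=lambda g: q.get((my_grid, g), 0.0))
    in_grid = [n for n in neighbors if grid_of[n] == best_grid]
    # Local step (GPSR-like greedy): the node in that grid closest to the destination.
    return min(in_grid, key=lambda n: math.dist(positions[n], positions[dest]))
```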

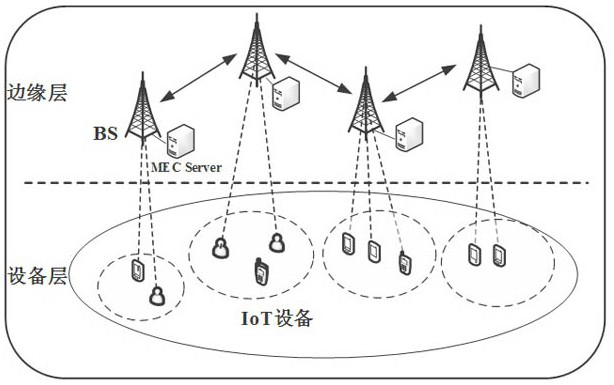

Cooperative offloading and resource allocation method based on multi-agent DRL under MEC architecture

The invention relates to a cooperative offloading and resource allocation method based on multi-agent DRL (deep reinforcement learning) under an MEC architecture. The method comprises the following steps: 1) proposing a collaborative MEC system architecture that accounts for collaboration between edge nodes, so that an overloaded edge node migrates task requests to other, lightly loaded edge nodes for collaborative processing; 2) adopting a partial offloading strategy, in which part of each computing task is offloaded to an edge server for execution and the rest is executed on the local IoT device; 3) modeling the joint optimization of the task offloading decision, the computing resource allocation decision and the communication resource allocation decision as an MDP (Markov decision process), according to the dynamically changing characteristics of task arrival; and 4) applying a multi-agent reinforcement learning method for collaborative task offloading and resource allocation to allocate resources dynamically and maximize the quality of experience of users in the system. The method realizes dynamic management of system resources under the collaborative MEC architecture and reduces the average delay and energy consumption of the system.

Owner:STATE GRID FUJIAN POWER ELECTRIC CO ECONOMIC RES INST +1
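Step 2's partial offloading and step 1's overload migration can be made concrete with a common textbook delay model, which the abstract does not specify and is assumed here: the local share runs at `f_local` cycles/s while the offloaded share is uploaded at `rate` bits/s and executed at `f_edge` cycles/s, with both parts running in parallel. The sketch grid-searches the offload fraction and applies a simple load threshold; it ignores energy and does not implement the multi-agent DRL policy itself. All names and parameters are hypothetical.

```python
def partial_offload_delay(alpha, cycles, data_bits, f_local, f_edge, rate):
    """Completion time when a fraction `alpha` of a task is offloaded (assumed model).

    The local and offloaded parts run in parallel, so the task finishes
    when the slower of the two finishes.
    """
    local = (1 - alpha) * cycles / f_local
    edge = alpha * data_bits / rate + alpha * cycles / f_edge  # upload + remote compute
    return max(local, edge)


def best_split(cycles, data_bits, f_local, f_edge, rate, steps=100):
    """Grid-search the offload fraction; returns (delay, alpha) with the lowest delay."""
    candidates = [i / steps for i in range(steps + 1)]
    return min(
        (partial_offload_delay(a, cycles, data_bits, f_local, f_edge, rate), a)
        for a in candidates
    )


def pick_edge_node(loads, home, threshold):
    """Stay on the home edge node unless it is overloaded; otherwise migrate to the least-loaded node."""
    if loads[home] <= threshold:
        return home
    return min(loads, key=loads.get)
```

In the patented method these decisions are learned jointly by the agents; the grid search here only shows why a partial split can beat both all-local and full offloading.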

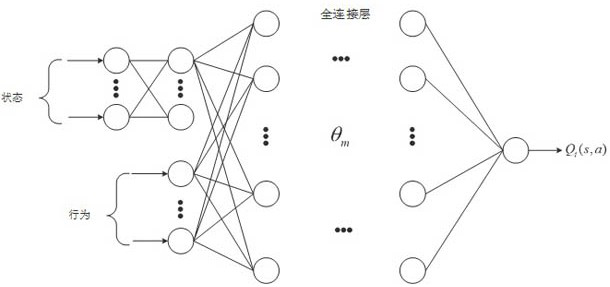

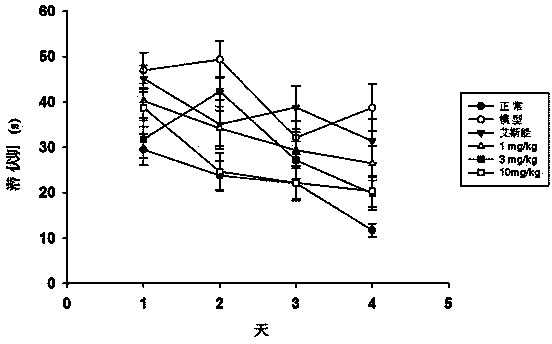

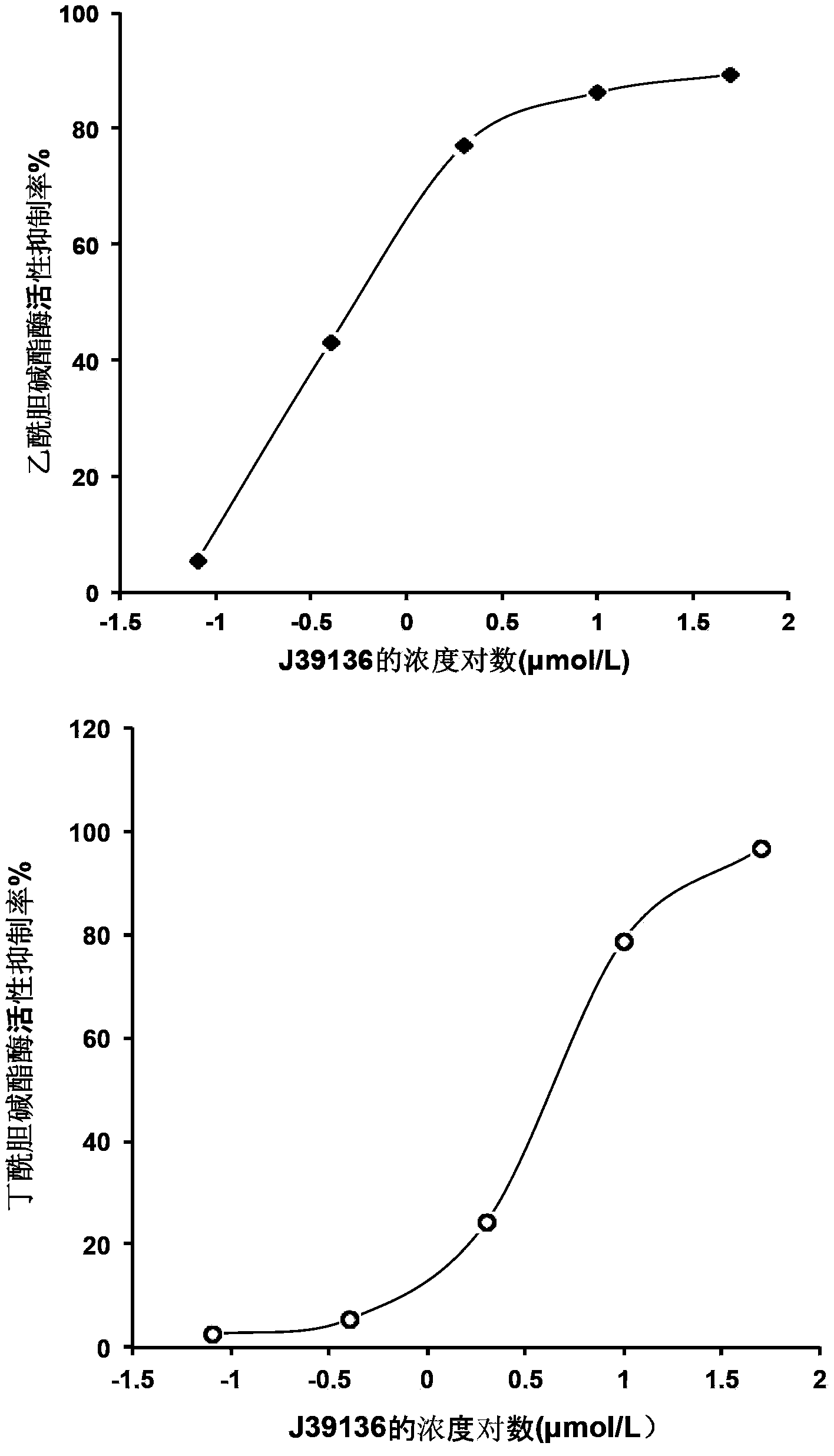

Multi-target effects of an isoflavone derivative and its application in improving learning and memory

Active · CN104095849A · Regulating neuroinflammation, Improve learning and memory function, Organic active ingredients, Nervous disorder, Damage repair, Disease injury

The invention discloses the multi-target effects of an isoflavone derivative and its application in improving learning and memory. Specifically, the invention discloses that compound J39136: upregulates expression of SIRT1 protein, which has damage-repair and neuroprotective effects; inhibits the activity of acetylcholinesterase and butyrylcholinesterase, dose-dependently inhibiting cellular acetylcholinesterase activity at safe doses; protects nerve cells, inhibits abnormal expression of β-APP, and reduces Aβ1-42 secretion; and regulates neuroinflammation. Animal experiments show that J39136 has low toxicity, can penetrate the blood-brain barrier, significantly ameliorates scopolamine-induced dementia in mice, and strengthens learning and memory. Preclinical results suggest that J39136 may become a drug for the prevention and/or treatment of learning and memory disorders and Alzheimer's disease.

Owner:INST OF MATERIA MEDICA AN INST OF THE CHINESE ACAD OF MEDICAL SCI

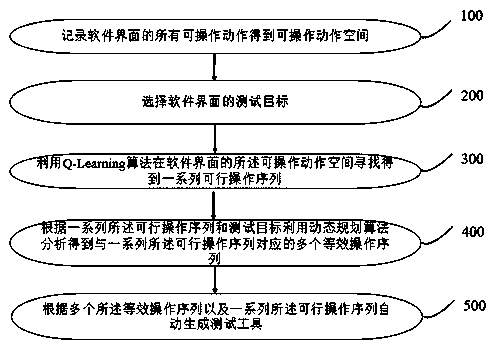

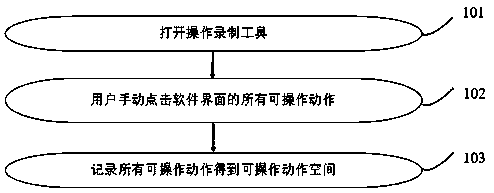

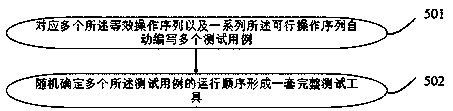

Interface test case automatic generation method and tool

Pending · CN111352826A · Reduce heavy lifting, Increase coverage, Software testing/debugging, Code generation, Test script

The invention provides a method and tool for automatically generating interface test cases. The method records the operable action space of a software interface and explores it with a reinforcement learning algorithm to obtain a state space and a series of feasible operation sequences. Then, according to the state space and the test target, a dynamic programming algorithm analyzes the sequences to obtain one or more operation sequences that are optimal or closest to how a real person would operate the interface; finally, code is automatically written from these operation sequences to generate the test tool. By combining the Q-learning reinforcement learning algorithm with a dynamic programming algorithm, the operable actions of a software interface are learned autonomously and a complete test tool is generated automatically. This reduces the heavy work of testers writing test tools for interface testing, reduces how often test scripts must be rewritten after a program or interface changes, and greatly increases the coverage of the test suite.

Owner:SHANGHAI YUNKUO INFORMATION TECHNOLOGY CO LTD (上海云扩信息科技有限公司)

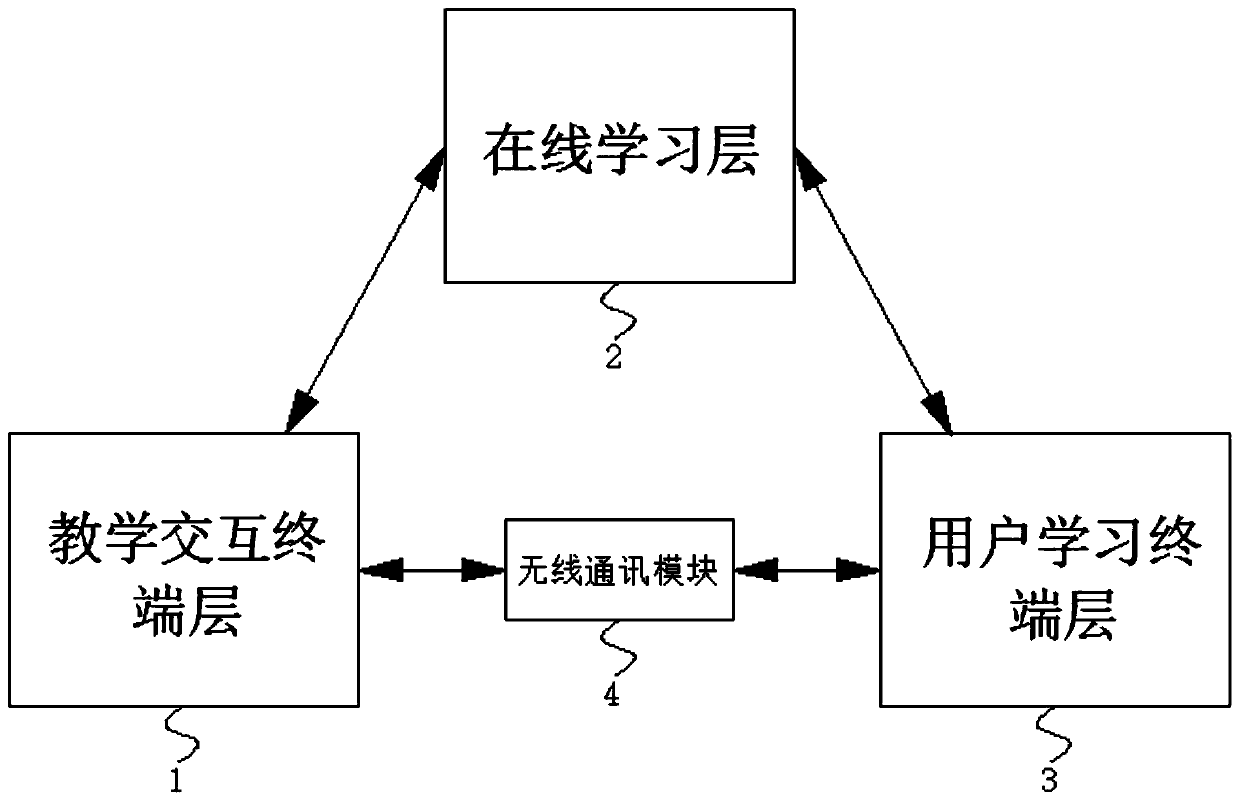

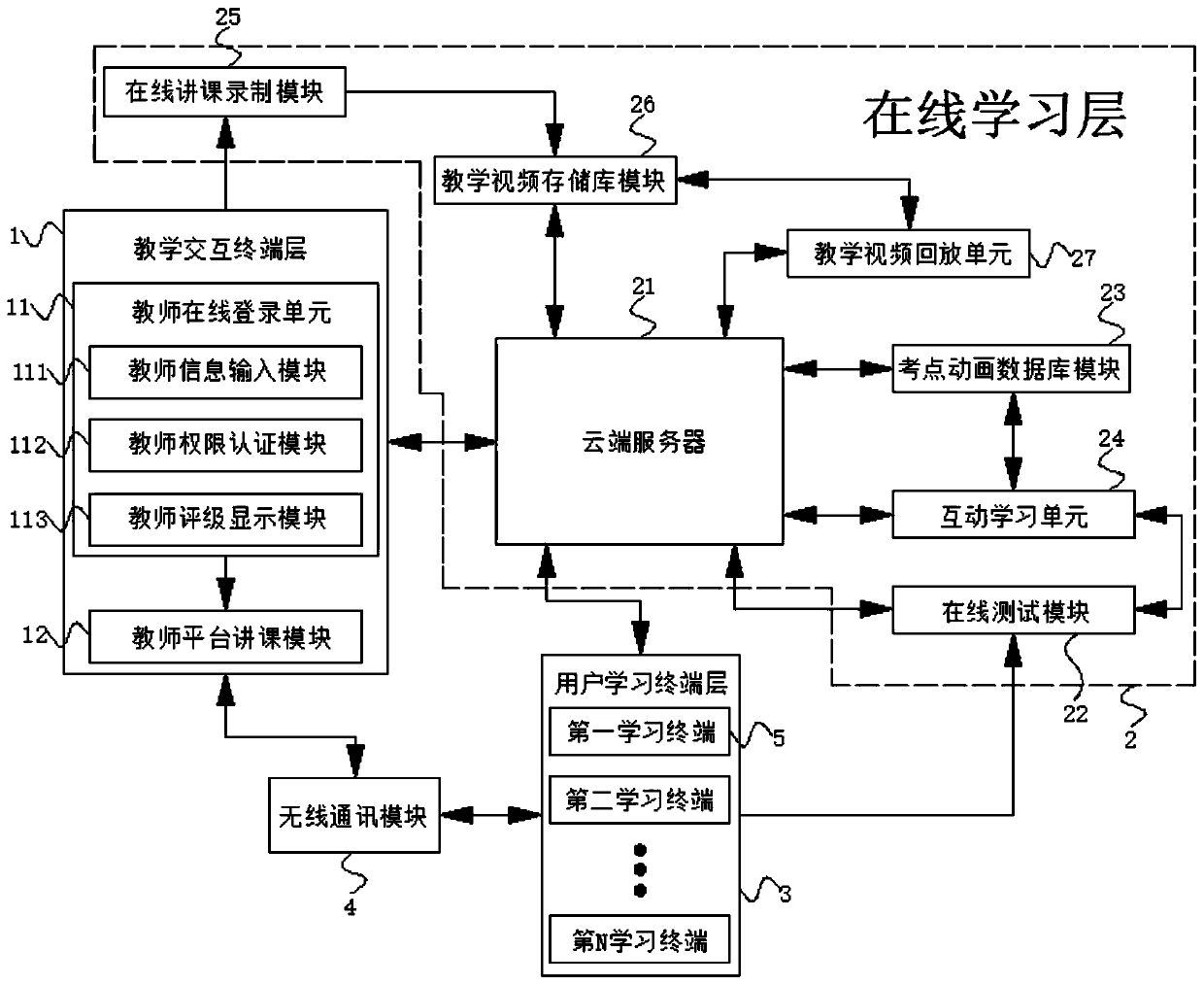

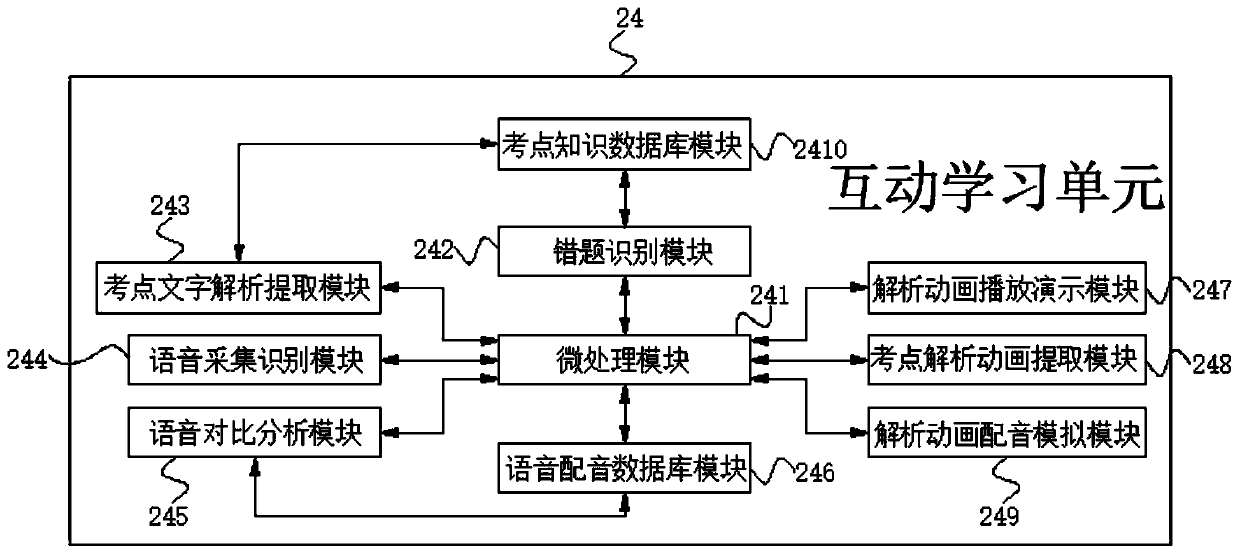

Online video learning system

Inactive · CN110379231A · Achieve two-way connection, Increase interest in learning, Electrical appliances, Animation, Online learning

The invention discloses an online video learning system comprising a teaching interaction terminal layer, an online learning layer and a user learning terminal layer. The online learning layer has a two-way connection with both the teaching interaction terminal layer and the user learning terminal layer, and the teaching interaction terminal layer has a wireless two-way connection with a communication module and with the user learning terminal layer. The invention relates to the field of online teaching technology. With this system, learners can learn while being entertained through animation dubbing, which increases their interest in learning and, in turn, improves learning quality. Online learning becomes convenient, efficient and more engaging, and learner fatigue during long study sessions is avoided, helping to ensure learning quality.

Owner:HUBEI JINBAIHUI CULTURE COMMUNICATION CO LTD (湖北金百汇文化传播股份有限公司)

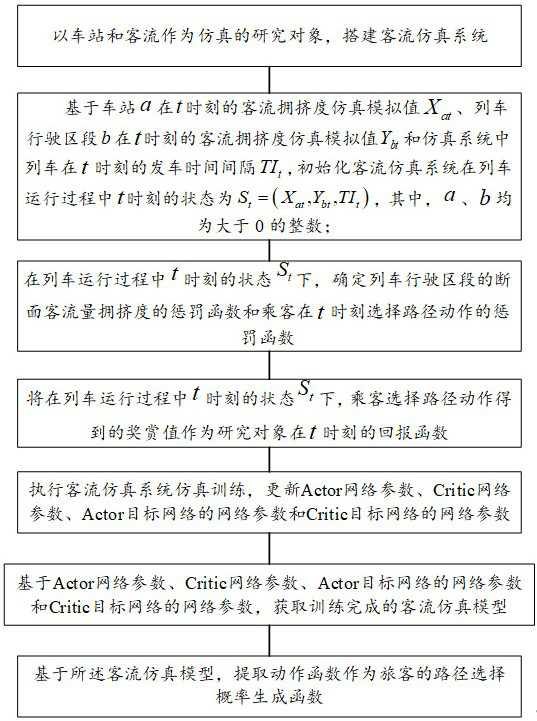

Rail transit automatic simulation modeling method and device based on reinforcement learning

Active · CN111737826A · Reduce operational index variance, Accurate description, Geometric CAD, Design optimisation/simulation, Real systems, Simulation training

The invention discloses a reinforcement-learning-based method and device for automatic simulation modeling of rail transit. The method comprises: building a passenger flow simulation system with passenger flow as the object of simulation; initializing the state of the passenger flow simulation system at time t and running the simulation to obtain a penalty function for the section passenger-flow congestion degree of trains in the running section and a penalty function for the passengers' path-selection actions at time t; taking the reward value obtained by passengers' path-selection actions as the return function of the research object at time t; running simulation training of the passenger flow simulation system and updating the related network parameters to obtain a trained passenger flow simulation model; and finally, extracting the action function as the generating function for passenger path-selection probabilities. The simulation system is built from known operating logic and parameters, and its unknown parameter values are obtained automatically, so the resulting simulation model can accurately describe the real system.

Owner:CRSC RESEARCH & DESIGN INSTITUTE GROUP CO LTD
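The training loop described above can be illustrated with a small policy-gradient (REINFORCE) sketch in which the learned action function is a softmax over candidate paths. The congestion penalty `load / capacity`, the travel-time penalty, the function names and all parameter values are assumptions; the abstract does not give the actual penalty functions or network architecture.

```python
import math
import random


def softmax(prefs):
    """Numerically stable softmax over path preferences."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def train_path_choice(travel_time, capacity, passengers=60, steps=500, lr=0.05, seed=0):
    """Learn path-selection probabilities with REINFORCE (hypothetical penalty model).

    Each step, every simulated passenger samples a path; its reward is the negative
    of the travel-time penalty plus a congestion penalty load / capacity.
    """
    rng = random.Random(seed)
    n_paths = len(travel_time)
    prefs = [0.0] * n_paths
    for _ in range(steps):
        probs = softmax(prefs)
        choices = [rng.choices(range(n_paths), weights=probs)[0] for _ in range(passengers)]
        load = [choices.count(k) for k in range(n_paths)]
        rewards = [-(travel_time[c] + load[c] / capacity[c]) for c in choices]
        baseline = sum(rewards) / len(rewards)  # variance-reducing baseline
        grad = [0.0] * n_paths
        for c, r in zip(choices, rewards):
            advantage = r - baseline
            for k in range(n_paths):
                # Gradient of log softmax: one-hot(c) - probs.
                grad[k] += advantage * ((1.0 if k == c else 0.0) - probs[k])
        prefs = [p + lr * g / passengers for p, g in zip(prefs, grad)]
    return softmax(prefs)
```

With two paths of equal travel time where path 0 has twice the capacity, the learned probabilities tend to drift toward the split that equalizes the expected penalties, so path 0 ends up the more likely choice.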


© 2025 PatSnap. All rights reserved.