Target tracking method and system based on eye movement tracking and storage medium

An eye-tracking and target-tracking technology, applied in the field of vision, that addresses problems such as lack of targeting, high power consumption, and the inability to clearly determine the gaze point, thereby improving user comfort and expanding the market.


Examples

Embodiment 1

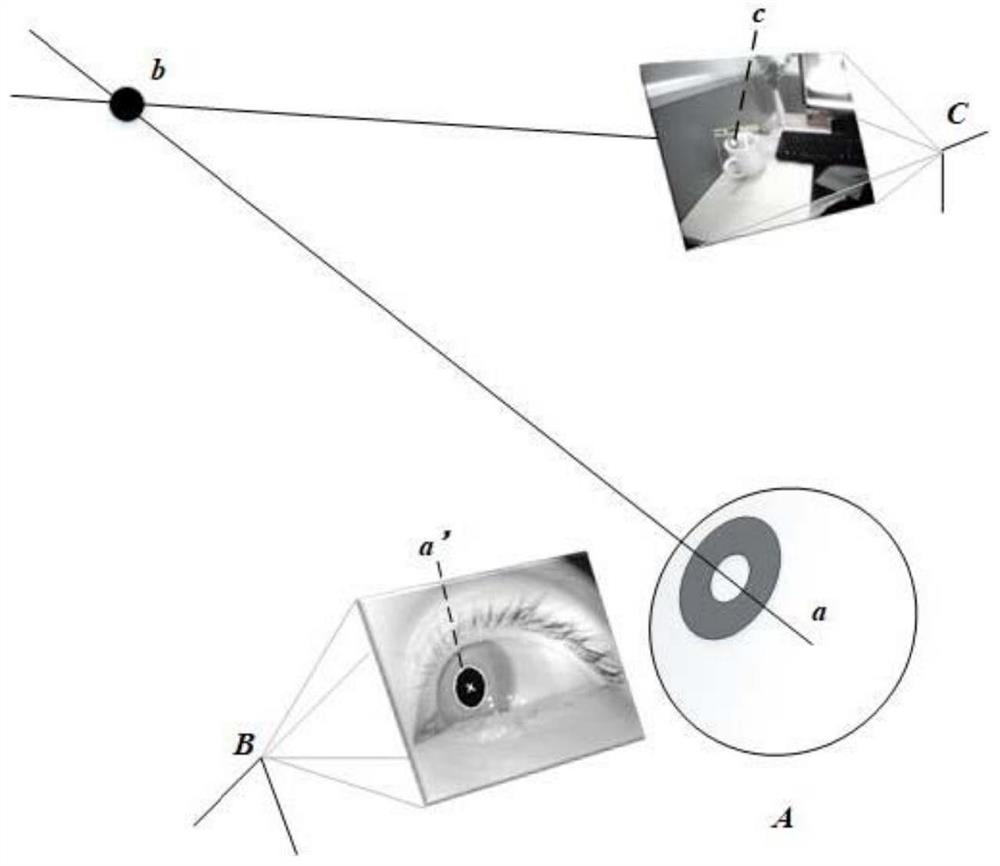

[0057] The steps of the target tracking method of this embodiment are as follows:

[0058] Step 1: capture in real time the eye-region video stream $I_t$ with the infrared camera module, and the foreground video stream $G_t$ (the scene visible to the eye) with the wide-angle camera module;

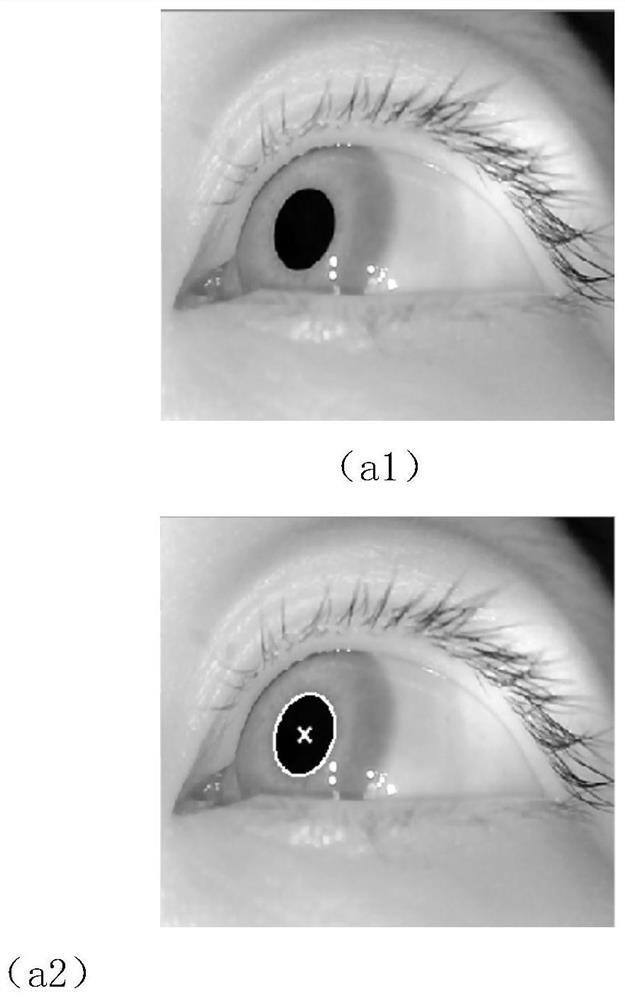

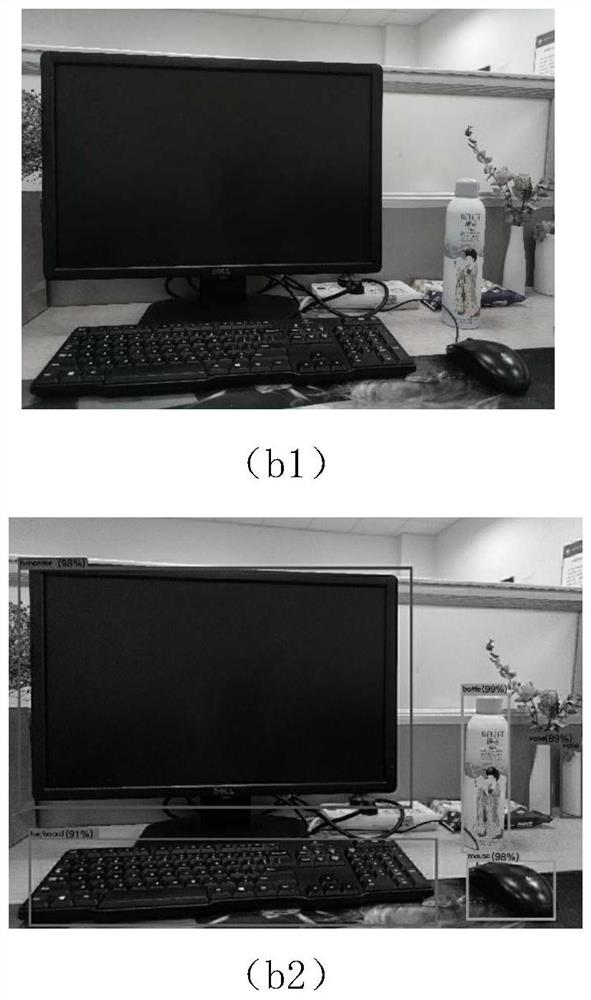

[0059] In this embodiment, $\alpha = 1280/640 = 2$, $\omega = 720/360 = 2$, $pr^{\circ} = 5^{\circ}$, $Dis = 1000$ pixels, and $\beta = 0.3$. Figure 1 (a1) shows one frame of the eye image from $I_t$; at the same time, the wide-angle camera collects the foreground video stream $G_t$ visible to the eye, and Figure 2 (b1) shows the foreground frame of $G_t$ with the same sequence index as (a1);

[0060] Step 2: using the method disclosed in Wang Peng, Chen Yuanyuan, Shao Minglei, et al., "Smart Home Controller Based on Eye Tracking," Journal of Electrical Machinery and Control, 2020, v.24, No.187(05): 155-164, perform pupil center detection on each frame of the eye-region video stream $I_t$ to obtain...
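The cited pupil detection method is not reproduced here. As a rough stand-in only, the sketch below uses a common dark-pupil heuristic for infrared eye images: pixels darker than an intensity threshold are treated as pupil, and their centroid is taken as the pupil center. The function name `pupil_center` and the default threshold of 50 (for 8-bit images) are hypothetical choices, not values from the source.

```python
import numpy as np

def pupil_center(gray: np.ndarray, threshold: int = 50):
    """Estimate the pupil center (x, y) of an 8-bit IR eye image.

    Hypothetical stand-in, NOT the cited method: pixels darker than
    `threshold` are assumed to belong to the (dark) pupil, and their
    centroid is returned. Returns None if no pixel is dark enough.
    """
    ys, xs = np.nonzero(gray < threshold)  # row/column indices of dark pixels
    if xs.size == 0:
        return None
    return float(xs.mean()), float(ys.mean())
```

A practical detector would also need to reject eyelashes, shadows, and corneal glints (for example by keeping only the largest dark connected region), which this sketch does not attempt.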

Embodiment 2

[0065] This embodiment differs from Embodiment 1 in that the pupil center detection in Step 2 is performed as follows:

[0066] Taking the eye-region video stream $I_t$ as input, the pyramidal Lucas-Kanade (LK) optical flow method (Bouguet J Y. Pyramidal implementation of the Lucas Kanade feature tracker. OpenCV Documents, 1999.) is used to estimate the motion state of the eye. Frames in the blink state and frames in the nystagmus state are removed from $I_t$, and the stream with those frames removed is output as $I_t'$. During nystagmus the optical flow vector is very small; in this embodiment, a frame is classified as nystagmus when the optical flow between adjacent frames is less than 100 pixels. During a blink the optical flow vector is particularly large; in this embodiment, a frame is classified as a blink when the optical flow between adjacent frames is greater than 6000 pixels;
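The frame-filtering rule above can be sketched as follows, under the assumption (not stated in the source) that the "amount of optical flow" between adjacent frames is the sum of the flow-vector magnitudes over all tracked points. In OpenCV, such point pairs would typically come from `cv2.calcOpticalFlowPyrLK`, which returns the tracked next points together with status and error arrays; here the flow amounts are passed in directly so the sketch has no OpenCV dependency. The function names are illustrative only.

```python
import numpy as np

NYSTAGMUS_MAX = 100.0  # total flow below this (pixels): nystagmus frame
BLINK_MIN = 6000.0     # total flow above this (pixels): blink frame

def flow_amount(prev_pts, next_pts) -> float:
    """Sum of optical-flow vector magnitudes between two frames (assumed metric)."""
    d = np.asarray(next_pts, dtype=float) - np.asarray(prev_pts, dtype=float)
    return float(np.linalg.norm(d, axis=1).sum())

def classify(amount: float, low: float = NYSTAGMUS_MAX, high: float = BLINK_MIN) -> str:
    """Label a frame by the flow amount relative to its predecessor."""
    if amount < low:
        return "nystagmus"
    if amount > high:
        return "blink"
    return "valid"

def filter_frames(frames, amounts):
    """Drop blink and nystagmus frames.

    amounts[i - 1] is the flow amount between frames[i - 1] and frames[i];
    the first frame has no predecessor and is kept.
    """
    if not frames:
        return []
    if len(amounts) != len(frames) - 1:
        raise ValueError("need exactly one flow amount per adjacent frame pair")
    kept = [frames[0]]
    for frame, amount in zip(frames[1:], amounts):
        if classify(amount) == "valid":
            kept.append(frame)
    return kept
```

Because a summed magnitude grows with the number of tracked points, the 100 and 6000 pixel thresholds only make sense for a fixed tracking setup; a per-point mean would be an alternative if the point count varies.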

[0067] Step 2.1.2: remove the video str...

Embodiment 3

[0069] This embodiment differs from Embodiment 2 in that Step 3 is performed as follows:

[0070] A perceptual hashing algorithm is applied to the foreground video stream $G_t$ to generate a "fingerprint" string for each frame. The fingerprint strings of adjacent frames are compared to judge their similarity: if the similarity exceeds 98%, the target detection result of the previous frame is reused directly; otherwise, object detection is performed. The object detection method in this embodiment is YOLOv4; for details, see the method disclosed in Bochkovskiy A, Wang C Y, Liao H. YOLOv4: Optimal Speed and Accuracy of Object Detection [J]. 2020.
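The source does not say which perceptual hash variant is used. The sketch below assumes the simple average-hash (aHash) variant: each grayscale frame is block-averaged down to 8×8 cells, and each cell becomes a "1" if it is brighter than the mean of all cells, giving a 64-character fingerprint. Similarity is taken as the fraction of matching bits. The detector is a placeholder callable standing in for YOLOv4; all function names are hypothetical.

```python
import numpy as np

def average_hash(gray: np.ndarray, size: int = 8) -> str:
    """Return a size*size-bit '0'/'1' fingerprint of a grayscale frame (aHash)."""
    h, w = gray.shape
    rows = np.array_split(np.arange(h), size)  # row indices of each block
    cols = np.array_split(np.arange(w), size)  # column indices of each block
    small = np.array([[gray[np.ix_(r, c)].mean() for c in cols] for r in rows])
    bits = small > small.mean()
    return "".join("1" if b else "0" for b in bits.ravel())

def similarity(fp_a: str, fp_b: str) -> float:
    """Fraction of matching bits, i.e. 1 - normalized Hamming distance."""
    same = sum(a == b for a, b in zip(fp_a, fp_b))
    return same / len(fp_a)

def detect_with_reuse(frames, detect, threshold: float = 0.98):
    """Run `detect` only when a frame differs enough from its predecessor.

    `detect` is a placeholder for the real detector (YOLOv4 in the source).
    Returns the per-frame results and the number of detector calls.
    """
    results, calls, prev_fp = [], 0, None
    for frame in frames:
        fp = average_hash(frame)
        if prev_fp is not None and similarity(fp, prev_fp) > threshold:
            results.append(results[-1])  # reuse the previous frame's result
        else:
            results.append(detect(frame))
            calls += 1
        prev_fp = fp
    return results, calls
```

With a 64-bit fingerprint, a similarity above 98% permits at most one differing bit (63/64 ≈ 98.4%, while 62/64 ≈ 96.9%), so under this assumption the threshold effectively means "identical or one bit apart".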

[0071] The real-time performance of the schemes of Embodiments 1-3 is analyzed by frame rate (generally, when the frame rate reaches 15 frames/second, motion appears continuous to the human eye and the scheme is considered essentially real-time; the higher the frame rate,...
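The 15 frames/second criterion can be checked with a simple throughput measurement. The sketch below times a per-frame processing callable with `time.perf_counter` and compares the resulting frame rate with the 15 fps threshold; the function names and the idea of timing the whole pipeline this way are assumptions for illustration, not the source's measurement procedure.

```python
import time

REAL_TIME_FPS = 15.0  # threshold from the text: 15 frames/second

def frame_rate(num_frames: int, elapsed_s: float) -> float:
    """Frames processed per second."""
    if elapsed_s <= 0:
        return float("inf")  # too fast to measure with this timer
    return num_frames / elapsed_s

def measure_fps(process, frames) -> float:
    """Time `process` over all frames and return the achieved frame rate."""
    frames = list(frames)
    start = time.perf_counter()
    for f in frames:
        process(f)
    return frame_rate(len(frames), time.perf_counter() - start)

def is_real_time(fps: float, threshold: float = REAL_TIME_FPS) -> bool:
    """True if the frame rate meets the basic real-time criterion."""
    return fps >= threshold
```

For example, a pipeline that processes 300 frames in 20 seconds runs at exactly 15 fps and just meets the criterion.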
