Application deployment method and device based on multi-inference engine system, and equipment

An application deployment technology based on inference engines, applied in the field of deep learning, that addresses problems such as low application deployment efficiency.



Examples

Embodiment 1

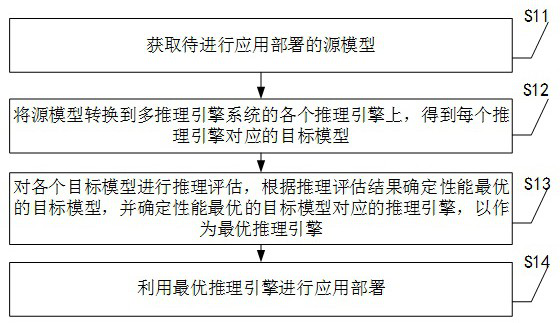

[0053] Embodiment 1 of the application deployment method based on the multi-inference engine system provided by this application is introduced below. Referring to Figure 1, Embodiment 1 includes:

[0054] S11. Obtain a source model for application deployment.

[0055] The source model is a trained deep learning model that needs to be deployed in a real application. Specifically, the file path of the source model is provided as input, and the source model is read from that path. To ensure reliability, the reading process checks whether the path is correct and whether the file is readable.
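The path and readability checks described in S11 can be sketched as follows. This is a minimal illustration, not the patent's implementation: the function name `read_source_model` and the choice to return raw bytes are assumptions made for the example.

```python
import os
from pathlib import Path


def read_source_model(model_path: str) -> bytes:
    """Read a source model file after validating its path (hypothetical helper).

    Raises FileNotFoundError if the path does not point to a file, and
    PermissionError if the file exists but cannot be read.
    """
    path = Path(model_path)
    # Check 1: the path must exist and refer to a regular file.
    if not path.is_file():
        raise FileNotFoundError(f"Source model path is not a file: {model_path}")
    # Check 2: the current process must have read permission.
    if not os.access(path, os.R_OK):
        raise PermissionError(f"Source model file is not readable: {model_path}")
    return path.read_bytes()
```

Failing early with a specific exception lets the caller distinguish a wrong path from an unreadable file before any conversion work starts.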

[0056] S12. Convert the source model for each inference engine of the multi-inference engine system, obtaining a target model corresponding to each inference engine.

[0057] In this embodiment, the inference engine is used to perform optimization, conversion, and inference evaluation of the source model. Specifically, before converting the source model to the infer...

Embodiment 2

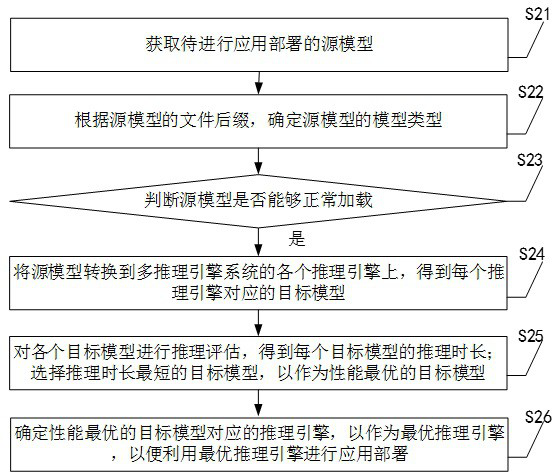

[0064] Embodiment 2 of the application deployment method based on the multi-inference engine system provided by this application is described in detail below. Referring to Figure 2, Embodiment 2 specifically includes the following steps:

[0065] S21. Obtain a source model for application deployment;

[0066] S22. Determine the model type of the source model according to the file suffix of the source model;

[0067] S23. Call the loading method for the model type to load the source model and determine whether it can be loaded normally; if so, go to S24; otherwise, report a model error;

[0068] S24. Convert the source model for each inference engine of the multi-inference engine system, obtaining a target model corresponding to each inference engine;

[0069] S25. Perform inference evaluation on each target model to obtain the inference time of each target model; select the target model with the shortest inference time as the targ...
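Steps S22 to S25 above can be sketched roughly as below. Everything named here is an assumption for illustration: the suffix table `SUFFIX_TO_TYPE`, the `loaders` and `converters` dictionaries, and the convention that a converted target model is a plain callable. The source does not specify which suffixes, engines, or timing procedure are used.

```python
import time
from pathlib import Path
from typing import Any, Callable, Dict, Tuple

# Hypothetical suffix-to-type table; the source does not enumerate suffixes.
SUFFIX_TO_TYPE = {".onnx": "onnx", ".pb": "tensorflow", ".pt": "pytorch"}


def model_type_from_suffix(model_path: str) -> str:
    """S22: infer the model type from the file suffix."""
    suffix = Path(model_path).suffix.lower()
    if suffix not in SUFFIX_TO_TYPE:
        raise ValueError(f"Unsupported model suffix: {suffix!r}")
    return SUFFIX_TO_TYPE[suffix]


def load_or_report(model_path: str, loaders: Dict[str, Callable[[str], Any]]) -> Any:
    """S23: try the loader for the detected type; report a model error on failure."""
    model_type = model_type_from_suffix(model_path)
    try:
        return loaders[model_type](model_path)
    except Exception as exc:
        raise RuntimeError(
            f"Model error: cannot load {model_path} as {model_type}"
        ) from exc


def select_fastest_target(
    source_model: Any,
    converters: Dict[str, Callable[[Any], Callable[[Any], Any]]],
    sample_input: Any,
    runs: int = 5,
) -> Tuple[str, Callable[[Any], Any], Dict[str, float]]:
    """S24 and S25: convert per engine, time each target, keep the fastest."""
    targets: Dict[str, Callable[[Any], Any]] = {}
    timings: Dict[str, float] = {}
    for engine_name, convert in converters.items():
        target = convert(source_model)      # S24: one target model per engine
        target(sample_input)                # warm-up run, excluded from timing
        start = time.perf_counter()
        for _ in range(runs):
            target(sample_input)
        timings[engine_name] = (time.perf_counter() - start) / runs
        targets[engine_name] = target
    best = min(timings, key=timings.get)    # S25: shortest mean inference time
    return best, targets[best], timings
```

Averaging over several runs after a warm-up call is a design choice of this sketch, made to reduce the effect of one-off initialization costs on the comparison.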
