Lip language recognition method and system based on cross-modal attention enhancement

A recognition method using attention technology, applied in the field of computer vision and pattern recognition. It addresses problems such as changes in the lip region of the image affecting visual feature extraction, and achieves the effect of improving recognition accuracy.


Embodiment Construction

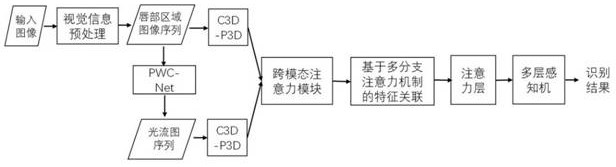

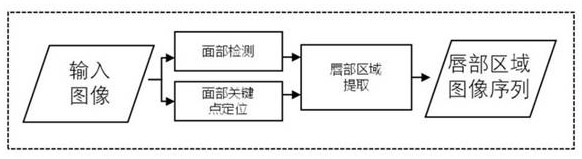

[0054] As shown in Figure 1, the lip recognition method based on cross-modal attention enhancement in this embodiment includes:

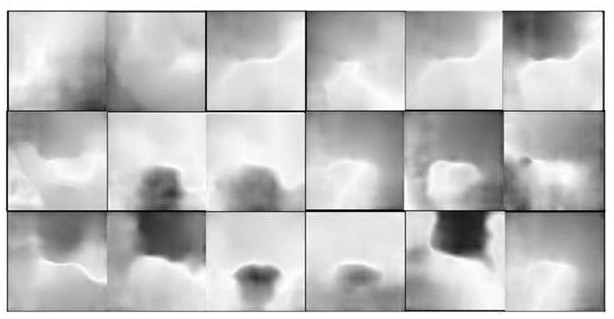

[0055] 1) Extract the lip region image sequence Va from the input images containing key points of the speaker's face, and extract the optical flow map sequence Vo from the lip region image sequence Va; input the lip region image sequence Va and the optical flow map sequence Vo separately into the pre-trained feature extractor to obtain the lip feature sequence Hv and the inter-lip motion feature sequence Ho; perform position encoding on the lip feature sequence Hv and the inter-lip motion feature sequence Ho separately to obtain the lip feature sequence Hvp and the inter-lip motion feature sequence Hop with position information introduced, the two together forming the feature sequences with position information X ∈ {Hvp, Hop};

[0056] 2) The feature sequence that will be introduced into the position infor...
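The position encoding in step 1) can be illustrated with a minimal sketch. The text does not say which position encoding is used, so the sinusoidal (Transformer-style) scheme below is an assumption, as are the function names, the additive combination, and the toy values standing in for the feature extractor outputs Hv and Ho.

```python
import math


def sinusoidal_position_encoding(seq_len, dim):
    """Return a seq_len x dim table of sinusoidal position encodings.

    Assumption: the source does not specify the encoding; this is the
    common Transformer-style scheme, used here only for illustration.
    """
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(dim):
            # Paired dimensions (2k, 2k+1) share one frequency.
            angle = pos / (10000 ** ((2 * (i // 2)) / dim))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


def add_position_info(features):
    """Add position encodings element-wise to a T x D feature sequence."""
    seq_len, dim = len(features), len(features[0])
    pe = sinusoidal_position_encoding(seq_len, dim)
    return [
        [f + p for f, p in zip(feat_row, pe_row)]
        for feat_row, pe_row in zip(features, pe)
    ]


# Hypothetical stand-ins for the extractor outputs (T = 3 frames, D = 4).
Hv = [[0.0] * 4 for _ in range(3)]  # lip feature sequence
Ho = [[1.0] * 4 for _ in range(3)]  # inter-lip motion feature sequence

# Each modality is position-encoded separately, as described in step 1).
Hvp = add_position_info(Hv)
Hop = add_position_info(Ho)
X = {"Hvp": Hvp, "Hop": Hop}
```

Both sequences are encoded independently with the same table, so frames at the same time index in Hvp and Hop carry the same position signal.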
