Voiceprint recognition method based on variational information bottleneck and system thereof

A voiceprint recognition method and system based on a variational information bottleneck, applied in the field of voiceprint recognition. The invention addresses the problem of low voiceprint recognition accuracy; its stated effects are improved recognition accuracy, reduced feature redundancy, and improved robustness.

Examples

Embodiment 1

[0048] An embodiment of the present invention provides a voiceprint recognition method based on variational information bottleneck, including:

[0049] S1: Obtain original voice data;

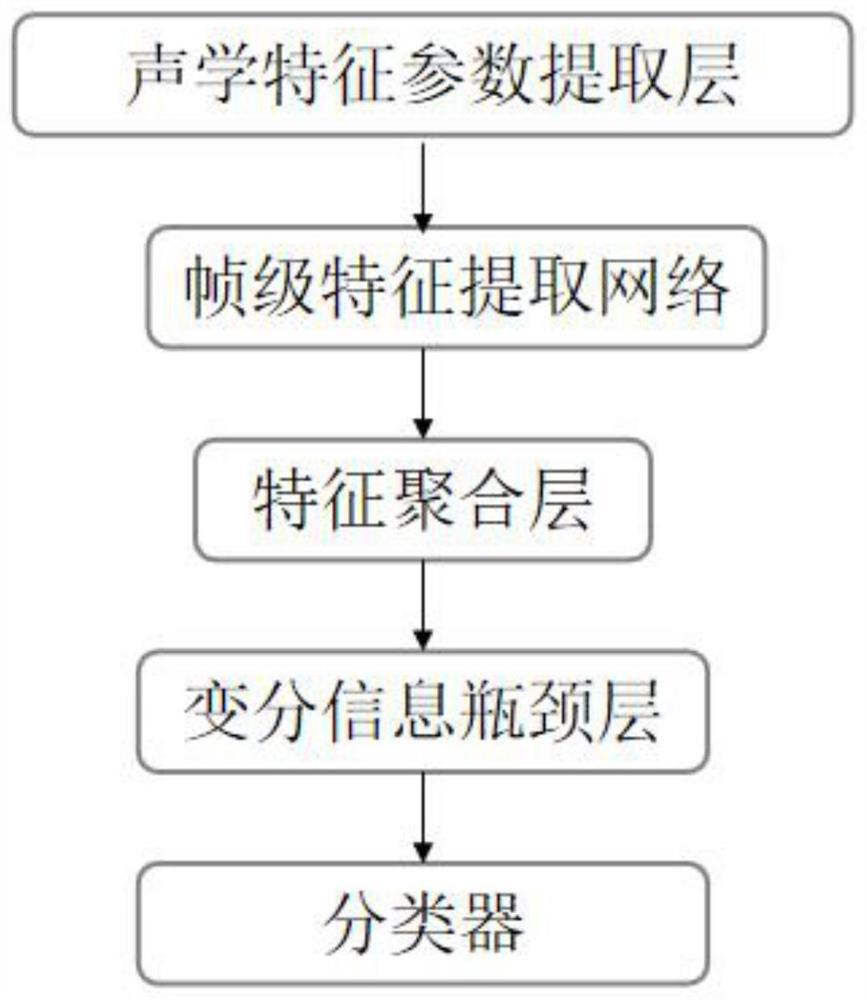

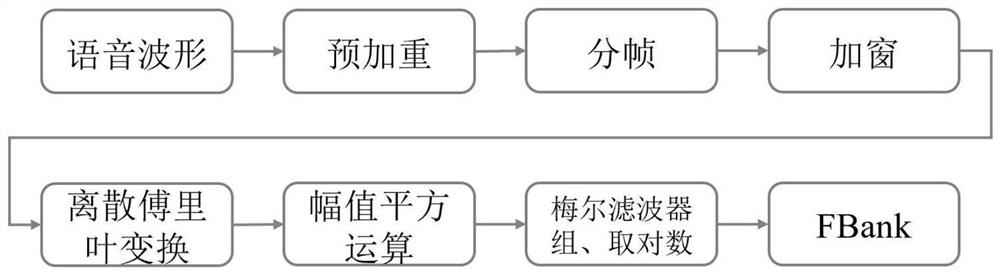

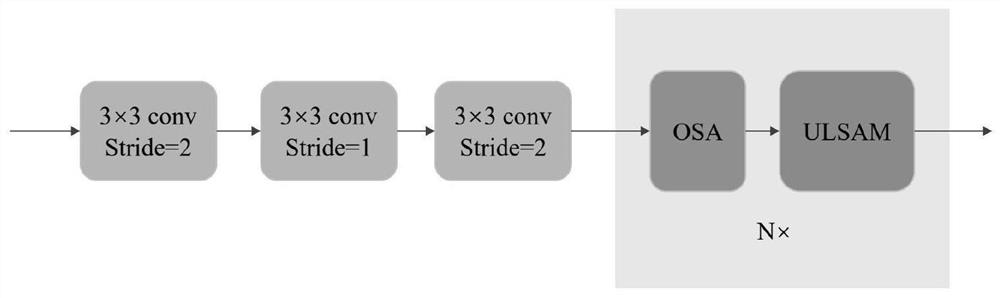

[0050] S2: Build a voiceprint recognition model that introduces a variational information bottleneck. The voiceprint recognition model includes an acoustic feature parameter extraction layer, a frame-level feature extraction network, a feature aggregation layer, a variational information bottleneck layer, and a classifier. The acoustic feature parameter extraction layer converts the input original speech waveform into the acoustic feature parameter FBank; the frame-level feature extraction network extracts multi-scale and multi-frequency frame-level speaker information from the acoustic feature parameter FBank by one-time aggregation to obtain frame-level feature vectors; the feature aggregation layer is used to convert frame-level feature vectors into low-dimensiona...
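The excerpt names a variational information bottleneck layer but is cut off before describing it. As an illustration only, the following is a minimal sketch of the commonly used formulation of such a layer, not the patent's own definition: the layer receives a mean and a log-variance per latent dimension, samples a latent vector with the reparameterization trick, and adds a KL divergence penalty against a standard normal prior to the classification loss. The function names `vib_layer` and `vib_loss`, the weight `beta`, and the standard normal prior are all assumptions introduced for this sketch.

```python
import math
import random


def vib_layer(mu, log_var, rng=None, sample=True):
    """Hypothetical bottleneck layer (assumed formulation, not from the patent).

    mu, log_var: per-dimension mean and log-variance of the latent Gaussian.
    Returns (z, kl), where z is the sampled latent vector and kl is the
    KL divergence from N(mu, diag(exp(log_var))) to the prior N(0, I).
    """
    rng = rng if rng is not None else random
    z = []
    kl = 0.0
    for m, lv in zip(mu, log_var):
        sigma = math.exp(0.5 * lv)
        # Reparameterization: z = mu + sigma * eps, with eps ~ N(0, 1).
        # With sample=False the mean is returned, as is usual at test time.
        eps = rng.gauss(0.0, 1.0) if sample else 0.0
        z.append(m + sigma * eps)
        # Closed-form KL term for one dimension of a diagonal Gaussian.
        kl += 0.5 * (m * m + math.exp(lv) - lv - 1.0)
    return z, kl


def vib_loss(cross_entropy, kl, beta=1e-3):
    """Classification loss plus beta-weighted KL penalty (beta is assumed)."""
    return cross_entropy + beta * kl
```

Under these assumptions, a larger `beta` compresses the embedding more strongly, which is one way such a layer can reduce feature redundancy. At inference time the mean is typically used as the speaker embedding (`sample=False`).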

Embodiment 2

[0118] Based on the same inventive concept, this embodiment provides a voiceprint recognition system based on variational information bottleneck, including:

[0119] The data acquisition module is used to obtain the original voice data;

[0120] The model construction module is used to construct a voiceprint recognition model that introduces a variational information bottleneck. The voiceprint recognition model includes an acoustic feature parameter extraction layer, a frame-level feature extraction network, a feature aggregation layer, a variational information bottleneck layer, and a classifier. The acoustic feature parameter extraction layer converts the input original speech waveform into the acoustic feature parameter FBank; the frame-level feature extraction network extracts multi-scale and multi-frequency frame-level speaker information from the acoustic feature parameter FBank to obtain frame-level feature vectors; the featur...
