Data reconstruction method based on auto-encoder

An auto-encoder-based data reconstruction technique in the field of data reconstruction. It addresses the difficulty auto-encoders have in achieving lossless data reconstruction and improves the quality of the reconstructed data.

Examples

Embodiment 1

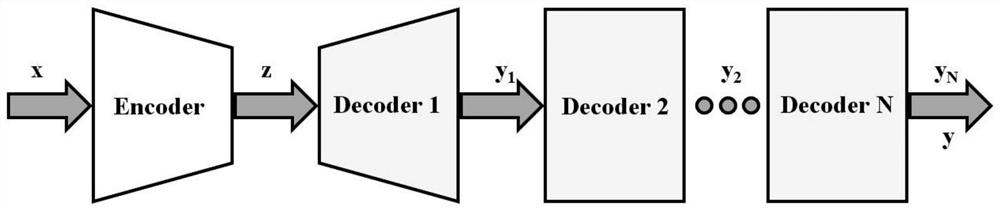

[0017] A data reconstruction method based on an autoencoder. The autoencoder includes an encoding unit and cascaded decoding units. The sender uses the encoding unit to encode the original data, and the receiver uses the cascaded decoding units to decode the data and thereby reconstruct it.

[0018] The autoencoder based on cascaded decoding units (Cascade-Decoders) comprises an encoding unit (Encoder) and decoding unit 1 (Decoder 1), decoding unit 2 (Decoder 2), ..., decoding unit N (Decoder N). The N decoding units are cascaded in the autoencoder.
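The sender/receiver split and the cascade of N decoding units can be sketched as follows. This is a minimal Python sketch under stated assumptions, not the patented implementation: each unit is modelled as a plain callable on a list of floats, and, as the word "cascaded" suggests, the first decoding unit is assumed to consume the latent code $z$ while every later unit consumes the previous unit's output. The class name `CascadeAutoencoder` and the toy units are hypothetical.

```python
from typing import Callable, List, Sequence

Vector = List[float]
Unit = Callable[[Vector], Vector]  # hypothetical: a unit maps one vector to another


class CascadeAutoencoder:
    """Hypothetical container: one encoding unit E and N cascaded decoding units."""

    def __init__(self, encoder: Unit, decoders: Sequence[Unit]) -> None:
        if not decoders:
            raise ValueError("at least one decoding unit is required")
        self.encoder = encoder
        self.decoders = list(decoders)

    def encode(self, x: Vector) -> Vector:
        # Sender side: compress the original data x into the latent code z = E(x).
        return self.encoder(x)

    def decode(self, z: Vector) -> List[Vector]:
        # Receiver side (assumed cascade rule): D_1 takes z, each later D_n takes
        # the previous unit's output; the outputs of all levels are kept.
        outputs: List[Vector] = []
        y = z
        for decoder in self.decoders:
            y = decoder(y)
            outputs.append(y)
        return outputs


# Toy units (hypothetical): E averages neighbouring pairs, D_1 upsamples by
# repetition, D_2 is the identity.
model = CascadeAutoencoder(
    encoder=lambda x: [(x[0] + x[1]) / 2, (x[2] + x[3]) / 2],
    decoders=[lambda z: [z[0], z[0], z[1], z[1]], lambda y: list(y)],
)
z = model.encode([1.0, 3.0, 2.0, 2.0])  # only z is transmitted to the receiver
levels = model.decode(z)                 # one reconstruction per decoding level
```

Keeping every level's output, rather than only the last one, makes it possible to supervise or inspect the intermediate reconstructions.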

[0019] As shown in Figure 1, when general cascaded decoding units are used, the autoencoder is expressed as:

[0020]

[0021] Here, $E$ denotes the encoding unit; $D_n$ denotes the $n$-th decoding unit; $x$ is the input data of the autoencoder; $z$ is the output of the encoding unit, a low-dimensional representation in the latent space; $N$ is the number of decoding units; $y_{n-1}$ is the output of the decoding units at all leve...
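Because the equation in [0020] is not reproduced here, the following is only an assumed training objective for general cascaded decoding units: every level's output $y_n$ is compared with the original input $x$, and the per-level mean squared errors are summed. The names `mse` and `cascade_loss`, and the equal weighting of levels, are hypothetical.

```python
from typing import List, Sequence

Vector = List[float]


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean squared error between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)


def cascade_loss(x: Vector, outputs: Sequence[Vector]) -> float:
    """Assumed objective: every decoding level y_1..y_N is pulled toward the
    original input x, and the per-level errors are summed with equal weight."""
    return sum(mse(x, y_n) for y_n in outputs)


# Level 1 is off by 1 in each component, level 2 is exact.
loss = cascade_loss([1.0, 3.0], [[2.0, 2.0], [1.0, 3.0]])
```

Supervising every level gives each intermediate decoding unit its own training signal instead of relying only on the error at $y_N$.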

Embodiment 2

[0031] A data reconstruction method based on an autoencoder. The autoencoder includes an encoding unit and cascaded decoding units. The sender uses the encoding unit to encode the original data, and the receiver uses the cascaded decoding units to decode the data and thereby reconstruct it.

[0032] The autoencoder based on cascaded decoding units (Cascade-Decoders) comprises an encoding unit (Encoder) and decoding unit 1 (Decoder 1), decoding unit 2 (Decoder 2), ..., decoding unit N (Decoder N). The N decoding units are cascaded in the autoencoder.

[0033] As shown in Figure 2, when the autoencoder adopts residual cascaded decoding units, it is expressed as:

[0034]

[0035] Here, $E$ denotes the encoding unit; $D_n$ denotes the $n$-th decoding unit; $x$ is the input data of the autoencoder; $z$ is the output of the encoding unit, a low-dimensional representation in the latent space; $N$ is the number of decoding units; $y_{n-1}$ is the output of decoding...
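For the residual variant, one common reading of "residual" is that each later decoding unit predicts a correction that is added to the previous output, i.e. $y_n = y_{n-1} + D_n(y_{n-1})$ for $n \ge 2$, with $y_1 = D_1(z)$ because $z$ lives in the lower-dimensional latent space. That formula is an assumption, since the equation in [0034] is not reproduced here; the function name `residual_cascade_decode` and the toy units are hypothetical.

```python
from typing import Callable, List, Sequence

Vector = List[float]
Unit = Callable[[Vector], Vector]


def residual_cascade_decode(z: Vector, decoders: Sequence[Unit]) -> List[Vector]:
    """Assumed residual cascade: D_1 maps the latent code z to data space, and
    every later unit D_n only predicts a correction added to y_{n-1}."""
    if not decoders:
        raise ValueError("at least one decoding unit is required")
    y = decoders[0](z)  # y_1 = D_1(z)
    outputs = [y]
    for decoder in decoders[1:]:
        correction = decoder(y)  # D_n(y_{n-1})
        if len(correction) != len(y):
            raise ValueError("residual units must preserve the data shape")
        y = [a + b for a, b in zip(y, correction)]  # y_n = y_{n-1} + D_n(y_{n-1})
        outputs.append(y)
    return outputs


levels = residual_cascade_decode(
    [2.0, 2.0],
    [
        lambda z: [z[0], z[0], z[1], z[1]],  # D_1: latent -> data space (toy upsampling)
        lambda y: [-1.0, 1.0, 0.0, 0.0],     # D_2: toy fixed correction
    ],
)
```

With this form, a unit that has nothing to add can learn to output zeros and pass the previous reconstruction through unchanged.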

Embodiment 3

[0045] A data reconstruction method based on an autoencoder. The autoencoder includes an encoding unit and cascaded decoding units. The sender uses the encoding unit to encode the original data, and the receiver uses the cascaded decoding units to decode the data and thereby reconstruct it.

[0046] The autoencoder based on cascaded decoding units (Cascade-Decoders) comprises an encoding unit (Encoder) and decoding unit 1 (Decoder 1), decoding unit 2 (Decoder 2), ..., decoding unit N (Decoder N). The N decoding units are cascaded in the autoencoder.

[0047] As shown in Figure 4, when the autoencoder adopts adversarial cascaded decoding units, it is expressed as:

[0048]

[0049] Here, $E$ denotes the encoding unit; $D_n$ denotes the $n$-th decoding unit; $x$ is the input data of the autoencoder; $z$ is the output of the encoding unit, a low-dimensional representation in the latent space; $N$ is the number of decoding units; $y_{n-1}$ is the output of the...
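For the adversarial variant, a plausible sketch pairs each decoding level with its own discriminator. The code below assumes the common non-saturating GAN formulation: the decoders minimise reconstruction error plus `adv_weight` times $-\log P_{\text{real}}(y_n)$, and each discriminator minimises binary cross-entropy with real data labelled 1 and reconstructions labelled 0. The per-level pairing, the weight `adv_weight`, and all names are assumptions, not details taken from the equation in [0048].

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Discriminator = Callable[[Vector], float]  # hypothetical: returns P(input is real) in (0, 1)


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean squared error between two equal-length vectors."""
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)


def adversarial_cascade_losses(
    x: Vector,
    outputs: Sequence[Vector],
    discriminators: Sequence[Discriminator],
    adv_weight: float = 0.01,
    eps: float = 1e-12,
) -> Tuple[float, float]:
    """Assumed objective with one discriminator per decoding level.
    Returns (decoder_loss, discriminator_loss), each summed over levels."""
    if len(outputs) != len(discriminators):
        raise ValueError("need one discriminator per decoding level")
    dec_loss = 0.0
    disc_loss = 0.0
    for y_n, disc in zip(outputs, discriminators):
        p_real = disc(x)    # score for the original data
        p_fake = disc(y_n)  # score for the level-n reconstruction
        # Decoders: stay close to x and try to make y_n look real to disc.
        dec_loss += mse(x, y_n) - adv_weight * math.log(p_fake + eps)
        # Discriminator: binary cross-entropy, real -> 1, reconstruction -> 0.
        disc_loss += -math.log(p_real + eps) - math.log(1.0 - p_fake + eps)
    return dec_loss, disc_loss


# A perfect reconstruction judged by a discriminator that cannot tell real from fake.
dec, disc = adversarial_cascade_losses([1.0, 3.0], [[1.0, 3.0]], [lambda v: 0.5])
```

In training, the two losses would be minimised alternately by separate optimisers; this sketch only evaluates them.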
