Method for converting infrared video into visible light video in unmanned driving

A video conversion technique for unmanned driving, applied in the field of video conversion. It addresses problems such as the small amount of available data, the large inter-domain gap, and the retention of infrared image information, and it achieves the effects of increasing the mutual information distance, optimizing detail generation, and alleviating style drift.


Embodiment

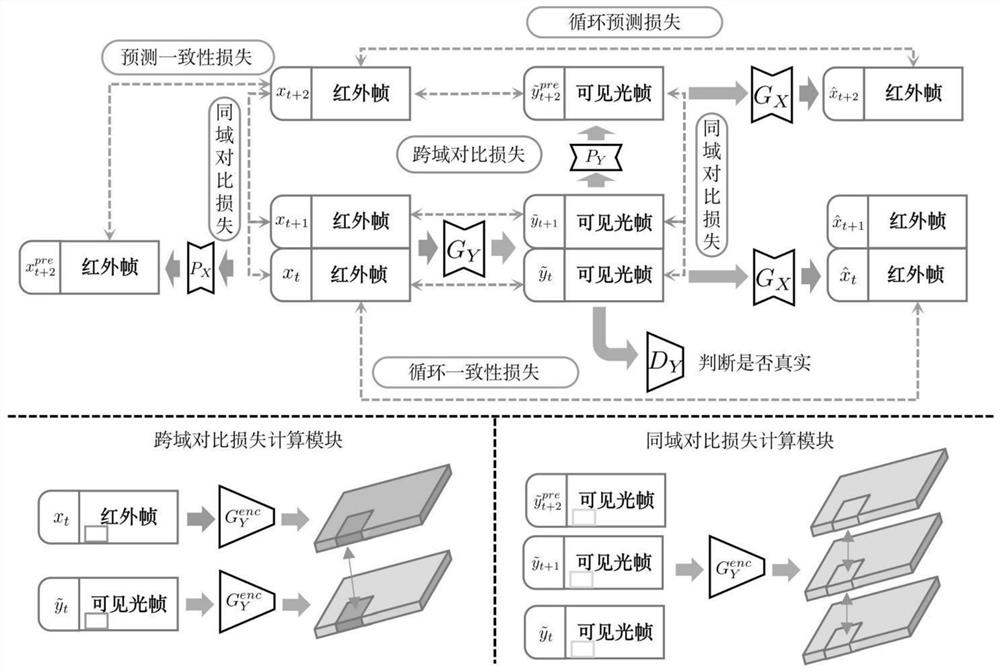

[0064] For ease of understanding, this embodiment considers two video domains: a source domain $X = \{x\}$ and a target domain $Y = \{y\}$. A video sequence $x$ in the source domain is denoted as a sequence of consecutive video frames $\{x_0, x_1, \ldots, x_t\}$, for which an abbreviated notation is also used. Similarly, a video sequence $y$ in the target domain is denoted as a sequence of consecutive video frames $\{y_0, y_1, \ldots, y_s\}$, likewise with an abbreviated notation. The $t$-th frame of sequence $x$ is denoted $x_t$, and the $s$-th frame of sequence $y$ is denoted $y_s$. The goal of the method described in this embodiment is to learn two different mappings between the source domain and the target domain, so that, given a video from either domain, the corresponding video in the other domain can be generated. For example, given an infrared video, the model can generate a visible-light video of the corresponding scene through the mapping.

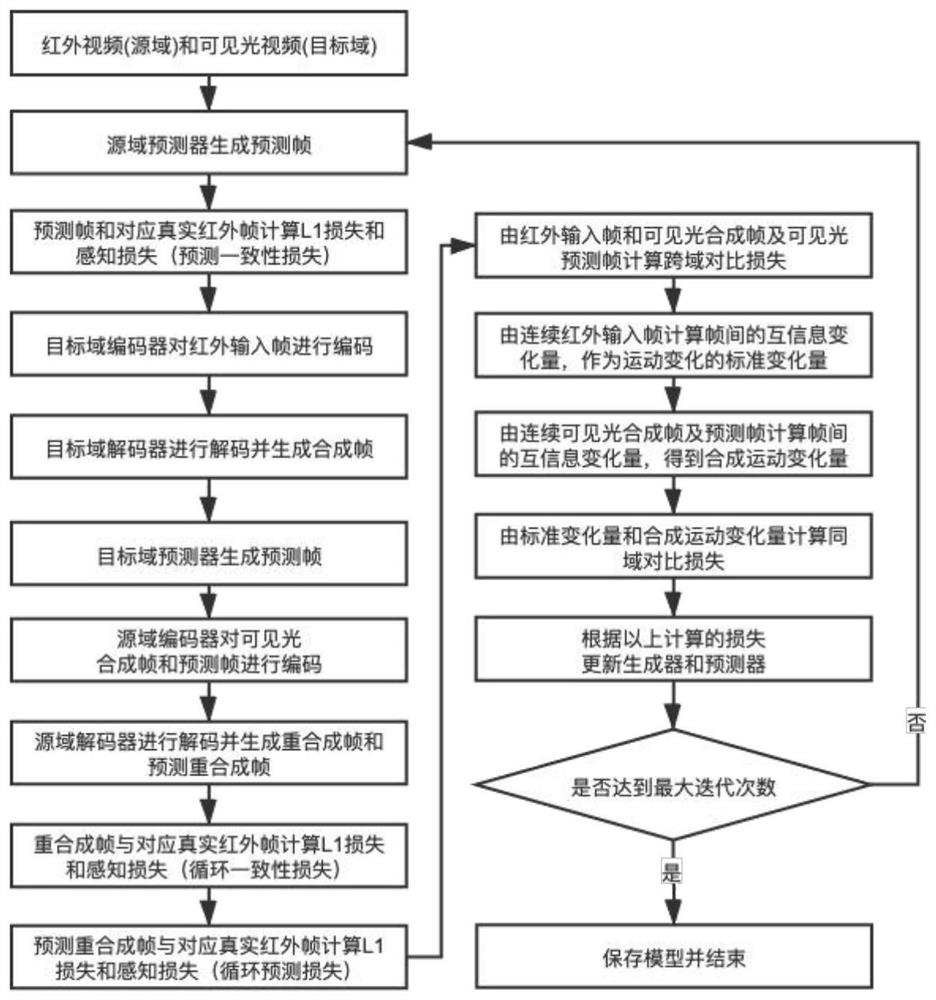

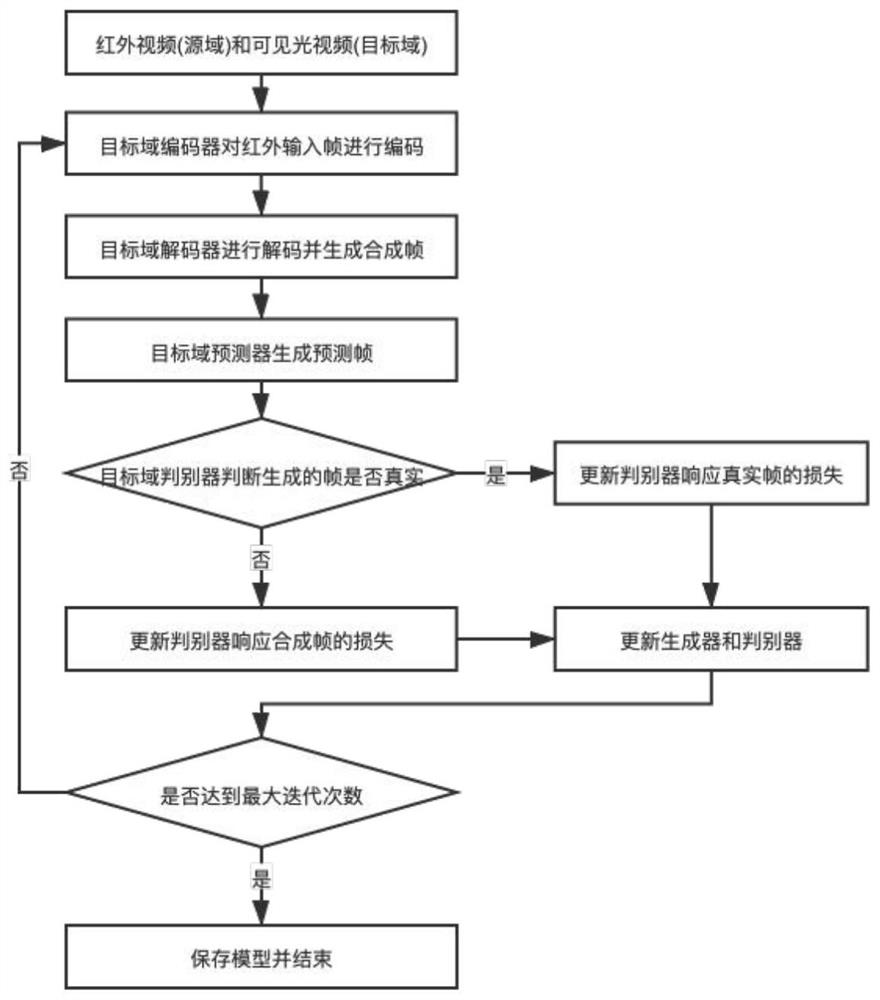
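To make the setup in paragraph [0064] concrete, the following is a minimal sketch of the two-domain interface only. It assumes a video is stored as a NumPy array of shape (frames, height, width, channels), with one channel for infrared and three for visible light. The functions `map_x_to_y` and `map_y_to_x` are hypothetical placeholders for the two learned mappings; they are fixed formulas chosen for illustration and are not the model described in the patent.

```python
import numpy as np

# Assumption: a video is an array of shape (frames, height, width, channels).
# The two functions below are hypothetical stand-ins for the learned mappings;
# a real system would use trained networks instead of these fixed formulas.

def map_x_to_y(x: np.ndarray) -> np.ndarray:
    """Placeholder source-to-target mapping: 1-channel frames -> 3-channel frames."""
    return np.repeat(x, 3, axis=-1)

def map_y_to_x(y: np.ndarray) -> np.ndarray:
    """Placeholder target-to-source mapping: 3-channel frames -> 1-channel frames."""
    return y.mean(axis=-1, keepdims=True)

# Source-domain sequence x = {x_0, ..., x_t}: here 4 frames of 8x8 pixels.
x = np.random.default_rng(0).random((4, 8, 8, 1))

y_hat = map_x_to_y(x)      # generated target-domain sequence, one frame per input frame
x_back = map_y_to_x(y_hat) # the same sequence mapped back to the source domain

print(y_hat.shape, x_back.shape)  # (4, 8, 8, 3) (4, 8, 8, 1)
```

The point of the sketch is the shape of the problem: each mapping takes a whole frame sequence from one domain and returns a sequence of the same length in the other domain. How the mappings are trained is not covered by this paragraph.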

[0065] The method described in this embodiment first builds a model of video style transf...
